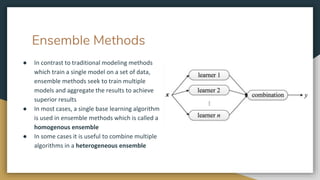

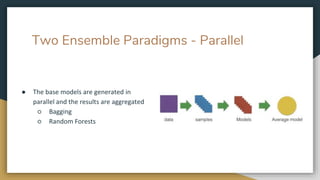

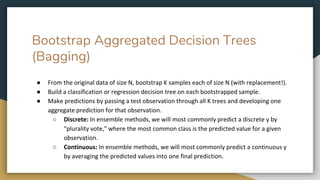

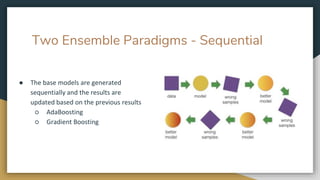

Ensemble methods improve predictive accuracy by combining multiple models, either in parallel (bagging, random forests) or sequentially (AdaBoost, gradient boosting). They help mitigate three limitations of a single model: too little data to single out one good hypothesis in the hypothesis space, a search procedure that can settle on a suboptimal hypothesis, and a representation that may not contain the true function at all. Aggregating the predictions of several models can reduce variance and better approximate the true underlying function.
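The parallel (bagging) idea can be illustrated with a minimal sketch using only the Python standard library. Everything here is an assumption made for illustration: the target function `true_fn`, the noise level, the choice of one-split regression stumps as base models, and the helper names `fit_stump` and `mse`. It is not a reference implementation of any particular library.

```python
import math
import random

def true_fn(x):
    # Hypothetical "true underlying function" for this sketch.
    return math.sin(x)

def fit_stump(xs, ys):
    """Fit a one-split regression stump by minimizing squared error."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, t, lm, rm = best
    return lambda x: lm if x < t else rm

def mse(model, xs, ys):
    """Mean squared error of a model on (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(0)  # fixed seed so the run is repeatable

# Noisy training data drawn from the assumed true function.
n = 60
train_x = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
train_y = [true_fn(x) + rng.gauss(0.0, 0.3) for x in train_x]

# Noise-free test grid, to measure distance from the true function.
test_x = [2.0 * math.pi * i / 199 for i in range(200)]
test_y = [true_fn(x) for x in test_x]

# Bagging: train each stump on a bootstrap resample (sampling with replacement).
models = []
for _ in range(25):
    idx = [rng.randrange(n) for _ in range(n)]
    models.append(fit_stump([train_x[i] for i in idx],
                            [train_y[i] for i in idx]))

def ensemble(x):
    # Aggregate by averaging the individual predictions.
    return sum(m(x) for m in models) / len(models)

single_mses = [mse(m, test_x, test_y) for m in models]
mean_single_mse = sum(single_mses) / len(single_mses)
ensemble_mse = mse(ensemble, test_x, test_y)

print(f"mean single-stump MSE: {mean_single_mse:.4f}")
print(f"bagged ensemble MSE:   {ensemble_mse:.4f}")
```

Because squared error is convex, the error of the averaged prediction can never exceed the average error of the individual models. How much lower it ends up depends on how much the base models disagree with one another, which is what bootstrap resampling is meant to encourage.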