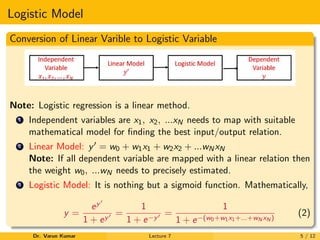

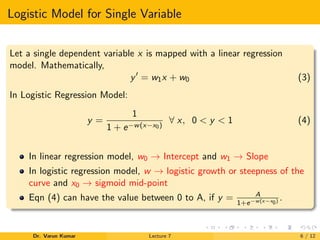

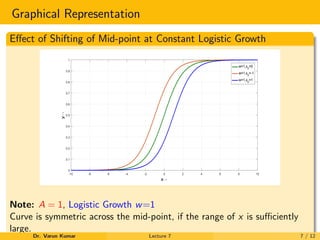

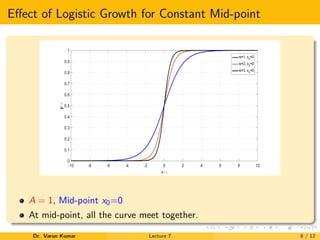

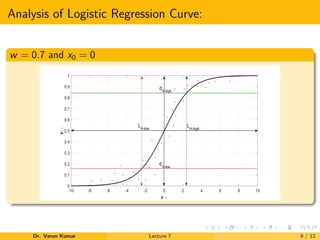

This document introduces logistic regression. It outlines the method's key features, chiefly the use of a logistic function to model a binary dependent variable that takes the value 0 or 1. Logistic regression is a linear method: it forms a linear combination of the inputs and then applies the logistic function to transform that value into a prediction between 0 and 1. The document discusses applications in machine learning, medical science, social science, and industry. It also details the logistic regression model itself, including how a sigmoid function converts a linear variable into a logistic variable, and how varying the logistic growth rate and midpoint parameters changes the shape and position of the logistic regression curve.
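The sigmoid conversion and the effect of the growth and midpoint parameters can be illustrated with a minimal sketch. It assumes the common parameterization f(x) = 1 / (1 + e^(-k(x - x0))), where `k` is the growth rate and `x0` is the midpoint; the function names `logistic` and `predict`, the 0.5 threshold, and the sample inputs are illustrative choices, not taken from the document.

```python
import math


def logistic(x, k=1.0, x0=0.0):
    """Map a linear value x to (0, 1) with growth rate k and midpoint x0.

    Assumed form: 1 / (1 + exp(-k * (x - x0))). This is equivalent to a
    linear predictor w*x + b with w = k and b = -k * x0.
    """
    return 1.0 / (1.0 + math.exp(-k * (x - x0)))


def predict(x, k=1.0, x0=0.0, threshold=0.5):
    """Turn the logistic output into a binary label (0 or 1).

    The 0.5 threshold is an assumption made for this illustration.
    """
    return 1 if logistic(x, k, x0) >= threshold else 0


if __name__ == "__main__":
    xs = (-2.0, 0.0, 2.0)

    # Larger k makes the curve steeper around the midpoint.
    for k in (0.5, 1.0, 5.0):
        print("k =", k, [round(logistic(x, k=k), 3) for x in xs])

    # Changing x0 shifts the curve left or right without changing its shape.
    for x0 in (-1.0, 0.0, 1.0):
        print("x0 =", x0, [round(logistic(x, x0=x0), 3) for x in xs])
```

At the midpoint (`x == x0`) the output is always 0.5 regardless of `k`, which is why the midpoint acts as the decision boundary when the threshold is 0.5. For very large negative values of `k * (x - x0)`, `math.exp` can overflow, so a production implementation would typically use a numerically stable variant.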