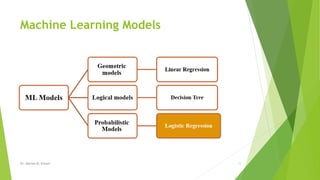

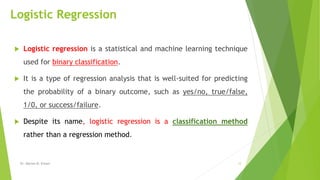

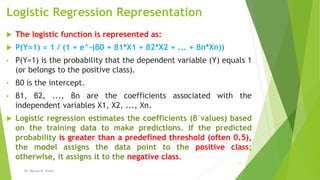

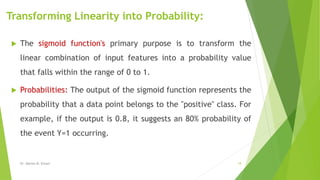

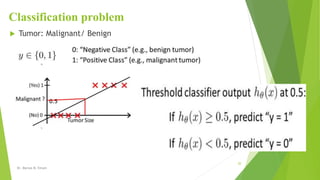

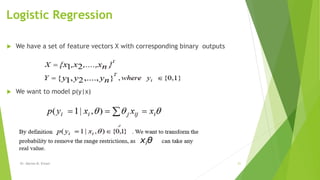

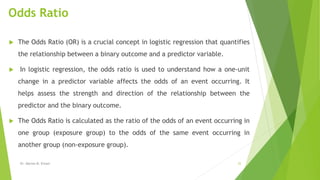

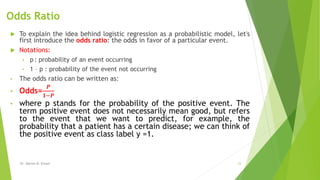

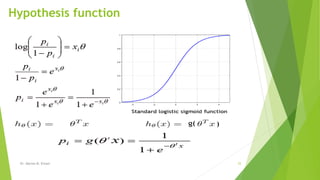

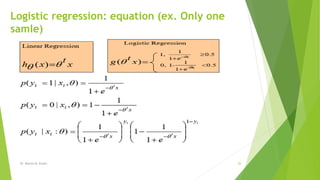

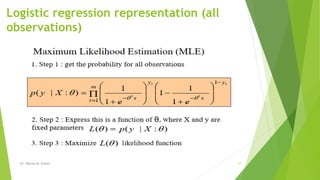

This document introduces logistic regression, a statistical technique for binary classification problems, where the target variable is categorical with two possible classes. It explains how the logistic function is used to estimate class probabilities and outlines how a predictive model is built from training data. It also highlights the role of the odds ratio and of evaluation metrics in assessing classification models.
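
To make the link between the logistic function, estimated probabilities, and the odds ratio concrete, here is a minimal sketch in plain Python. It assumes a model with a single feature; the names `sigmoid`, `predict_proba`, and `odds`, and the values of `INTERCEPT` and `COEF`, are hypothetical and chosen only for illustration. They are not taken from the slides.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number to a value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, intercept, coef):
    """Estimated probability of the positive class for one feature value x."""
    return sigmoid(intercept + coef * x)


def odds(p):
    """Odds of the positive class: p / (1 - p)."""
    return p / (1.0 - p)


# Hypothetical coefficients, assumed for illustration only.
INTERCEPT = -1.0
COEF = 0.5

# Probabilities at two feature values that differ by one unit.
p_low = predict_proba(2.0, INTERCEPT, COEF)   # linear score z = 0.0
p_high = predict_proba(3.0, INTERCEPT, COEF)  # linear score z = 0.5

# The odds ratio for a one-unit increase in x equals exp(COEF).
odds_ratio = odds(p_high) / odds(p_low)

print(f"p(x=2) = {p_low:.3f}, p(x=3) = {p_high:.3f}")
print(f"odds ratio = {odds_ratio:.3f}, exp(COEF) = {math.exp(COEF):.3f}")
```

In this sketch the probability changes nonlinearly with the feature, while the odds ratio for a one-unit increase stays constant at `exp(COEF)`, which is why coefficients in logistic regression are often interpreted through odds ratios. In practice the coefficients would be estimated from training data rather than fixed by hand.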