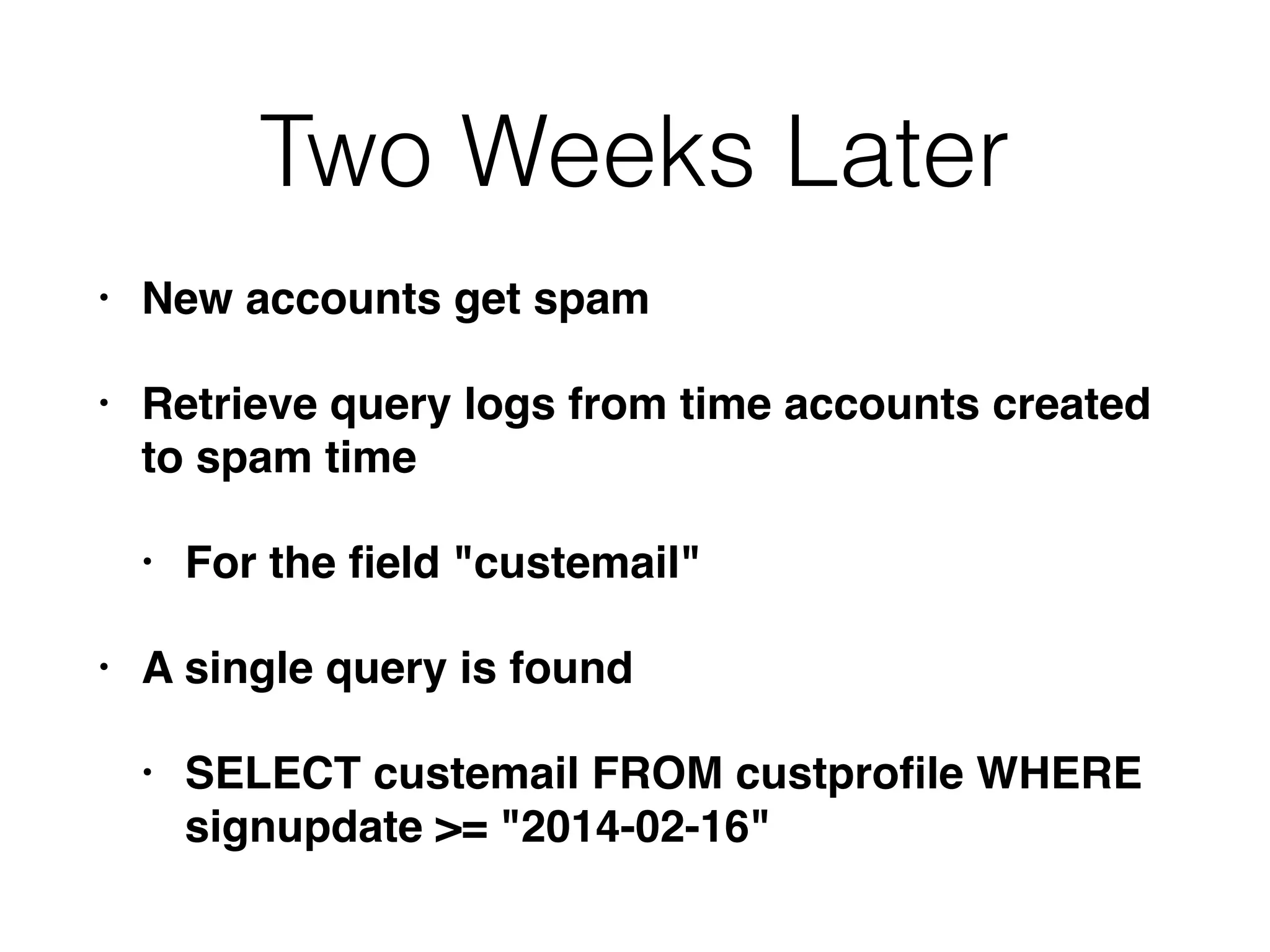

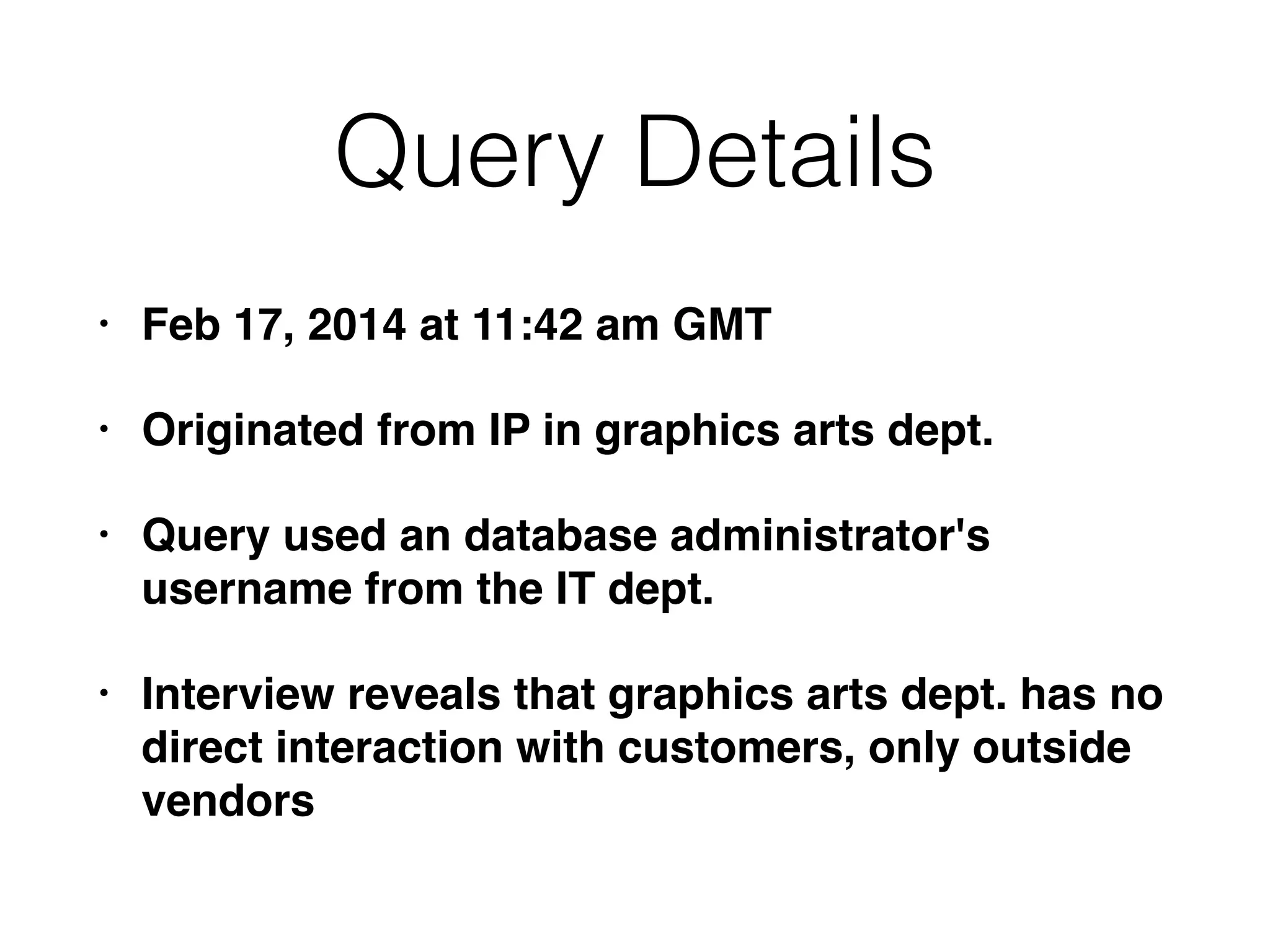

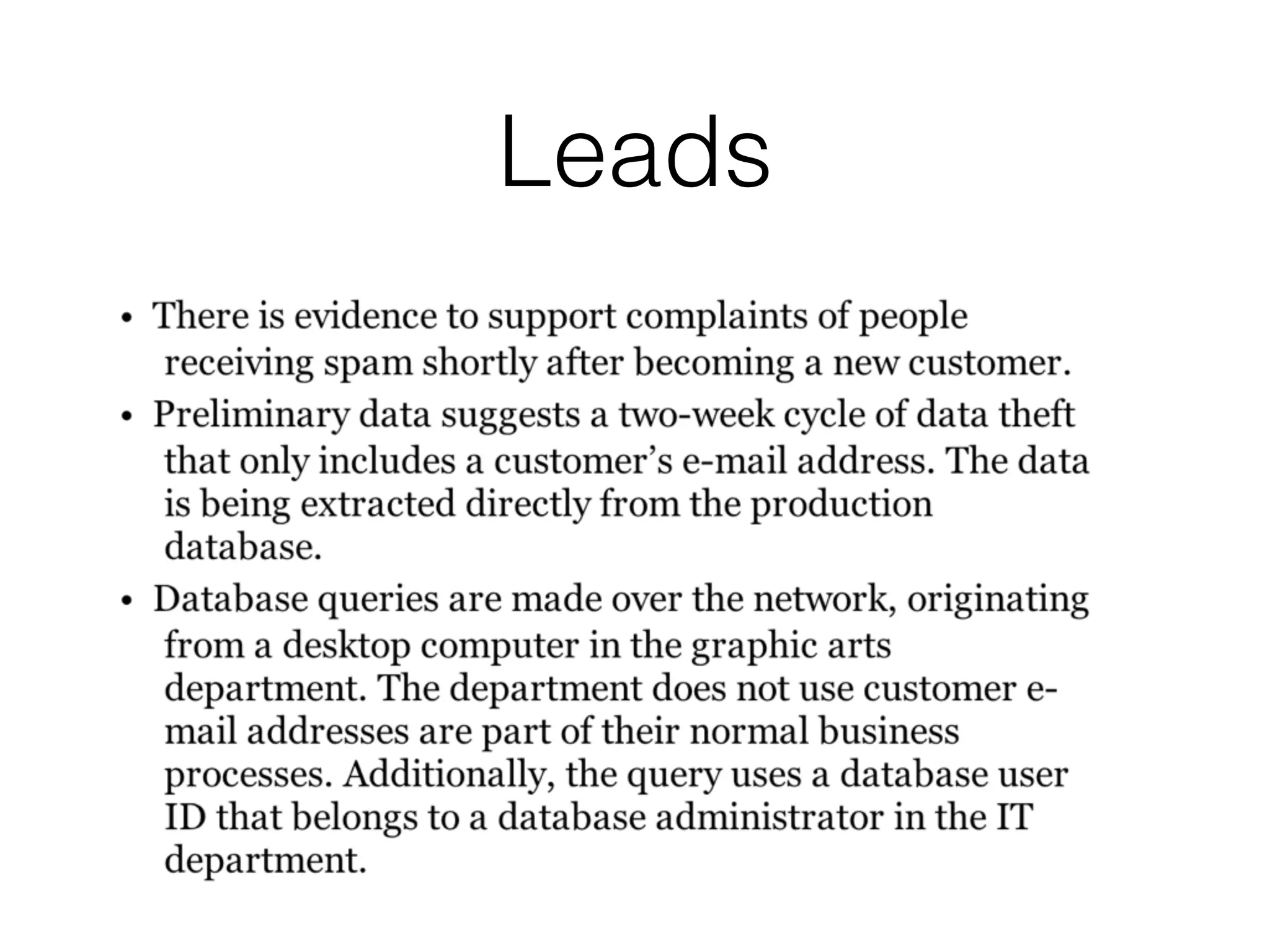

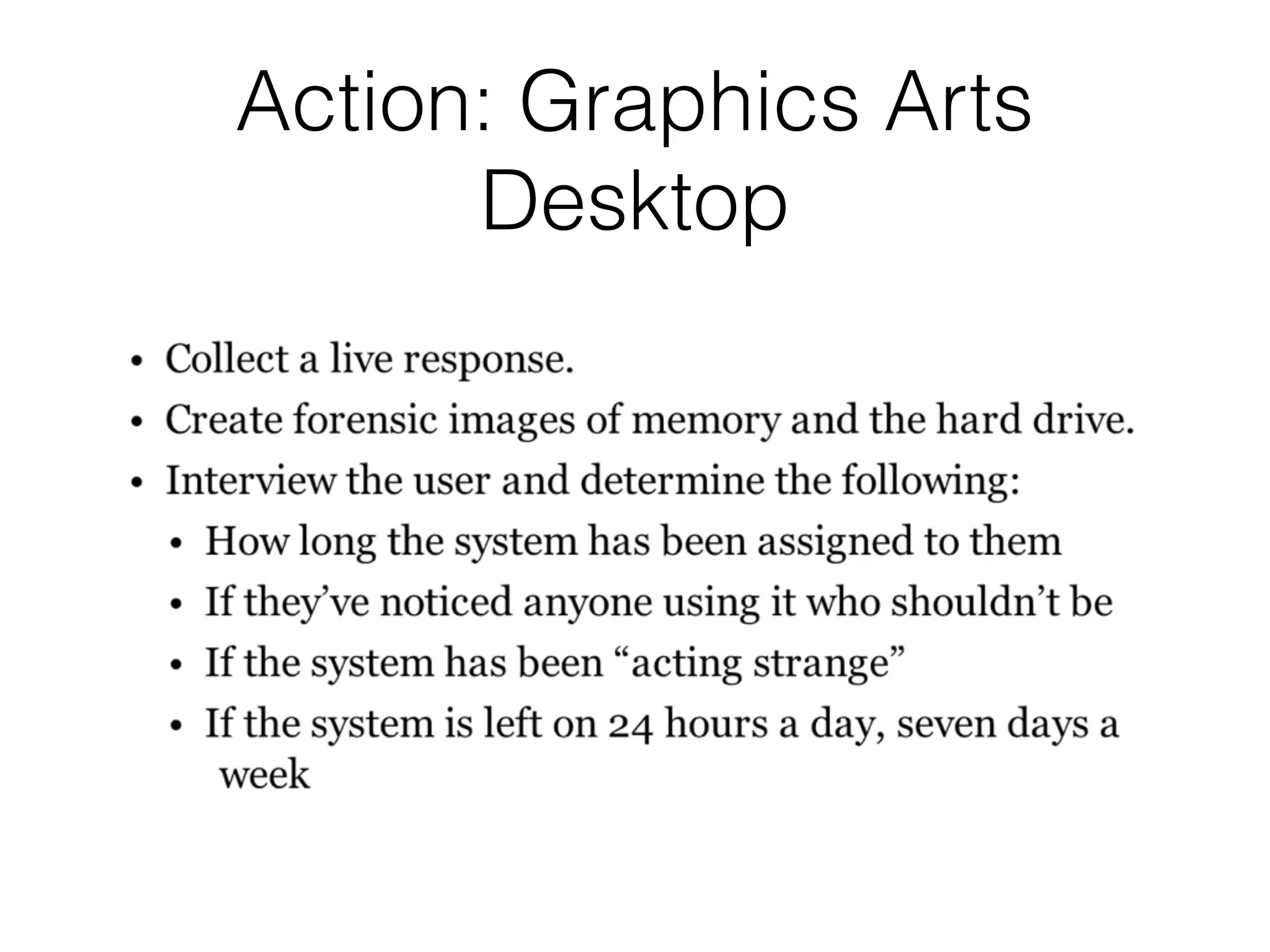

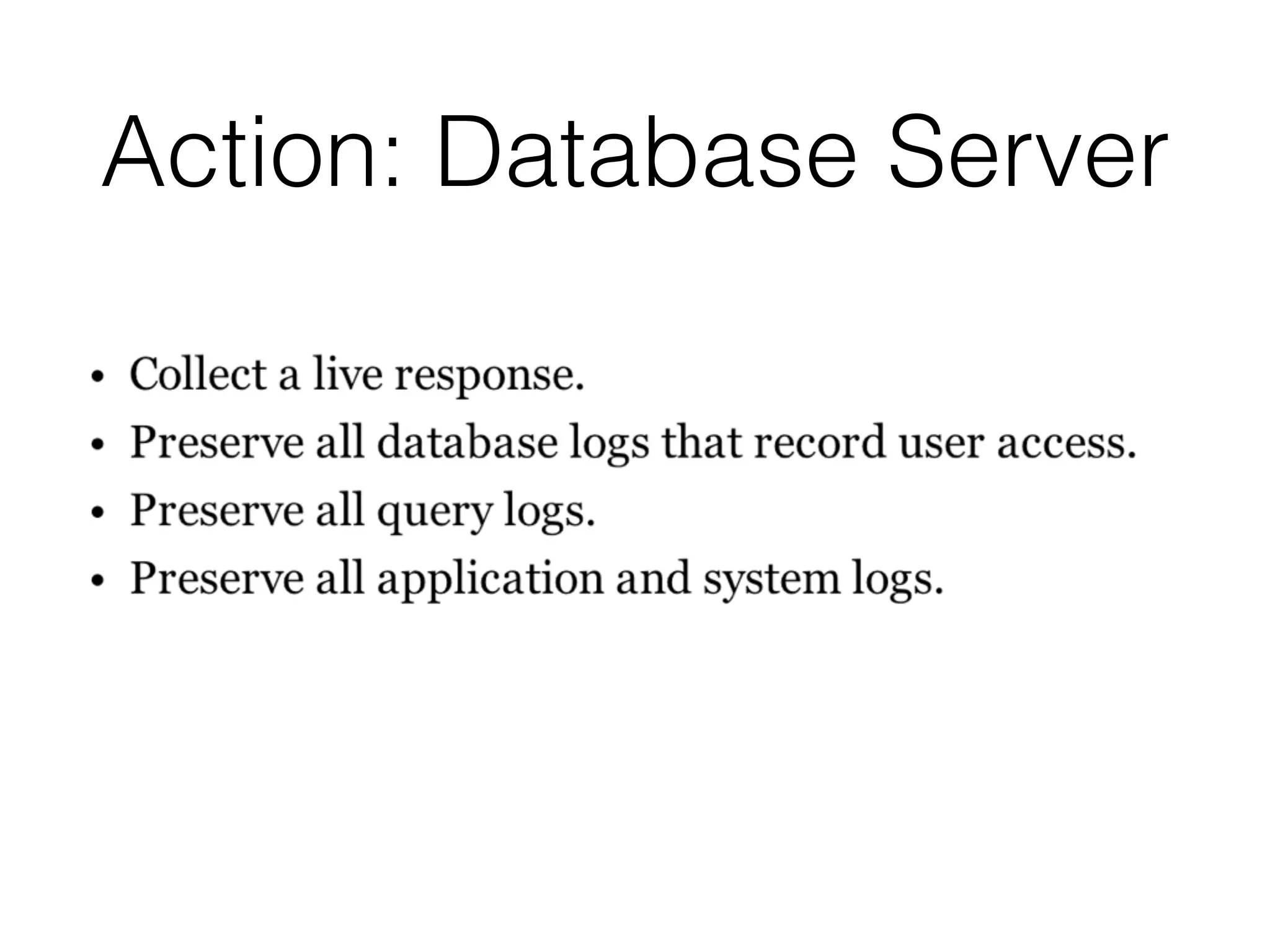

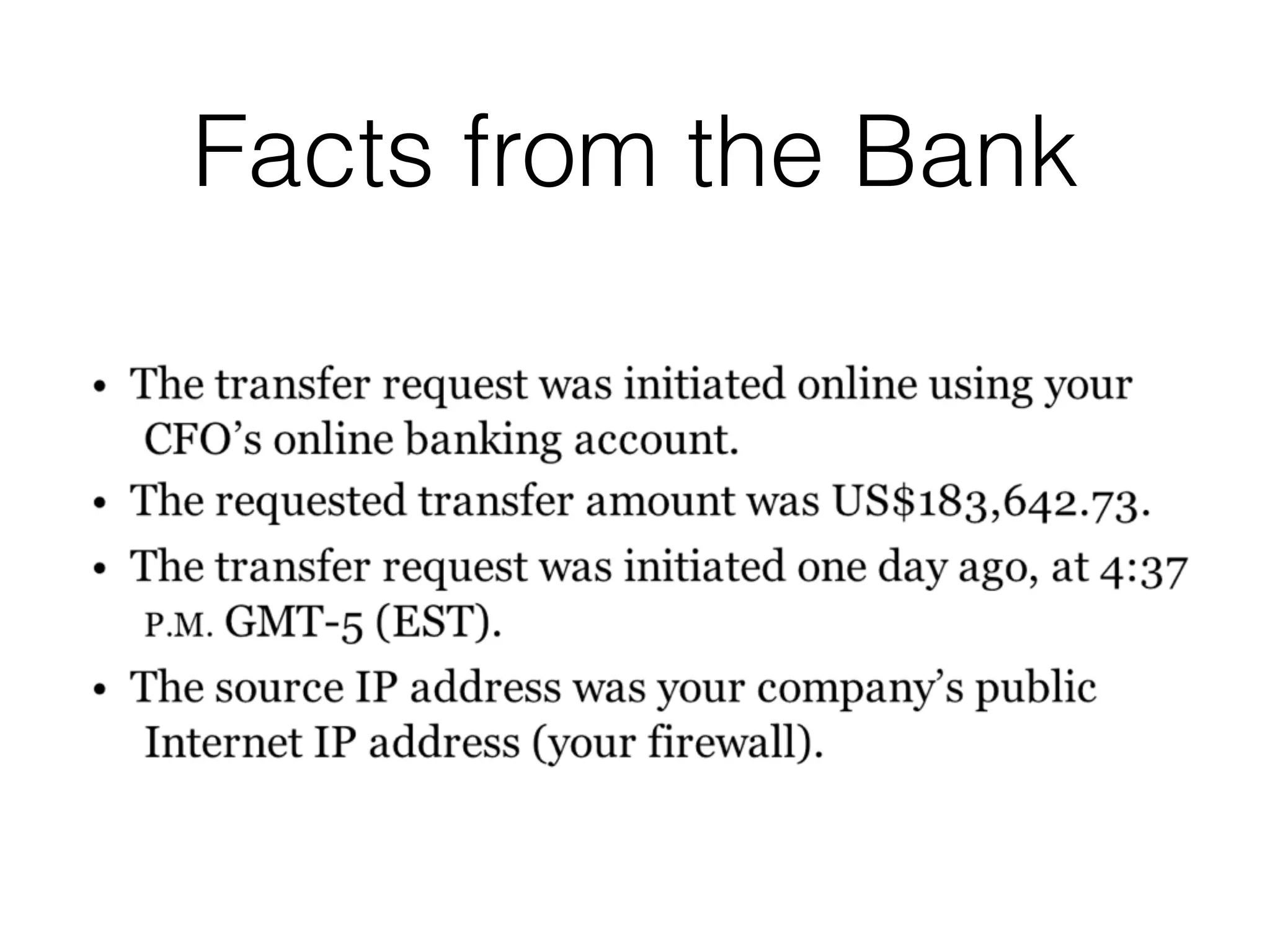

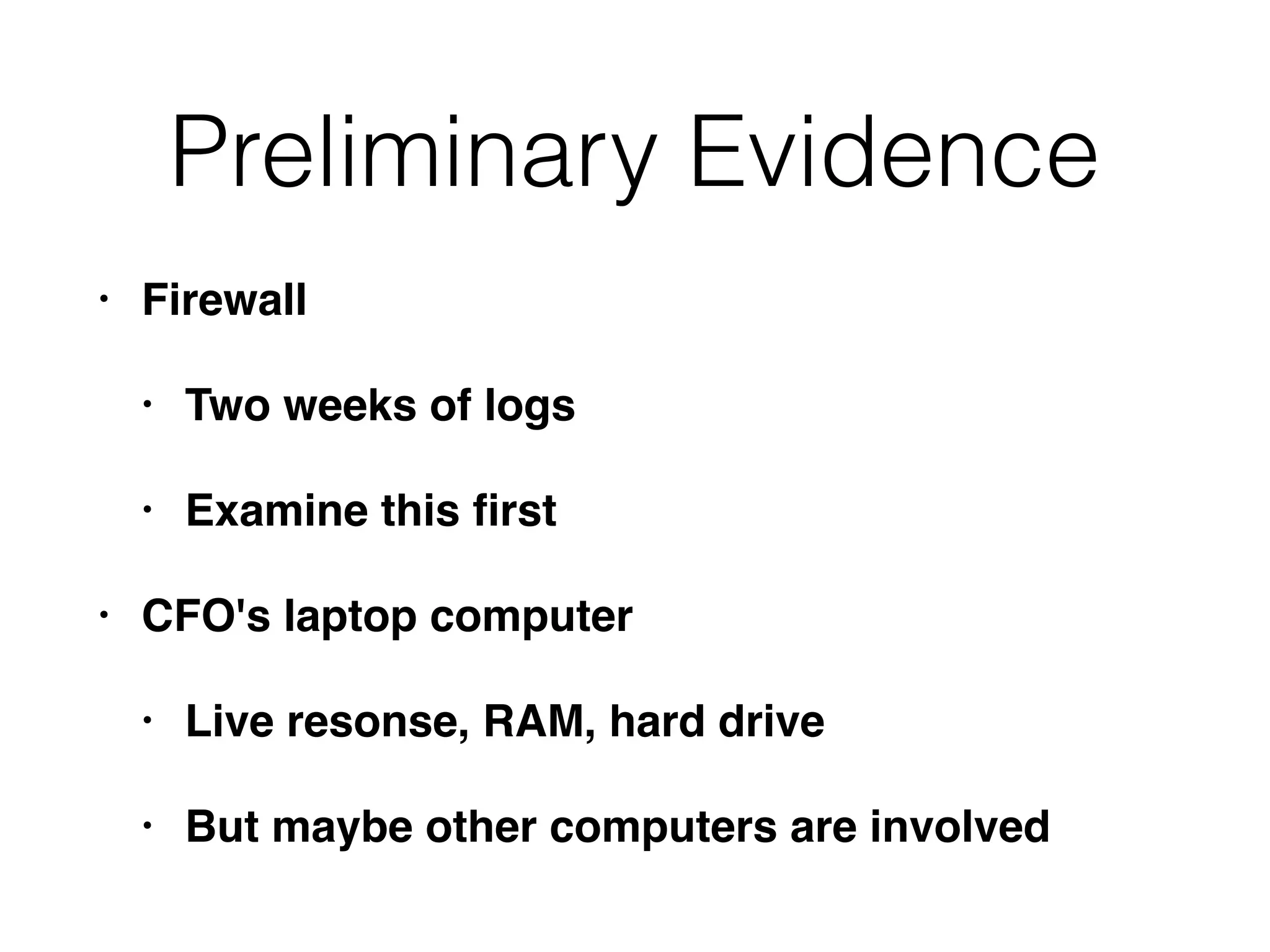

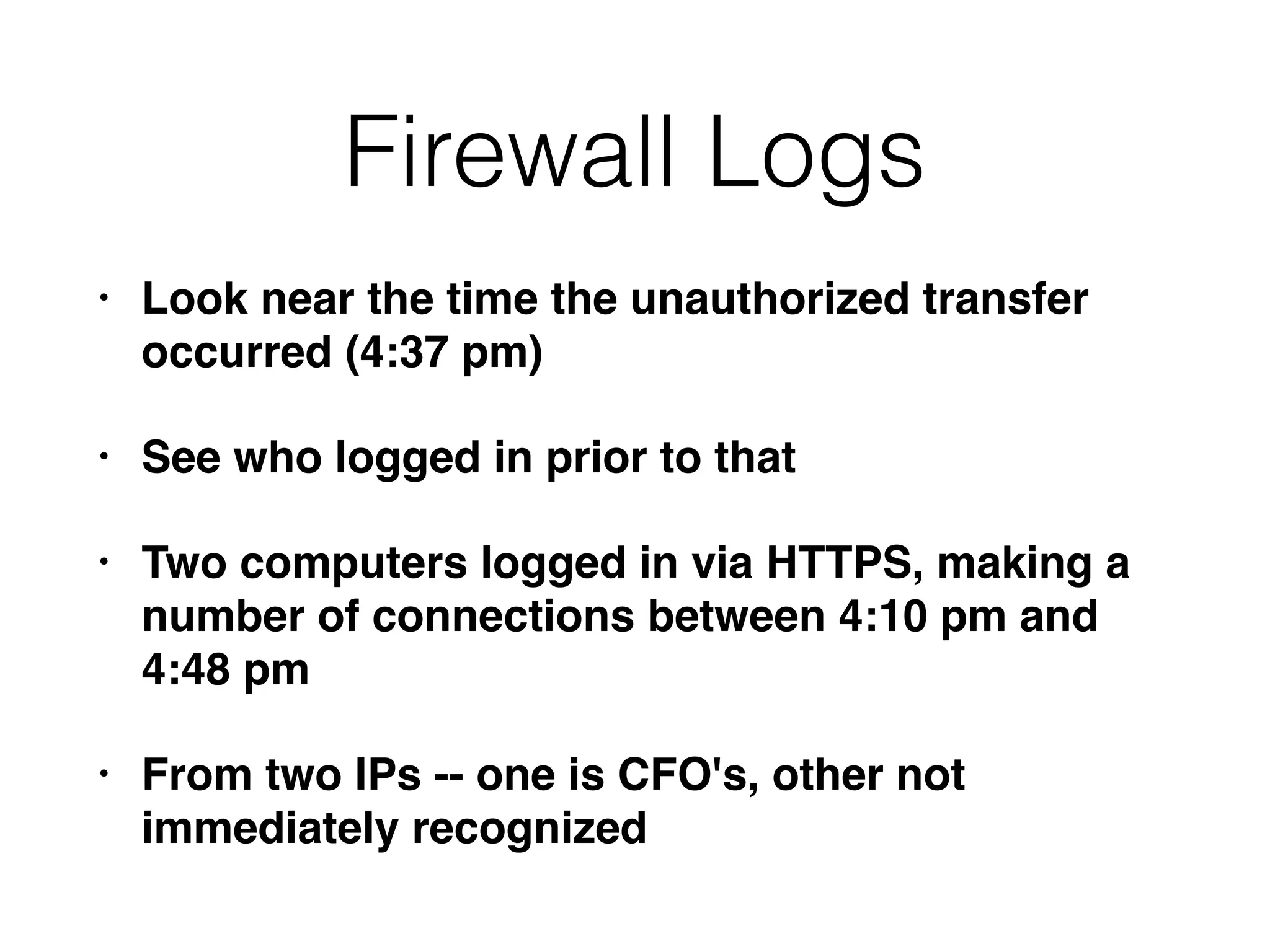

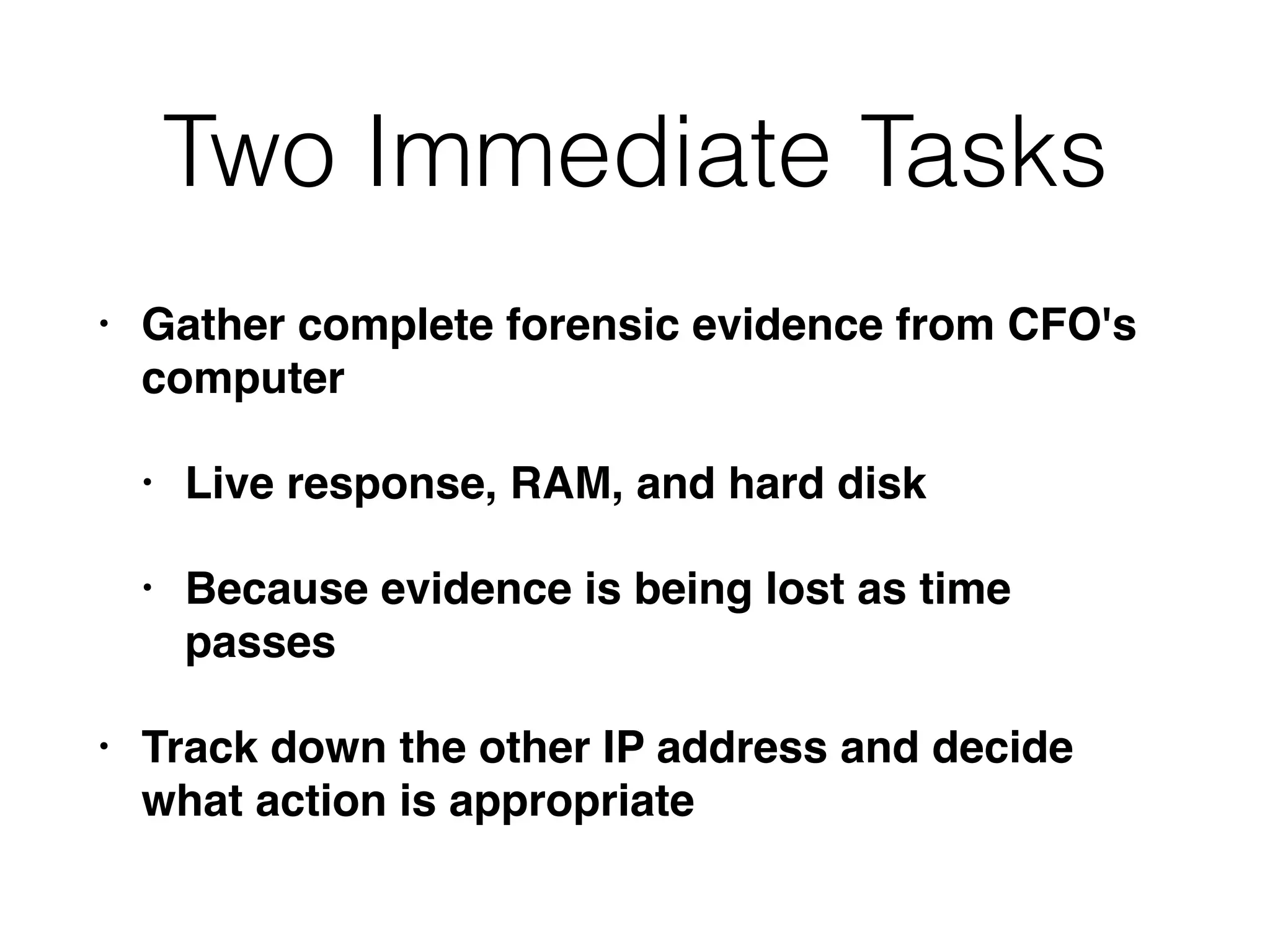

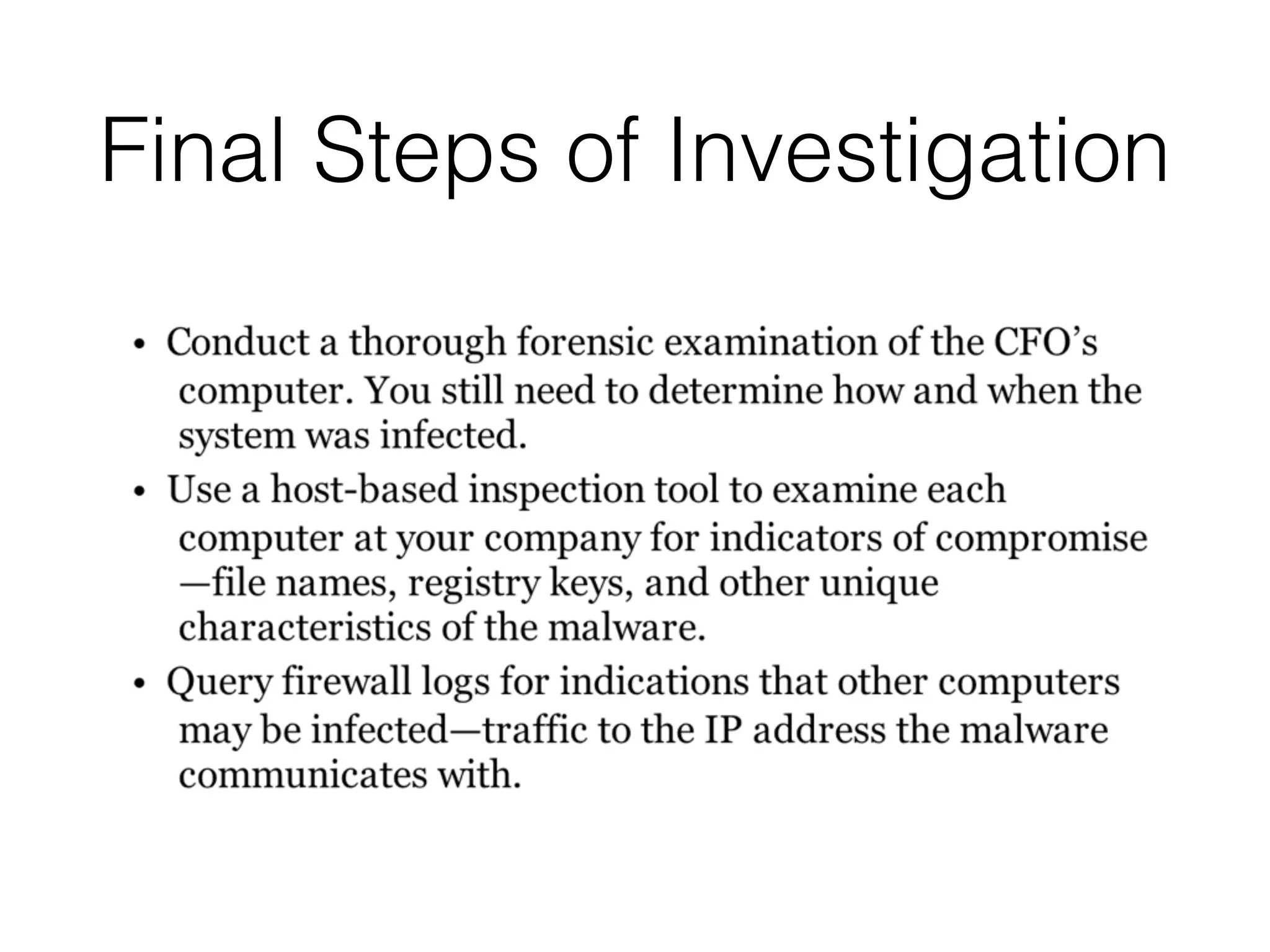

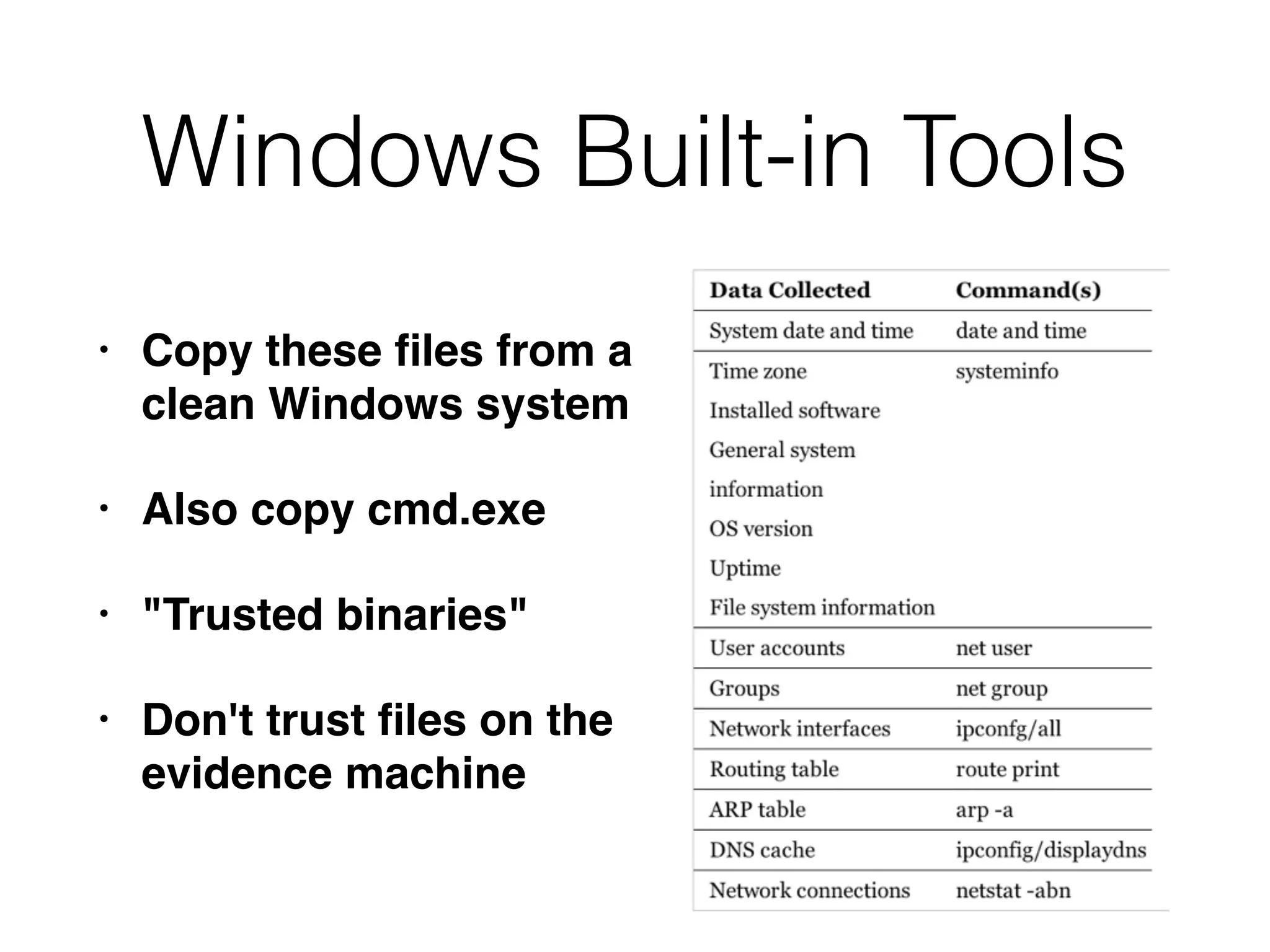

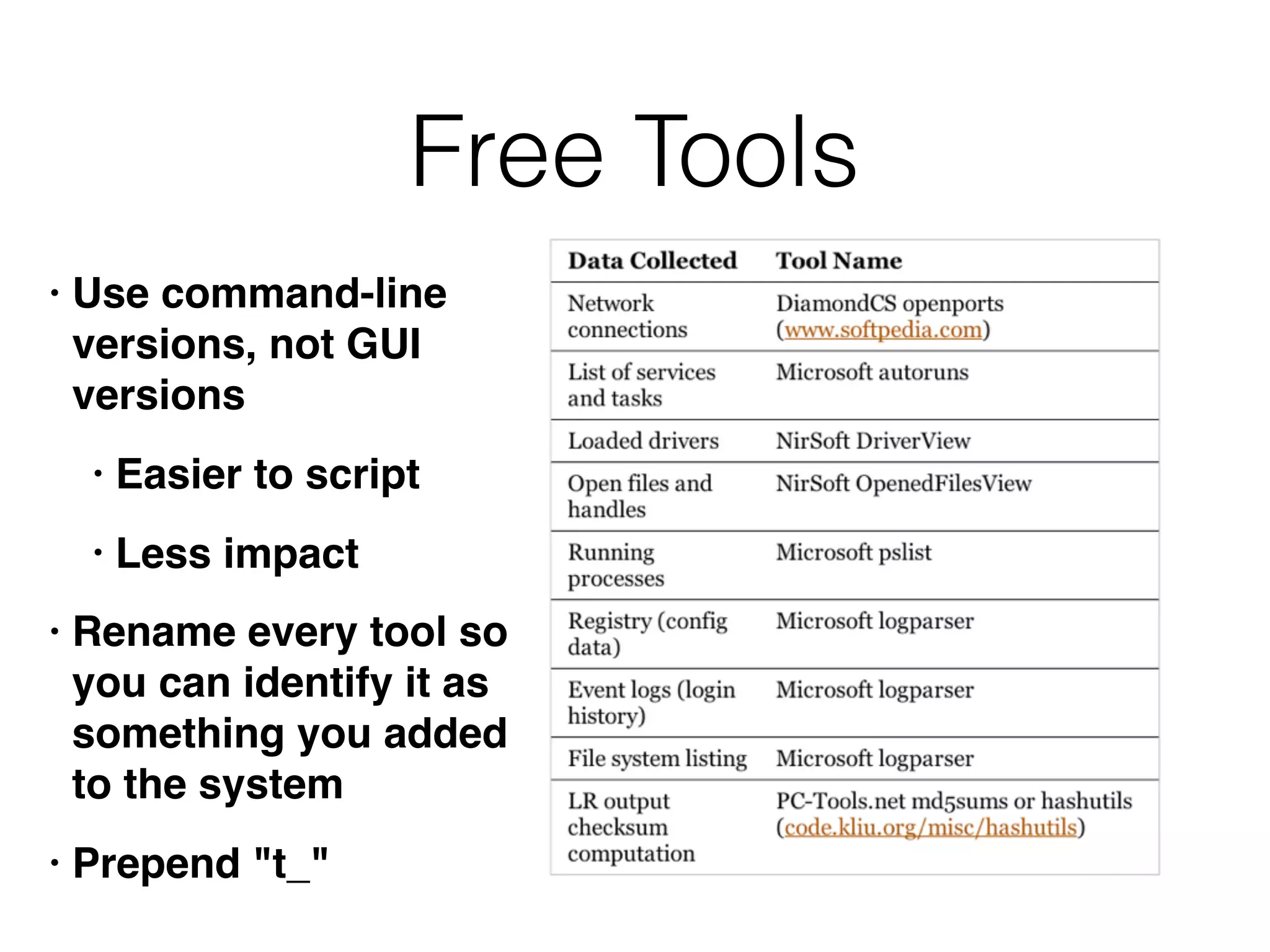

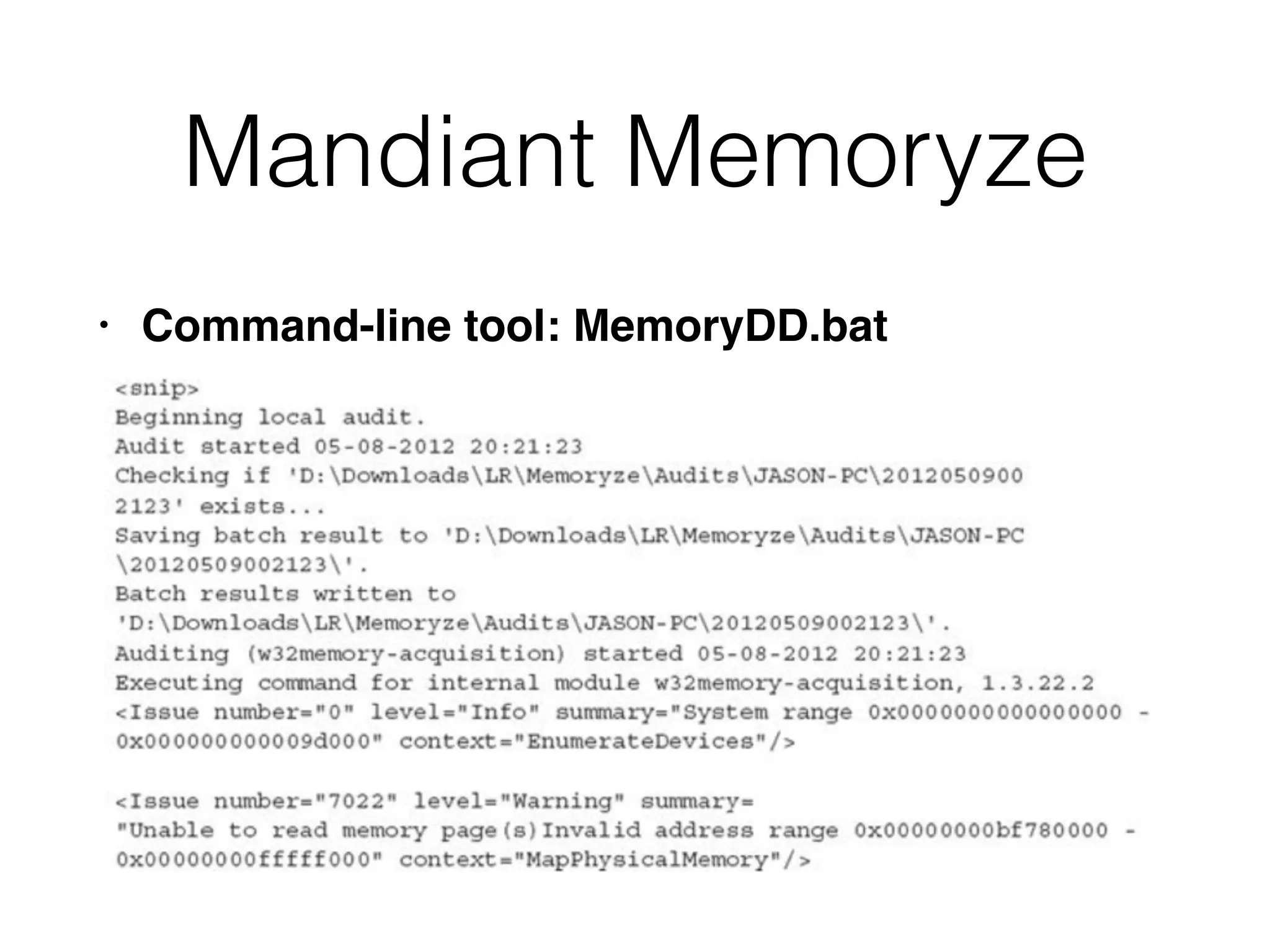

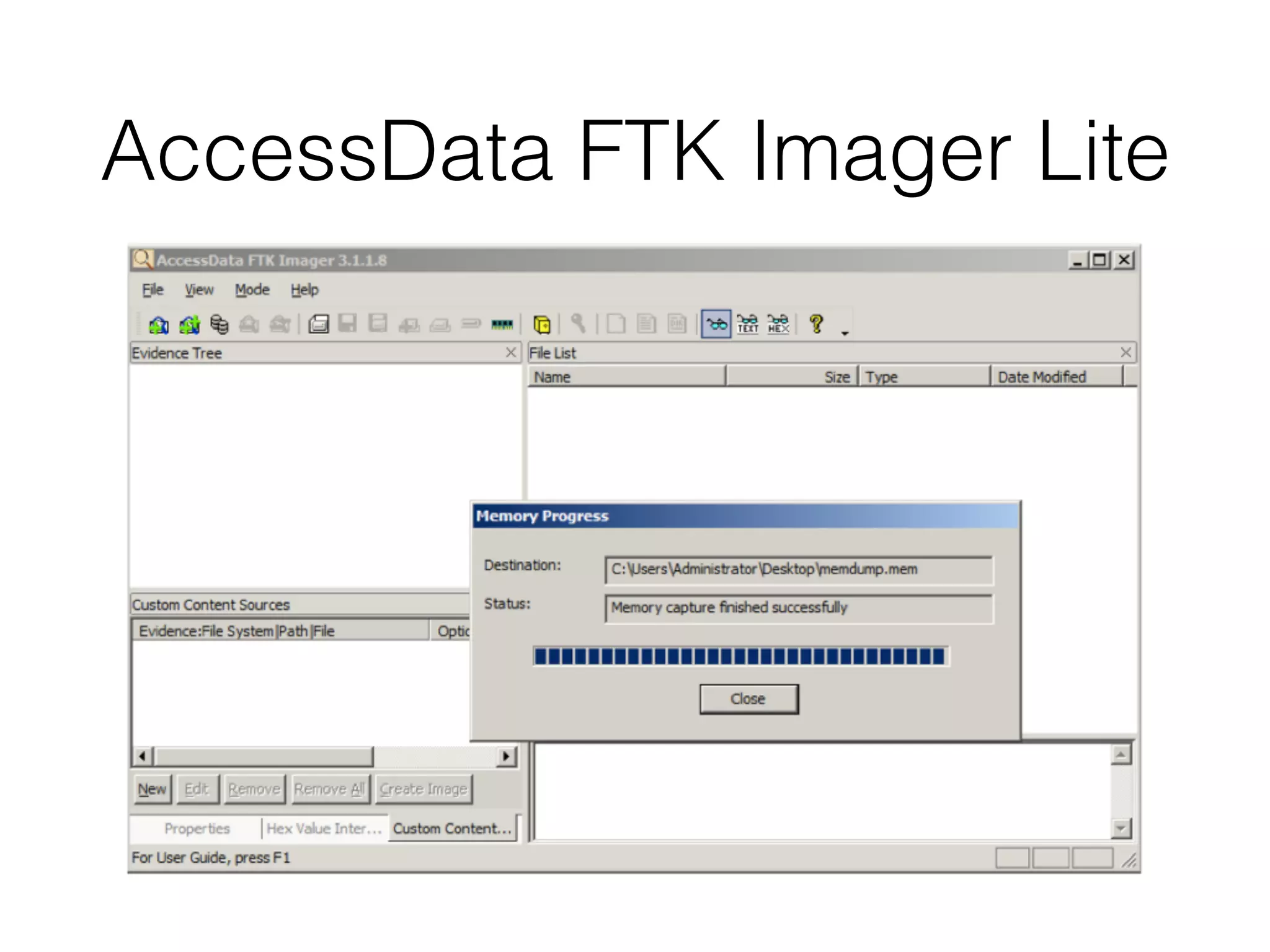

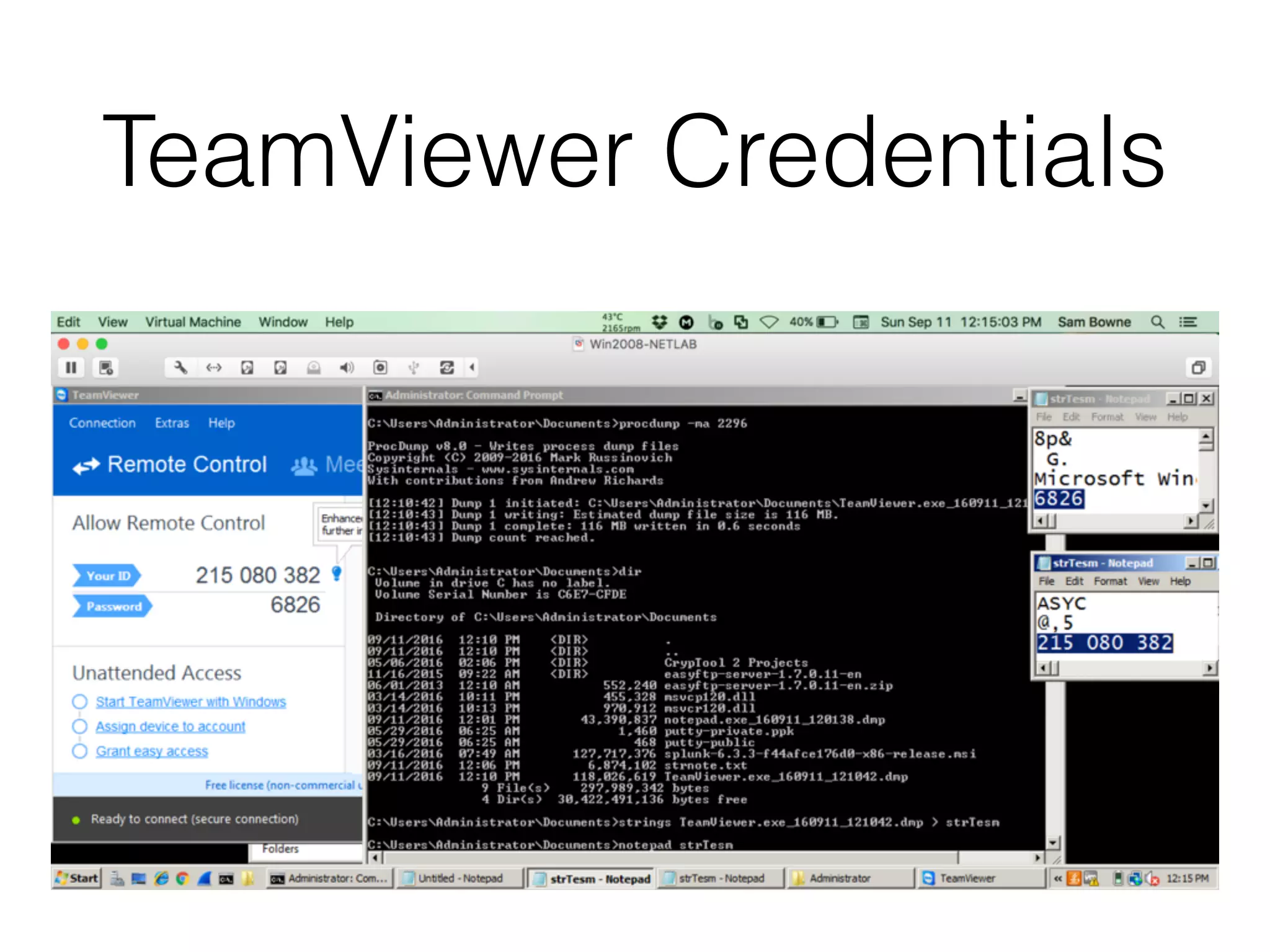

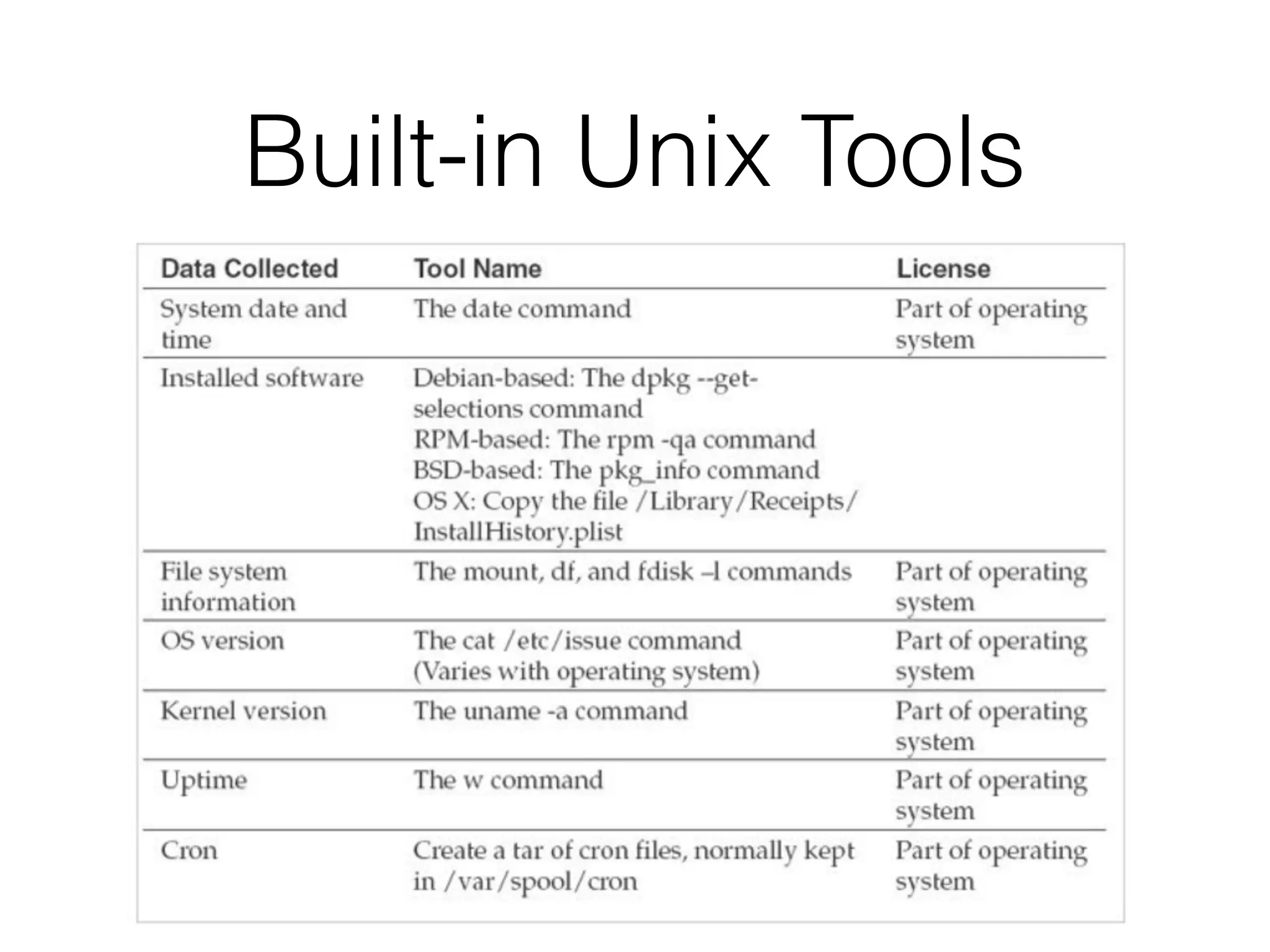

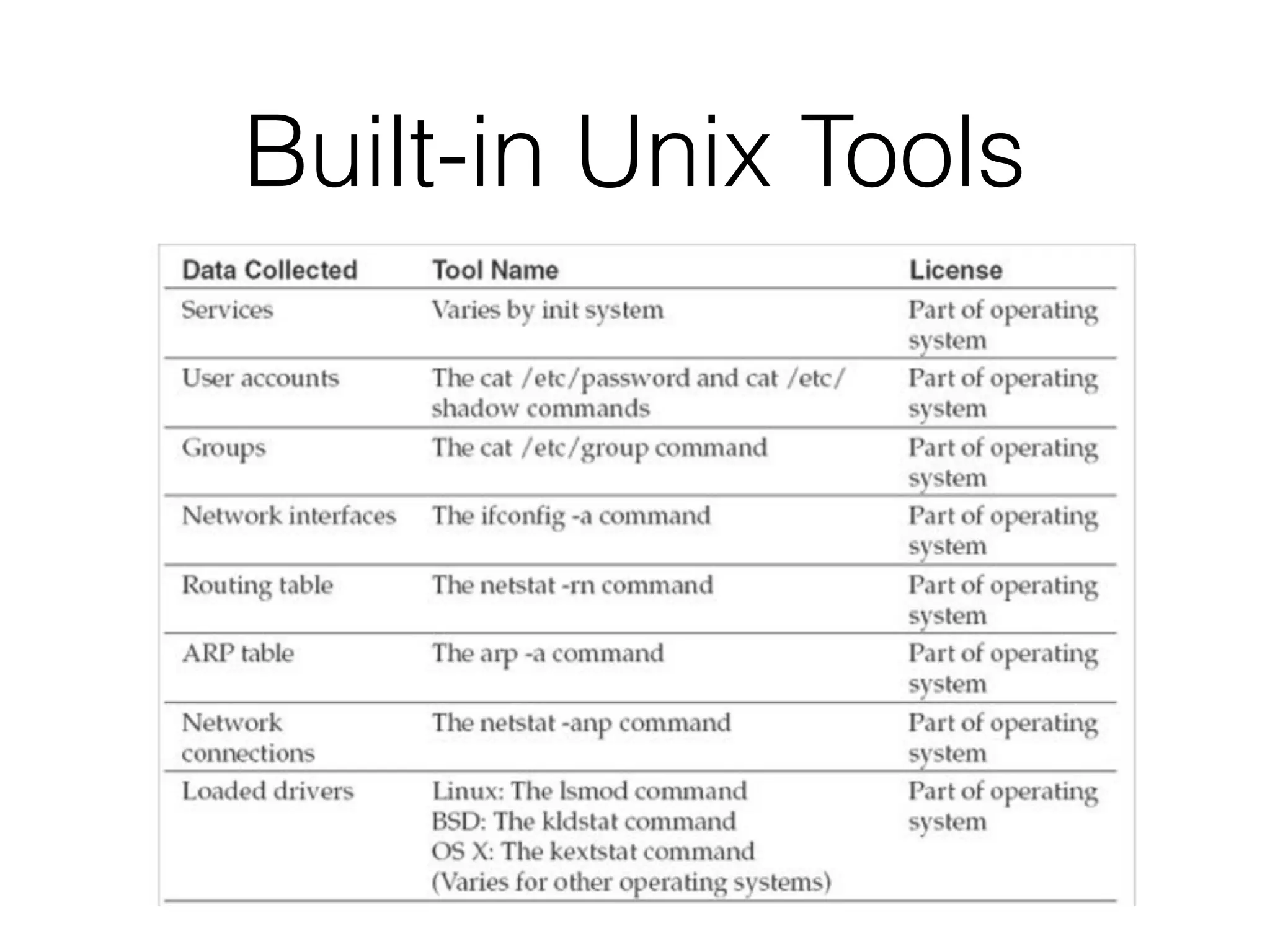

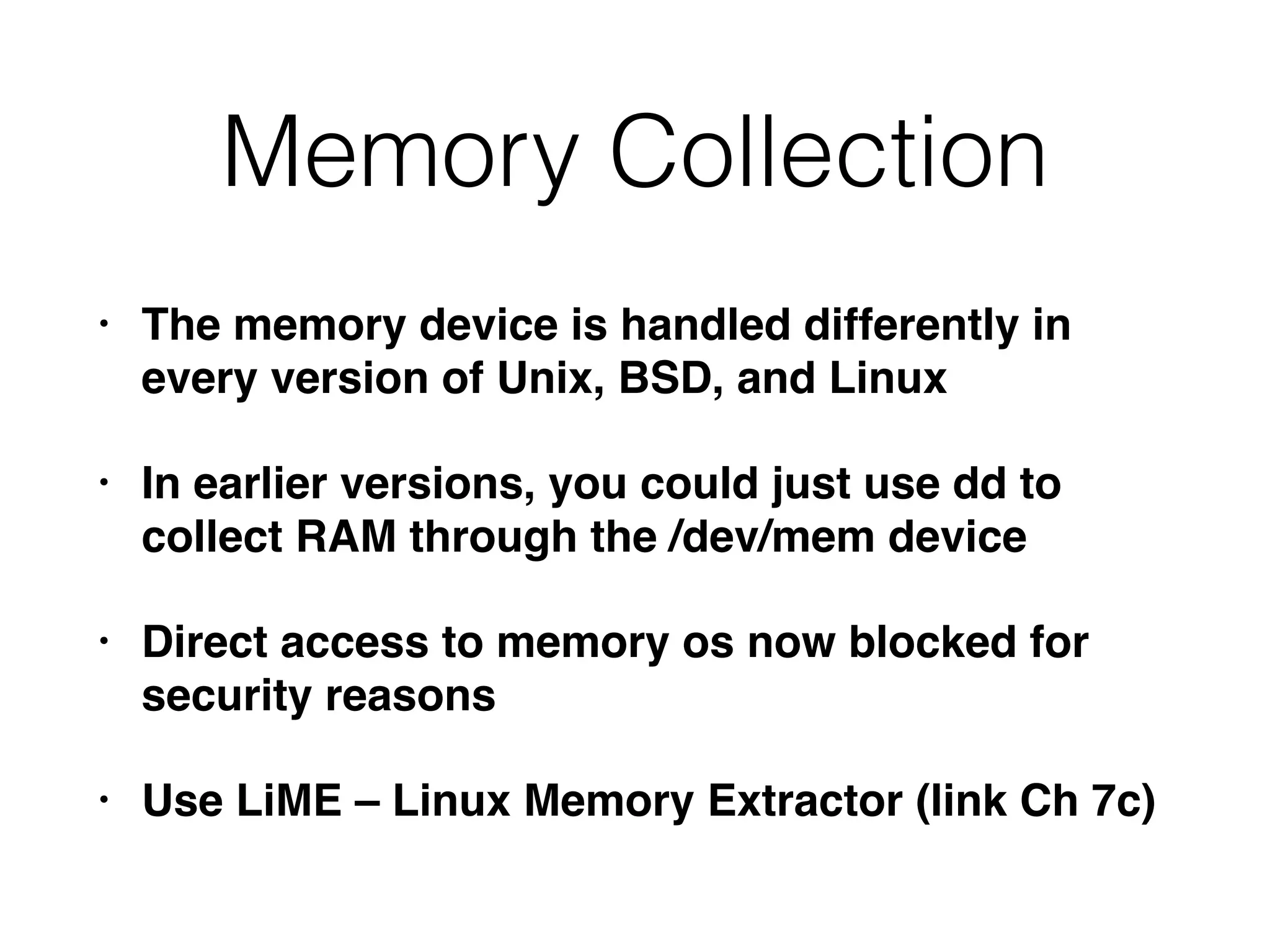

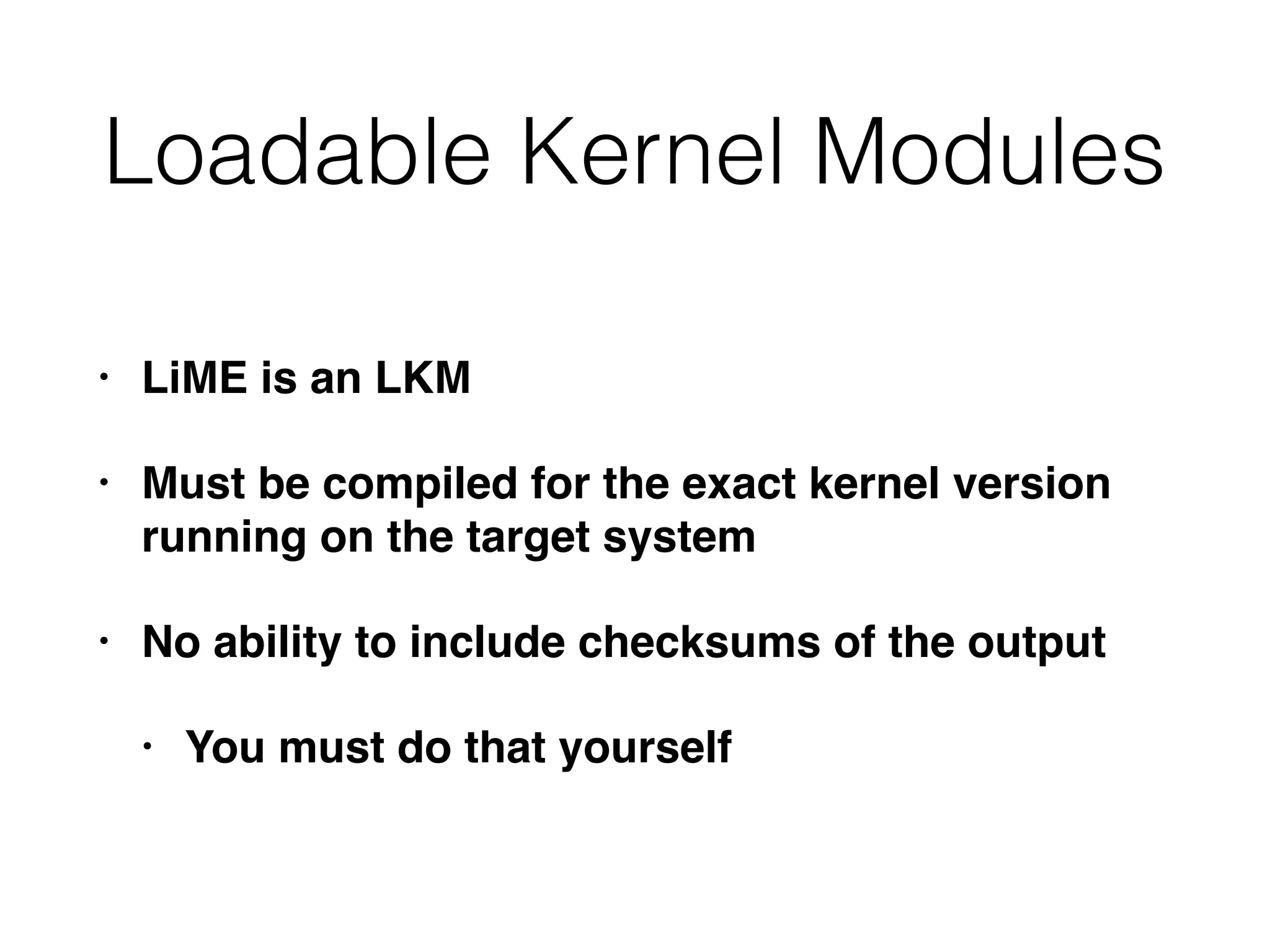

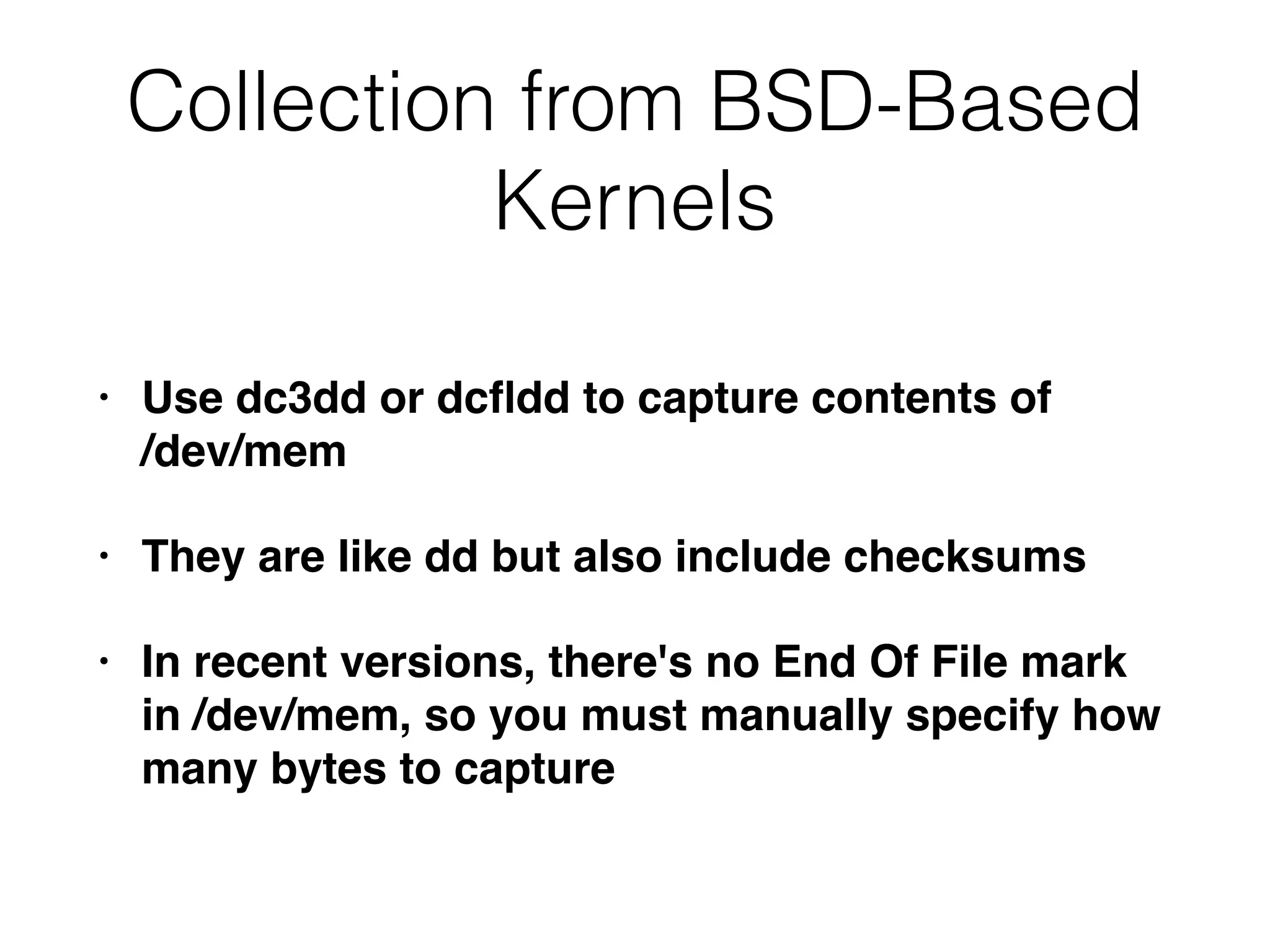

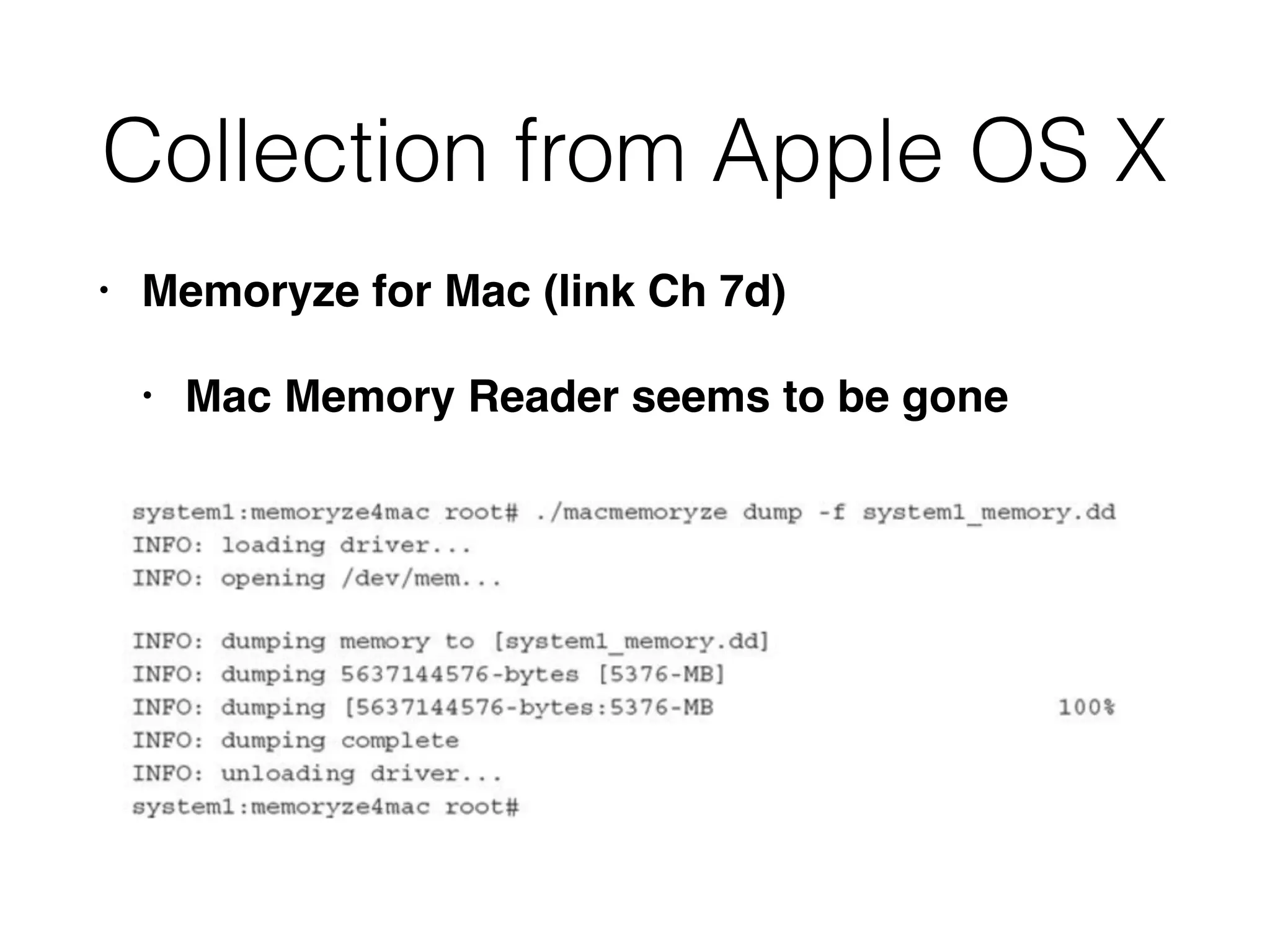

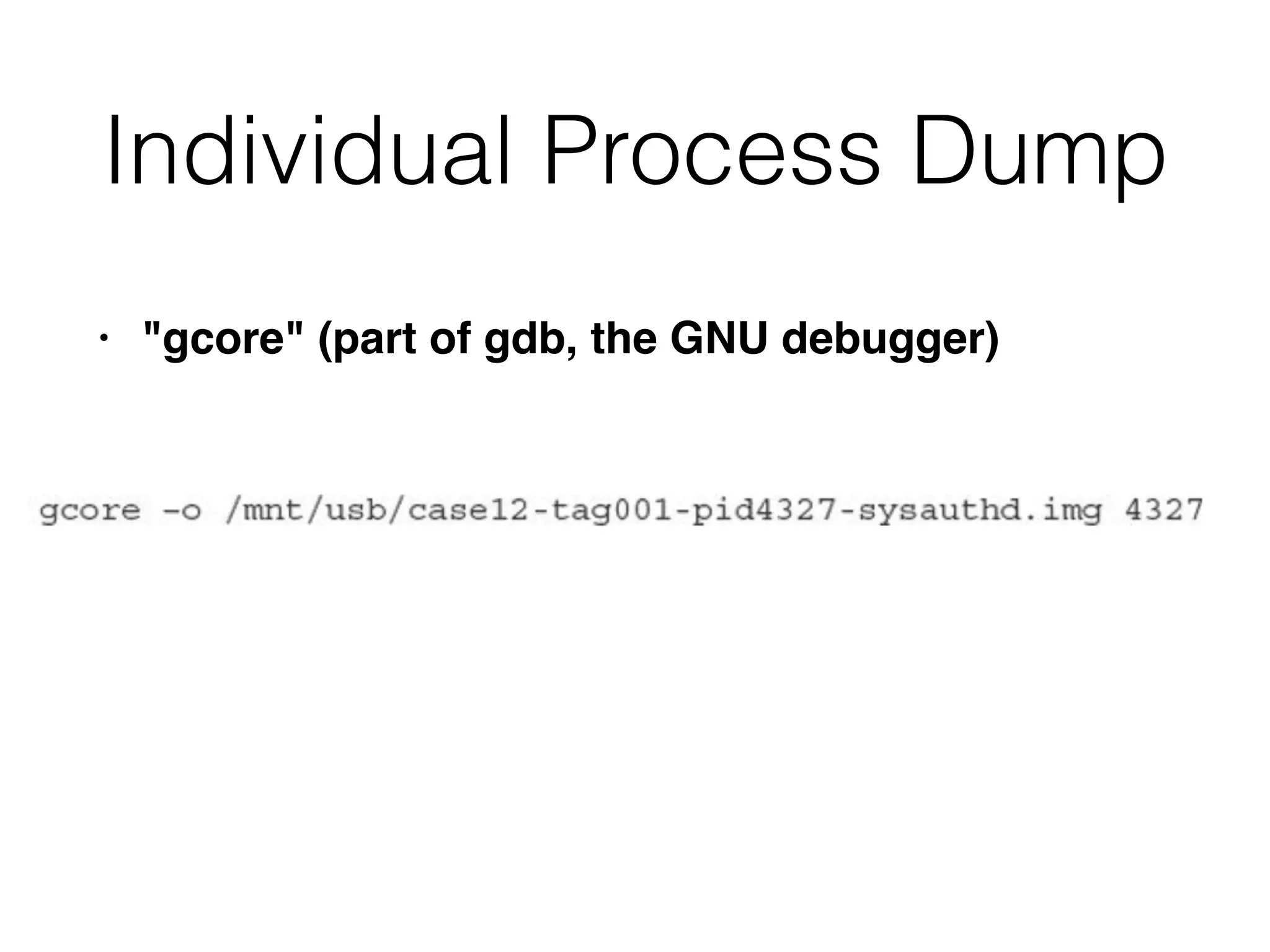

The document discusses computer forensics and incident response, highlighting the importance of gathering preliminary evidence from multiple sources to establish the scope of an incident. It includes examples of data loss scenarios in which customer data was compromised, and it covers live data collection methods for preserving volatile evidence. The content emphasizes the need for a well-structured approach and points out potential pitfalls in forensic investigations.
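
As a rough illustration of live data collection, the sketch below runs a command, timestamps the result, and records a SHA-256 digest of the captured output so that later tampering or corruption can be detected. It is not taken from the slides: the `collect` helper, its field names, and the placeholder command are all hypothetical, and a real live response would use trusted tools and commands appropriate to the system under investigation.

```python
import hashlib
import subprocess
import sys
from datetime import datetime, timezone


def collect(label, command):
    """Run one command and return its raw output with a UTC timestamp and SHA-256 digest.

    Hypothetical helper for illustration only; not a method described in the source.
    """
    collected_at = datetime.now(timezone.utc).isoformat()
    # check=False: a failing command is still recorded rather than raising.
    result = subprocess.run(command, capture_output=True, check=False)
    return {
        "label": label,
        "command": command,
        "collected_at_utc": collected_at,
        "exit_code": result.returncode,
        # Digest of the exact bytes captured, for later integrity checks.
        "sha256": hashlib.sha256(result.stdout).hexdigest(),
        "output": result.stdout,
    }


if __name__ == "__main__":
    # Placeholder command (assumption): a real collection would query volatile
    # state such as network connections or running processes.
    record = collect("demo", [sys.executable, "-c", "print('volatile data')"])
    print(record["label"], record["collected_at_utc"], record["sha256"])
```

Keeping the digest next to the raw output means the record can be re-verified later by hashing `output` again and comparing the result with `sha256`.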