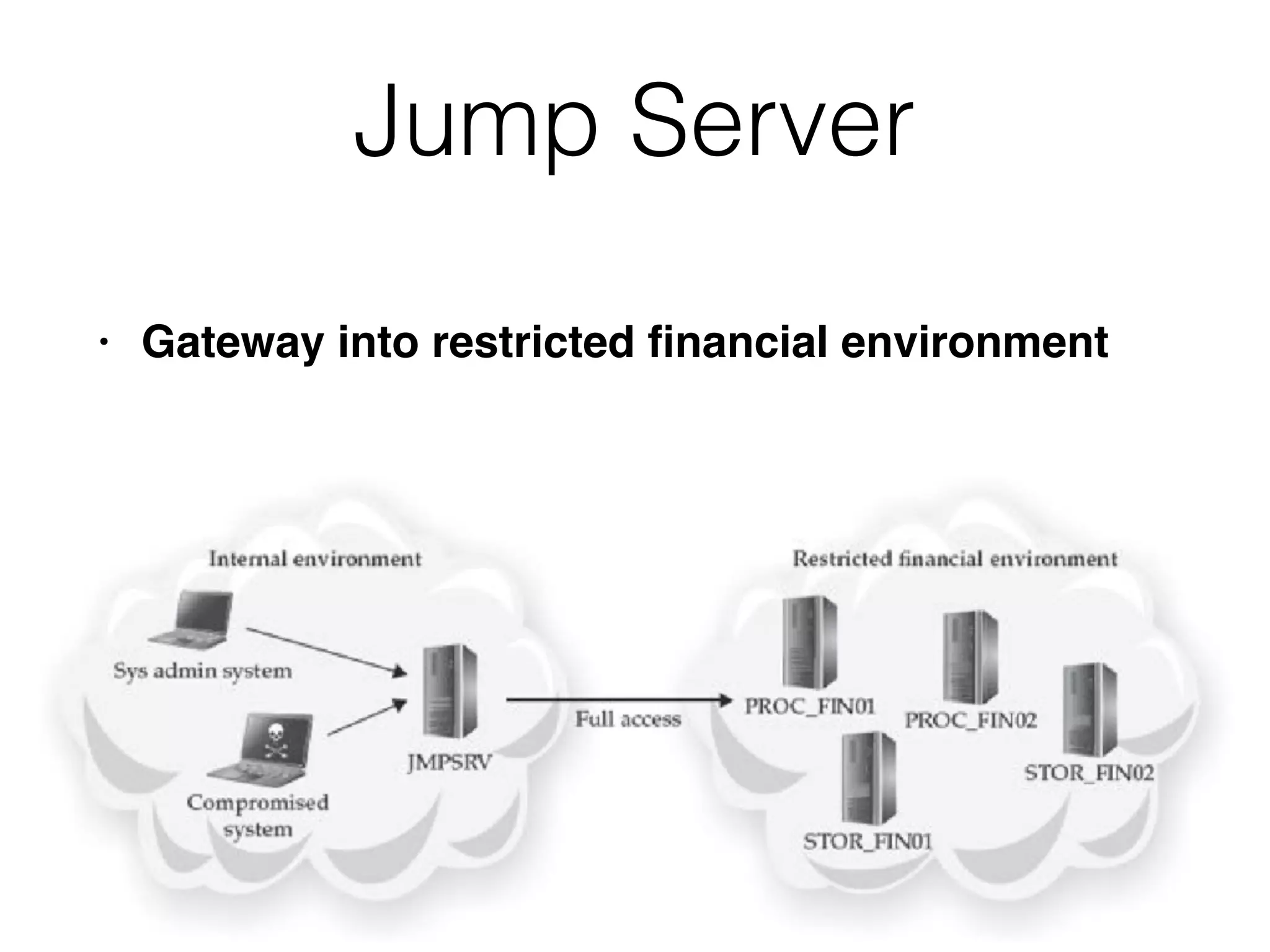

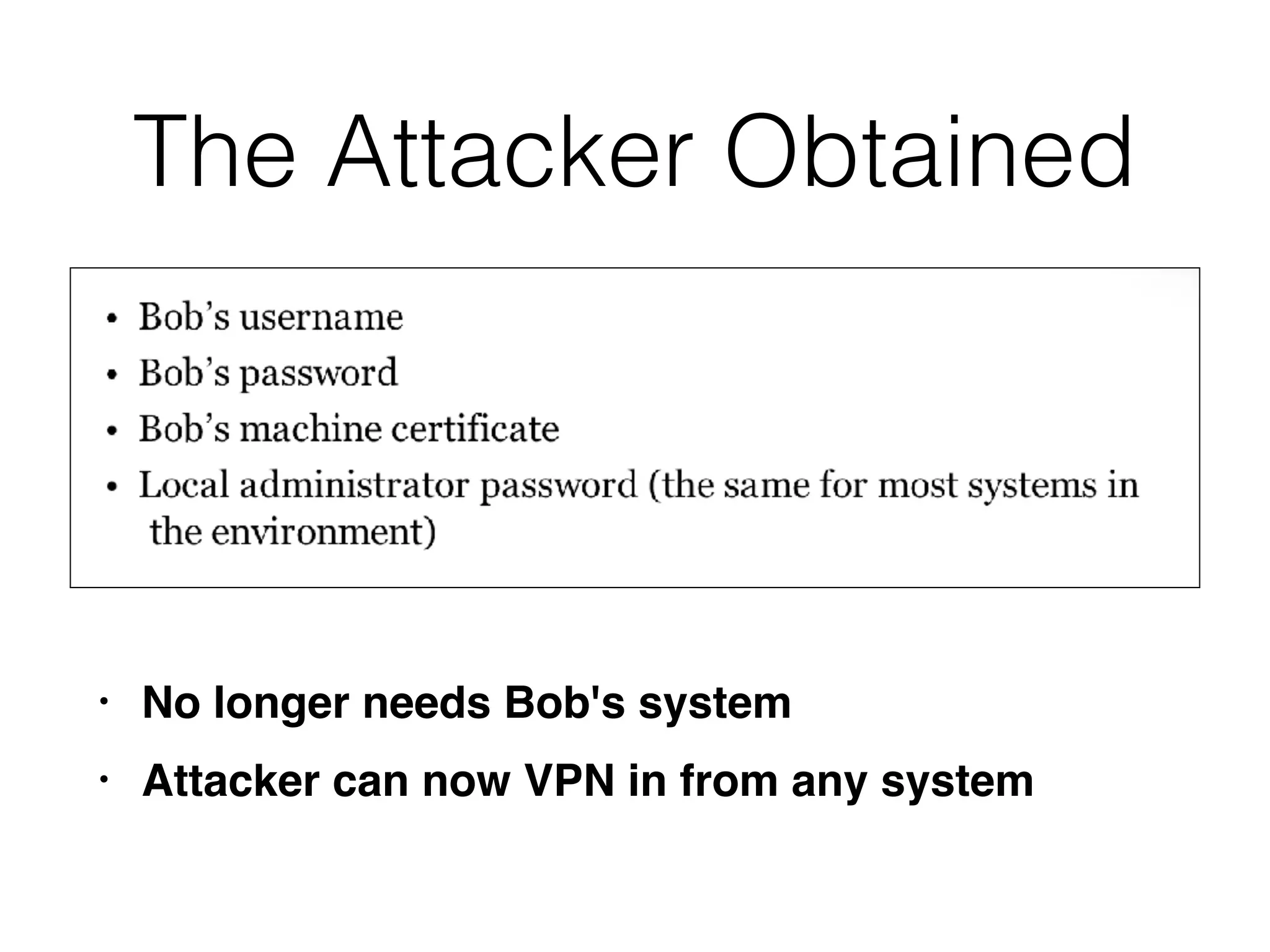

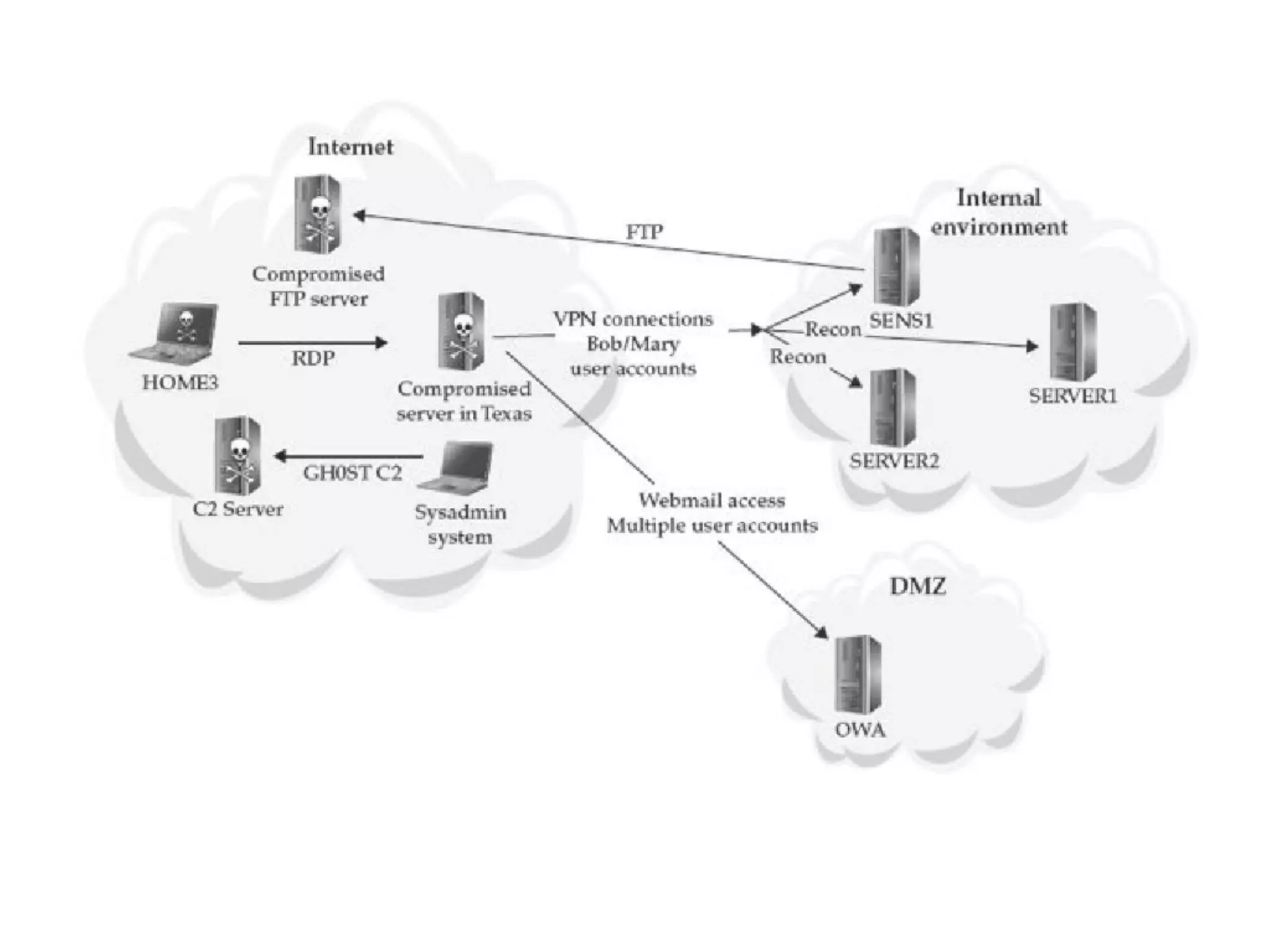

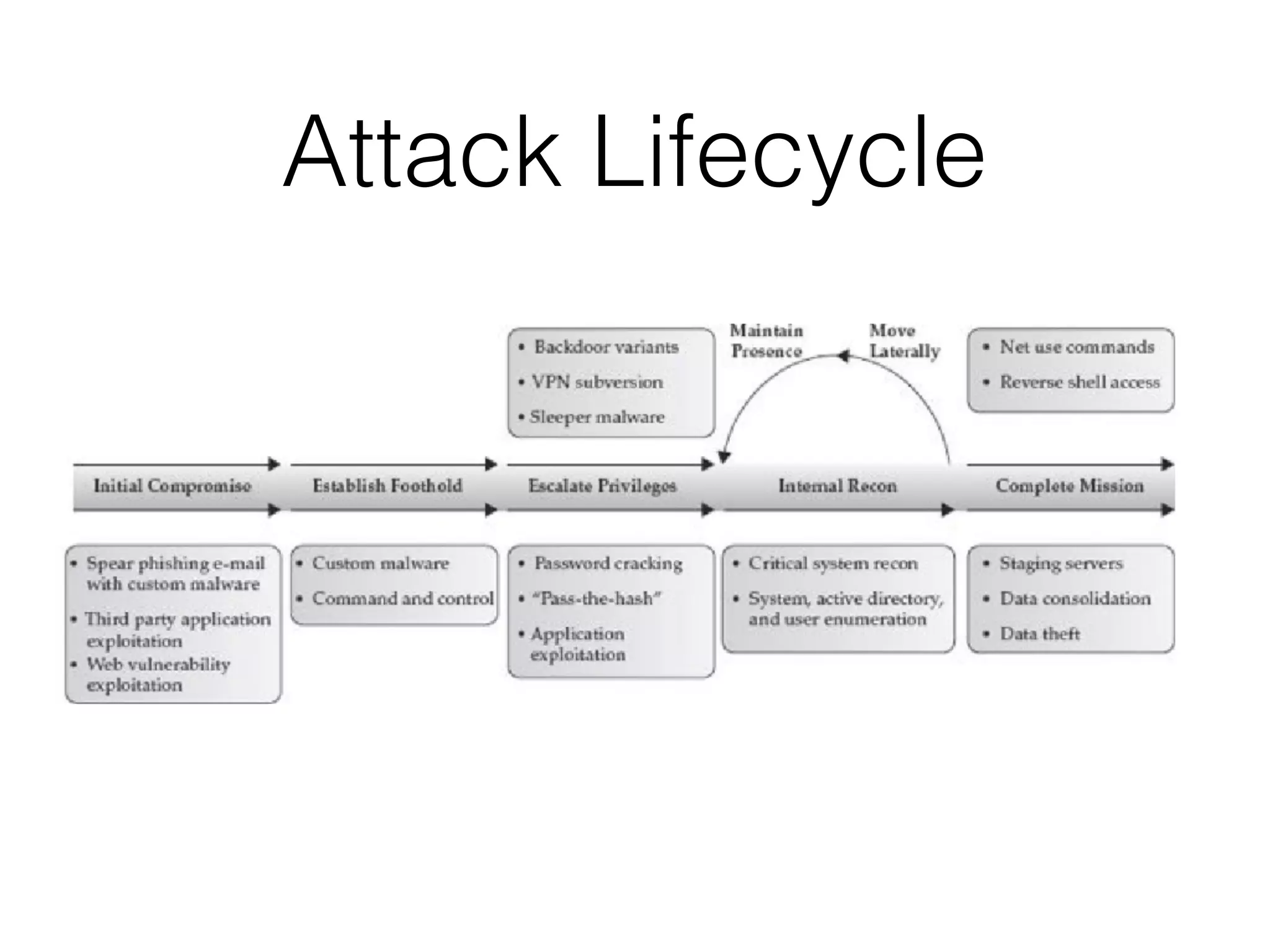

The document summarizes two real-world incident response cases. In the first, a 10-month attack, the attacker exploited an SQL injection vulnerability and eventually stole millions of payment card records over a three-month period. In the second, a spear-phishing email installed malware that let the attacker compromise VPN credentials and steal sensitive engineering data over several weeks, until a SIEM detected anomalous VPN access patterns. Both cases led to comprehensive incident response and remediation efforts.