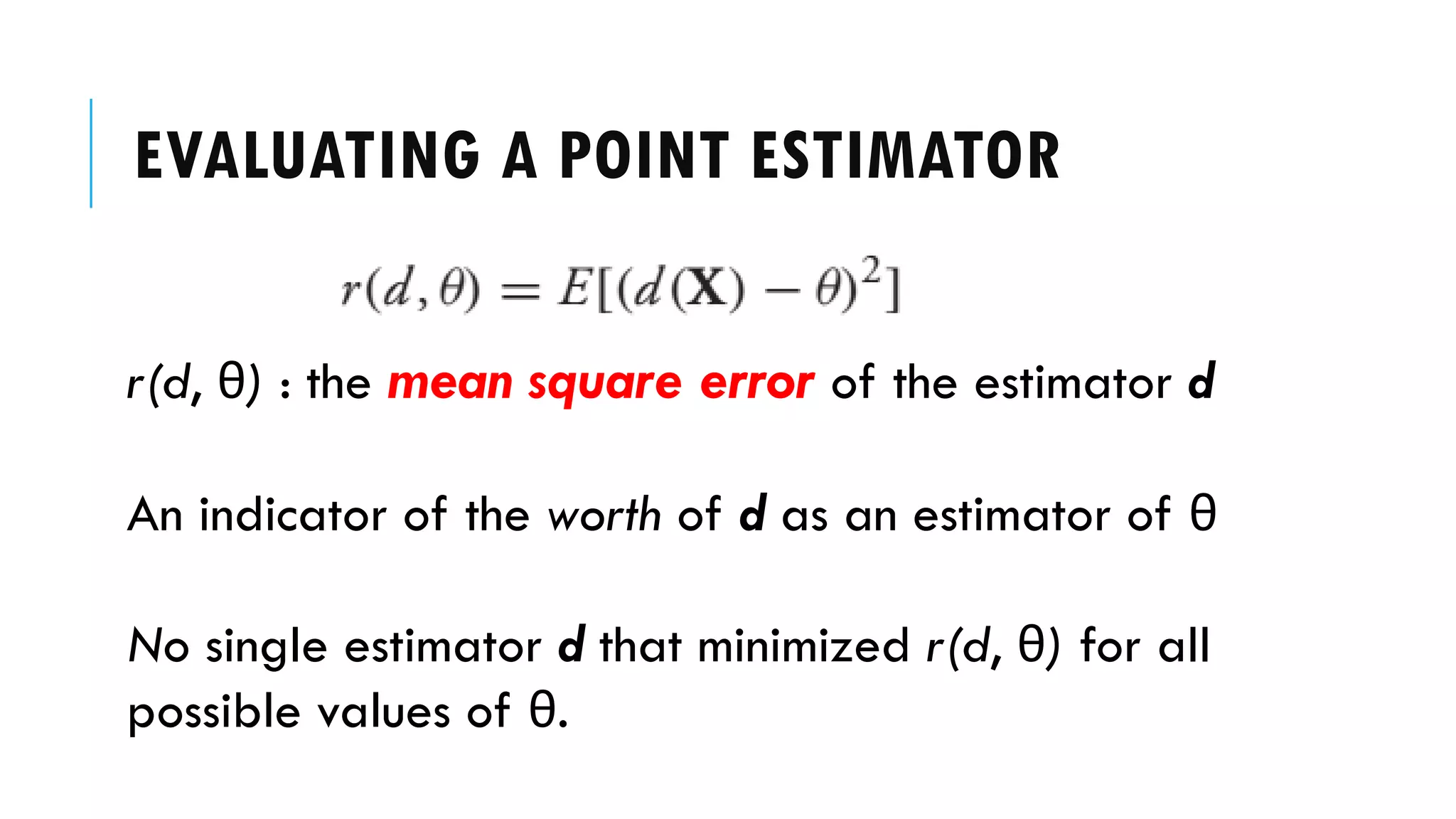

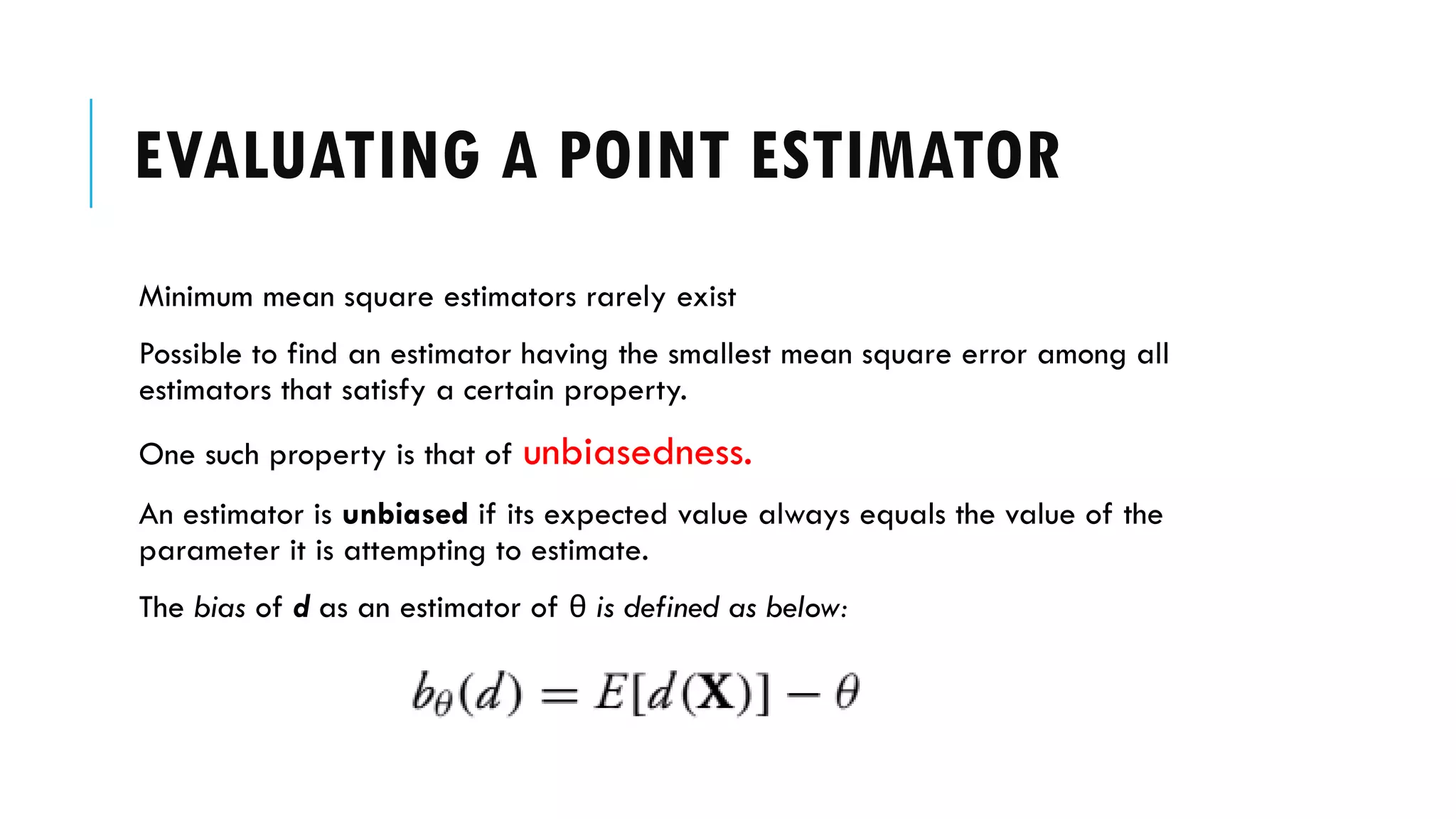

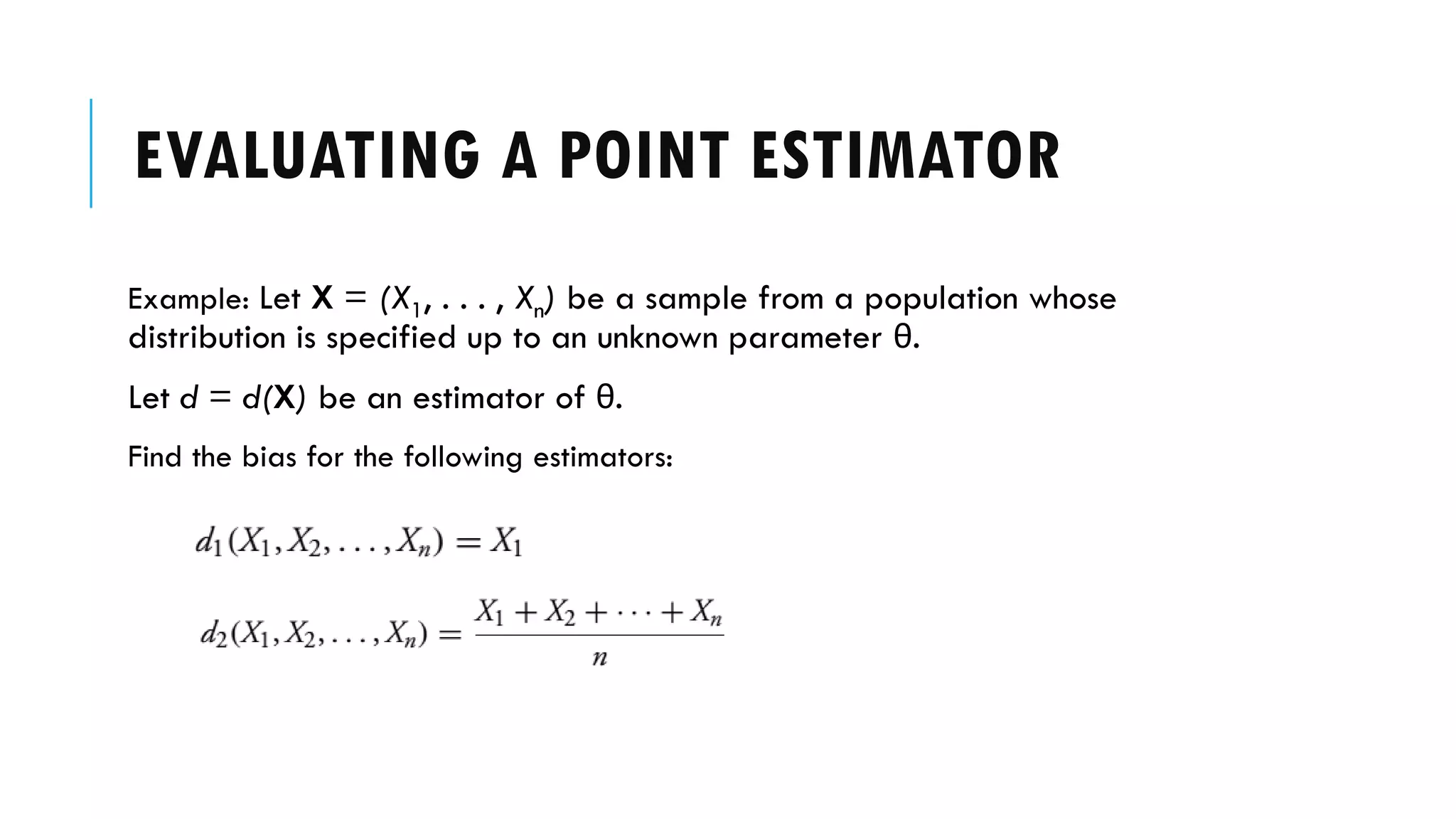

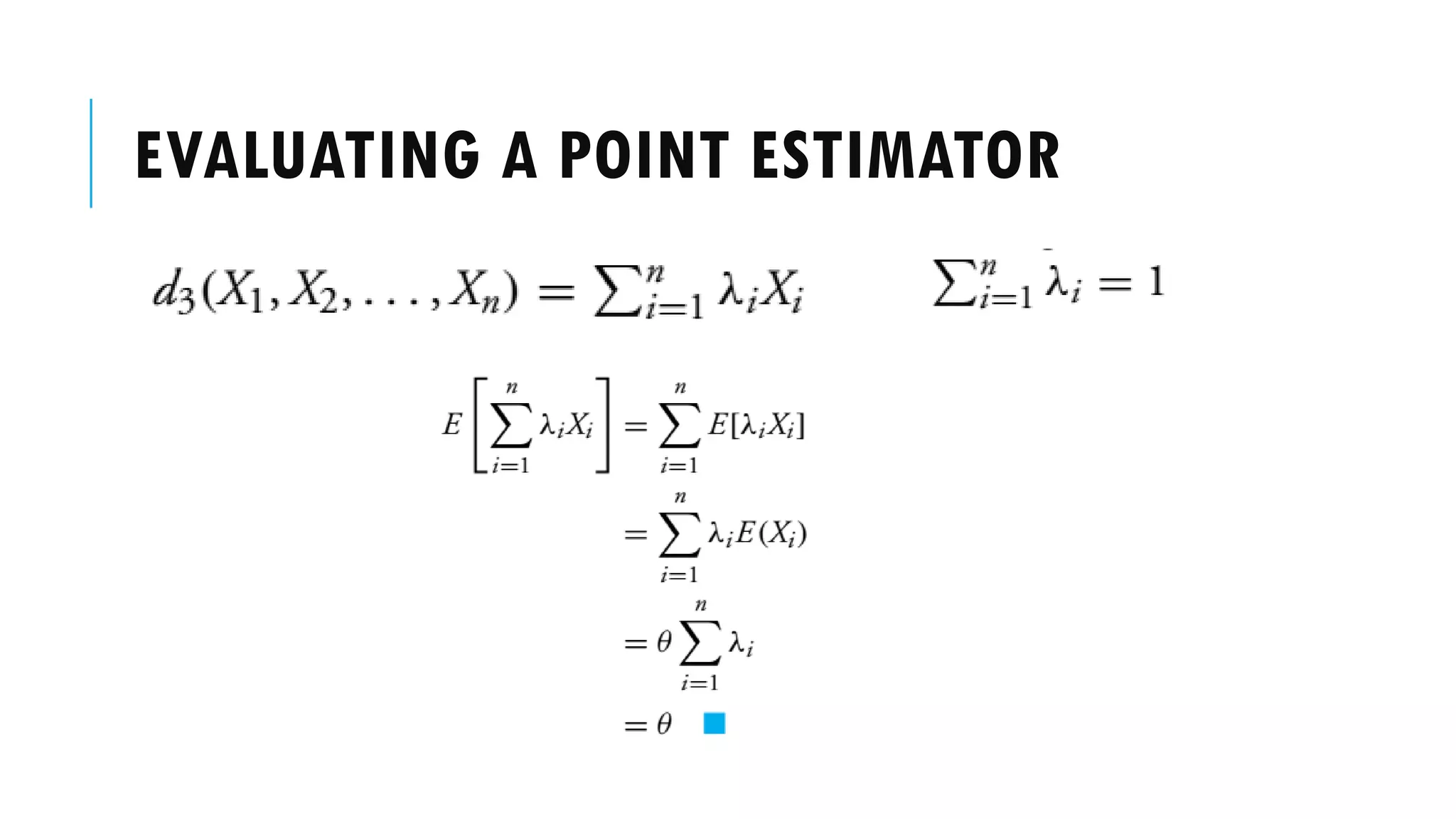

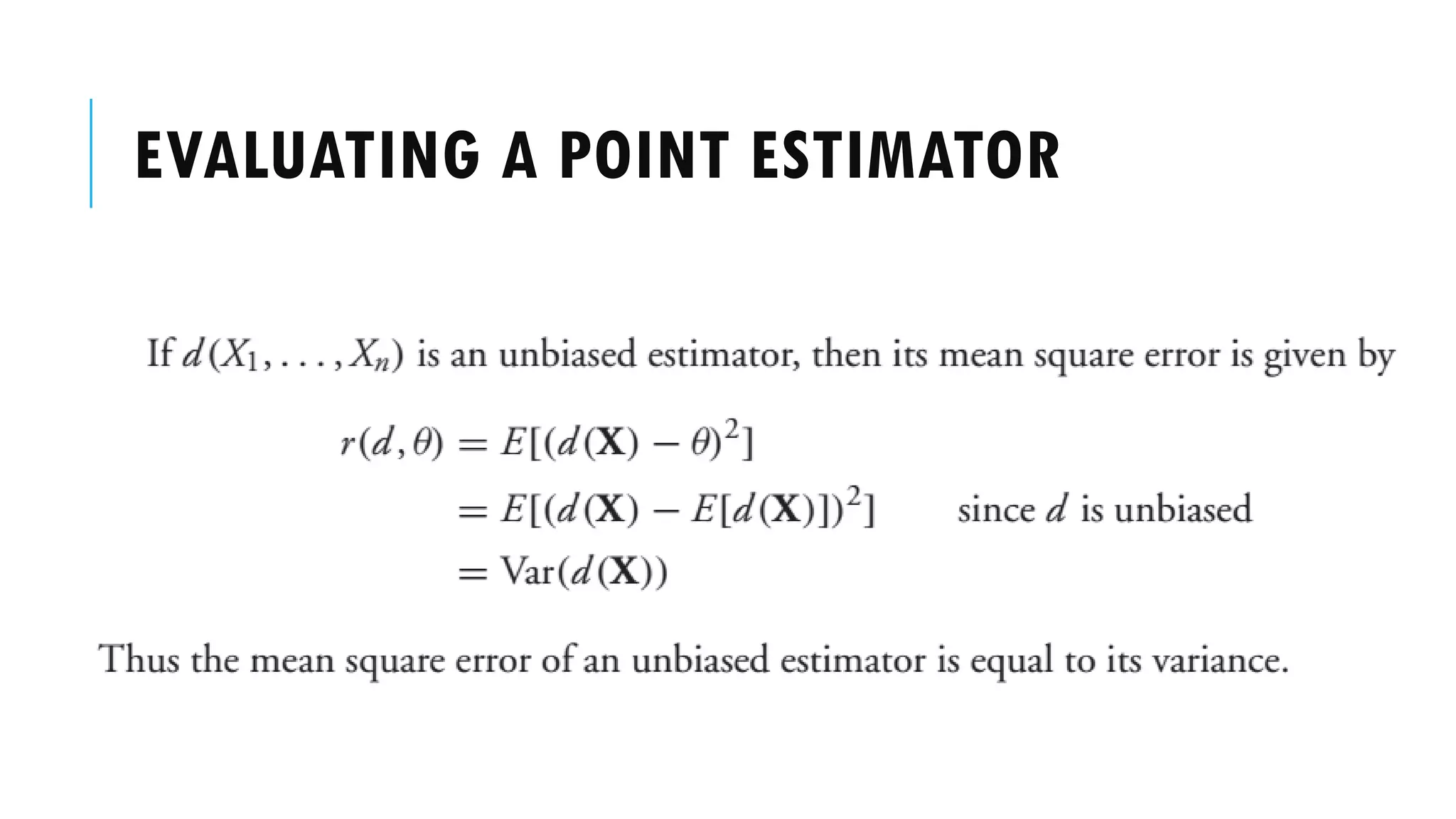

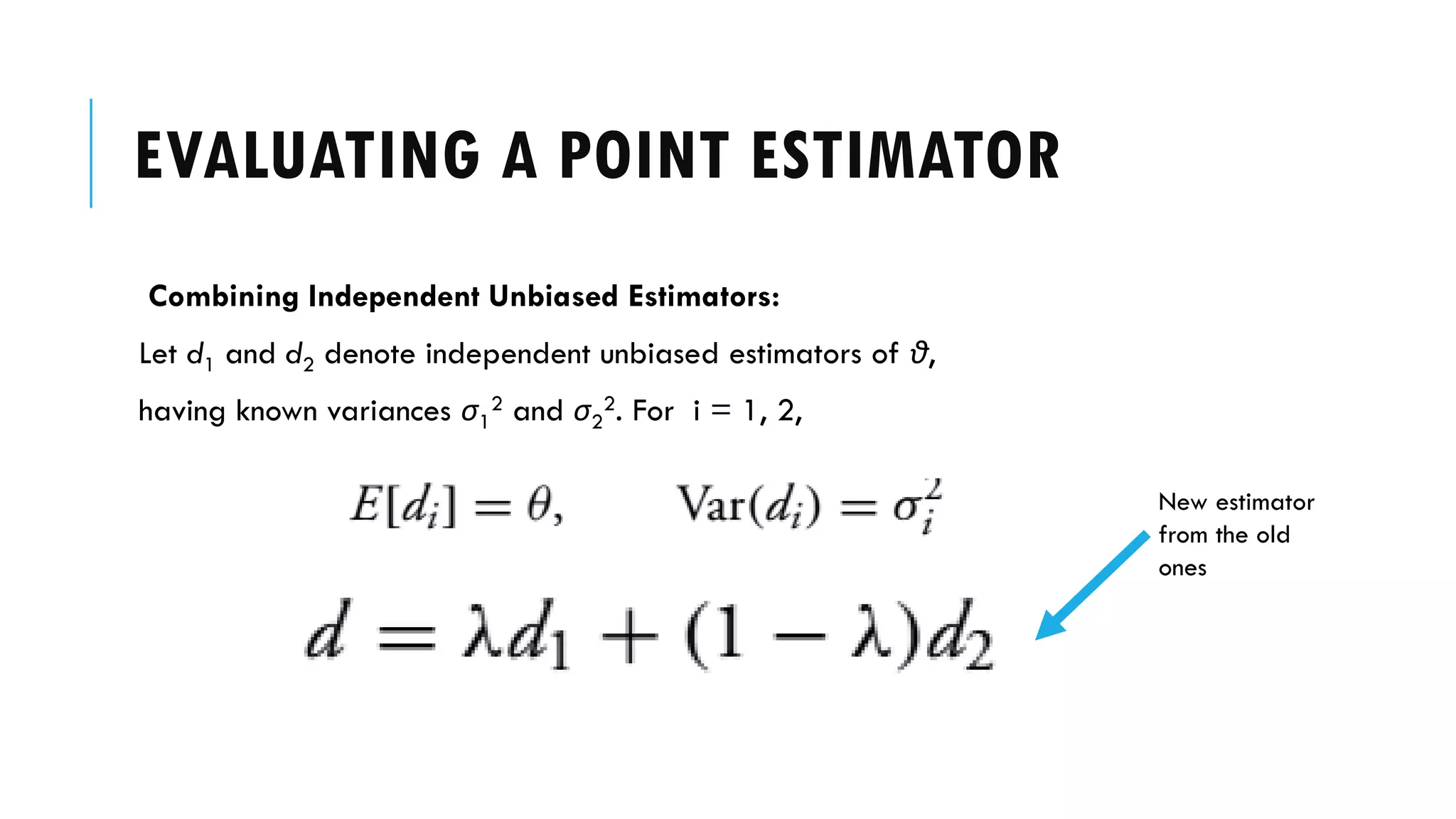

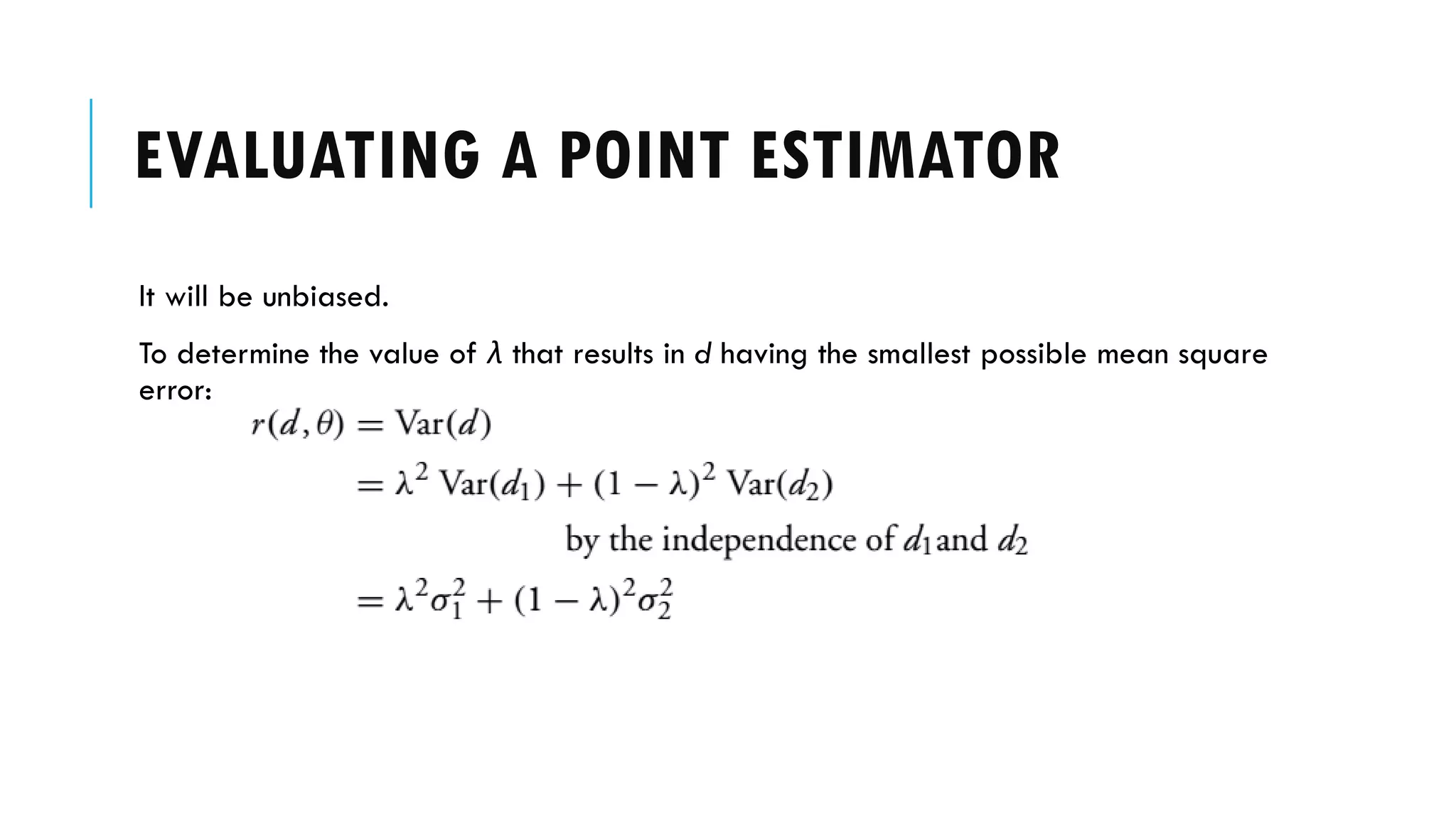

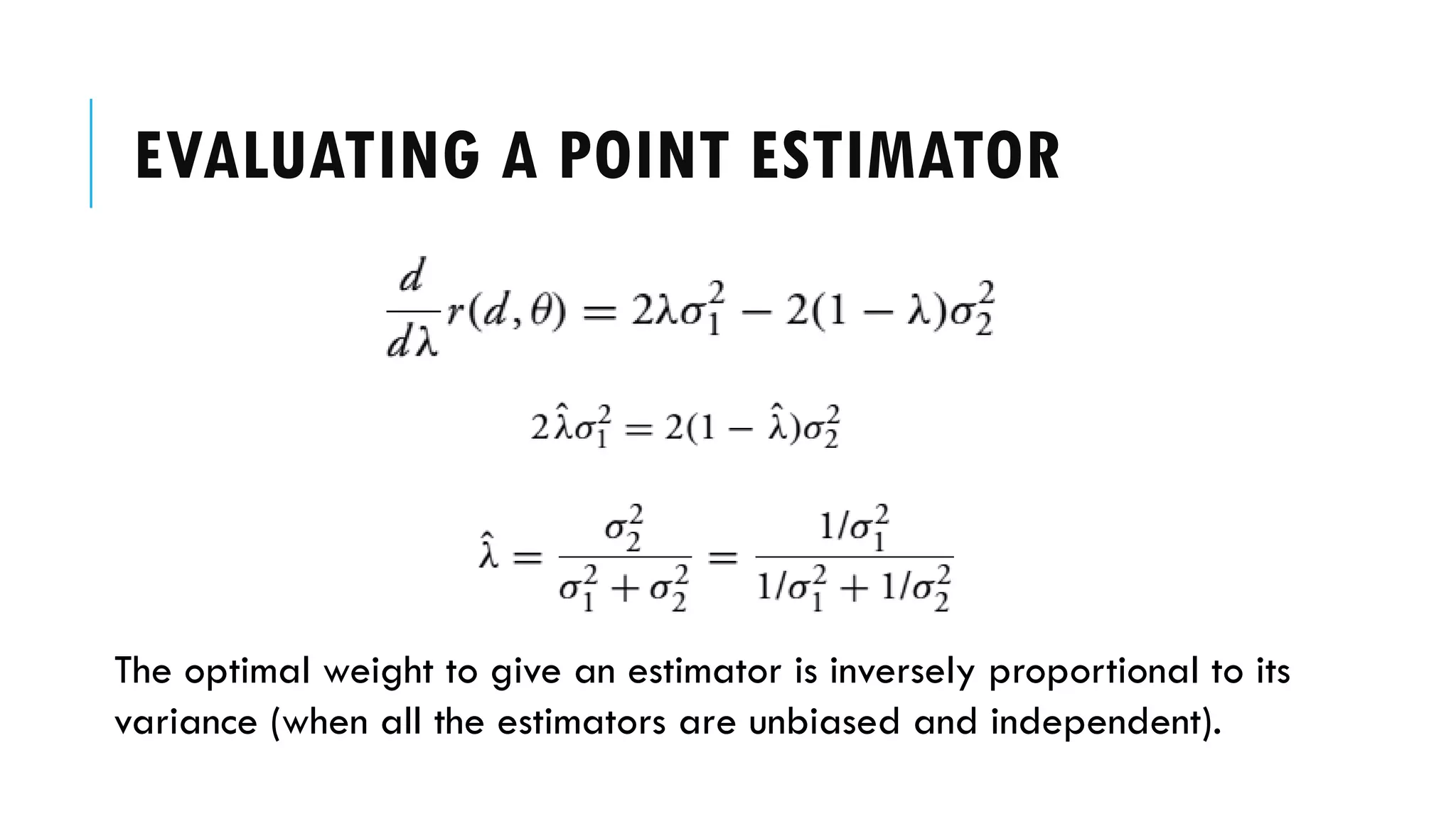

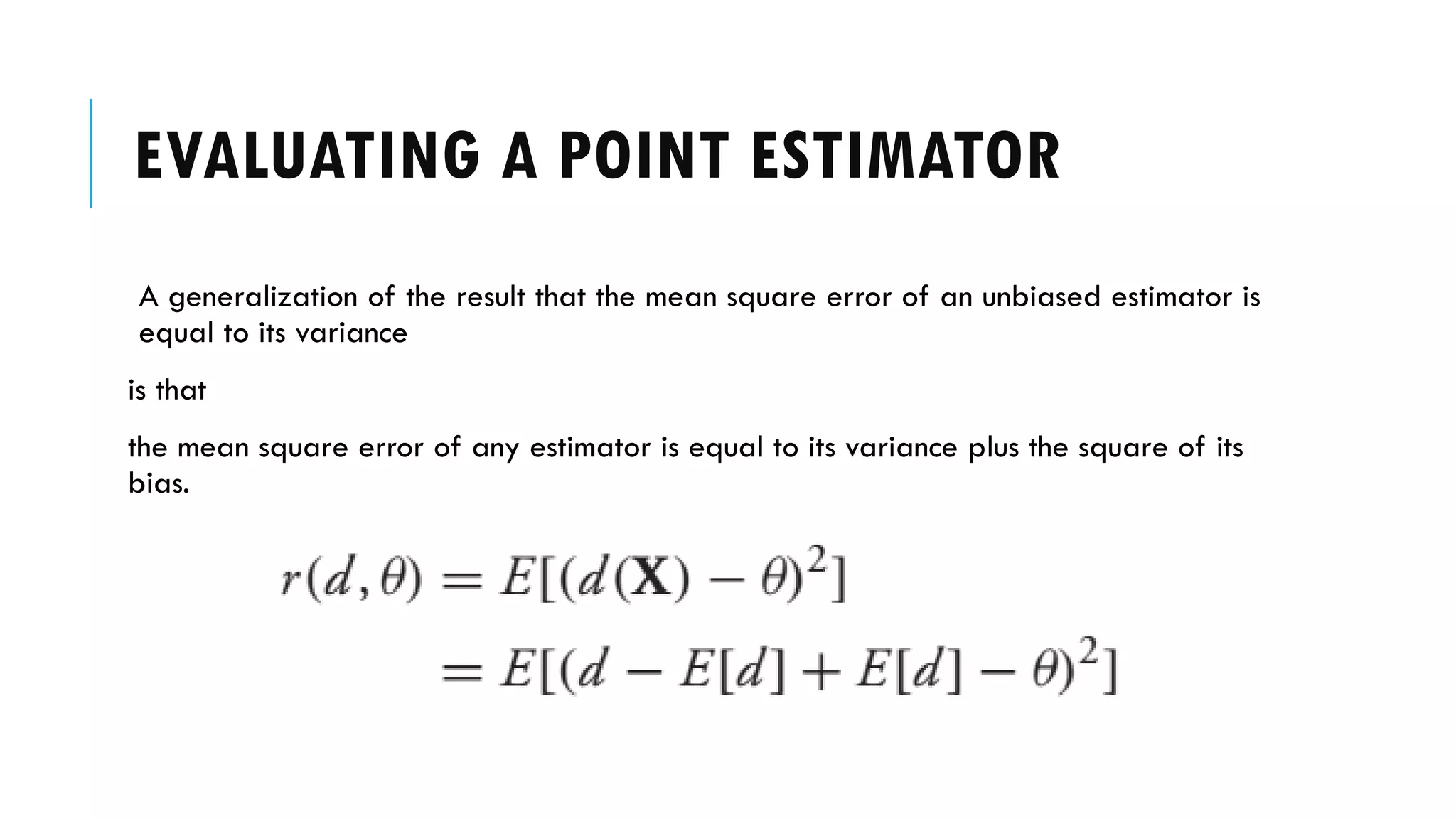

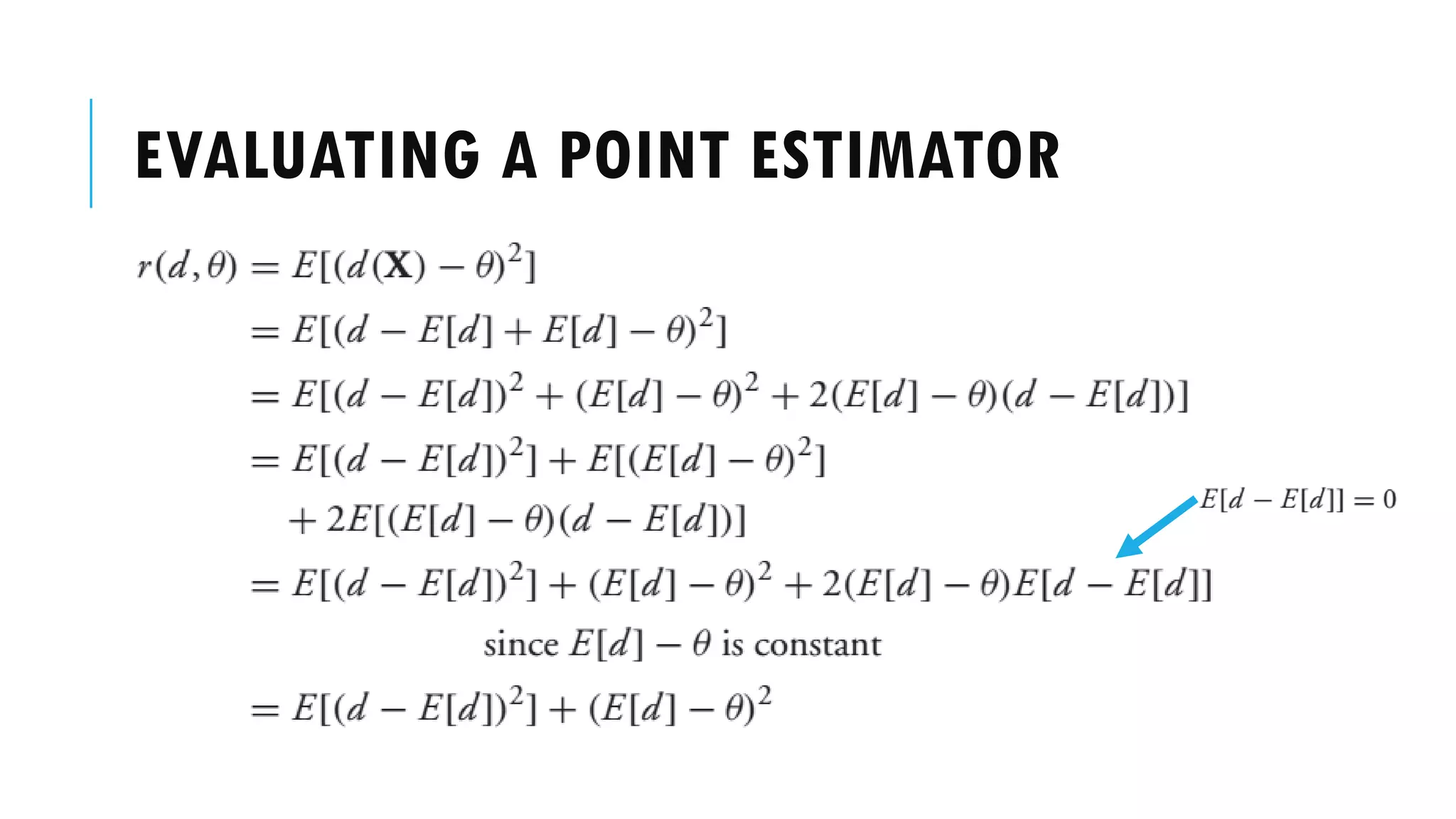

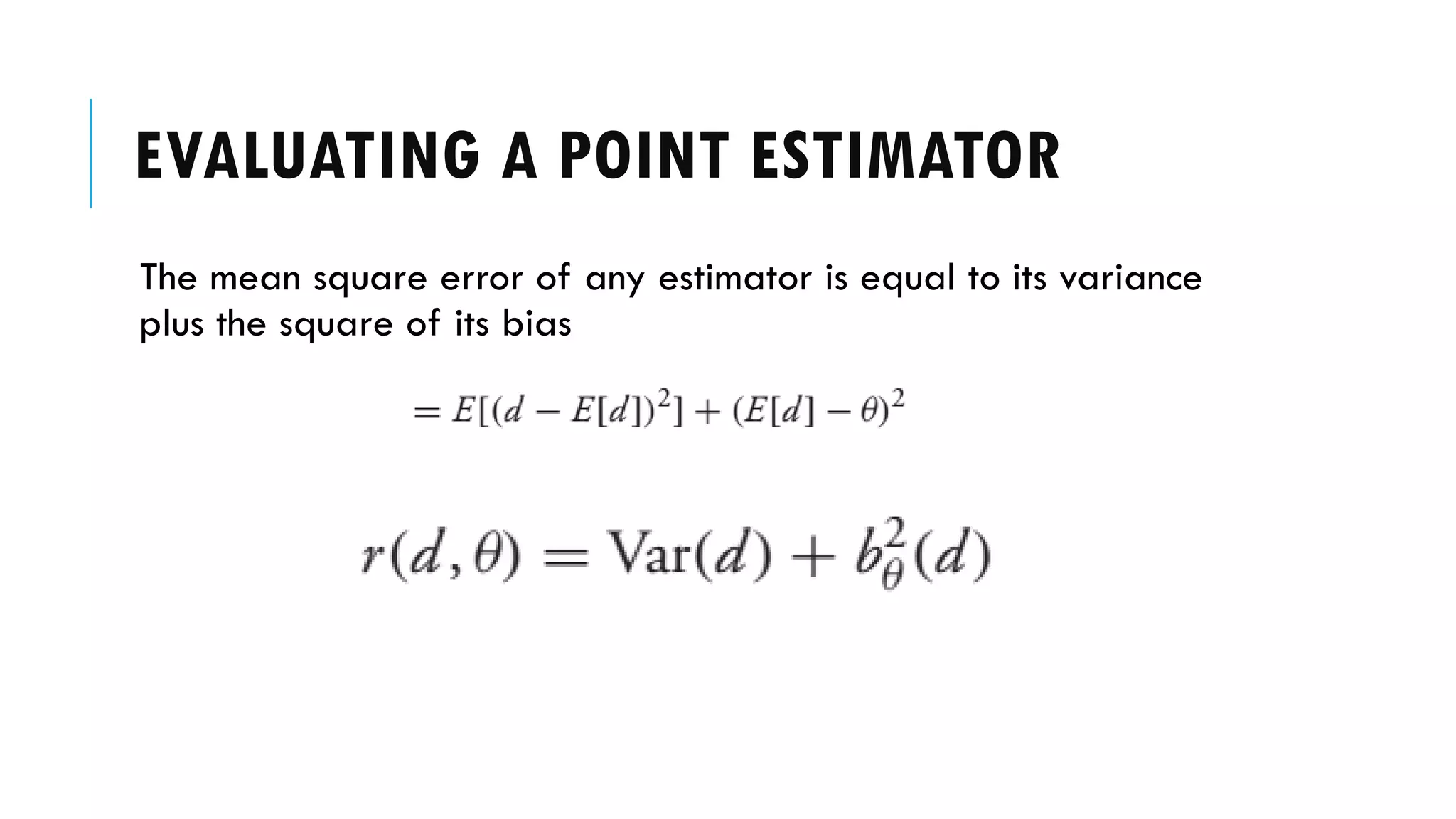

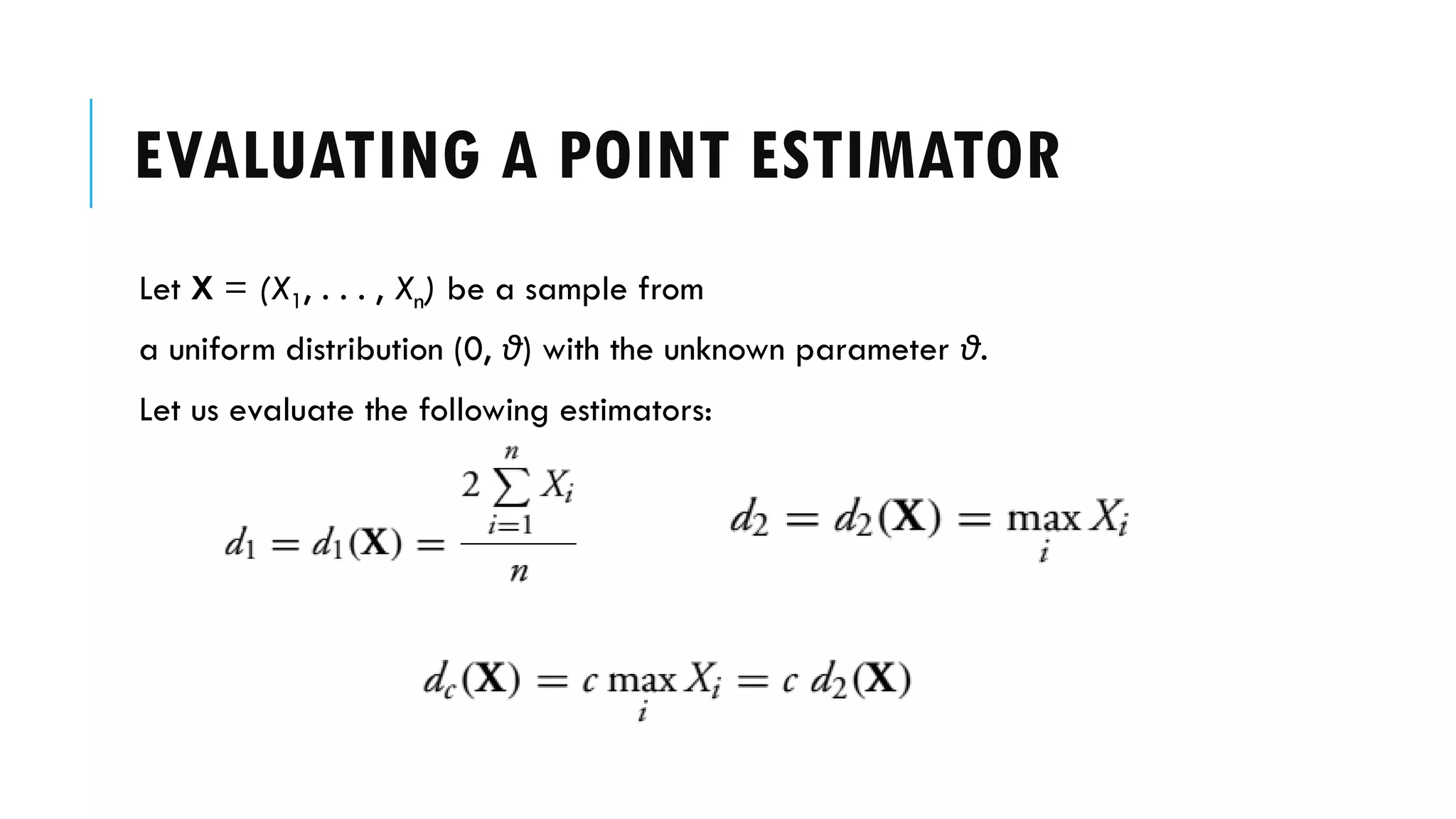

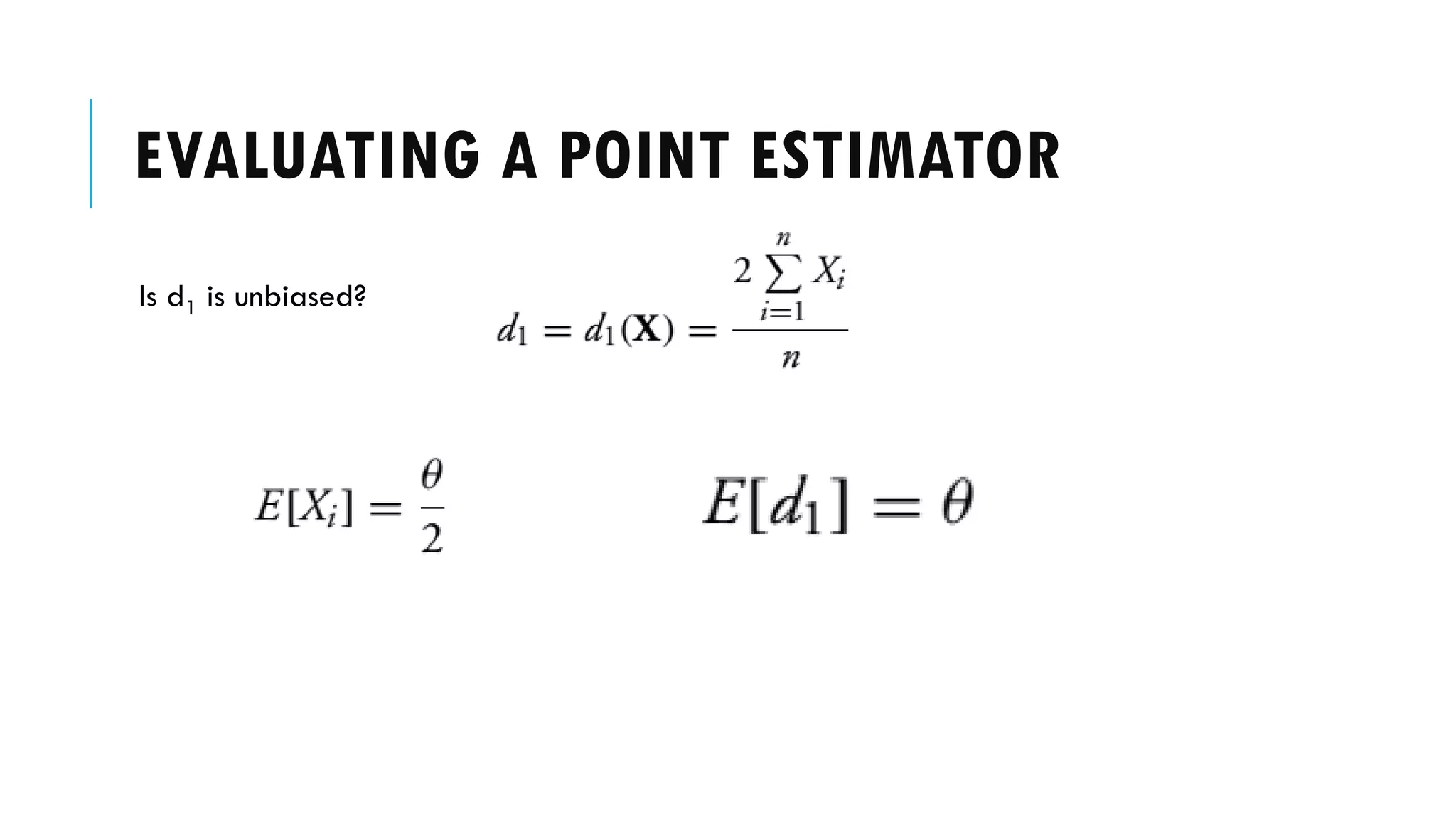

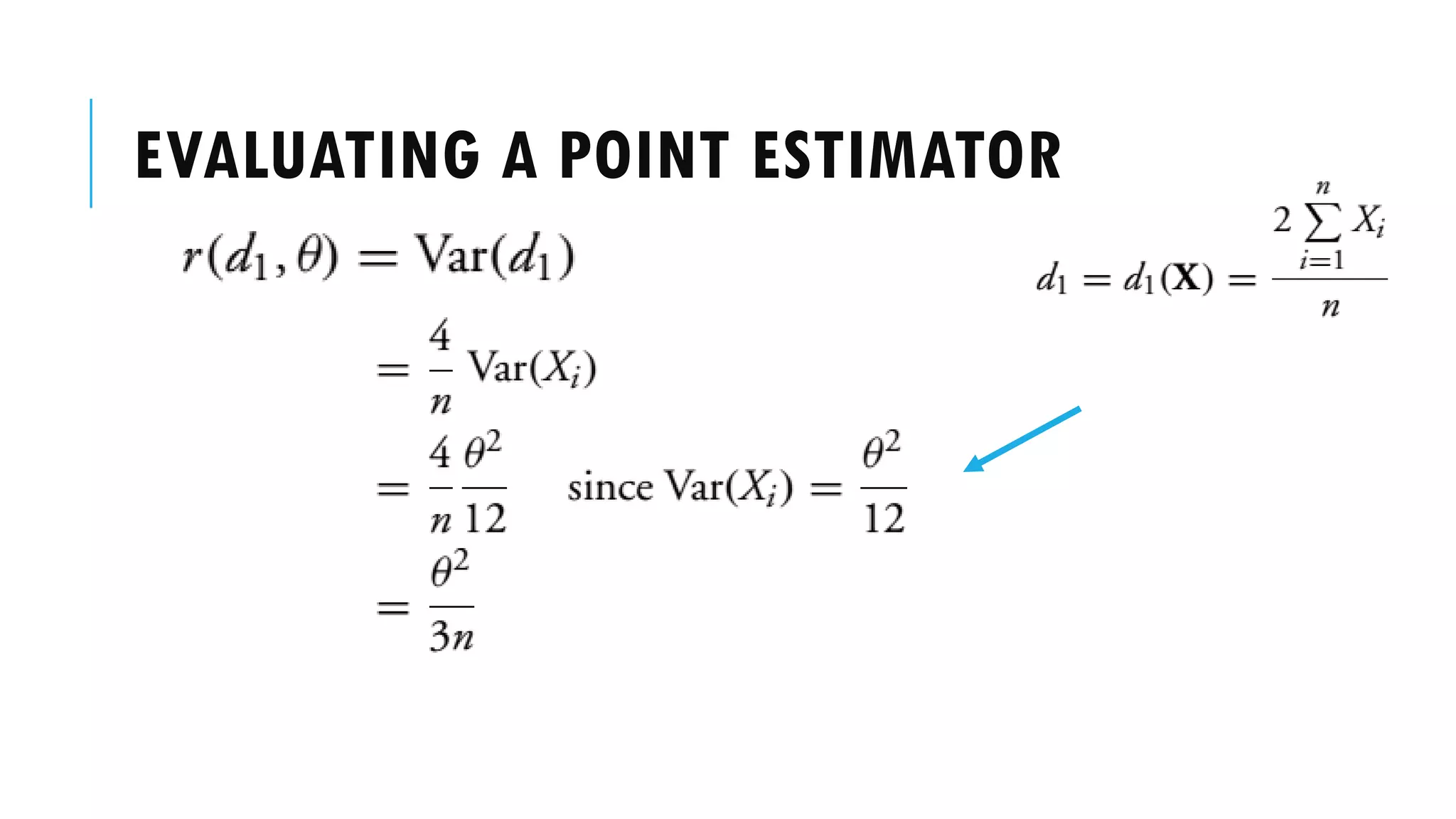

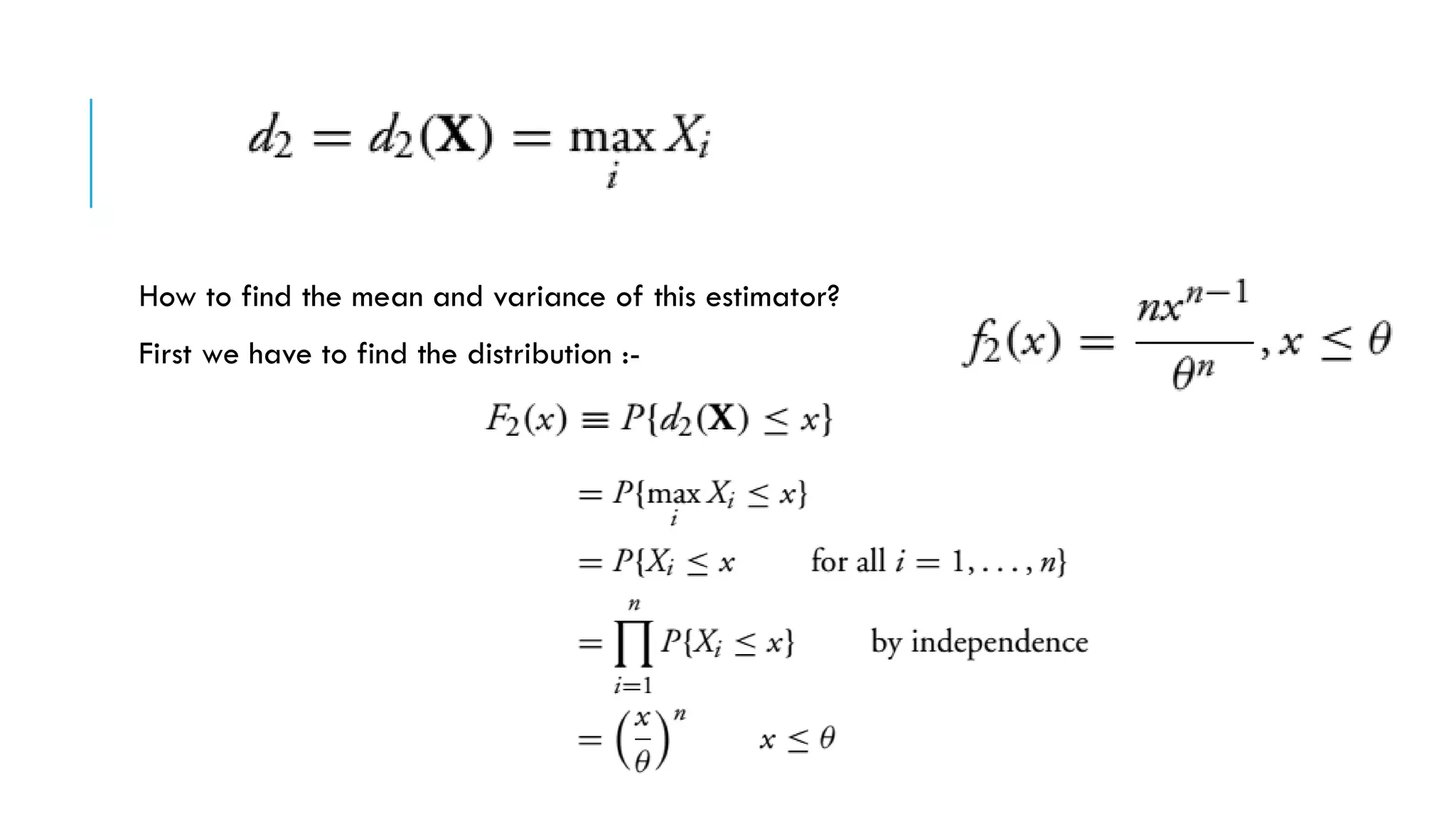

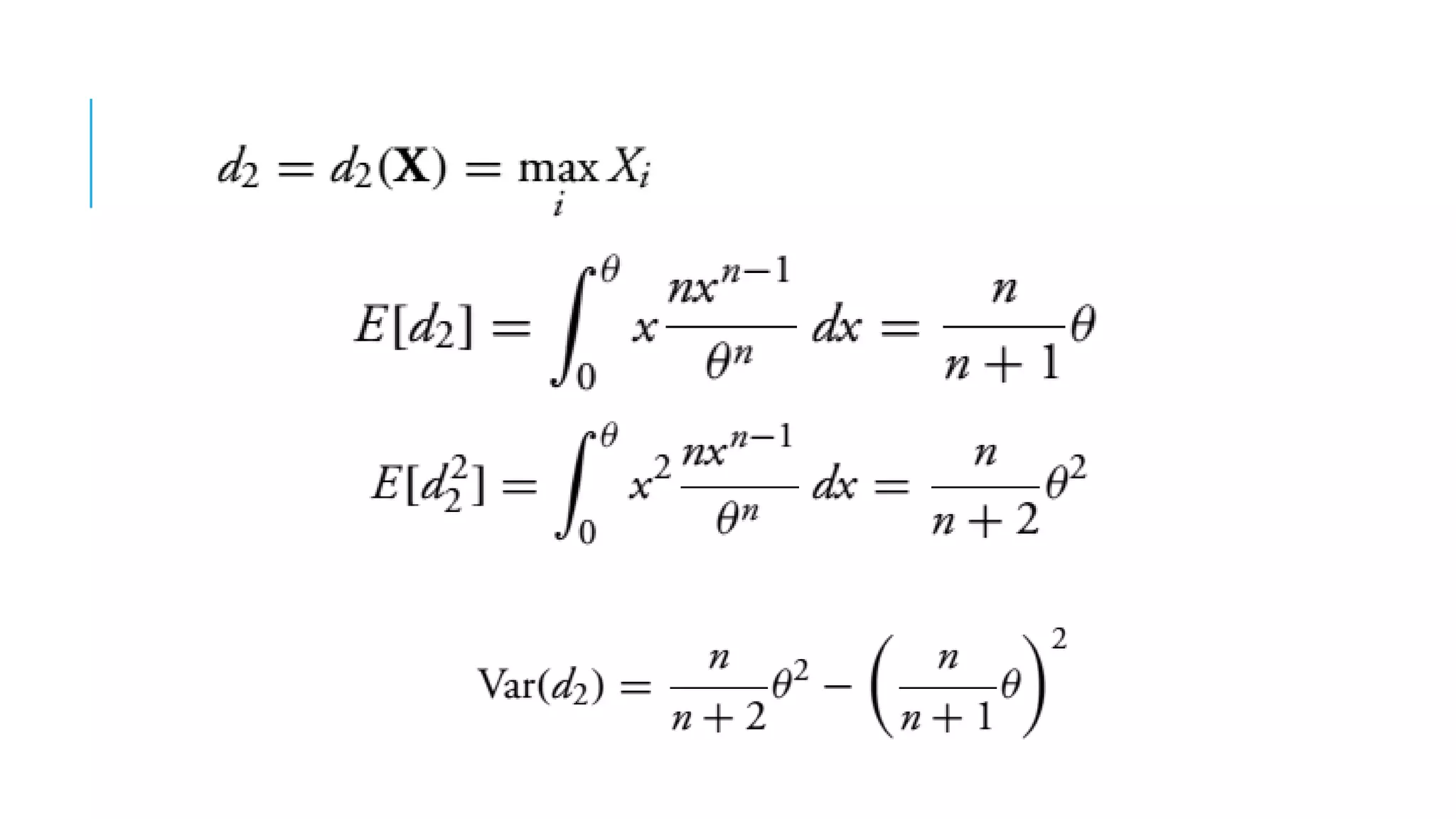

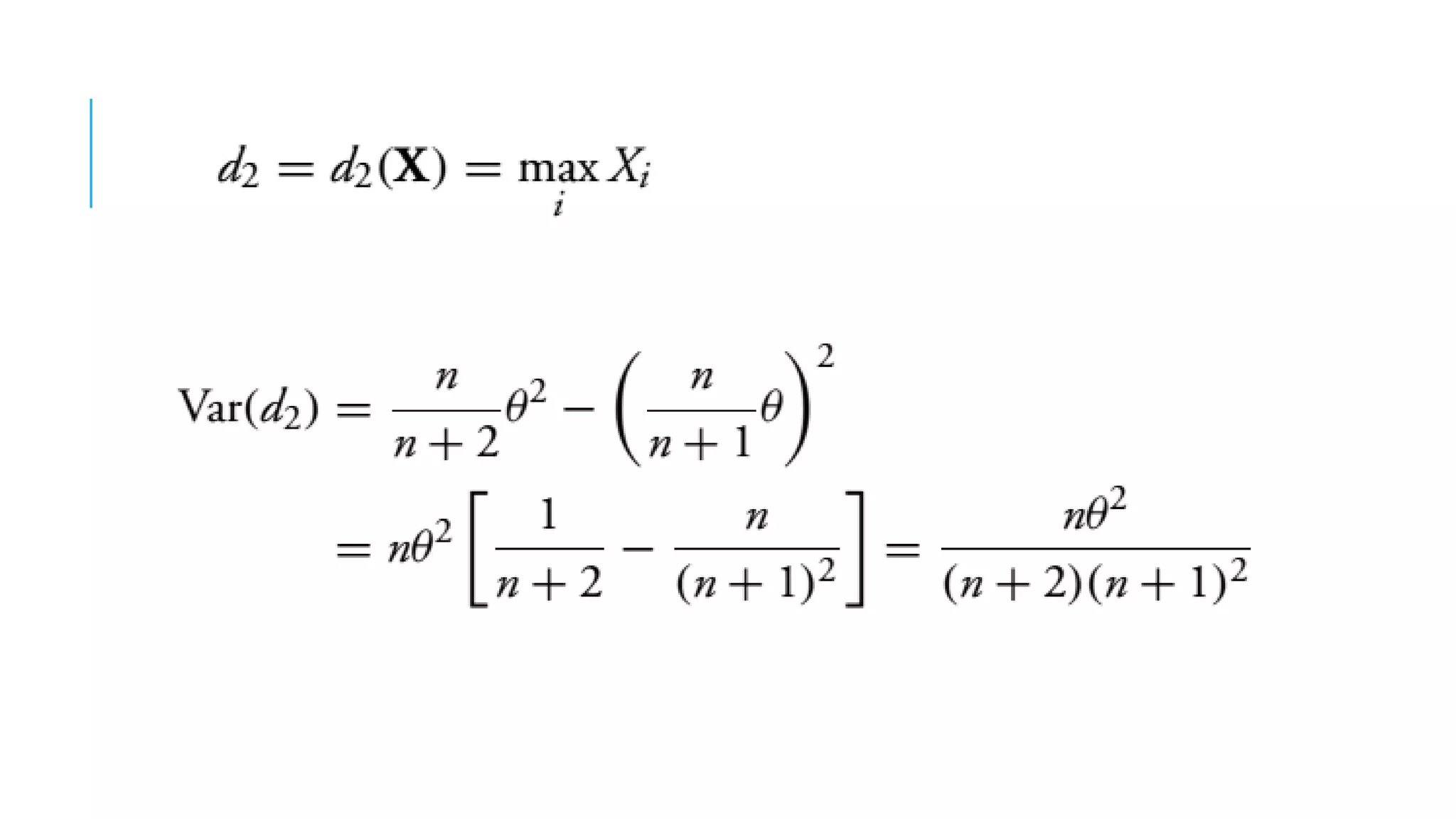

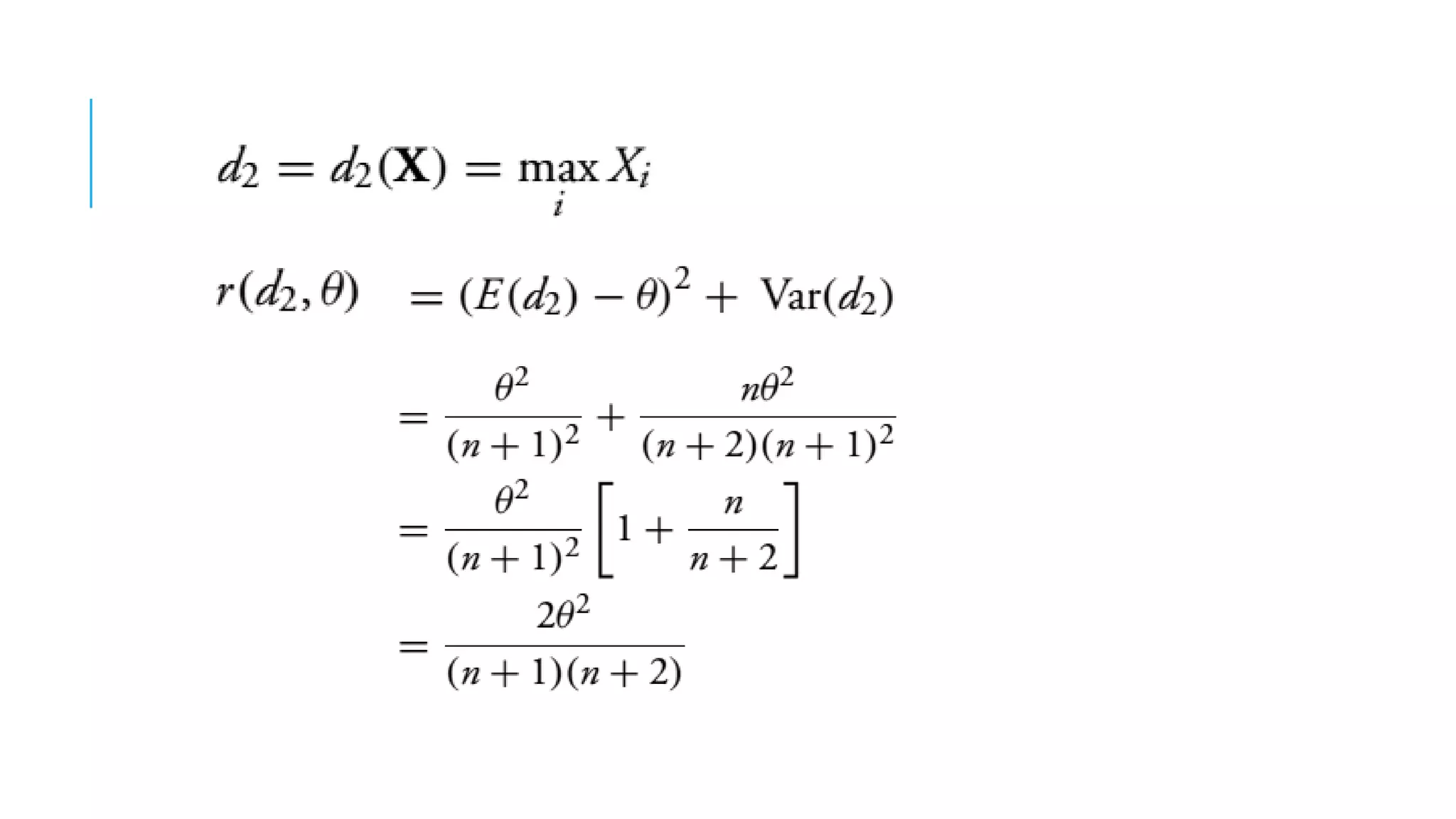

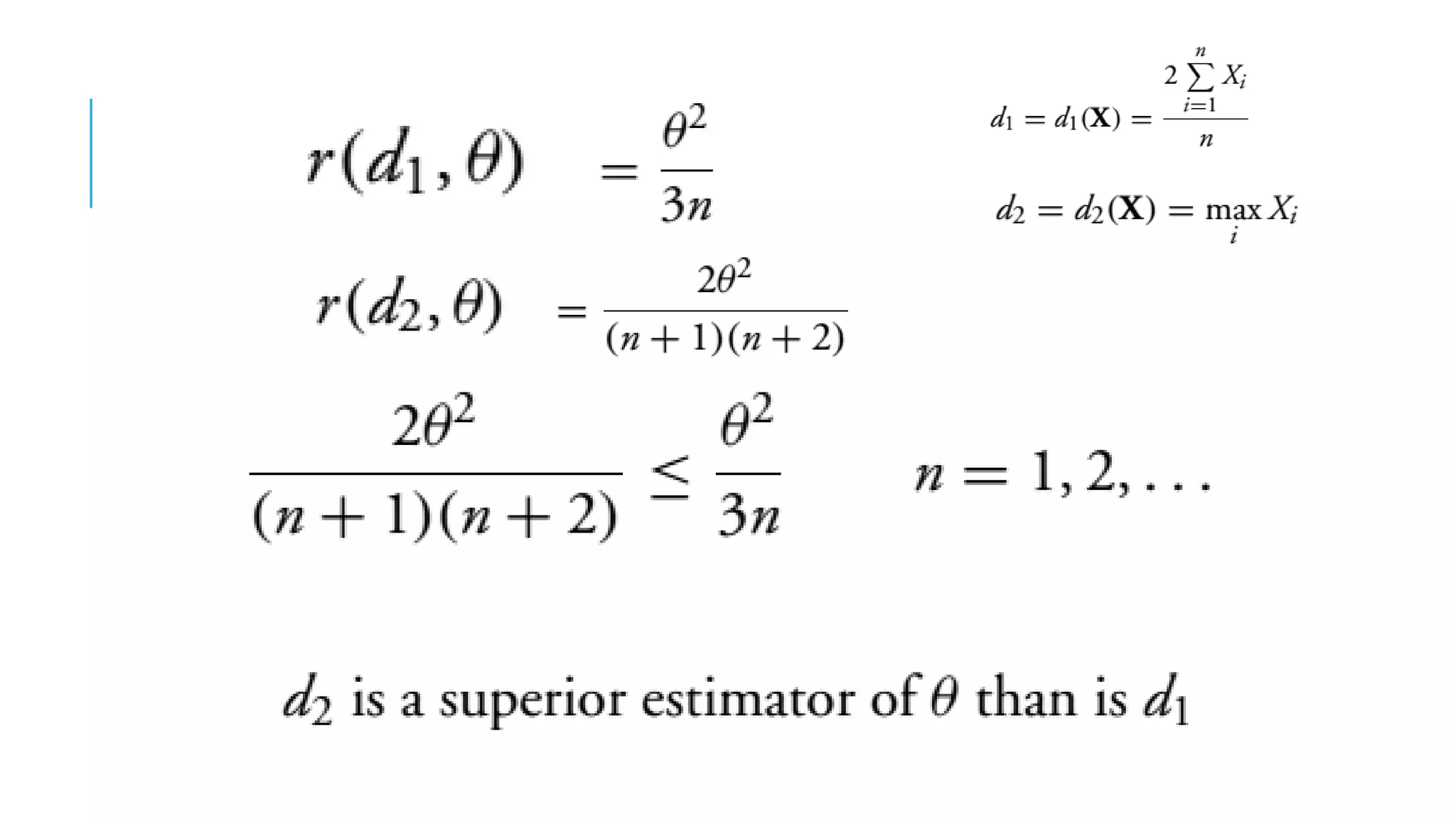

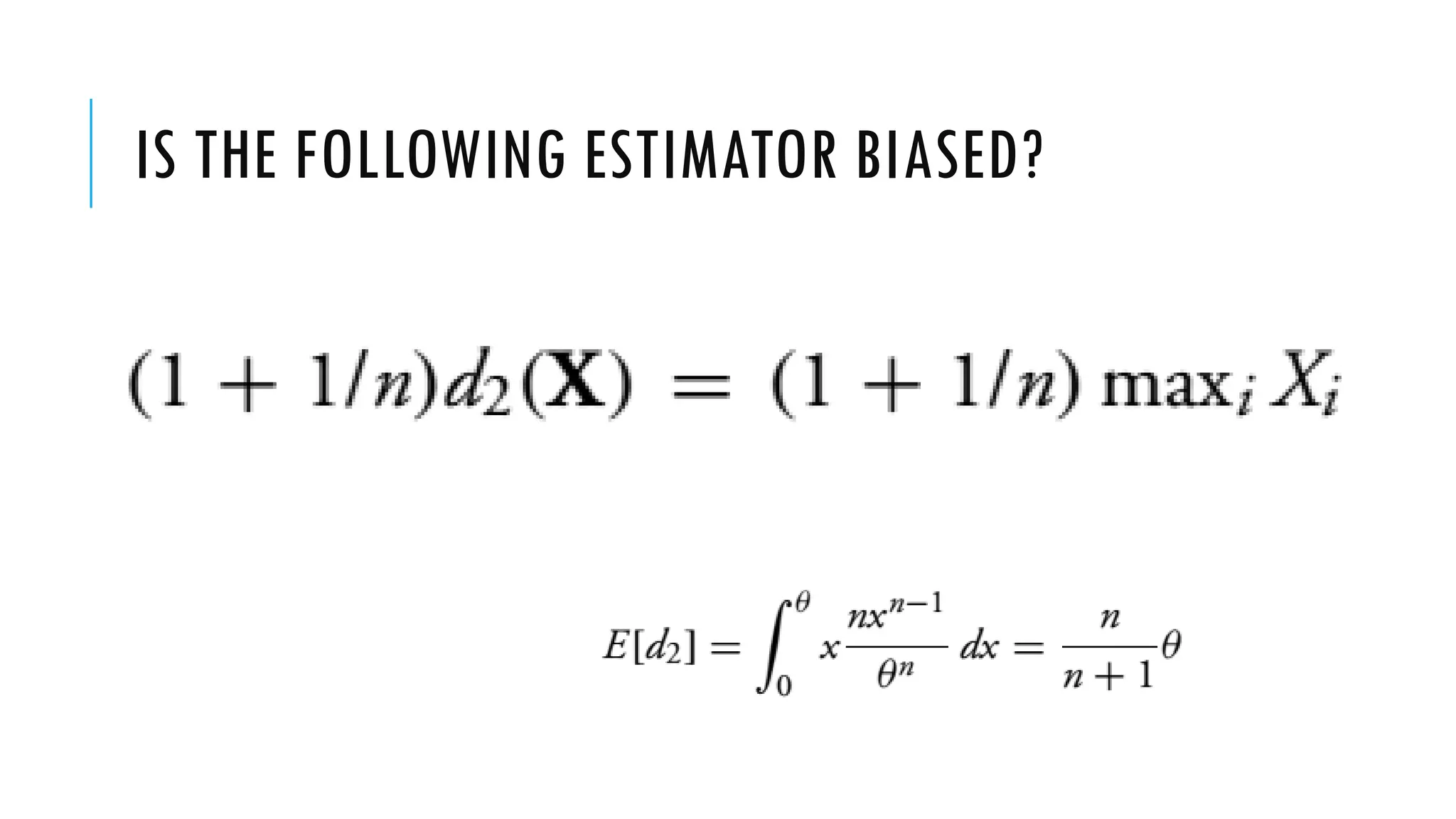

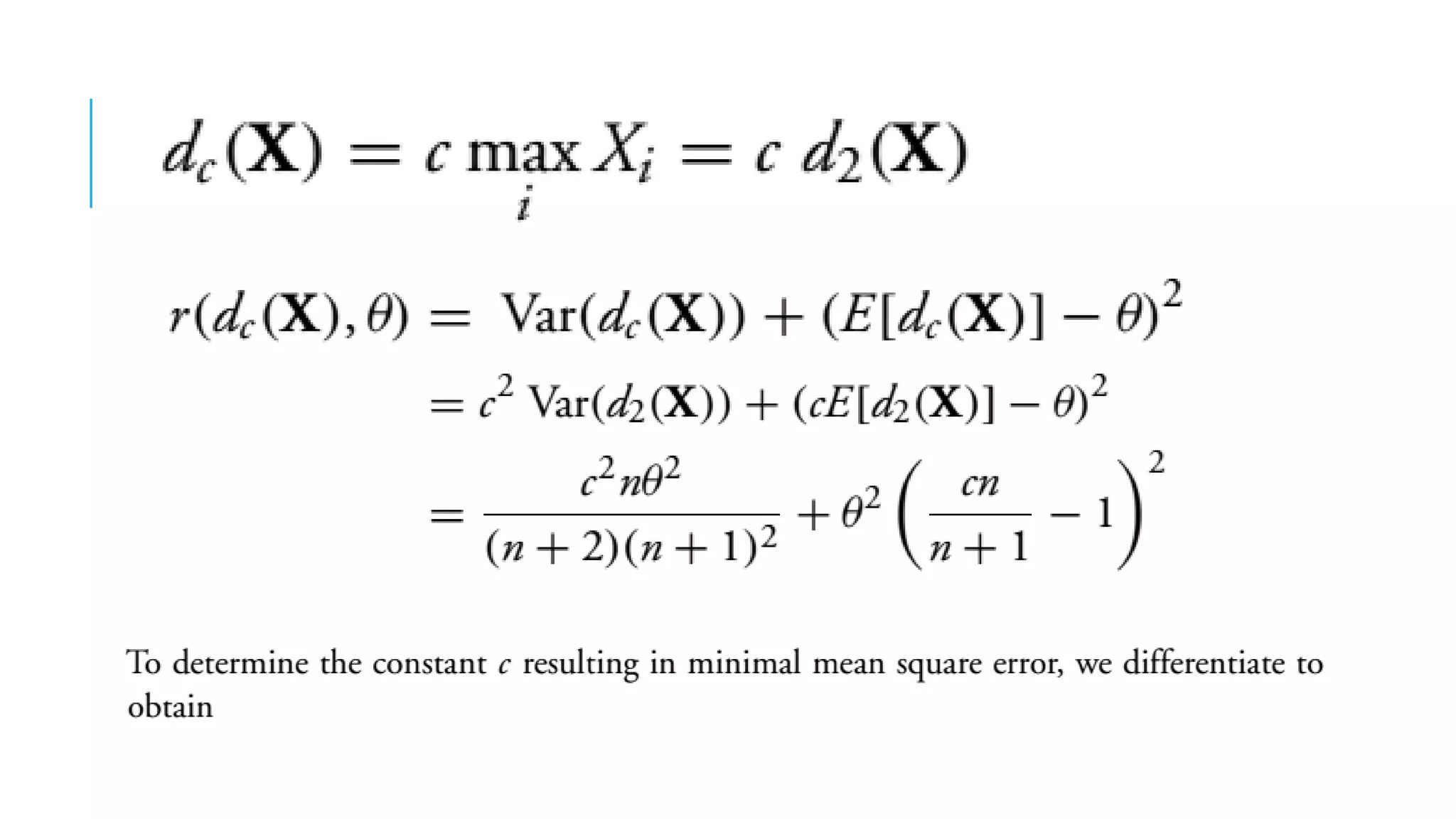

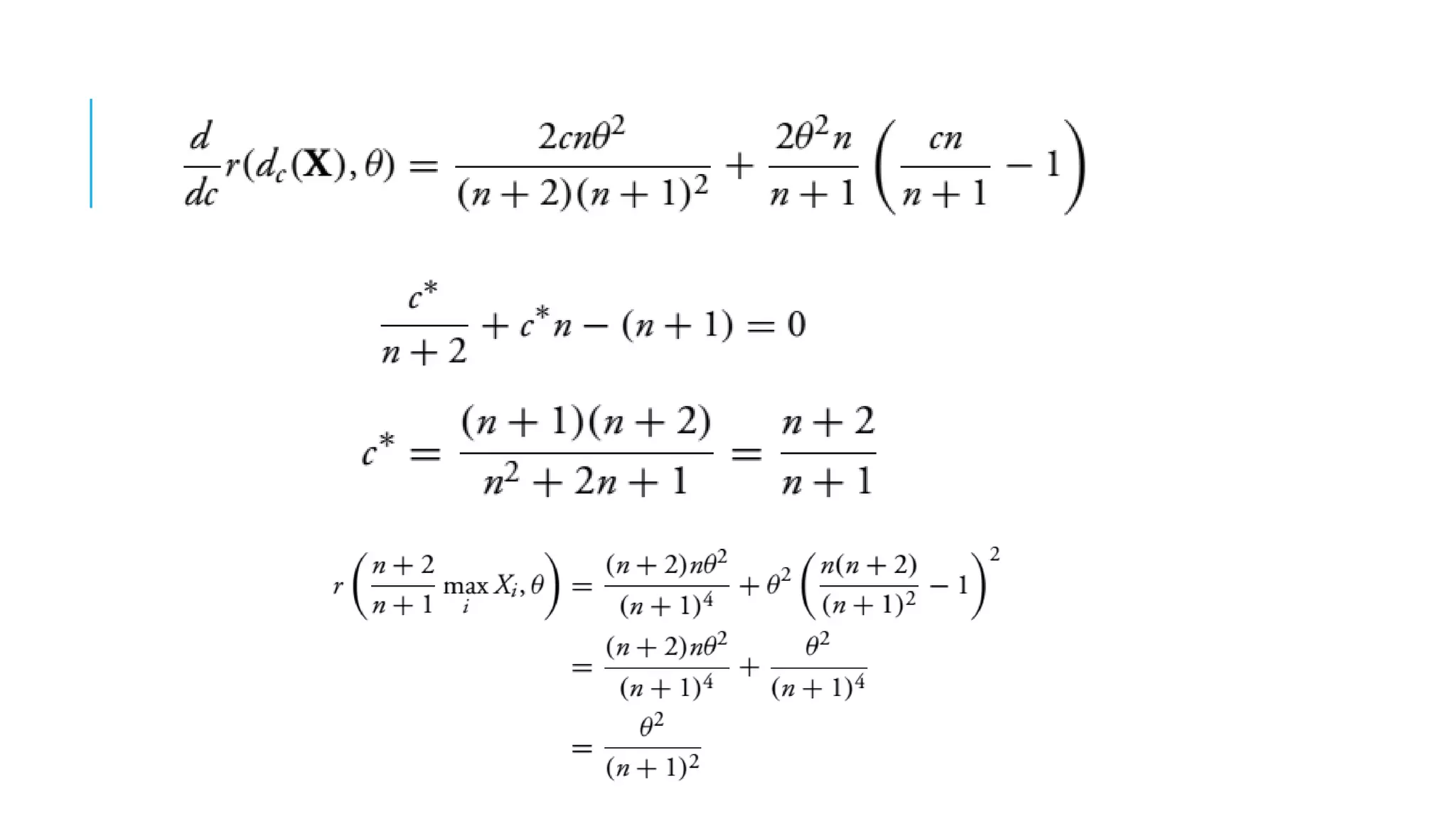

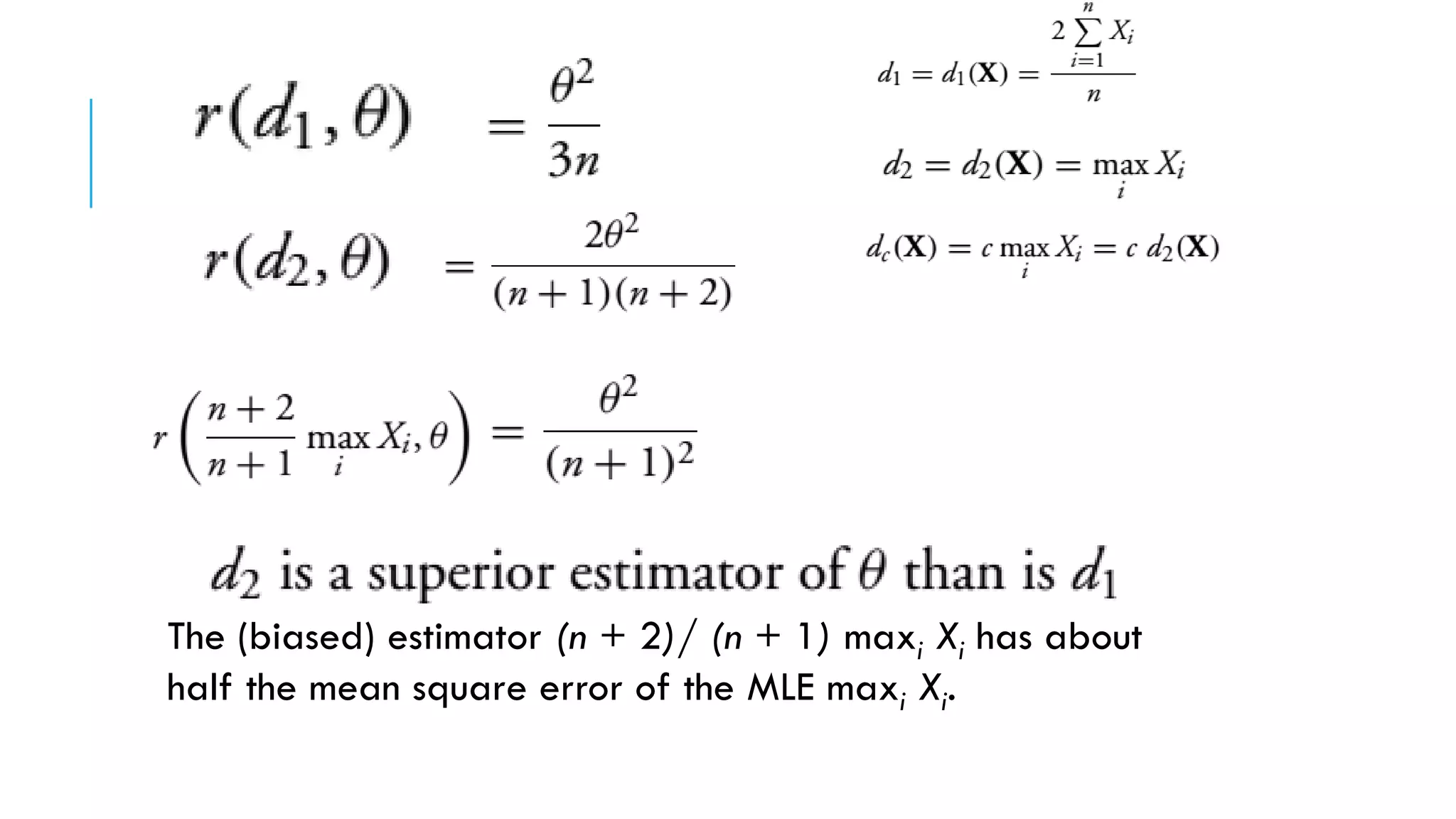

This document discusses how to evaluate point estimators. It uses the mean square error, the expected squared difference between an estimator and the parameter it estimates, as the measure of an estimator's worth. There is rarely a single estimator that minimizes the mean square error for every possible value of the parameter. Unbiased estimators, whose expected value equals the parameter, are therefore commonly used. The bias of an estimator is defined as its expected value minus the parameter. If independent unbiased estimators are combined in a weighted average whose weights sum to one, the result is again unbiased, and its variance is the sum of the individual variances, each multiplied by the square of its weight. For any estimator, the mean square error equals its variance plus the square of its bias. Examples illustrate how to evaluate bias and how to find the mean and variance of estimators.
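The decomposition "mean square error = variance + bias²" can be checked numerically. The sketch below is illustrative only and assumes a normal population with mean 0 and variance `theta`, a sample size of 10, and two estimators of `theta` chosen for the example: the sample variance with divisor n − 1 (unbiased) and with divisor n (biased). All function names are hypothetical. Because the empirical MSE, bias and variance are computed over the same simulated estimates, the identity holds up to floating-point rounding, not just approximately.

```python
import random


def sum_sq_dev(xs):
    """Sum of squared deviations of xs from their sample mean."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs)


def var_unbiased(xs):
    """Sample variance with divisor n - 1 (unbiased for the population variance)."""
    return sum_sq_dev(xs) / (len(xs) - 1)


def var_divide_by_n(xs):
    """Sample variance with divisor n (biased downward by theta / n)."""
    return sum_sq_dev(xs) / len(xs)


def simulate_estimates(estimator, theta, sample_size, trials, seed=0):
    """Apply `estimator` to `trials` samples drawn from N(0, theta), theta = variance."""
    rng = random.Random(seed)
    sigma = theta ** 0.5
    return [estimator([rng.gauss(0.0, sigma) for _ in range(sample_size)])
            for _ in range(trials)]


def mse_bias_variance(estimates, theta):
    """Empirical mean square error, bias and variance of estimates of theta."""
    n = len(estimates)
    mean_est = sum(estimates) / n
    bias = mean_est - theta
    variance = sum((d - mean_est) ** 2 for d in estimates) / n
    mse = sum((d - theta) ** 2 for d in estimates) / n
    return mse, bias, variance


if __name__ == "__main__":
    theta = 4.0
    for name, est in [("n - 1", var_unbiased), ("n", var_divide_by_n)]:
        estimates = simulate_estimates(est, theta, sample_size=10, trials=20000)
        mse, bias, variance = mse_bias_variance(estimates, theta)
        print(f"divisor {name}: bias={bias:+.3f} variance={variance:.3f} "
              f"mse={mse:.3f} variance+bias^2={variance + bias ** 2:.3f}")
```

With normal data the divisor-n estimator typically shows the smaller MSE despite its bias, which is consistent with the point that no single criterion such as unbiasedness guarantees the smallest mean square error.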
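The rule for combining independent unbiased estimators can also be sketched in code. This is a minimal illustration under stated assumptions: three independent estimators of the same parameter, each normally distributed around `theta` with assumed standard deviations 1, 2 and 3. The variance of the weighted average Σ wᵢ dᵢ is Σ wᵢ² Var(dᵢ). The `inverse_variance_weights` helper uses the standard result that weights proportional to 1/Var(dᵢ) minimize this variance; it is included for comparison and is not necessarily the form used in the examples above. All names are hypothetical.

```python
import random


def combined_variance(weights, variances):
    """Variance of sum(w_i * d_i) for independent estimators d_i."""
    return sum(w * w * v for w, v in zip(weights, variances))


def inverse_variance_weights(variances):
    """Weights proportional to 1 / variance, normalised to sum to 1."""
    inv = [1.0 / v for v in variances]
    total = sum(inv)
    return [x / total for x in inv]


def simulate_combination(theta, sds, weights, trials=50000, seed=1):
    """Monte Carlo check: each d_i ~ N(theta, sds[i]**2), drawn independently."""
    rng = random.Random(seed)
    values = []
    for _ in range(trials):
        d = [rng.gauss(theta, s) for s in sds]
        values.append(sum(w * x for w, x in zip(weights, d)))
    mean = sum(values) / trials
    var = sum((v - mean) ** 2 for v in values) / trials
    return mean, var


if __name__ == "__main__":
    theta = 10.0
    sds = [1.0, 2.0, 3.0]
    variances = [s * s for s in sds]
    candidates = [("equal", [1 / 3] * 3),
                  ("inverse-variance", inverse_variance_weights(variances))]
    for label, w in candidates:
        mean, var = simulate_combination(theta, sds, w)
        print(f"{label}: weights={[round(x, 3) for x in w]} "
              f"theory var={combined_variance(w, variances):.4f} "
              f"simulated mean={mean:.3f} simulated var={var:.4f}")
```

Since the weights sum to one in both cases, the simulated mean stays close to `theta`; only the variance depends on how the weights are chosen.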