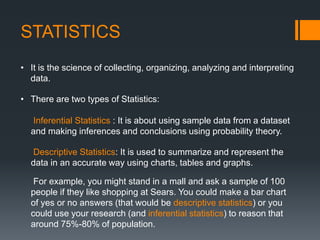

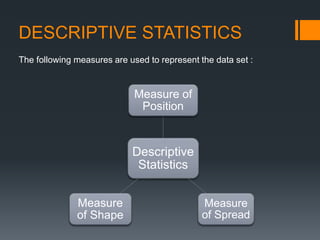

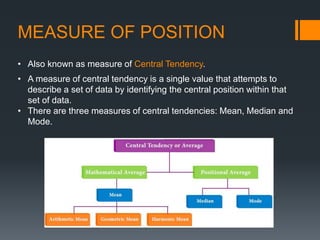

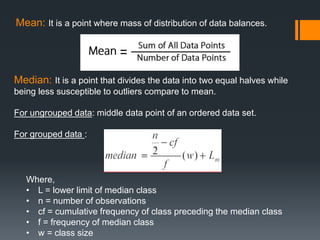

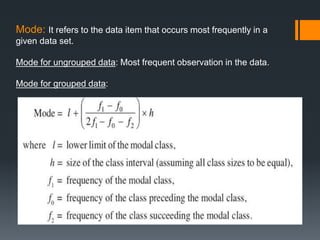

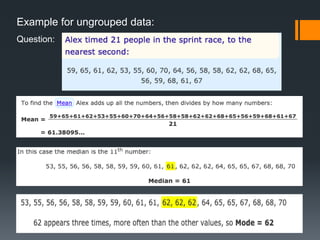

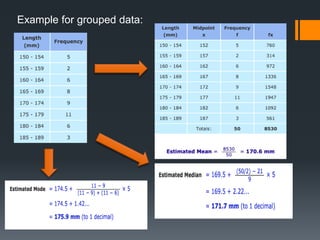

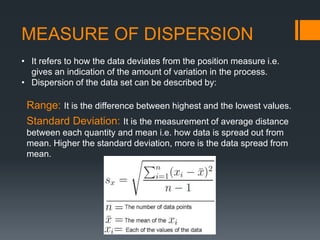

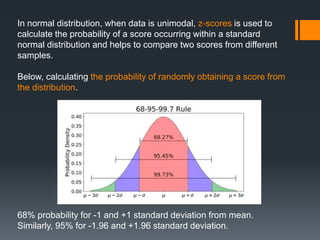

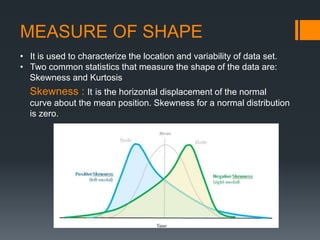

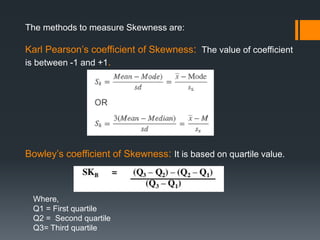

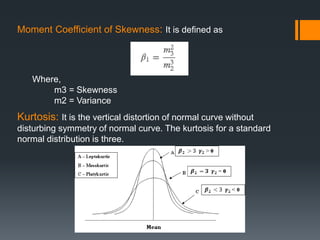

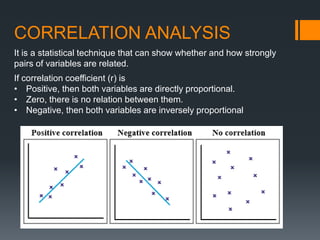

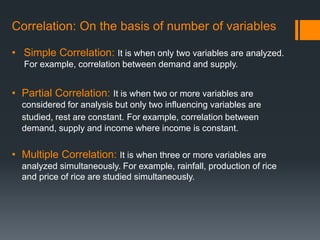

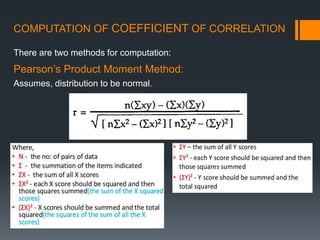

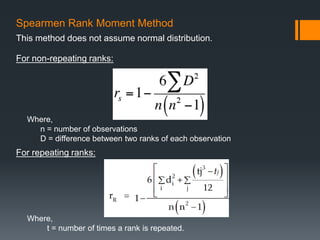

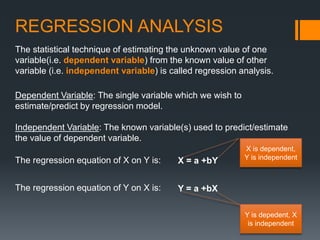

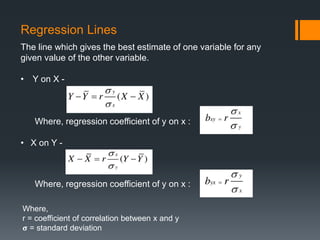

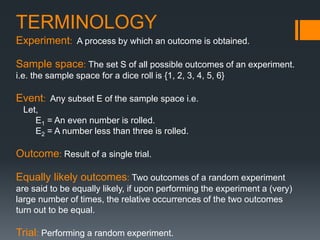

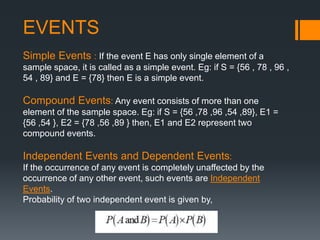

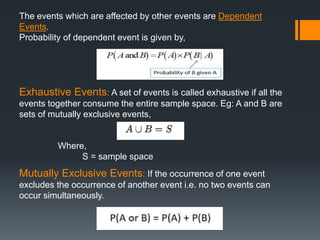

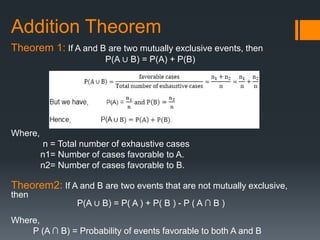

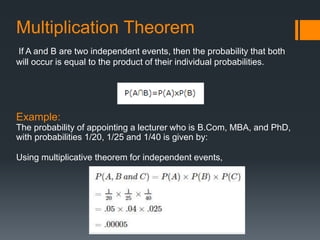

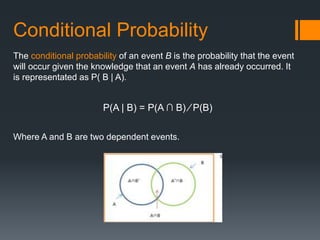

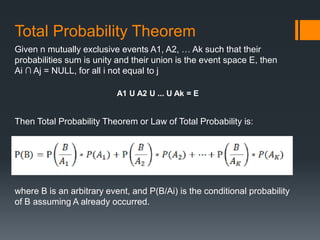

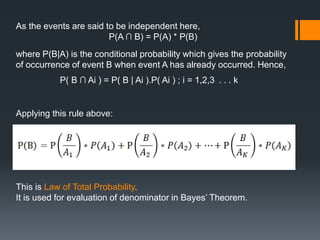

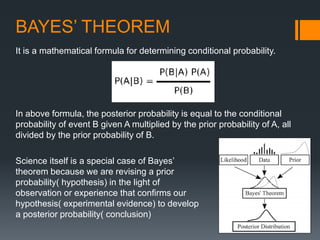

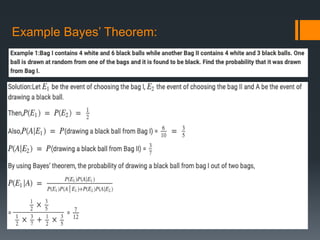

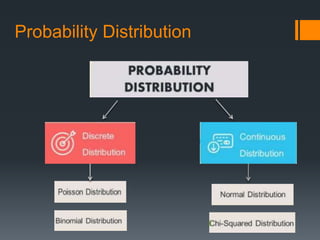

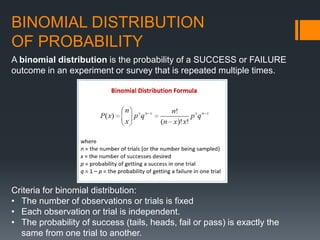

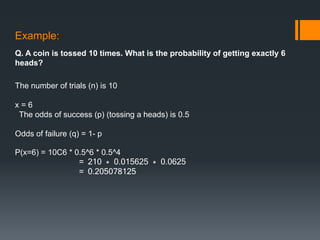

This document provides an introduction to statistics and probability. It discusses key concepts in descriptive statistics, including measures of central tendency (mean, median, mode), measures of dispersion (range, standard deviation), and measures of shape (skewness, kurtosis). It also covers correlation analysis, regression analysis, and foundational probability topics such as sample spaces, events, and independent and dependent events, along with rules and theorems such as the addition rule, the multiplication rule, and the theorem of total probability.
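The measures and rules listed above can be made concrete with a short sketch. It uses only the Python standard library (`statistics`, `fractions`, `math`; `multimode` needs Python 3.8 or later). The paired data `x`/`y`, the fair-die events `A` and `B`, and the helper names `shape`, `pearson_r`, `least_squares`, `P` and `P_given` are all hypothetical and chosen only for illustration. The conventions are also assumptions and may differ from the slides: the standard deviation is the sample version (dividing by n - 1), while skewness and kurtosis are the moment-based g1 and excess kurtosis g2 (dividing by n).

```python
import math
from fractions import Fraction
from statistics import mean, median, multimode, stdev

# Hypothetical paired sample, chosen only for illustration.
x = [2, 4, 4, 4, 5, 5, 7, 9]
y = [3, 7, 8, 10, 10, 12, 15, 19]


def shape(data):
    """Moment-based skewness g1 and excess kurtosis g2 (moments divide by n)."""
    n = len(data)
    m = sum(data) / n
    m2 = sum((v - m) ** 2 for v in data) / n
    m3 = sum((v - m) ** 3 for v in data) / n
    m4 = sum((v - m) ** 4 for v in data) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def pearson_r(a, b):
    """Pearson correlation coefficient r = Sxy / sqrt(Sxx * Syy)."""
    ma, mb = mean(a), mean(b)
    sxy = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    sxx = sum((u - ma) ** 2 for u in a)
    syy = sum((v - mb) ** 2 for v in b)
    return sxy / math.sqrt(sxx * syy)


def least_squares(a, b):
    """Intercept b0 and slope b1 of the least-squares line b = b0 + b1 * a."""
    ma, mb = mean(a), mean(b)
    sxy = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    sxx = sum((u - ma) ** 2 for u in a)
    b1 = sxy / sxx
    return mb - b1 * ma, b1


# Probability on a hypothetical example: one roll of a fair six-sided die.
omega = set(range(1, 7))  # sample space
A = {2, 4, 6}             # event "even number"
B = {4, 5, 6}             # event "greater than 3"
Bc = omega - B            # complement of B


def P(event):
    """Classical probability: favorable outcomes / equally likely outcomes."""
    return Fraction(len(event & omega), len(omega))


def P_given(event, given):
    """Conditional probability P(event | given) = P(event and given) / P(given)."""
    return P(event & given) / P(given)


# Addition rule: P(A or B) = P(A) + P(B) - P(A and B)
assert P(A | B) == P(A) + P(B) - P(A & B)
# Multiplication rule: P(A and B) = P(A) * P(B | A)
assert P(A & B) == P(A) * P_given(B, A)
# Total probability over the partition {B, B^c}
assert P(A) == P_given(A, B) * P(B) + P_given(A, Bc) * P(Bc)
# A and B are dependent here, because P(A and B) != P(A) * P(B)
assert P(A & B) != P(A) * P(B)

if __name__ == "__main__":
    print("mean, median, mode:", mean(x), median(x), multimode(x))
    print("range, sample std dev:", max(x) - min(x), stdev(x))
    print("skewness g1, excess kurtosis g2:", shape(x))
    print("Pearson r:", pearson_r(x, y))
    print("intercept, slope:", least_squares(x, y))
```

Exact `Fraction` arithmetic is used for the probability part so that the addition, multiplication and total-probability identities can be checked with `==` rather than with a floating-point tolerance.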