This document provides an overview of statistical inference concepts, including:

1. Best unbiased estimators, which have the smallest variance (equivalently, the smallest mean squared error) among all unbiased estimators of a parameter, uniformly in θ. The best unbiased estimator, if it exists, must be a function of a sufficient statistic.

2. Sufficiency and the Rao-Blackwell theorem, which states that conditioning an unbiased estimator on a sufficient statistic yields an unbiased estimator whose variance is never larger, for every θ.

3. The Cramér-Rao lower bound (CRLB), a lower bound on the variance of unbiased estimators. An example shows that the bound can fail when its regularity conditions do not hold.

4. Worked examples that find minimum variance unbiased estimators, maximum likelihood estimators, and confidence intervals for various distributions.

![

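But since W has uniformly minimum variance, we must have $\mathrm{Var}_\theta(T) \ge \mathrm{Var}_\theta(W)$. Therefore $\mathrm{Var}_\theta(T) = \mathrm{Var}_\theta(W)$. This implies

$$\mathrm{Cov}_\theta(W, W') = \sqrt{\mathrm{Var}_\theta W \cdot \mathrm{Var}_\theta W'}.$$

Equality in the Cauchy-Schwarz inequality requires that

$$W' = aW + b. \qquad (2)$$

Because the covariance equals the positive square root, $a > 0$; equal variances then give $a = 1$, and equal expectations give $b = 0$, so $W' = W$.

This completes the proof.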

2 SUFFICIENCY AND UNBIASED ESTIMATION

Recall that T is a sufficient statistic for θ if the conditional distribution of the sample $\mathbf{X} = (X_1, \dots, X_n)$ given $T = t$ does not depend on θ. Informally, T summarizes all the information about θ contained in the sample. This notion is elegantly illustrated by the Rao-Blackwell theorem.
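To make the definition concrete, here is a small sketch (our own illustration, not taken from these notes) for $X_1, \dots, X_n$ iid Bernoulli(p) with $T = \sum X_i$. It enumerates the conditional distribution of the sample given $T = t$ exactly, using rational arithmetic; the helper name `conditional_given_sum` is hypothetical. Computing it for two different values of p should give the same answer, as sufficiency requires.

```python
from fractions import Fraction
from itertools import product

def conditional_given_sum(n, p, t):
    """Exact P(X = x | T = t) for X1..Xn iid Bernoulli(p) and T = sum(X)."""
    joint = {}
    for x in product((0, 1), repeat=n):
        if sum(x) == t:
            joint[x] = p ** t * (1 - p) ** (n - t)   # P(X = x); identical for every such x
    total = sum(joint.values())                     # P(T = t)
    return {x: prob / total for x, prob in joint.items()}

a = conditional_given_sum(4, Fraction(1, 5), 2)
b = conditional_given_sum(4, Fraction(2, 3), 2)
print(a == b, set(a.values()))   # True {Fraction(1, 6)}
```

Each of the $\binom{4}{2} = 6$ arrangements with two successes receives conditional probability 1/6, whatever the value of p.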

Theorem 2 (Rao-Blackwell) Let W be an unbiased estimator of θ and let T be a sufficient statistic for θ. Define $\varphi(T) = E(W \mid T)$. Then $\varphi(T)$ is an unbiased estimator of θ and $\mathrm{Var}_\theta\,\varphi(T) \le \mathrm{Var}_\theta W$ for all θ. That is, $\varphi(T)$ is a uniformly better estimator than W.

Proof First notice that, because T is sufficient, the conditional distribution of $W(X_1, \dots, X_n)$ given $T = t$ does not depend on θ. Hence $\varphi(t) = E(W \mid T = t)$ is not a function of θ, and $\varphi(T)$ is indeed an estimator.

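Now,

$$E_\theta\,\varphi(T) = E_\theta\big[E(W \mid T)\big] = E_\theta W = \theta.$$

Also, by the law of total variance,

$$\begin{aligned}
\mathrm{Var}_\theta(W) &= E_\theta\big[\mathrm{Var}(W \mid T)\big] + \mathrm{Var}_\theta\big(E(W \mid T)\big) \\
&\ge \mathrm{Var}_\theta\big(E(W \mid T)\big) \\
&= \mathrm{Var}_\theta\,\varphi(T).
\end{aligned}$$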

This completes the proof.
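A quick Monte Carlo check of the theorem, under assumptions of our own choosing: for an iid Poisson(λ) sample, $W = \mathbf{1}\{X_1 = 0\}$ is unbiased for $e^{-\lambda}$ and $T = \sum X_i$ is sufficient. Given $T = t$, $X_1$ is Binomial$(t, 1/n)$, so $\varphi(T) = E(W \mid T) = (1 - 1/n)^T$. The sketch below (the function name `rao_blackwell_demo` and all parameter values are illustrative) estimates the mean and variance of both estimators with NumPy.

```python
import numpy as np

def rao_blackwell_demo(lam=2.0, n=10, reps=200_000, seed=0):
    """Compare W = 1{X1 = 0} with phi(T) = (1 - 1/n)**T, where T is the sample sum,
    as estimators of exp(-lam) from an iid Poisson(lam) sample of size n."""
    rng = np.random.default_rng(seed)
    x = rng.poisson(lam, size=(reps, n))   # reps independent samples, one per row
    w = (x[:, 0] == 0).astype(float)       # crude unbiased estimator: uses X1 only
    t = x.sum(axis=1)                      # sufficient statistic T
    phi = (1.0 - 1.0 / n) ** t             # E(W | T) in closed form
    return w.mean(), phi.mean(), w.var(), phi.var()

mean_w, mean_phi, var_w, var_phi = rao_blackwell_demo()
print(f"target exp(-2) = {np.exp(-2.0):.4f}")
print(f"mean W = {mean_w:.4f}, mean phi(T) = {mean_phi:.4f}")
print(f"Var W  = {var_w:.5f}, Var phi(T) = {var_phi:.5f}")
```

Both sample means should sit close to $e^{-2} \approx 0.135$. For these settings the theoretical variances are about 0.117 for W and 0.004 for $\varphi(T)$, so conditioning on T removes most of the variability.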

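The Rao-Blackwell theorem tells us that for any unbiased estimator W we can always find $\varphi(T)$, an estimator based on a sufficient statistic, that is uniformly at least as good as W; the variance inequality is an equality only when W is already (almost surely) a function of T.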

Corollary The best unbiased estimator for θ, if one exists, must be a function of a sufficient statistic.

Intuitively, since the best unbiased estimator has the highest precision, it must have captured all the information available in the sample.

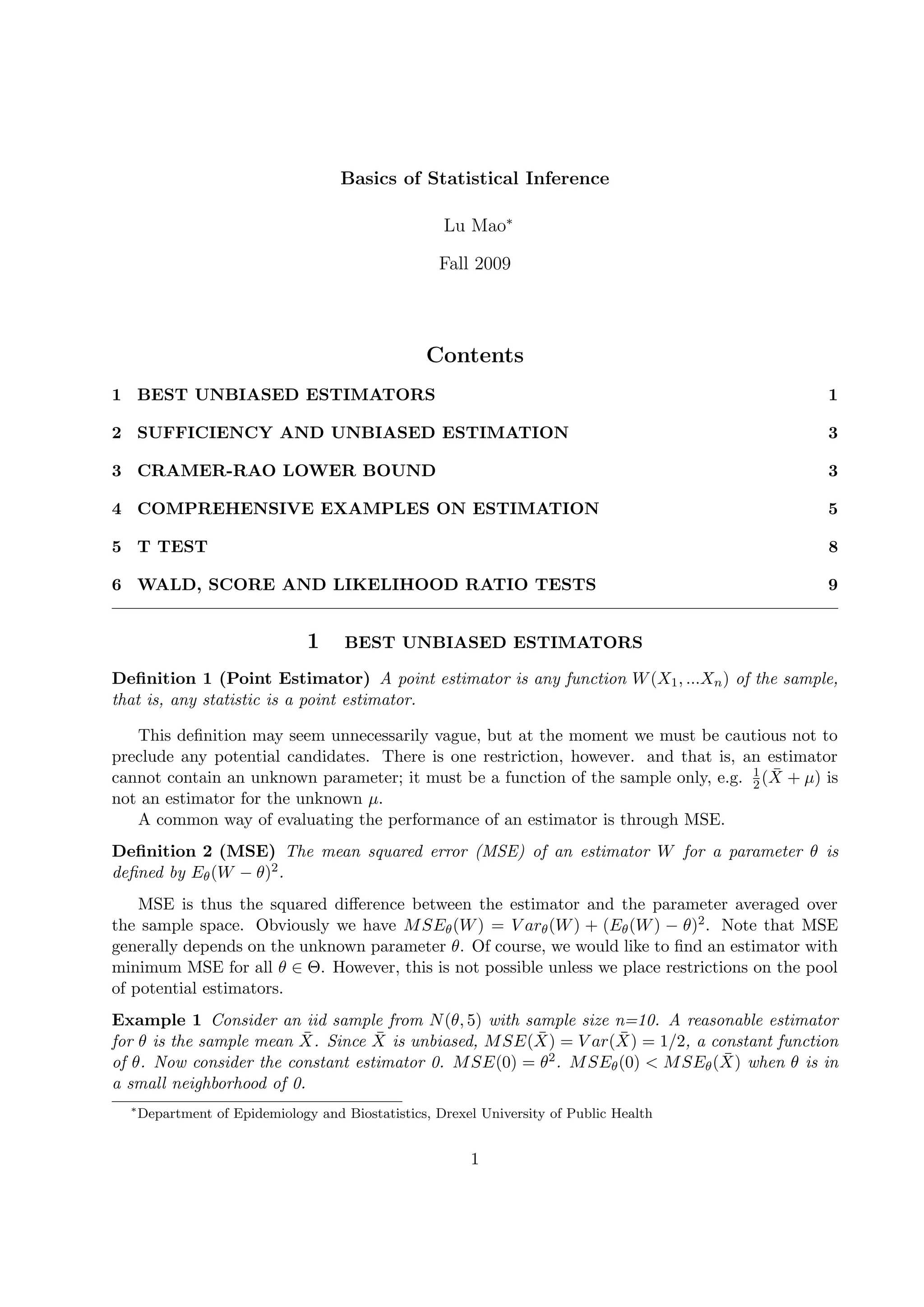

3 CRAMÉR-RAO LOWER BOUND

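Theorem 3 (Cramér-Rao Inequality) Let $X_1, \dots, X_n$ be an iid sample with density $f(x \mid \theta)$, and let $W(X_1, \dots, X_n)$ be an estimator such that $\mathrm{Var}_\theta(W) < \infty$ and

$$\frac{d}{d\theta} E_\theta W = \int_{\mathcal{X}} \frac{\partial}{\partial\theta}\big[W(\mathbf{x})\, f(\mathbf{x} \mid \theta)\big]\, d\mathbf{x}, \qquad (3)$$

where $f(\mathbf{x} \mid \theta) = \prod_{i=1}^n f(x_i \mid \theta)$ is the joint density on the sample space $\mathcal{X}$. Then

$$\mathrm{Var}_\theta(W) \ge \frac{\left(\frac{d}{d\theta} E_\theta W(\mathbf{X})\right)^2}{n I(\theta)},$$

where $I(\theta) = E_\theta\!\left[\left(\frac{\partial}{\partial\theta} \log f(X \mid \theta)\right)^2\right]$ is the Fisher information of a single observation.](https://image.slidesharecdn.com/4d4927e1-e0bc-470a-98b2-a3669cf116c4-151112002951-lva1-app6892/85/Problem_Session_Notes-3-320.jpg)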
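The Fisher information can be approximated by averaging the squared score over simulated draws. The sketch below assumes a model of our own choosing, $X \sim N(\theta, \sigma^2)$ with σ known, whose score is $(x - \theta)/\sigma^2$ and whose exact information is $I(\theta) = 1/\sigma^2$; the helper `fisher_info_mc` is hypothetical. For an unbiased estimator of θ the numerator of the bound is 1, so the bound becomes $1/(nI(\theta)) = \sigma^2/n$.

```python
import numpy as np

def fisher_info_mc(score, sample, reps=500_000, seed=0):
    """Estimate I(theta) = E[(d/dtheta log f(X|theta))^2] for one observation
    by averaging the squared score over `reps` simulated draws."""
    rng = np.random.default_rng(seed)
    x = sample(rng, reps)
    return float(np.mean(score(x) ** 2))

# Illustrative model (an assumption): X ~ N(theta, sigma^2) with sigma known.
theta, sigma, n = 1.5, 2.0, 25

def normal_score(x):
    return (x - theta) / sigma**2      # d/dtheta log f(x | theta)

def normal_sample(rng, size):
    return rng.normal(theta, sigma, size)

info = fisher_info_mc(normal_score, normal_sample)
crlb = 1.0 / (n * info)                # bound for unbiased estimators of theta
print(f"I(theta) ~ {info:.4f} (exact {1 / sigma**2:.4f}); "
      f"CRLB ~ {crlb:.4f} (exact {sigma**2 / n:.4f})")
```

Passing the score and the sampler as functions keeps the helper reusable for other one-parameter models, at the cost of requiring the score to be derived by hand.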

![

Corollary In addition to the conditions specified in Theorem 3, if W is an unbiased estimator of θ, then

$$\mathrm{Var}_\theta(W) \ge \frac{1}{n I(\theta)}.$$

Condition (3) involves interchanging differentiation and integration. A common situation in which (3) fails to hold is when the support of the density $f(x \mid \theta)$ depends on θ.

Example 3 (A counterexample to the CRLB) Let $X_1, \dots, X_n$ be iid Uniform$[0, \theta]$. Calculate the “Fisher information” $I(\theta)$ and find an unbiased estimator W of θ such that $\mathrm{Var}_\theta W < \frac{1}{n I(\theta)}$.

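Solution Since $\log f(X \mid \theta) = \log \frac{1}{\theta} = -\log\theta$ for $0 \le X \le \theta$, we have

$$I(\theta) = E_\theta\!\left[\left(\frac{\partial}{\partial\theta}\log f(X \mid \theta)\right)^2\right] = E_\theta\!\left[\left(\frac{\partial}{\partial\theta}(-\log\theta)\right)^2\right] = E_\theta\!\left[\frac{1}{\theta^2}\right] = \frac{1}{\theta^2}.$$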

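So $\frac{1}{n I(\theta)} = \frac{\theta^2}{n}$. Now, since $E_\theta X_i = \theta/2$, we have $E_\theta \bar X = \theta/2$, so $W = 2\bar X$ is an unbiased estimator of θ. But

$$\mathrm{Var}_\theta W = 4\,\mathrm{Var}_\theta \bar X = \frac{4}{n}\,\mathrm{Var}_\theta X_i = \frac{4}{n}\cdot\frac{\theta^2}{12} = \frac{\theta^2}{3n} < \frac{\theta^2}{n}.$$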
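A short simulation can confirm Example 3 numerically. The values θ = 3, n = 10 and the replication count are arbitrary choices for illustration; the sketch checks that $W = 2\bar X$ is centred at θ and that its variance is near $\theta^2/(3n)$, well below the naive bound $\theta^2/n$.

```python
import numpy as np

theta, n, reps = 3.0, 10, 200_000            # illustrative values
rng = np.random.default_rng(1)
x = rng.uniform(0.0, theta, size=(reps, n))   # reps samples of size n from Uniform[0, theta]
w = 2.0 * x.mean(axis=1)                      # W = 2 * Xbar, unbiased for theta

naive_crlb = theta**2 / n                     # 1/(n I(theta)) with I(theta) = 1/theta^2
print(f"mean W = {w.mean():.4f} (theta = {theta})")
print(f"Var W  = {w.var():.4f} (theory theta^2/(3n) = {theta**2 / (3 * n):.4f})")
print(f"'CRLB' = {naive_crlb:.4f}")
```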

Fortunately, condition (3) is satisfied for “reasonable” estimators in the exponential family. Further, the identity

$$I(\theta) = -E_\theta\!\left[\frac{\partial^2}{\partial\theta^2}\log f(X \mid \theta)\right] \qquad (4)$$

holds for the exponential family.
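As an illustration of identity (4), the sketch below uses the Poisson(λ) family, an exponential family, with λ = 2.5 chosen arbitrarily. Since $\log f(x \mid \lambda) = x\log\lambda - \lambda - \log x!$, the score is $x/\lambda - 1$ and the second derivative is $-x/\lambda^2$; both Monte Carlo averages should approach $I(\lambda) = 1/\lambda = 0.4$.

```python
import numpy as np

lam, reps = 2.5, 1_000_000                  # illustrative values
rng = np.random.default_rng(2)
x = rng.poisson(lam, size=reps)

# log f(x | lam) = x*log(lam) - lam - log(x!)
score = x / lam - 1.0                       # first derivative in lam
second = -x / lam**2                        # second derivative in lam

info_score = np.mean(score**2)              # E[(d/dlam log f)^2]
info_hess = -np.mean(second)                # -E[d^2/dlam^2 log f], as in (4)
print(f"{info_score:.4f} {info_hess:.4f} exact {1 / lam:.4f}")
```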

In light of the preceding discussion, if condition (3) holds and the variance of an unbiased estimator attains the CRLB, then it is the best unbiased estimator.

Example 4 Show that $\bar X$ is the best unbiased estimator of λ for a Poisson(λ) random sample.](https://image.slidesharecdn.com/4d4927e1-e0bc-470a-98b2-a3669cf116c4-151112002951-lva1-app6892/85/Problem_Session_Notes-4-320.jpg)
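For a Poisson sample, $E\bar X = \lambda$ and $\mathrm{Var}\,\bar X = \lambda/n$, while $I(\lambda) = 1/\lambda$ gives a CRLB of $\lambda/n$, so $\bar X$ should attain the bound. The sketch below checks this by simulation for arbitrarily chosen λ = 4 and n = 20; it is a numerical sanity check, not a proof.

```python
import numpy as np

lam, n, reps = 4.0, 20, 200_000             # illustrative values
rng = np.random.default_rng(3)
xbar = rng.poisson(lam, size=(reps, n)).mean(axis=1)   # one sample mean per replication

crlb = lam / n                              # 1/(n I(lam)) with I(lam) = 1/lam
print(f"mean Xbar = {xbar.mean():.4f}, Var Xbar = {xbar.var():.5f}, CRLB = {crlb:.5f}")
```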

![

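The test statistic is

$$Z_S = \frac{S_\theta(\mathbf{X})}{\sqrt{I(\theta)}}.$$

It can be shown that under $H_0: \theta = \theta_0$,

$$\frac{S_{\theta_0}(\mathbf{X})}{\sqrt{I(\theta_0)}} \to N(0, 1)$$

in distribution. (Here $I(\theta)$ is the Fisher information of the whole sample, that is, the variance of the score $S_\theta(\mathbf{X})$.)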

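Therefore a level α score test rejects $H_0: \theta = \theta_0$ when

$$\frac{S_{\theta_0}^2(\mathbf{X})}{I(\theta_0)} > \chi^2_1(\alpha),$$

where $\chi^2_1(\alpha)$ is the upper α quantile of the chi-squared distribution with one degree of freedom.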
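A minimal sketch of the score test for one concrete model, assuming an iid Poisson(λ) sample; the model, the function name `poisson_score_test` and the data are illustrative choices, not from the notes. The full-sample score is $S_\lambda(\mathbf{x}) = \sum x_i/\lambda - n$ and its variance, the full-sample information, is $n/\lambda$. The critical value $\chi^2_1(\alpha)$ is computed as the square of the standard normal upper α/2 quantile, which avoids a SciPy dependency.

```python
from statistics import NormalDist

def poisson_score_test(x, lam0, alpha=0.05):
    """Score test of H0: lam = lam0 for an iid Poisson(lam) sample x.

    Returns (statistic, critical value, reject?)."""
    n = len(x)
    score = sum(x) / lam0 - n          # S(lam0): derivative of the log-likelihood at lam0
    info = n / lam0                    # full-sample Fisher information at lam0
    stat = score**2 / info             # S^2 / I, asymptotically chi^2_1 under H0
    crit = NormalDist().inv_cdf(1 - alpha / 2) ** 2   # chi^2_1(alpha) = z_{alpha/2}^2
    return stat, crit, stat > crit

data = [3, 5, 2, 4, 6, 3, 4, 5]
print(poisson_score_test(data, lam0=2.0))
print(poisson_score_test(data, lam0=4.0))
```

With this made-up data (sum 32, n = 8) and $\lambda_0 = 2$, the score is 8, the information is 4 and the statistic is 16, which exceeds $\chi^2_1(0.05) \approx 3.841$, so $H_0$ is rejected; with $\lambda_0 = 4$ the score is exactly zero and $H_0$ is retained.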

](https://image.slidesharecdn.com/4d4927e1-e0bc-470a-98b2-a3669cf116c4-151112002951-lva1-app6892/85/Problem_Session_Notes-11-320.jpg)