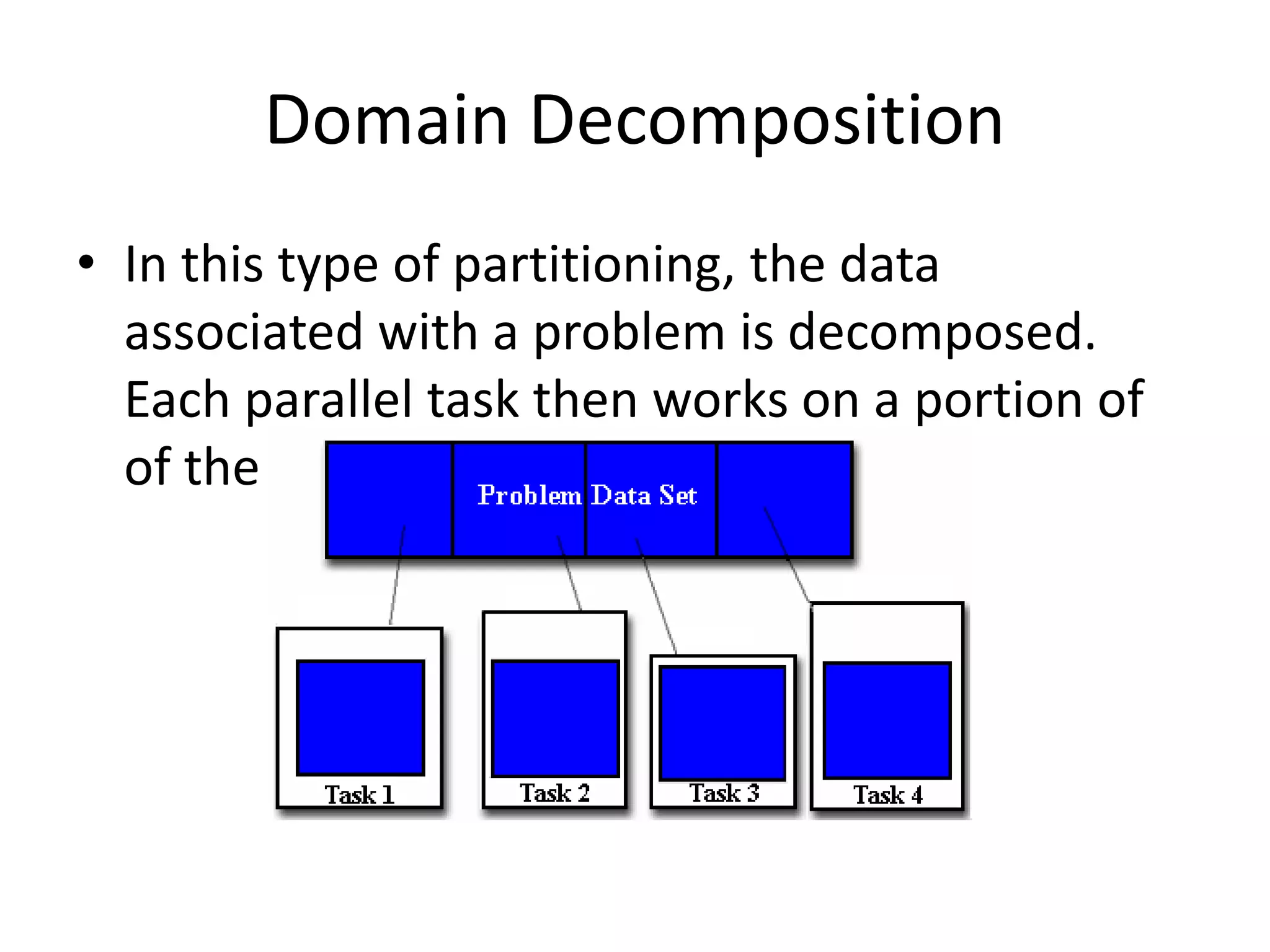

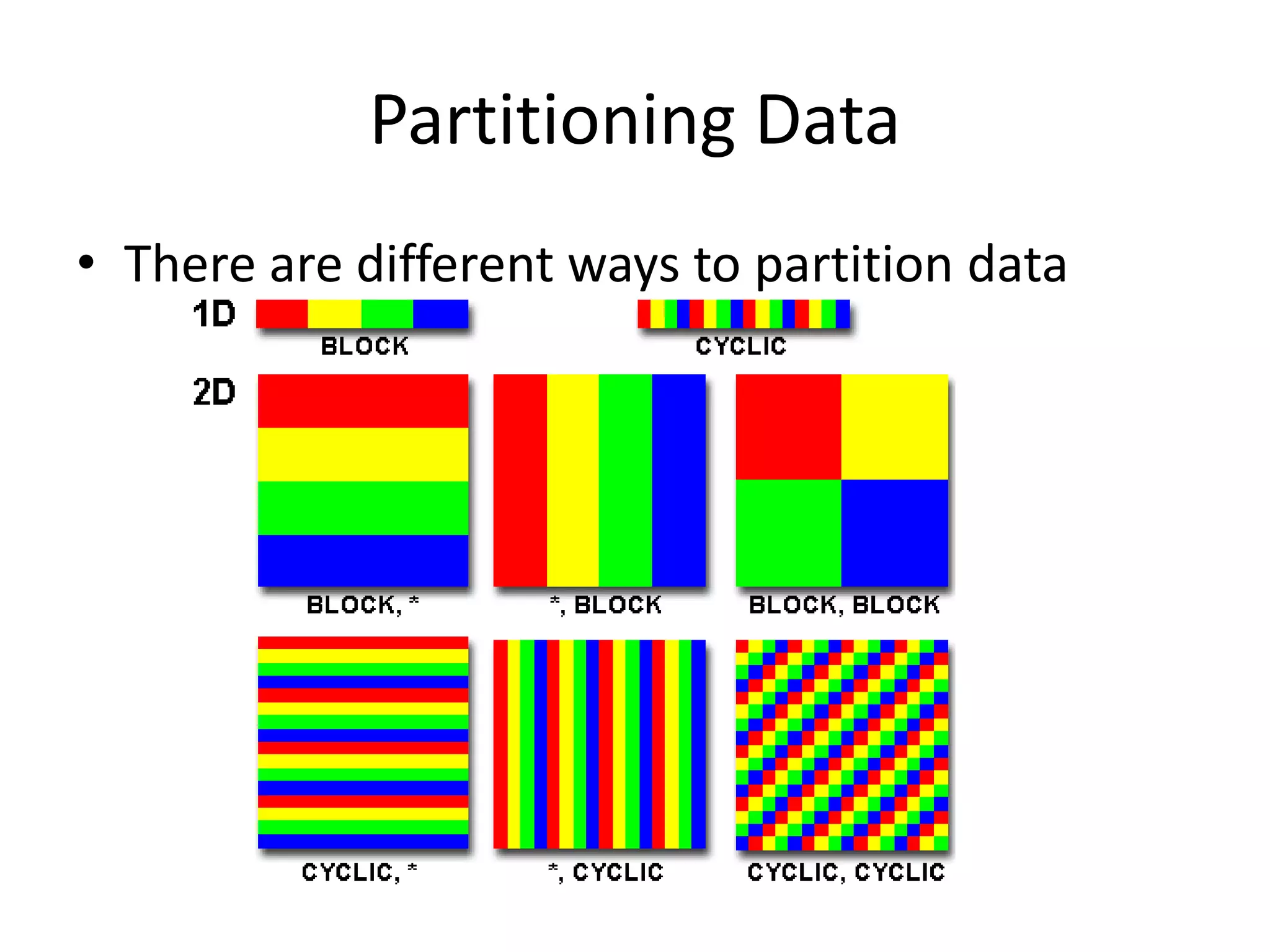

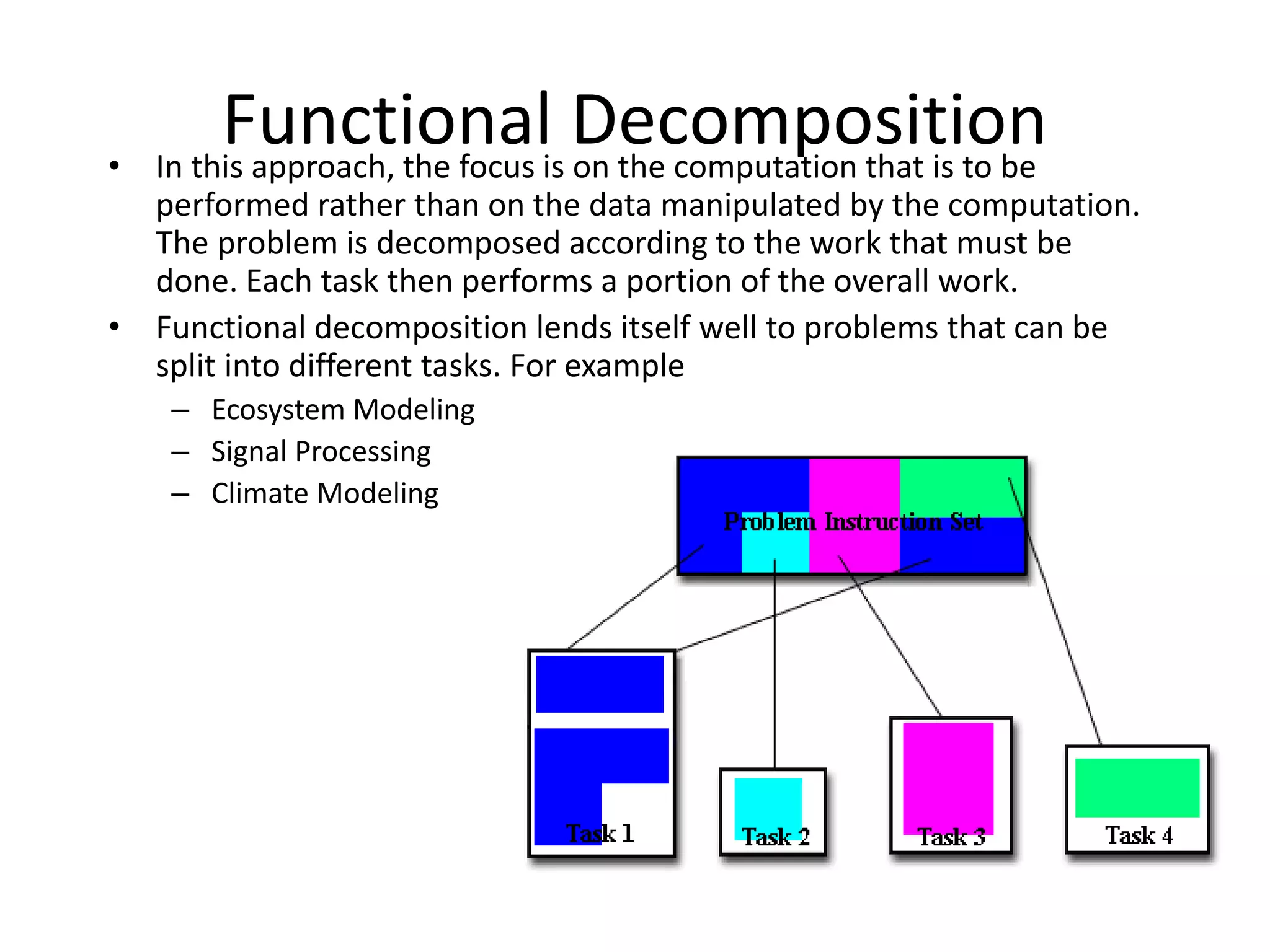

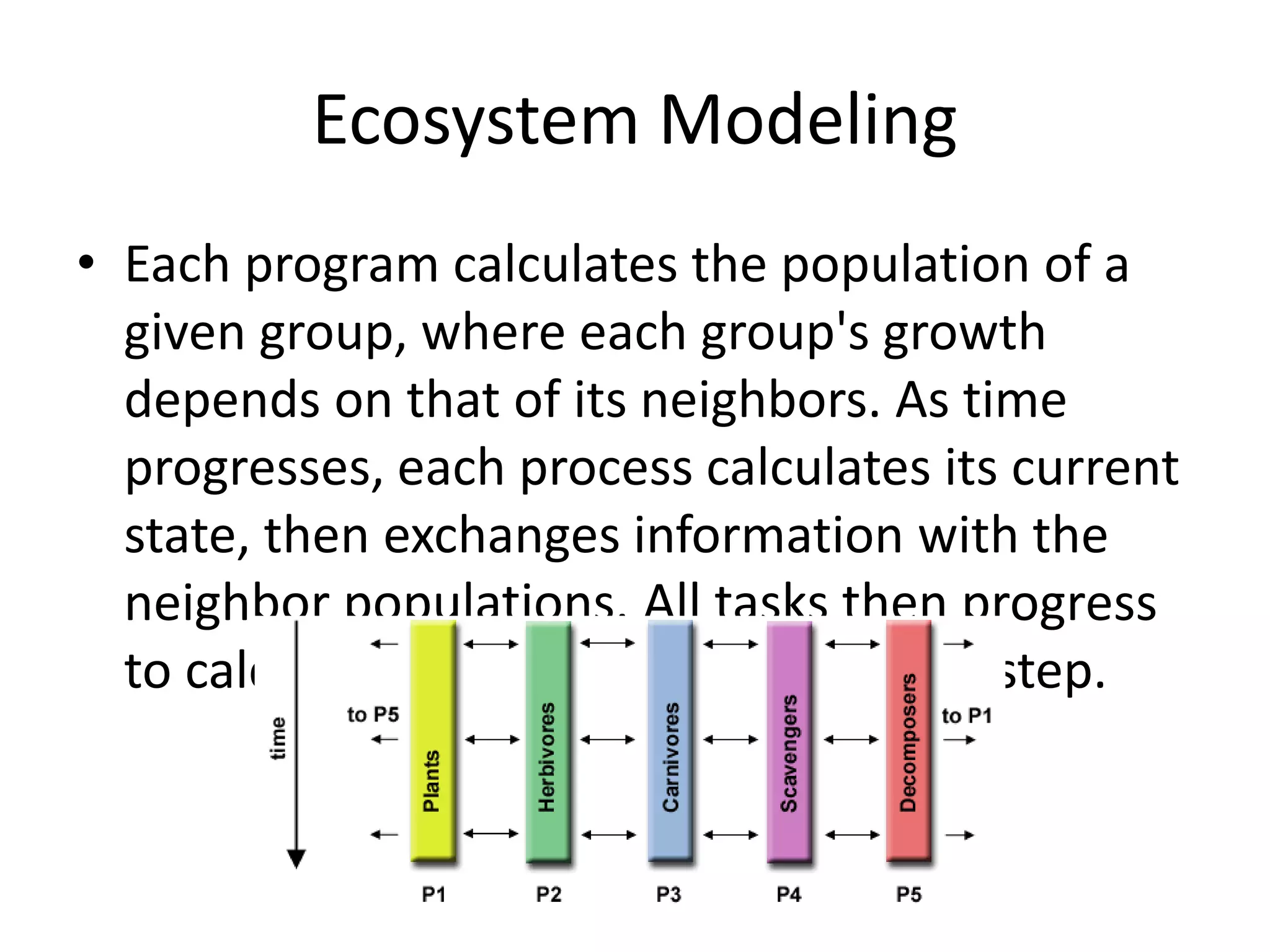

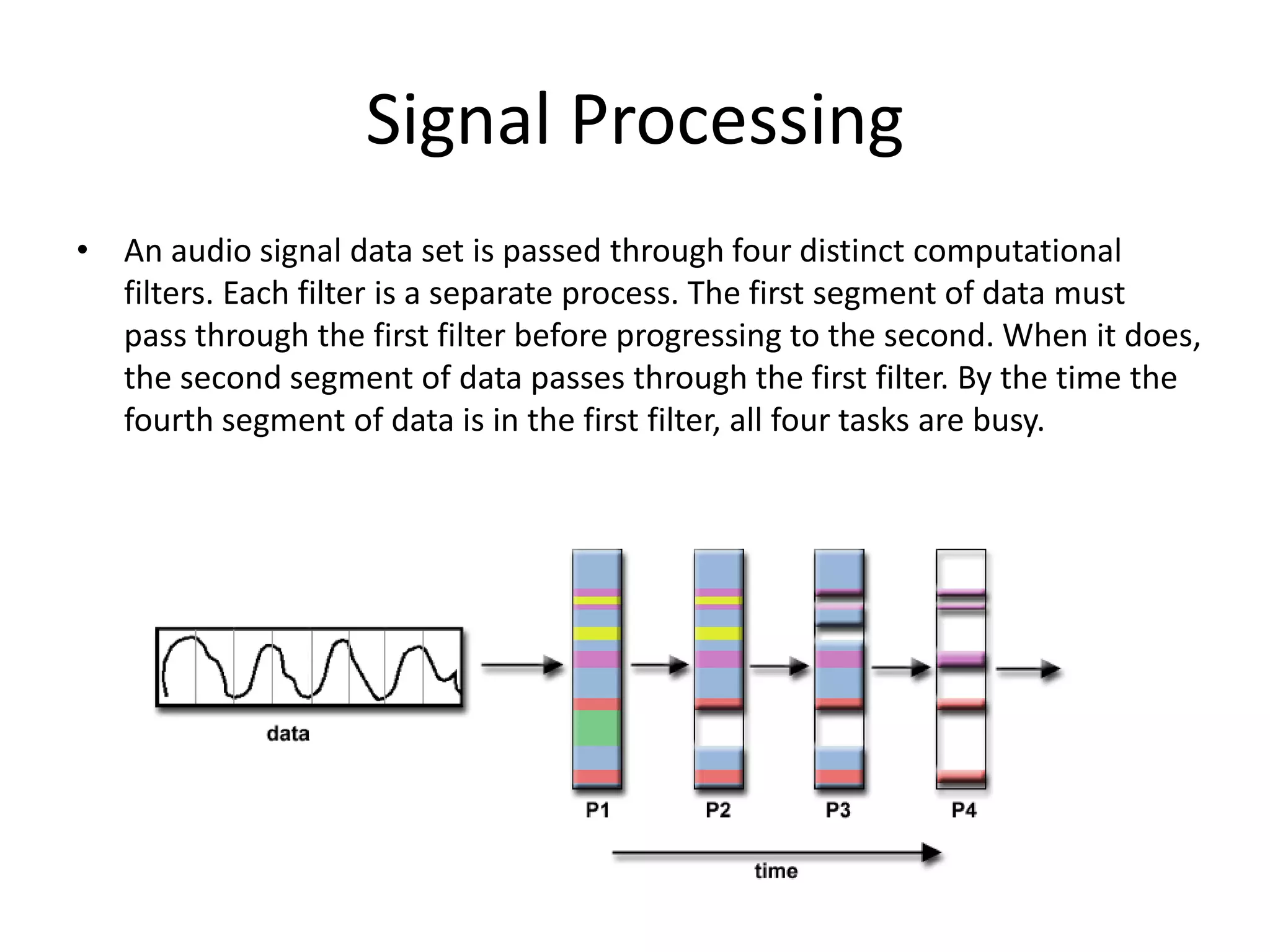

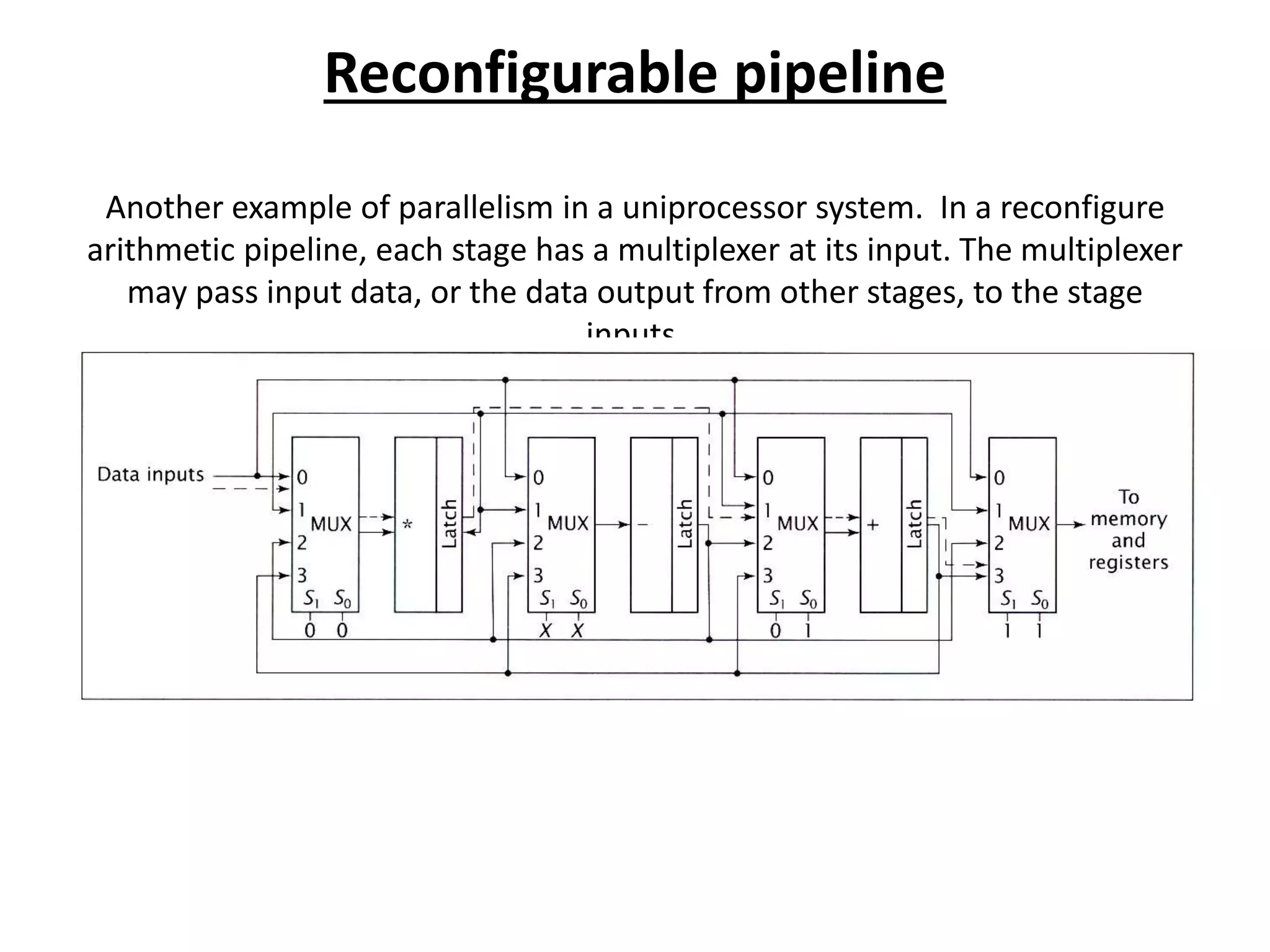

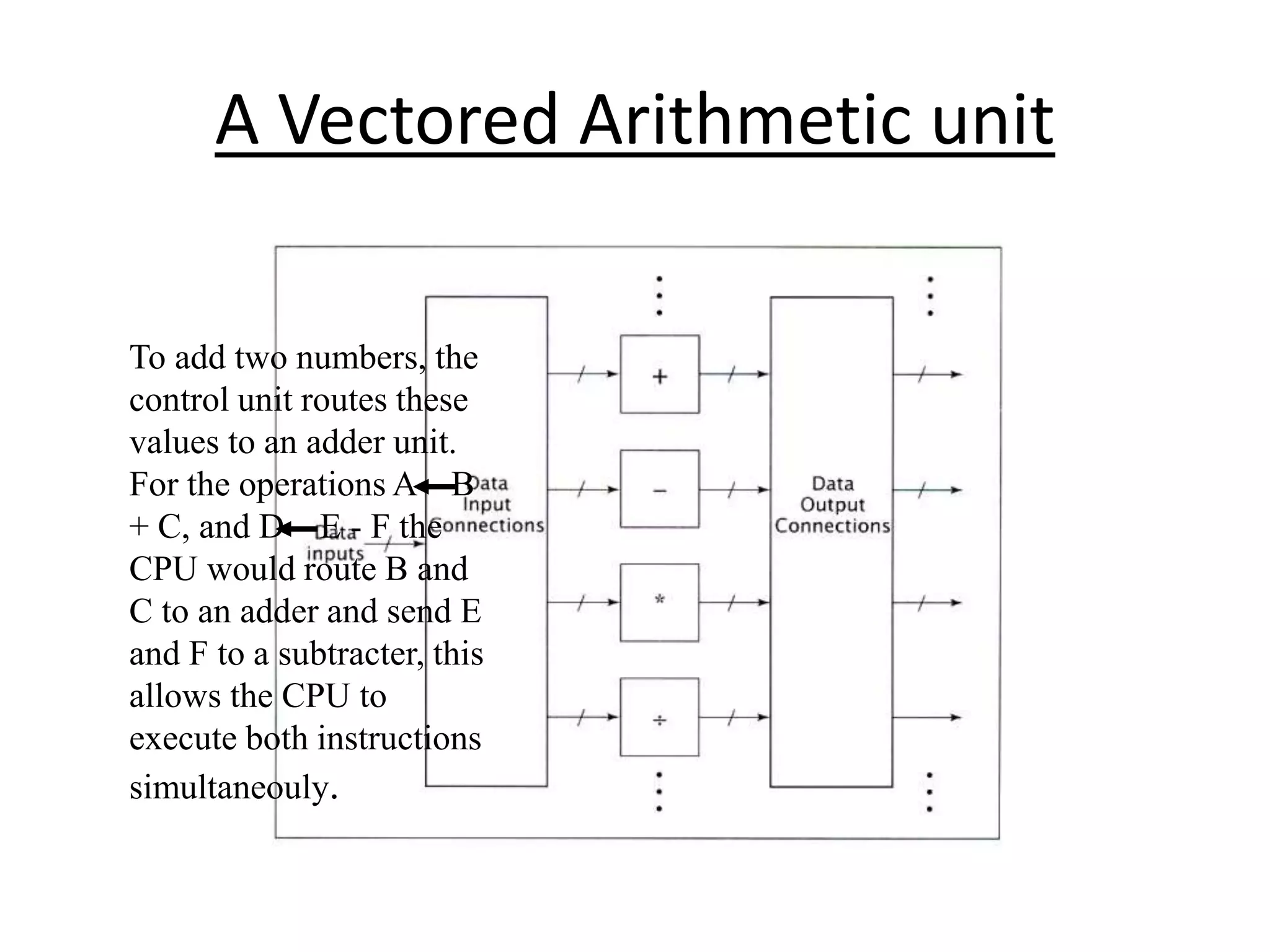

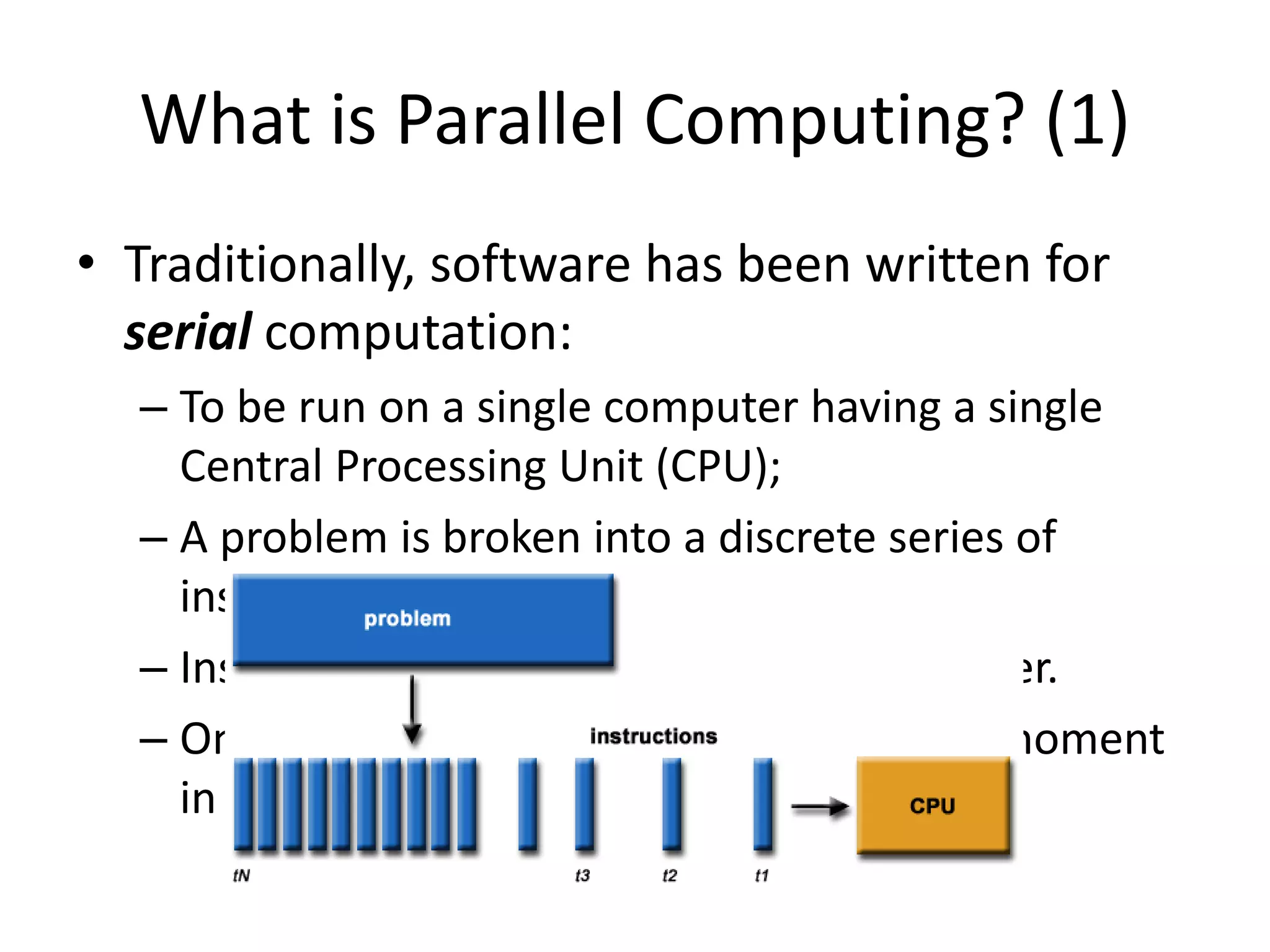

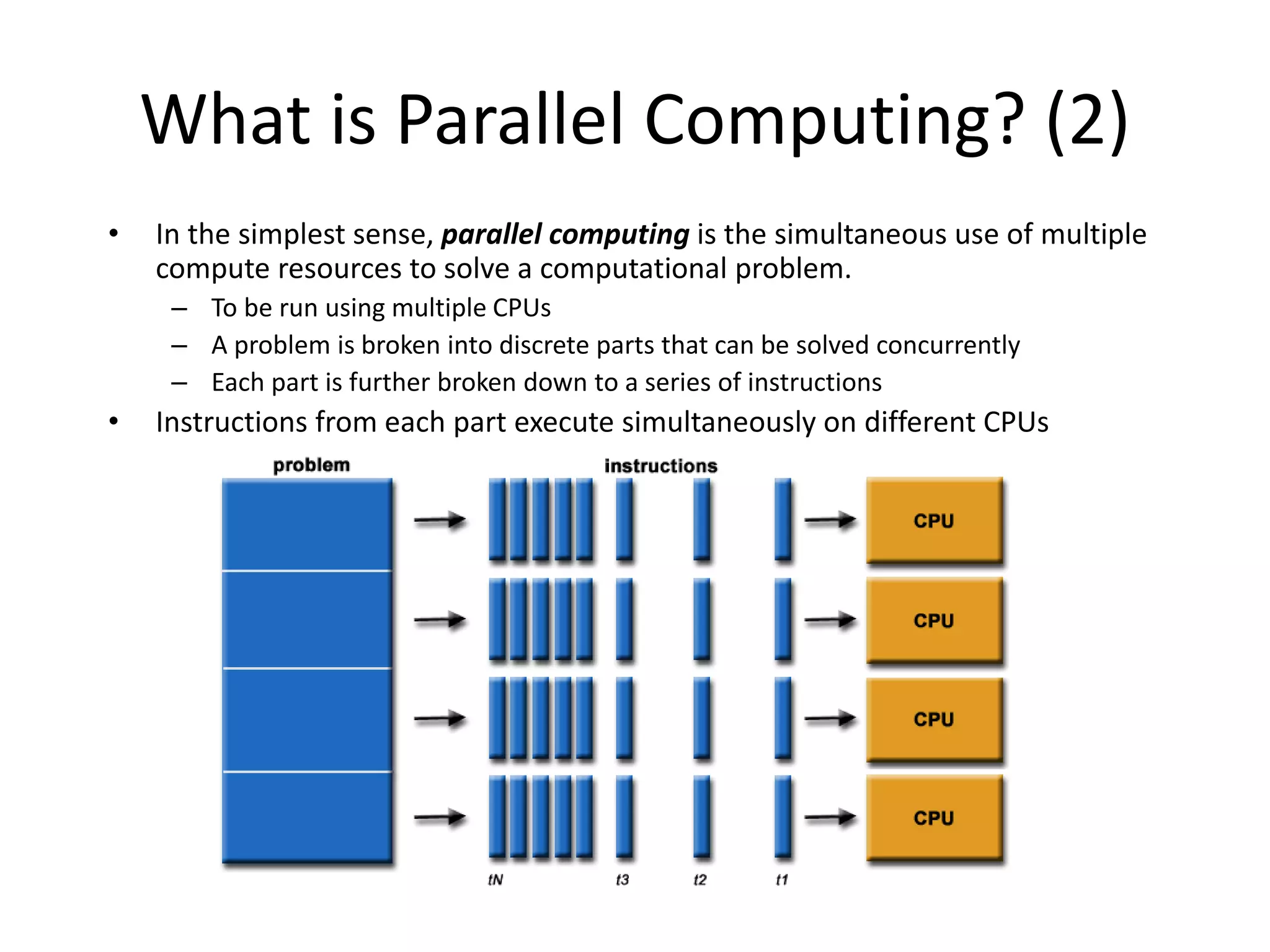

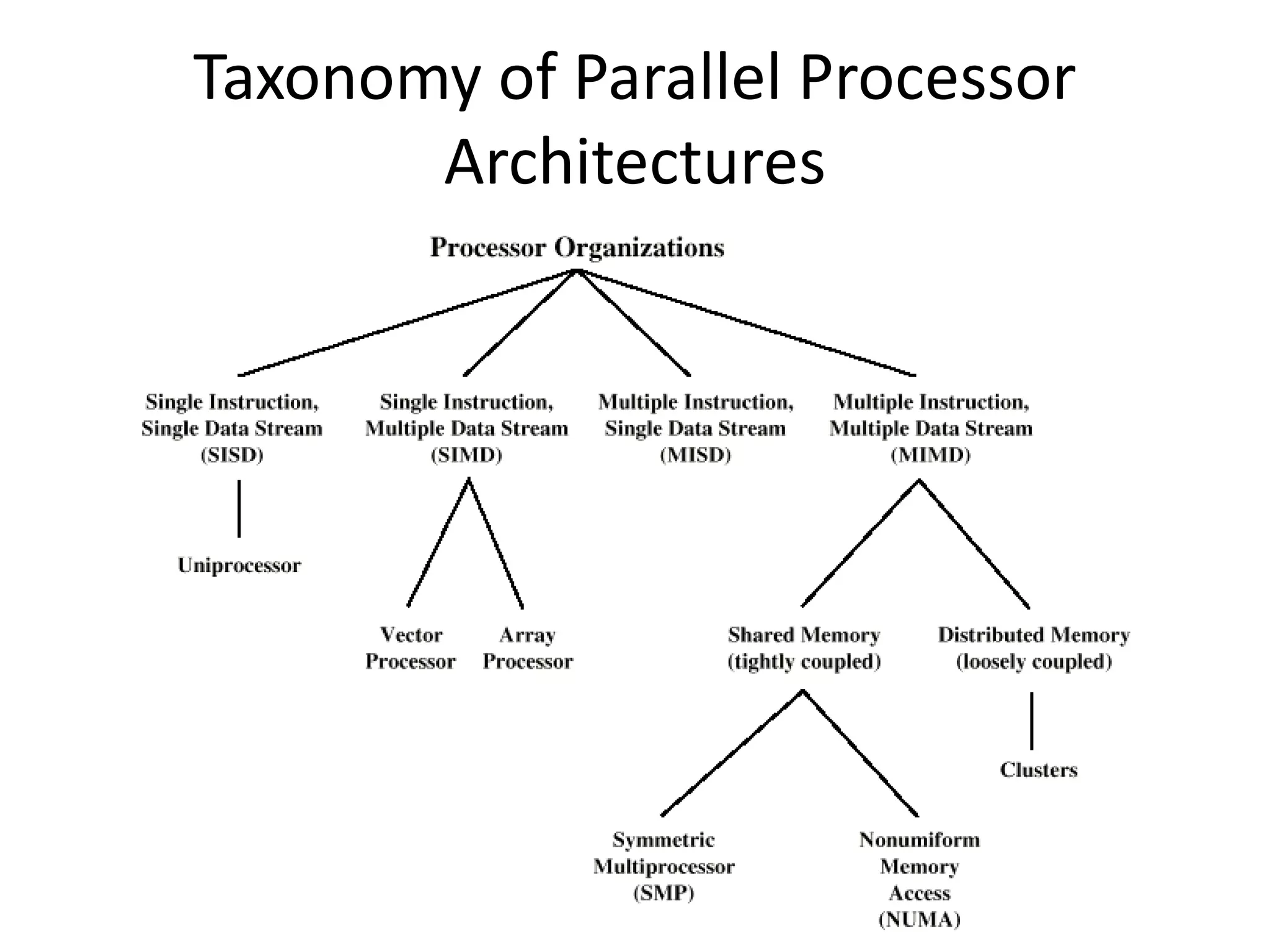

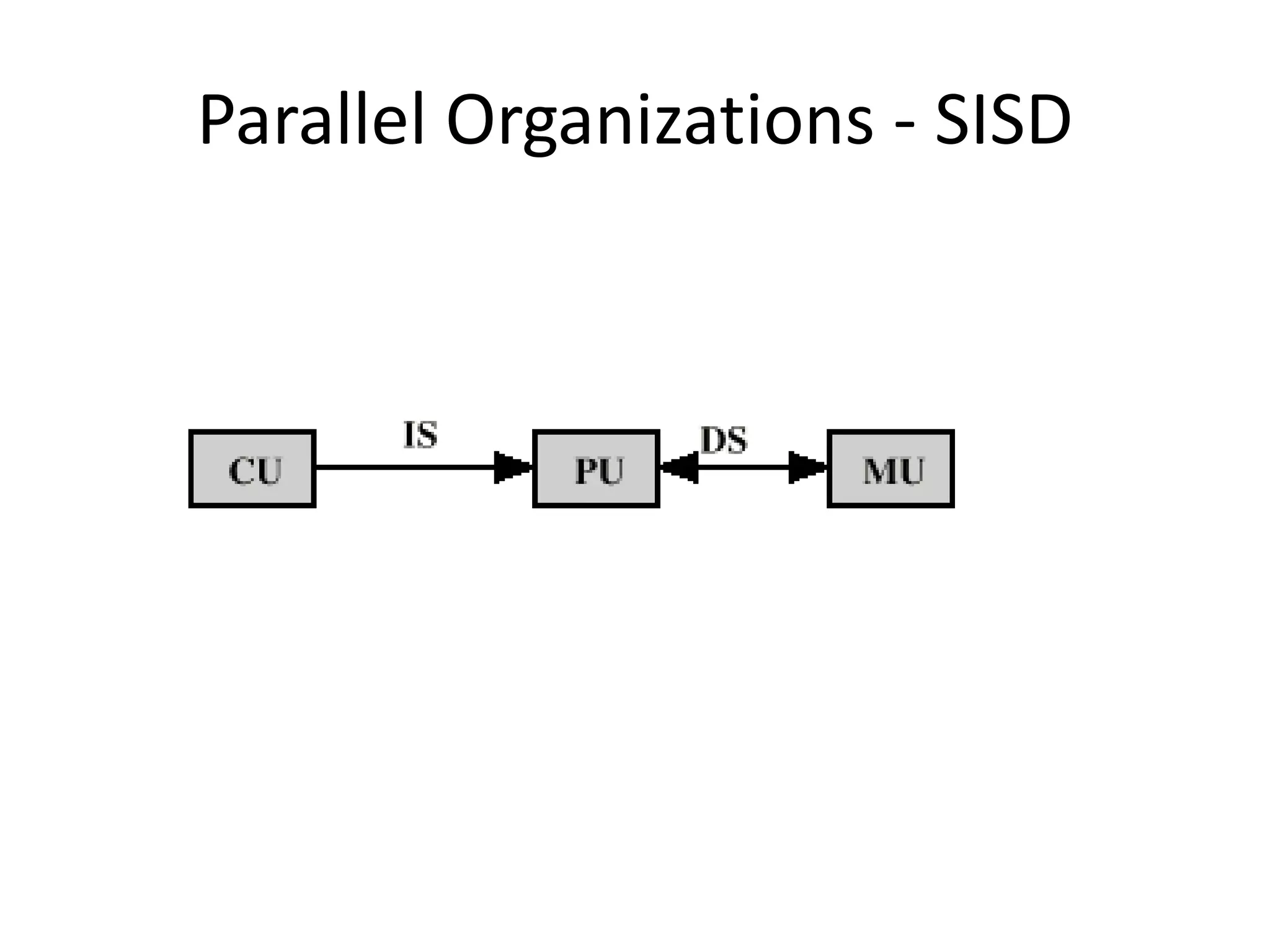

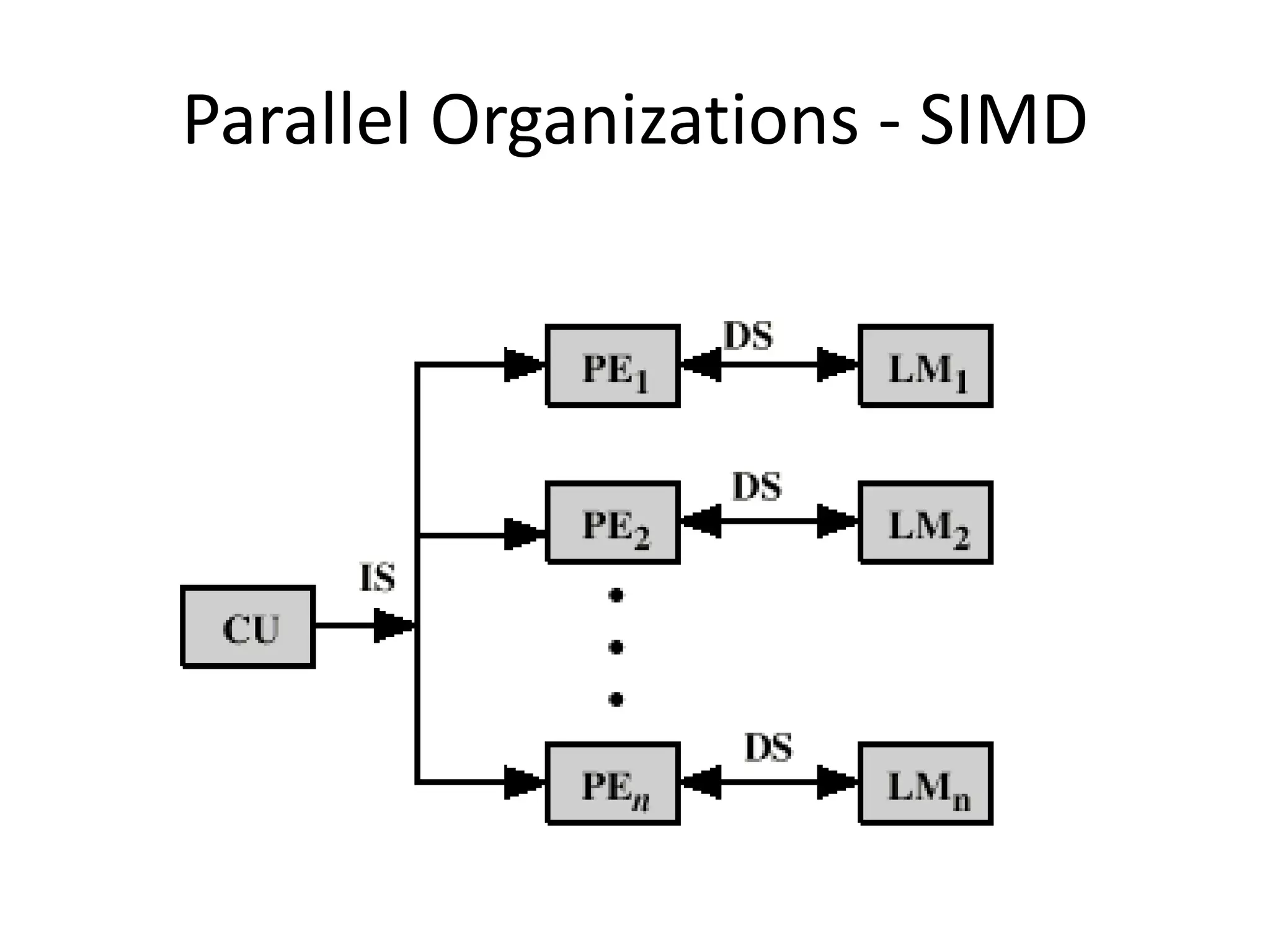

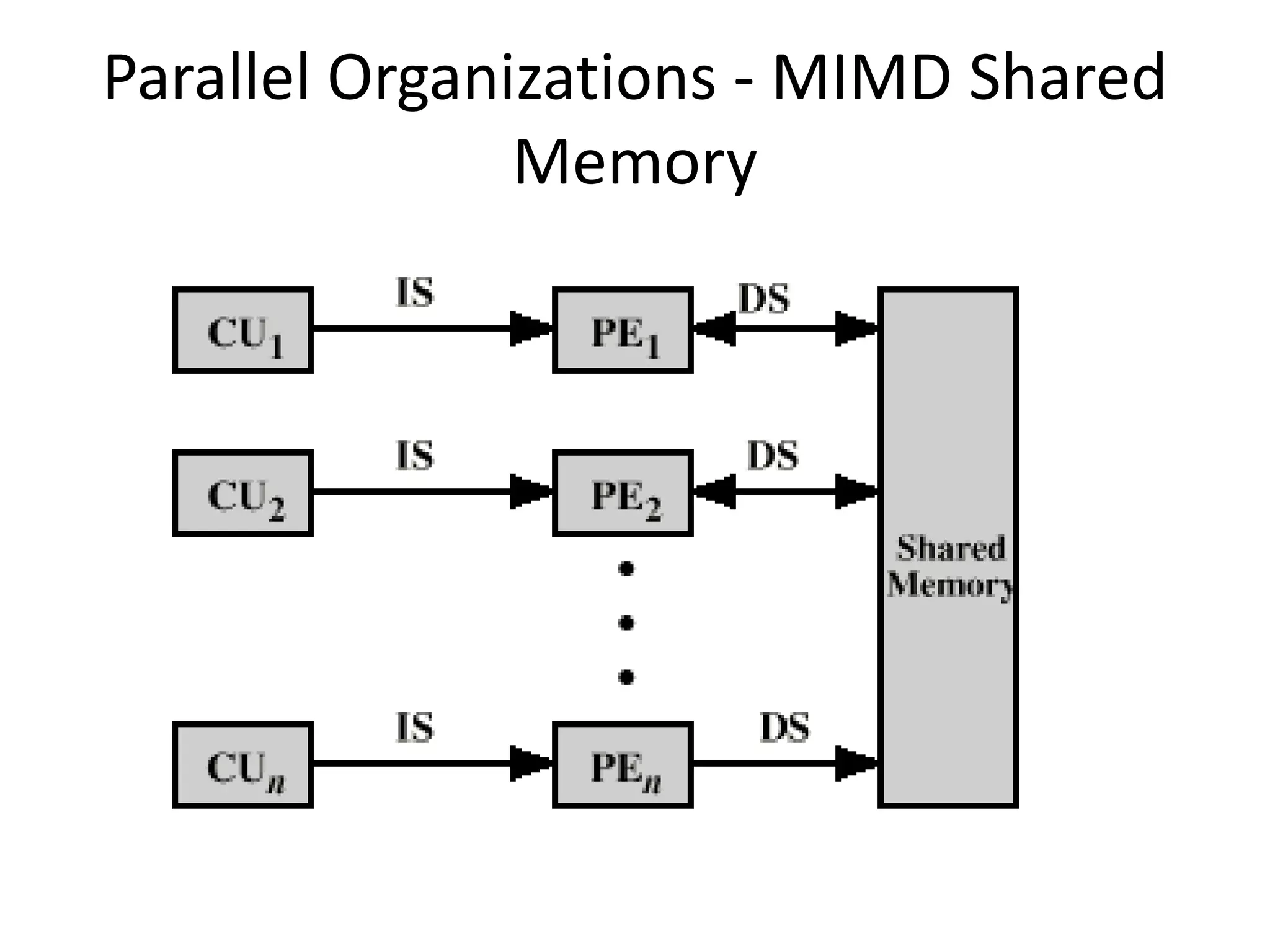

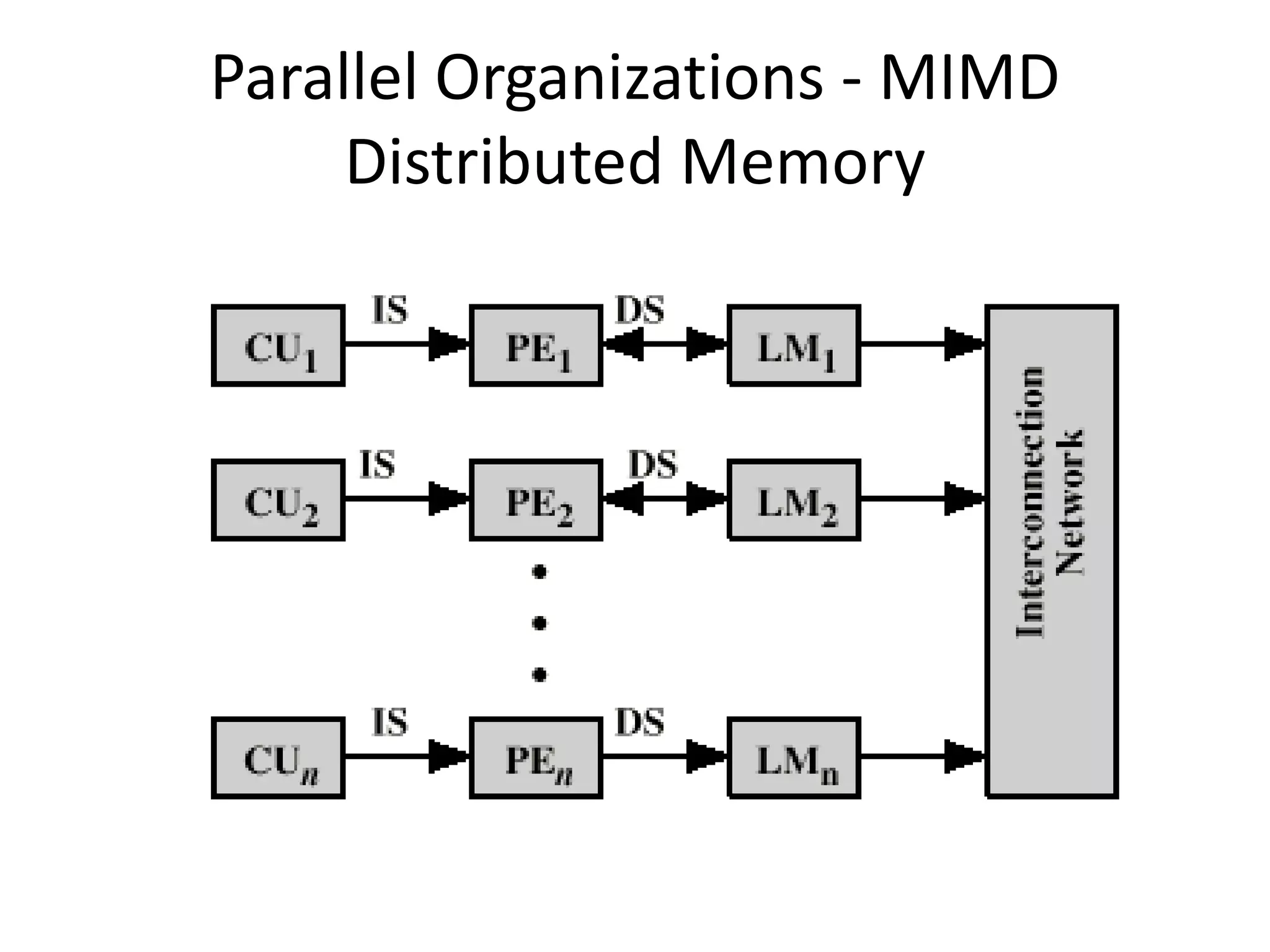

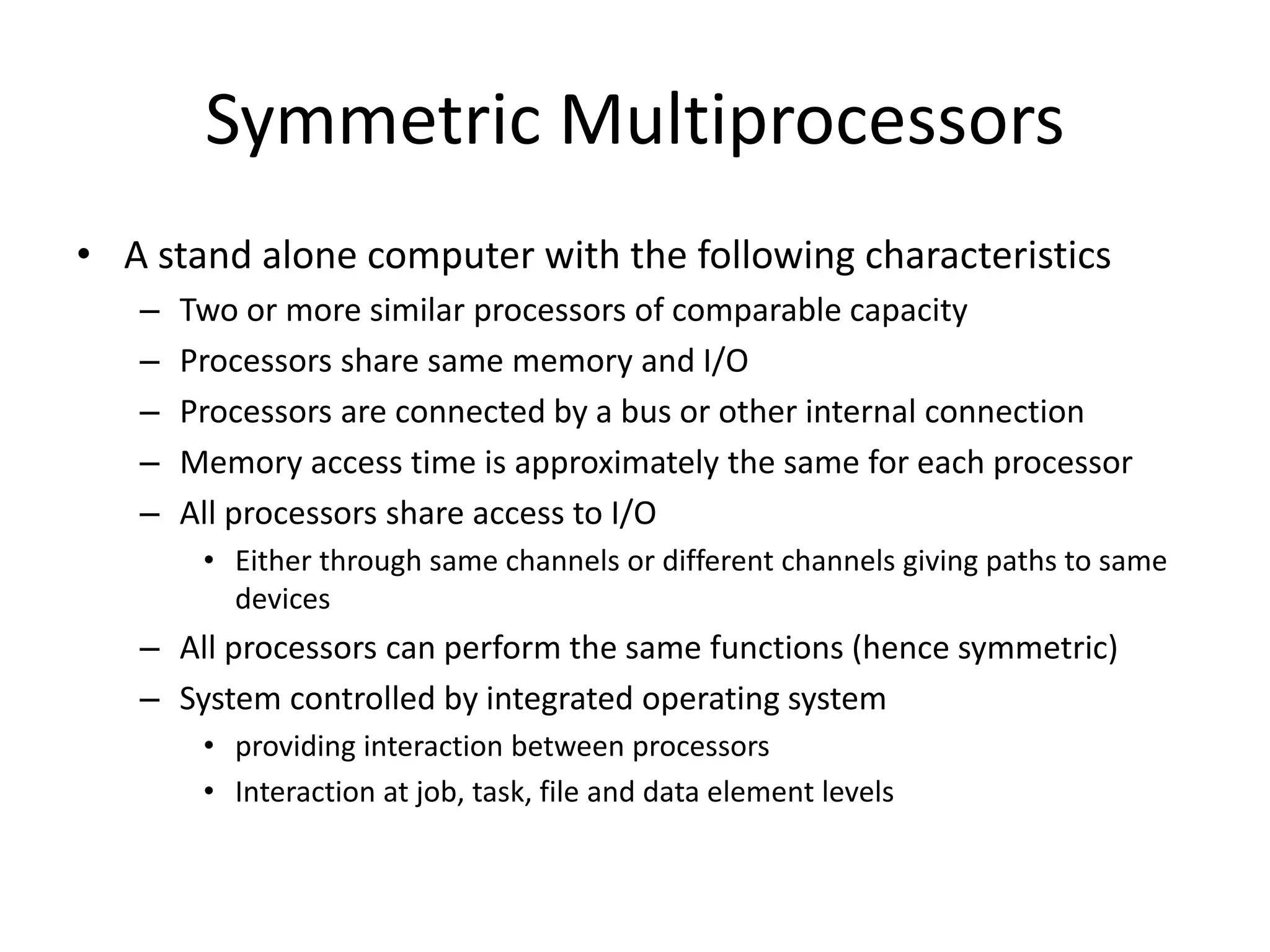

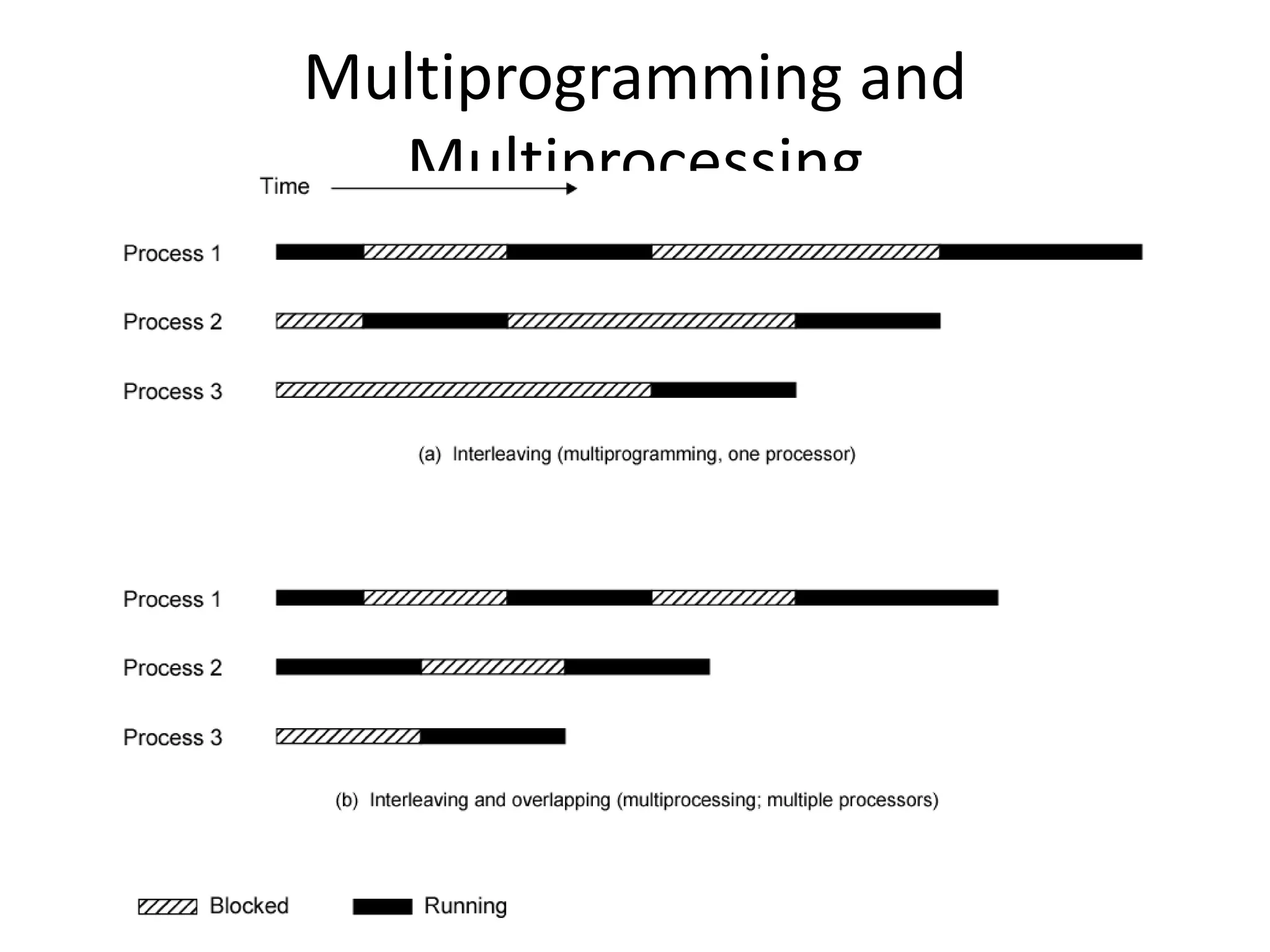

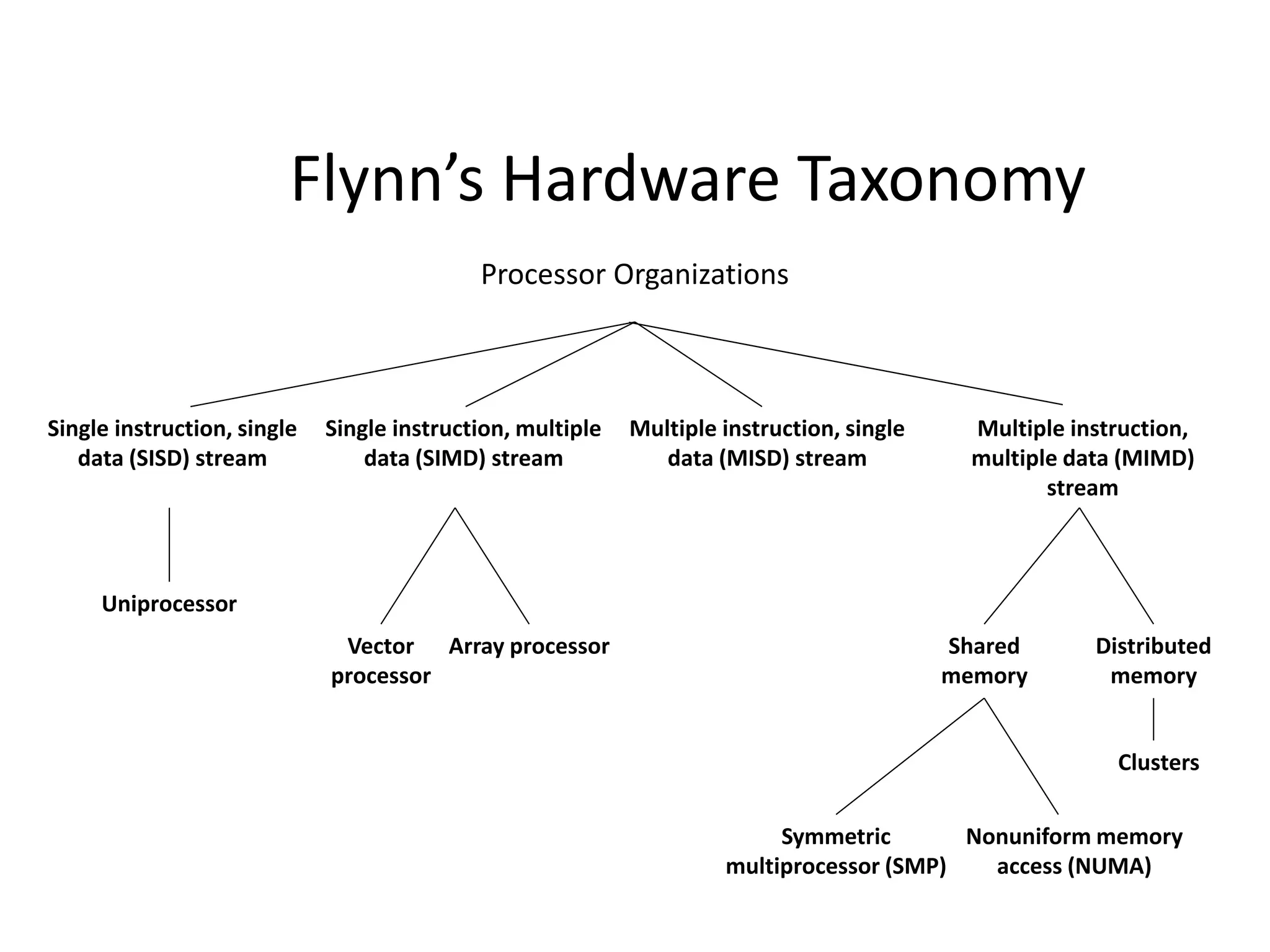

The document discusses parallel processing and emphasizes the role of decomposition in designing parallel programs, using either a domain approach (partitioning the data) or a functional approach (partitioning the computation). It covers the principles of parallel computing, including the simultaneous use of multiple processors to solve a single problem, and introduces Flynn's classification of computer architectures by instruction and data streams (SISD, SIMD, MISD, MIMD). It also explains why parallel computing is needed: to solve larger problems, improve efficiency, and overcome the limitations of serial computing.
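
The two decomposition approaches can be illustrated with a minimal Python sketch. This is not taken from the slides: the function names (`chunked`, `domain_decomposition`, `functional_decomposition`) and the choice of operations are hypothetical, and a thread pool is assumed only to keep the example short. For CPU-bound work in CPython, a process pool would usually be the more realistic choice.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, n_chunks):
    """Split data into n_chunks contiguous slices of nearly equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def domain_decomposition(data, n_workers=4):
    """Domain approach: the same operation runs on different parts of the data."""
    def partial_sum(chunk):
        return sum(x * x for x in chunk)

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Each worker computes a partial result; the partials are then combined.
        return sum(pool.map(partial_sum, chunked(data, n_workers)))


def functional_decomposition(data):
    """Functional approach: different operations run concurrently on the same data."""
    tasks = {"min": min, "max": max, "total": sum}  # hypothetical task set
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, data) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}
```

Under these assumptions, `domain_decomposition(list(range(10)), 3)` splits the ten values into three slices and sums their squares, while `functional_decomposition([3, 1, 2])` computes the minimum, maximum, and total as three independent tasks.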