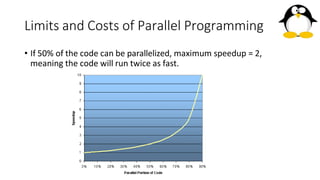

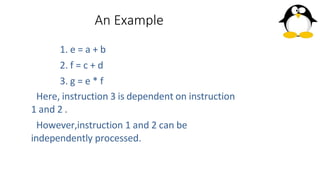

This document gives an overview of parallel and distributed computing. It opens with the key learning outcomes for the topic: defining parallel algorithms, analyzing parallel performance, applying task decomposition techniques, and writing parallel programs. It then reviews the history of computing from the batch era to today's network era. The remainder covers core parallel computing concepts: Flynn's taxonomy, shared versus distributed memory systems, the limits on parallel speedup described by Amdahl's law, and the main types of parallelism (bit-level, instruction-level, data, and task). It concludes with parallel implementation in software, through parallel programming, and in hardware, through parallel processing.
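As a brief illustration of the limit that Amdahl's law places on parallelism, the sketch below computes the theoretical speedup 1 / ((1 - p) + p / N) for a program in which a fraction p of the work can be parallelized across N processors. The function name `amdahl_speedup`, its parameter names, and the 90% parallel fraction used in the loop are assumptions chosen for illustration; they do not come from the document itself.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Theoretical speedup bound from Amdahl's law.

    parallel_fraction: share of the runtime that can be parallelized (0..1).
    processors: number of processors working on the parallel part (>= 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part runs at its original speed; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    # Hypothetical workload: 90% of the runtime is parallelizable.
    for n in (1, 2, 4, 8, 1024):
        print(f"{n:>5} processors -> speedup {amdahl_speedup(0.9, n):.2f}")
```

With a 90% parallel fraction, the speedup stays below 1 / (1 - 0.9) = 10 however many processors are added, because the serial 10% of the work never gets faster.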