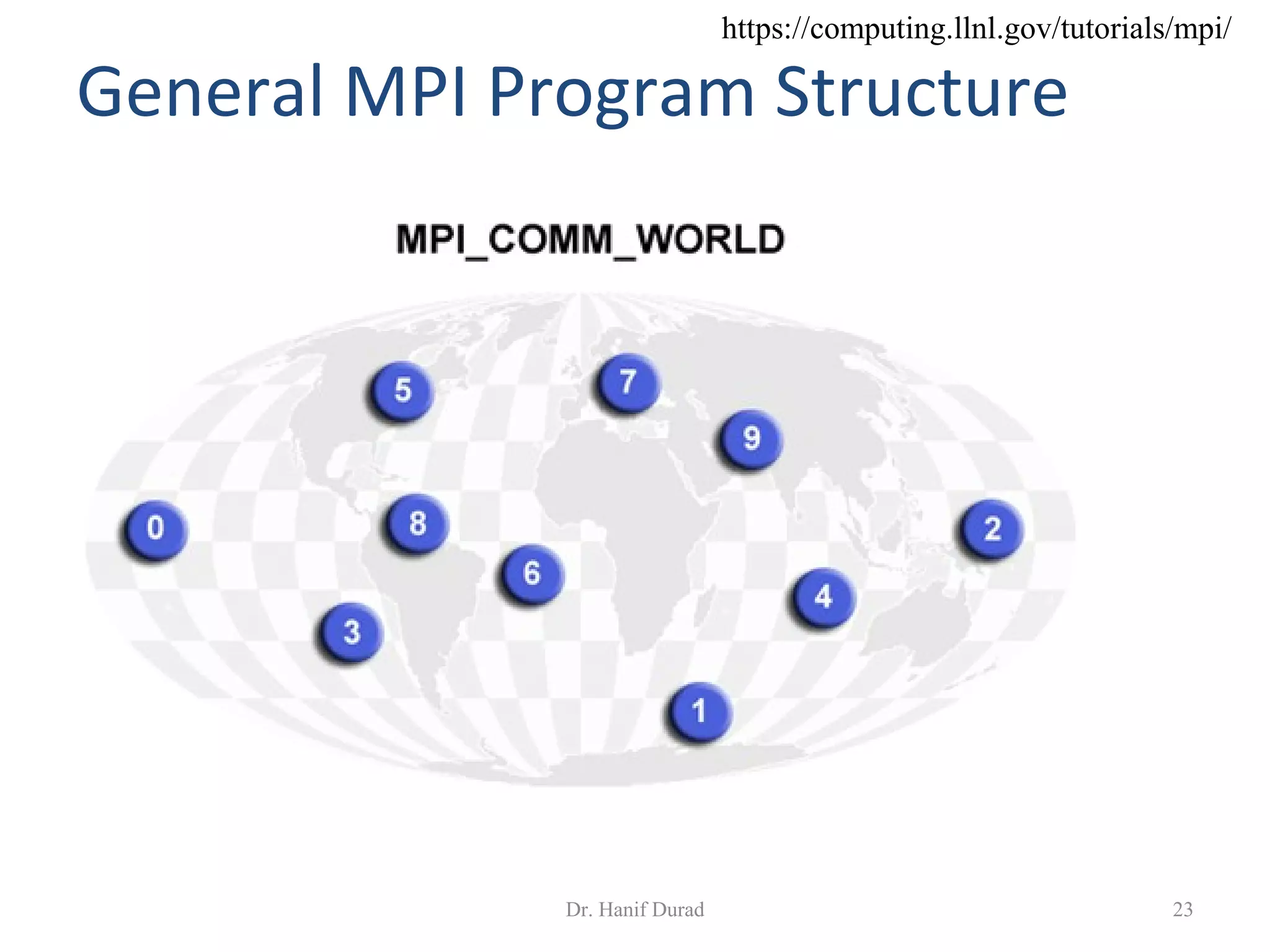

This document summarizes an introductory lecture on MPI. The lecture covers communication models for parallel programming, MPI libraries, features of MPI, programming with MPI, using the MPI manual, compiling and running MPI programs, and basic MPI concepts. It gives "Hello World" programs in C, Fortran, and C++. The concepts introduced are processes, communicators, ranks, and the default communicator MPI_COMM_WORLD. The document concludes that a general MPI program has three steps: initialization, communication/computation, and finalization. The homework asks the reader to modify the previous "Hello World" program so that each process also prints the name of the processor it runs on, using MPI_

![Hello (C)

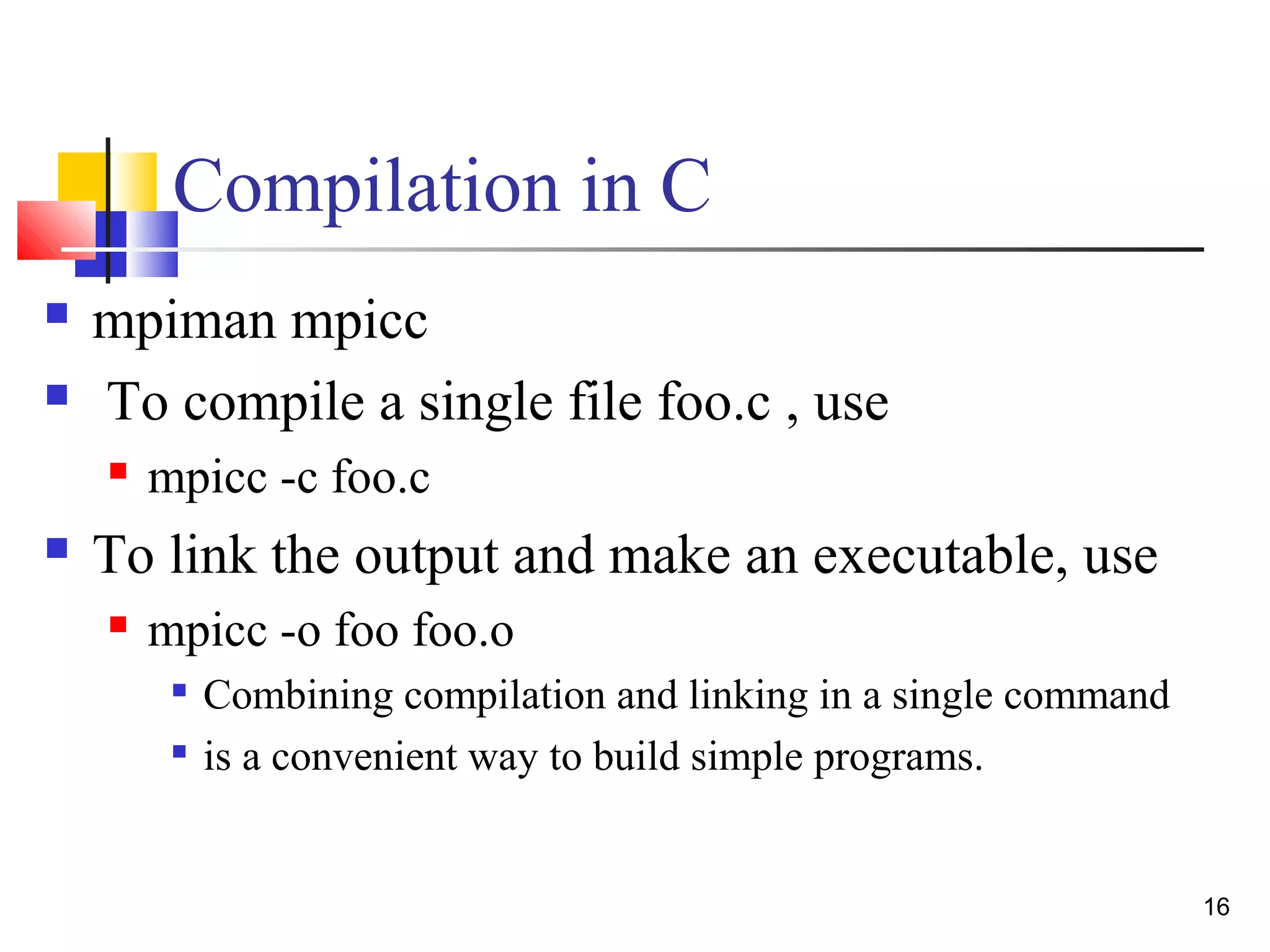

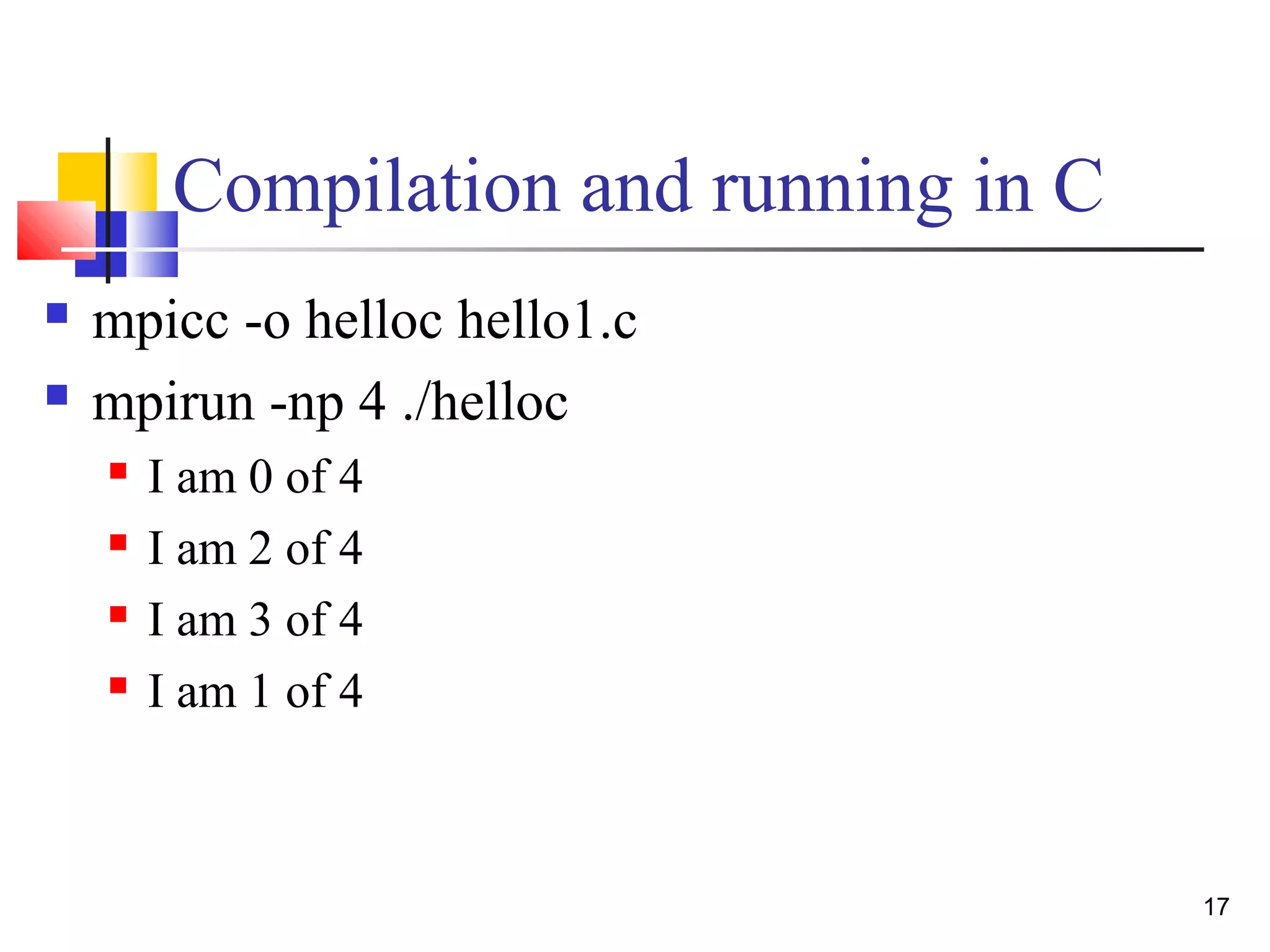

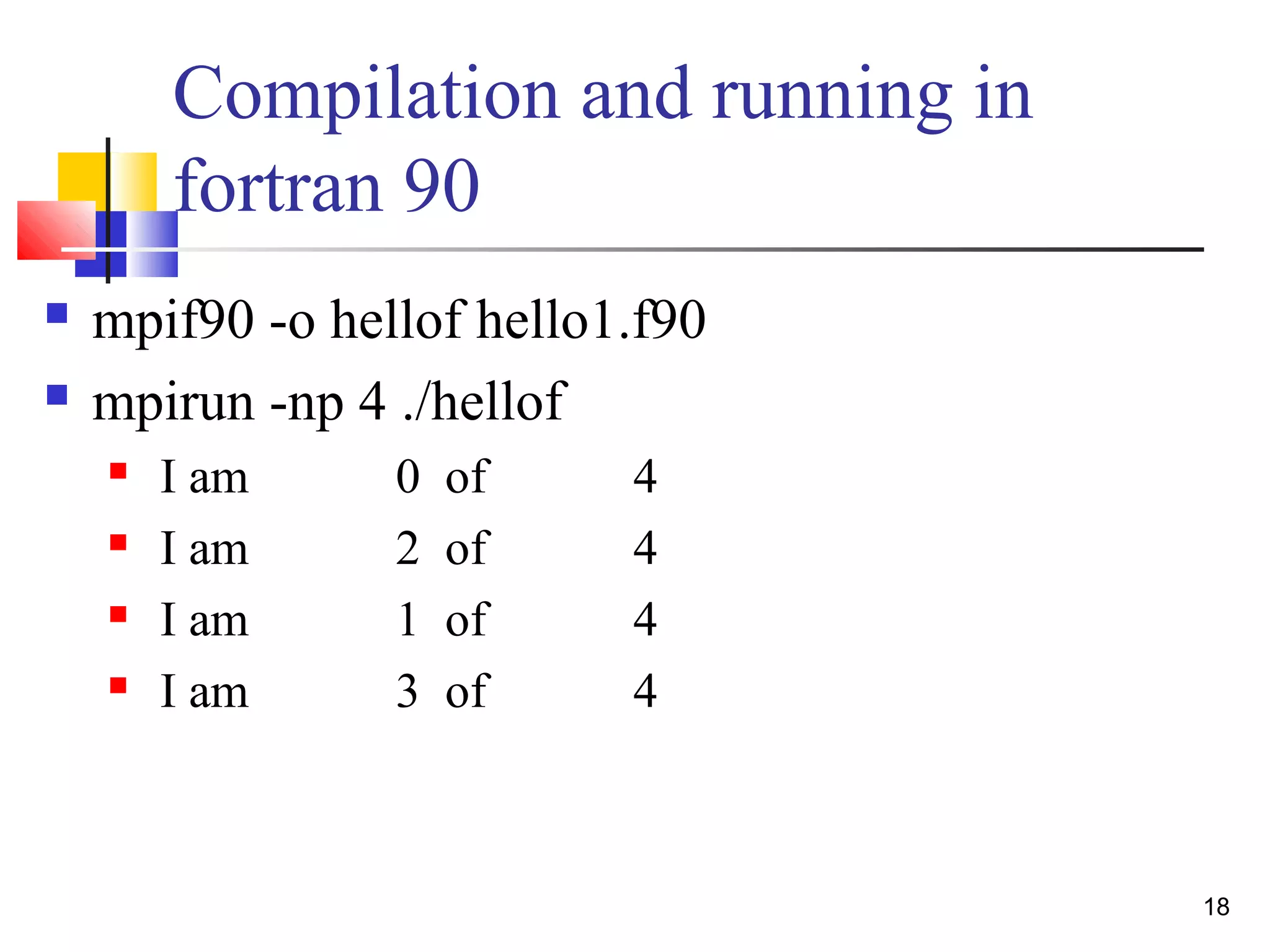

#include "mpi.h"

#include <stdio.h>

int main( int argc, char *argv[] )

{

int rank, size;

MPI_Init( &argc, &argv );

MPI_Comm_rank( MPI_COMM_WORLD, &rank );

MPI_Comm_size( MPI_COMM_WORLD, &size );

printf( "I am %d of %d\n", rank, size );

MPI_Finalize();

return 0;

}

Dr. Hanif Durad 12

IntroMPI.ppt](https://image.slidesharecdn.com/mpi1-150607121241-lva1-app6892/75/Introduction-to-MPI-12-2048.jpg)

![Hello (C++)


#include "mpi.h"

#include <iostream>

int main( int argc, char *argv[] )

{

int rank, size;

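// Note: the MPI:: C++ bindings were deprecated in MPI-2.2 and removed in MPI-3.0, so this needs an MPI library that still ships them.

MPI::Init(argc, argv);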

rank = MPI::COMM_WORLD.Get_rank();

size = MPI::COMM_WORLD.Get_size();

std::cout << "I am " << rank << " of " << size << "\n";

MPI::Finalize();

return 0;

}

IntroMPI.ppt](https://image.slidesharecdn.com/mpi1-150607121241-lva1-app6892/75/Introduction-to-MPI-14-2048.jpg)