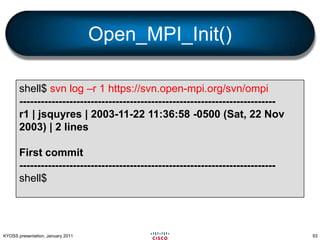

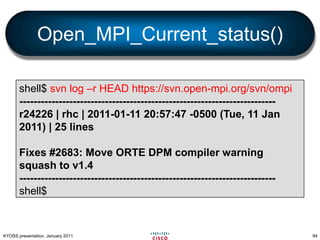

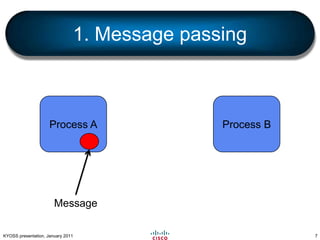

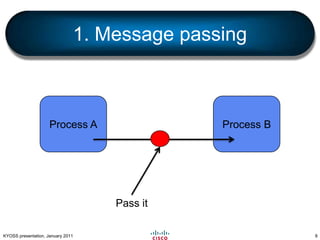

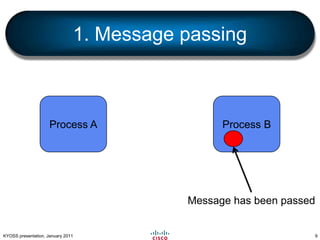

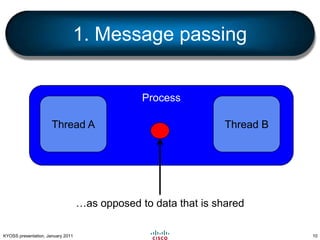

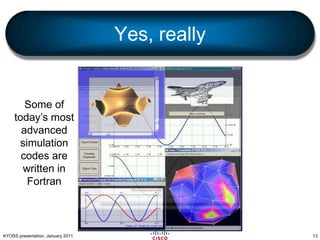

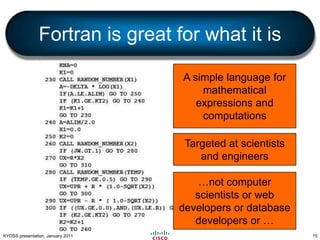

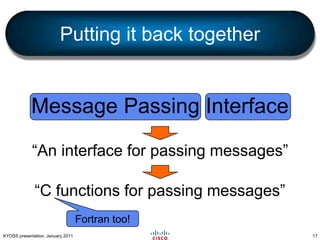

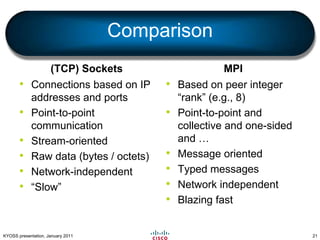

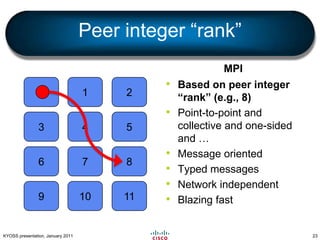

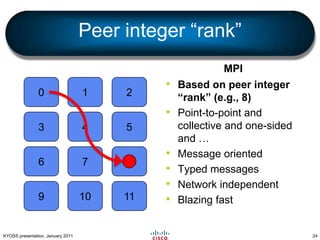

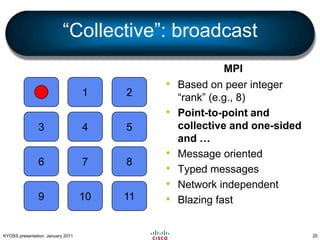

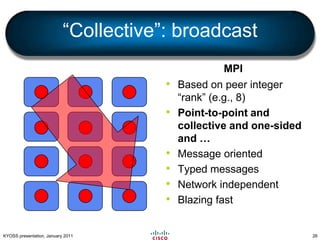

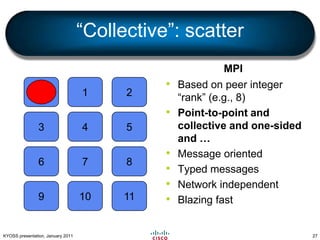

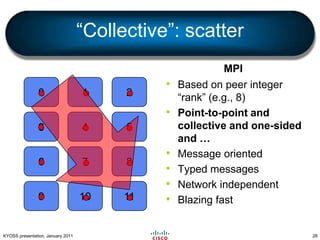

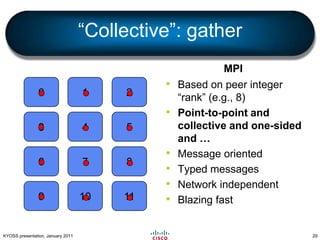

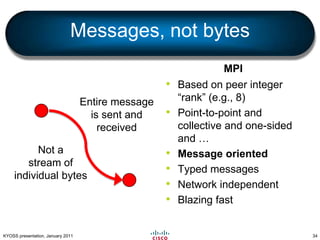

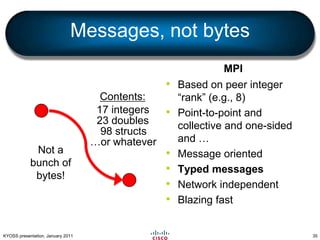

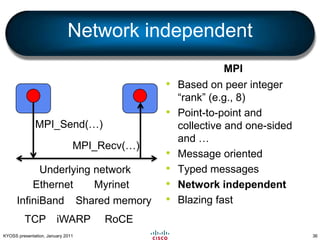

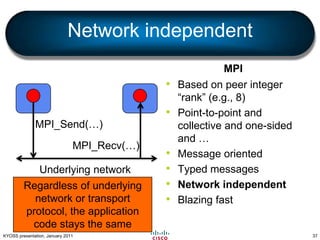

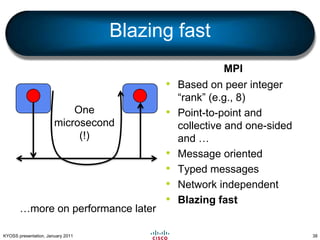

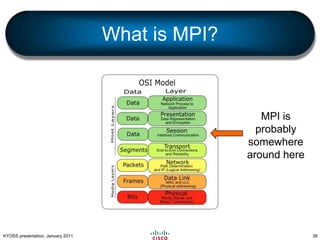

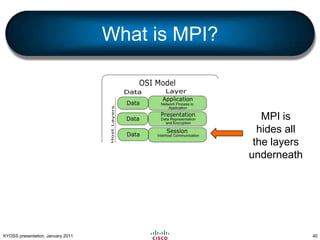

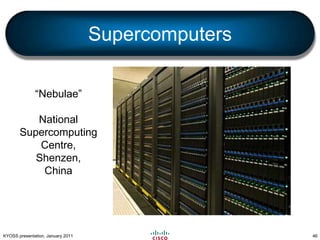

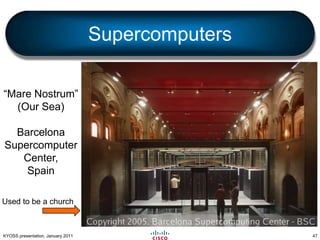

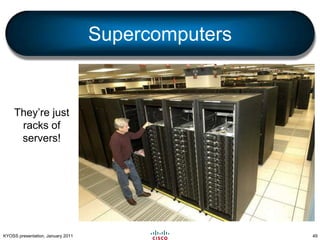

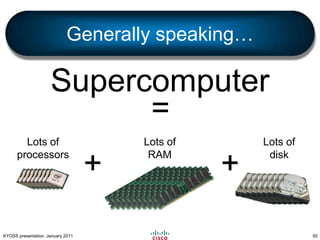

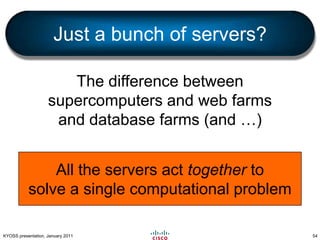

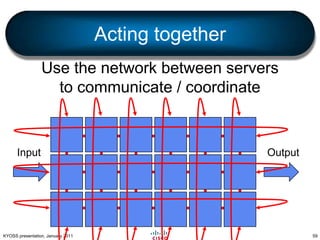

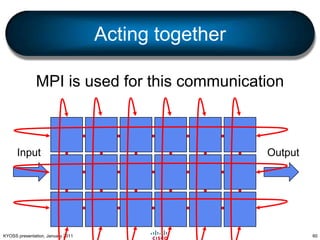

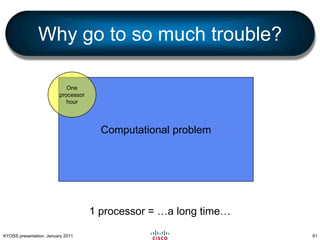

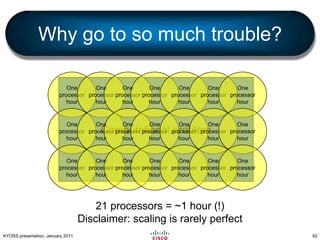

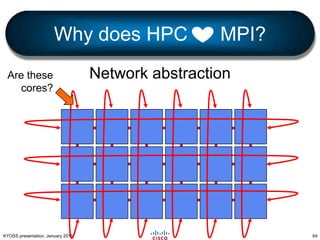

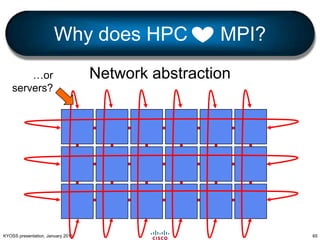

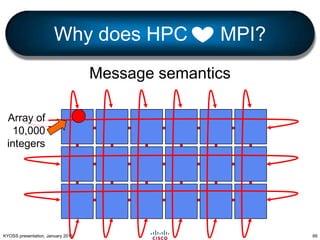

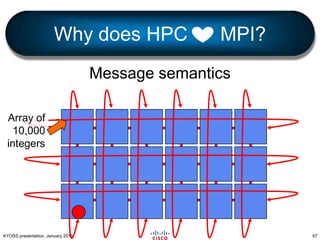

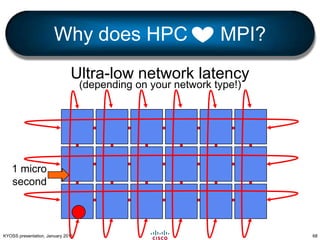

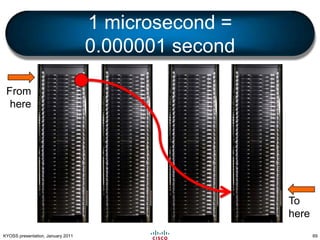

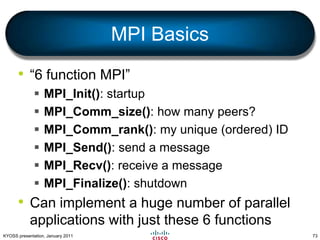

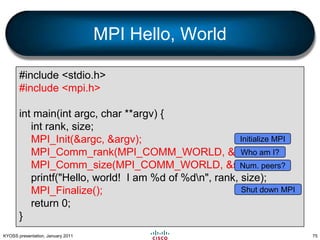

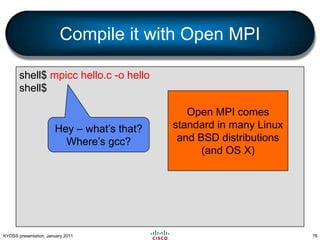

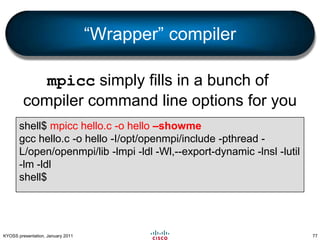

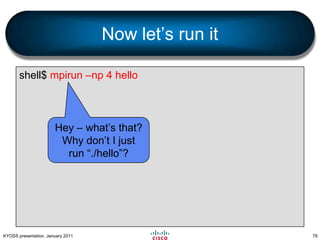

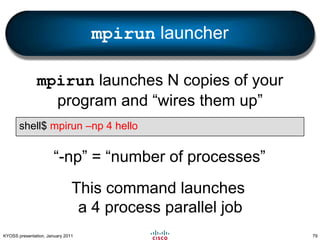

The document is a presentation by Jeff Squyres about the Message Passing Interface (MPI) and its implementations, particularly Open MPI. It explains the concept of message passing in high-performance computing and the role MPI plays in supercomputers, and gives a basic overview of MPI functions through code examples. It also describes the Open MPI community and the project's development since its inception in 2003.

![Send a simple message](https://image.slidesharecdn.com/kyoss-mpi-pdf-able-110204104651-phpapp02/85/The-Message-Passing-Interface-MPI-in-Layman-s-Terms-90-320.jpg)

Send a simple message

```c
int rank;
double buffer[SIZE];
MPI_Comm_rank(MPI_COMM_WORLD, &rank);
if (0 == rank) {
    /* …initialize buffer[]… */
    MPI_Send(buffer, SIZE, MPI_DOUBLE, 1, 123, MPI_COMM_WORLD);
} else if (1 == rank) {
    MPI_Recv(buffer, SIZE, MPI_DOUBLE, 0, 123,
             MPI_COMM_WORLD, MPI_STATUS_IGNORE);
}
```

- If I’m number 0, send the `buffer[]` array to number 1.
- If I’m number 1, receive the `buffer[]` array from number 0.