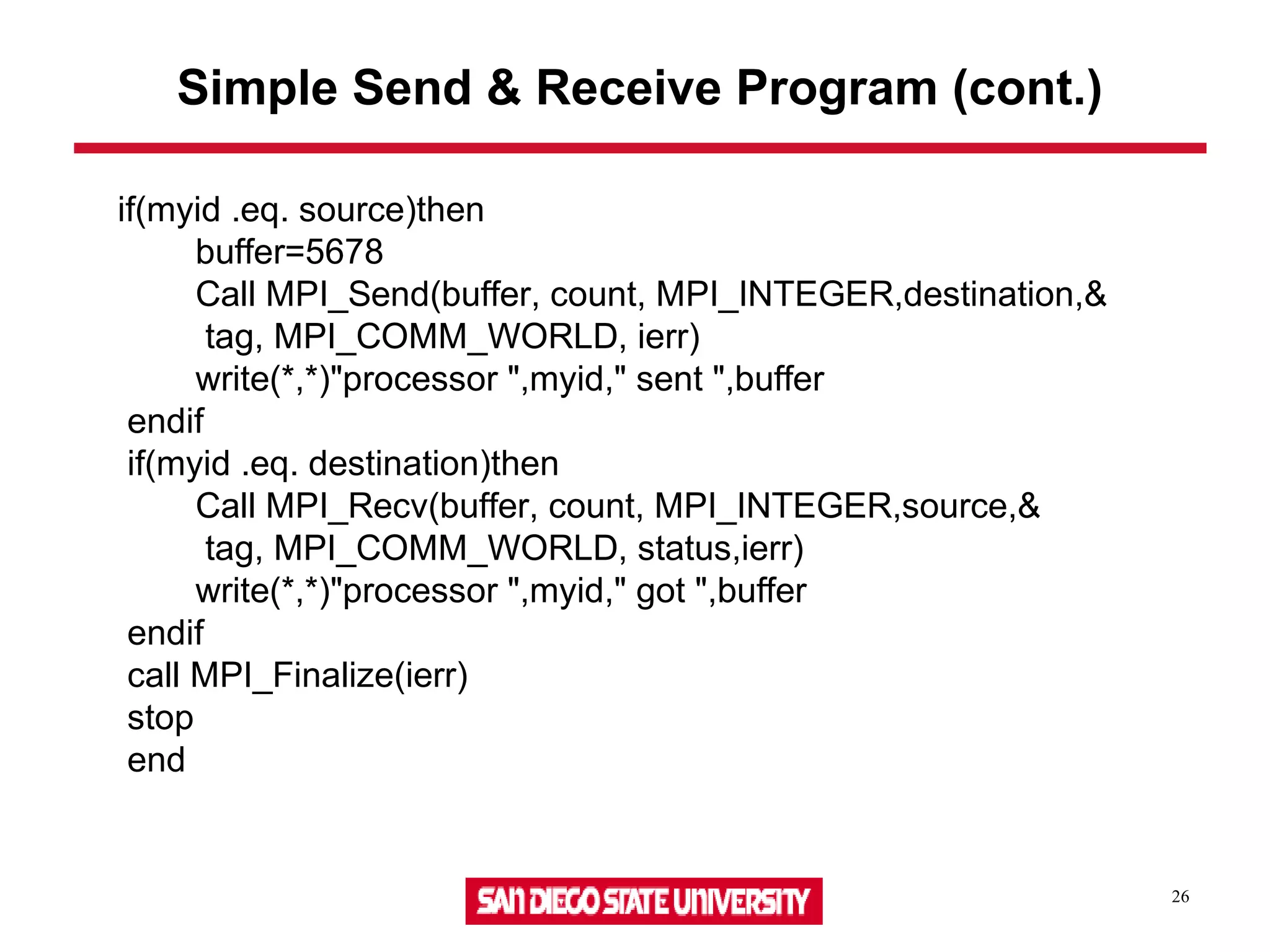

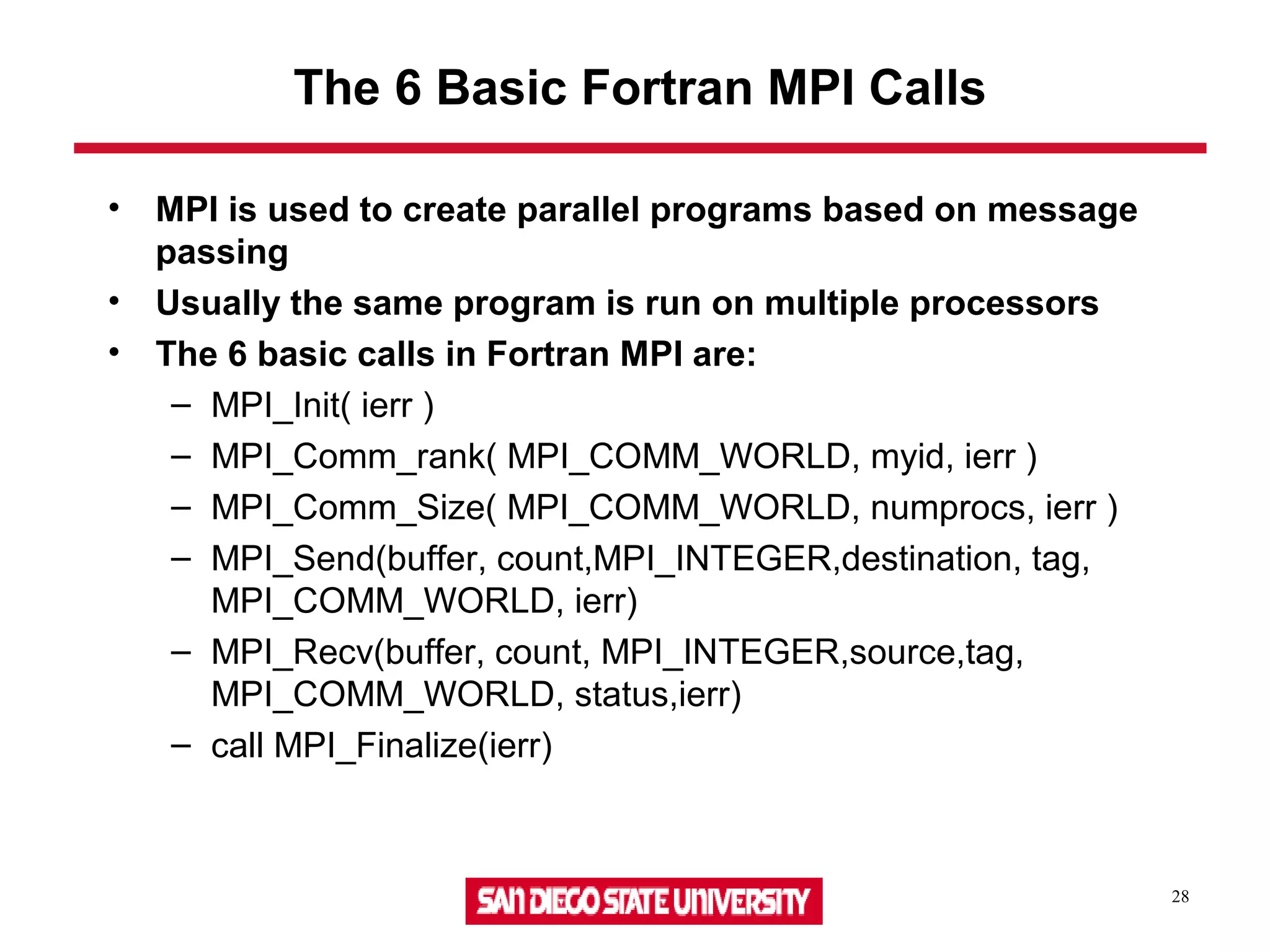

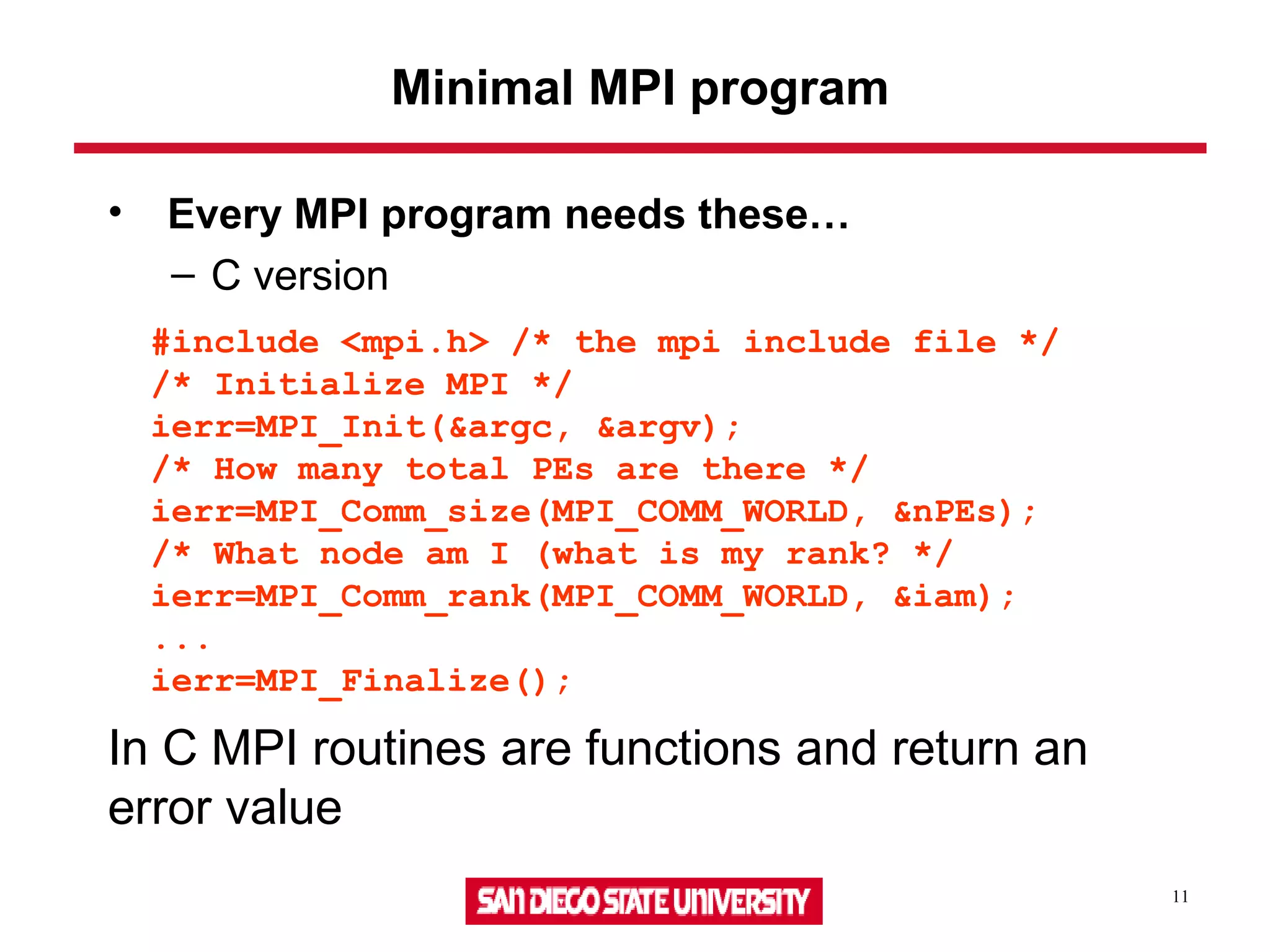

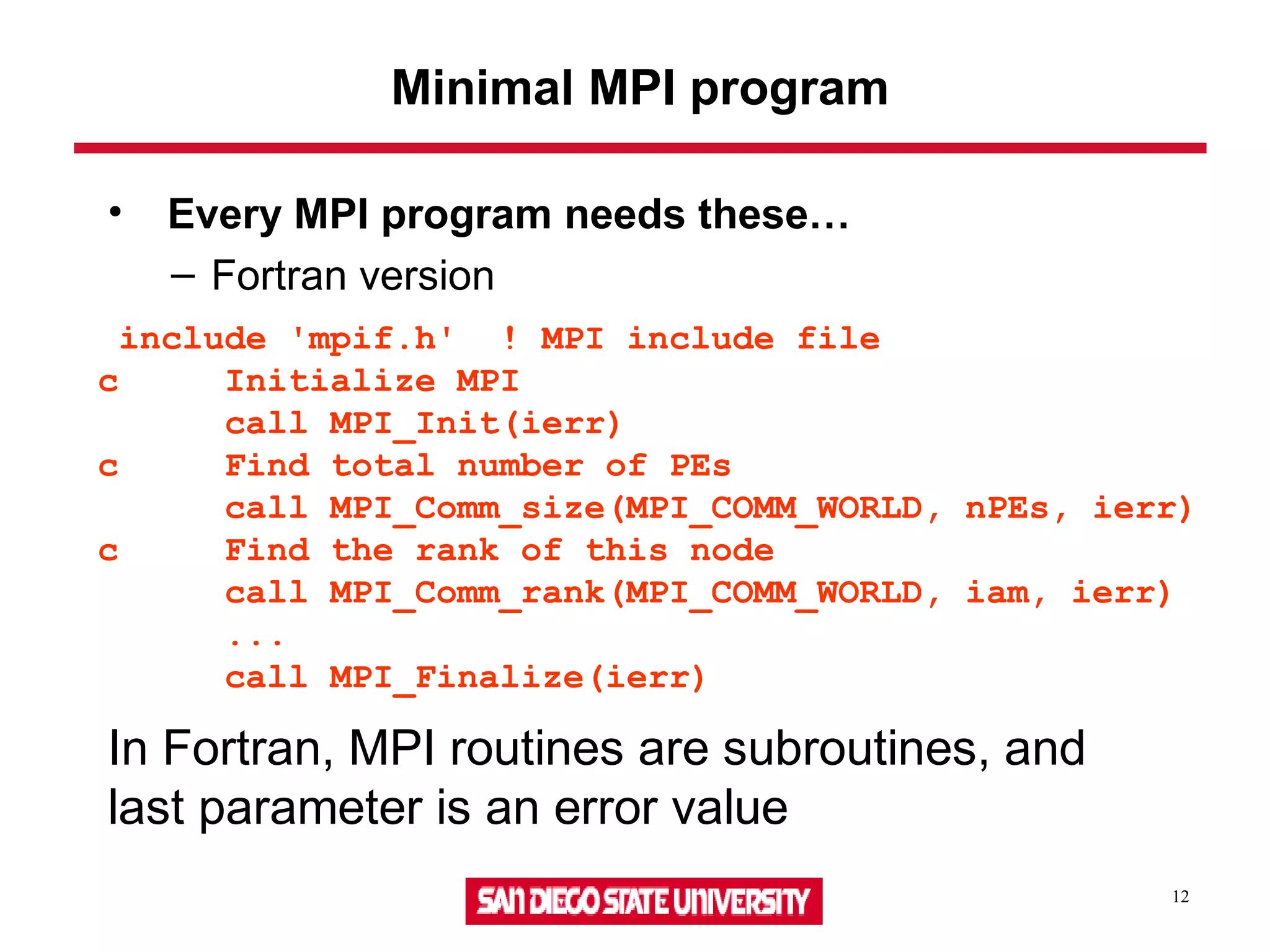

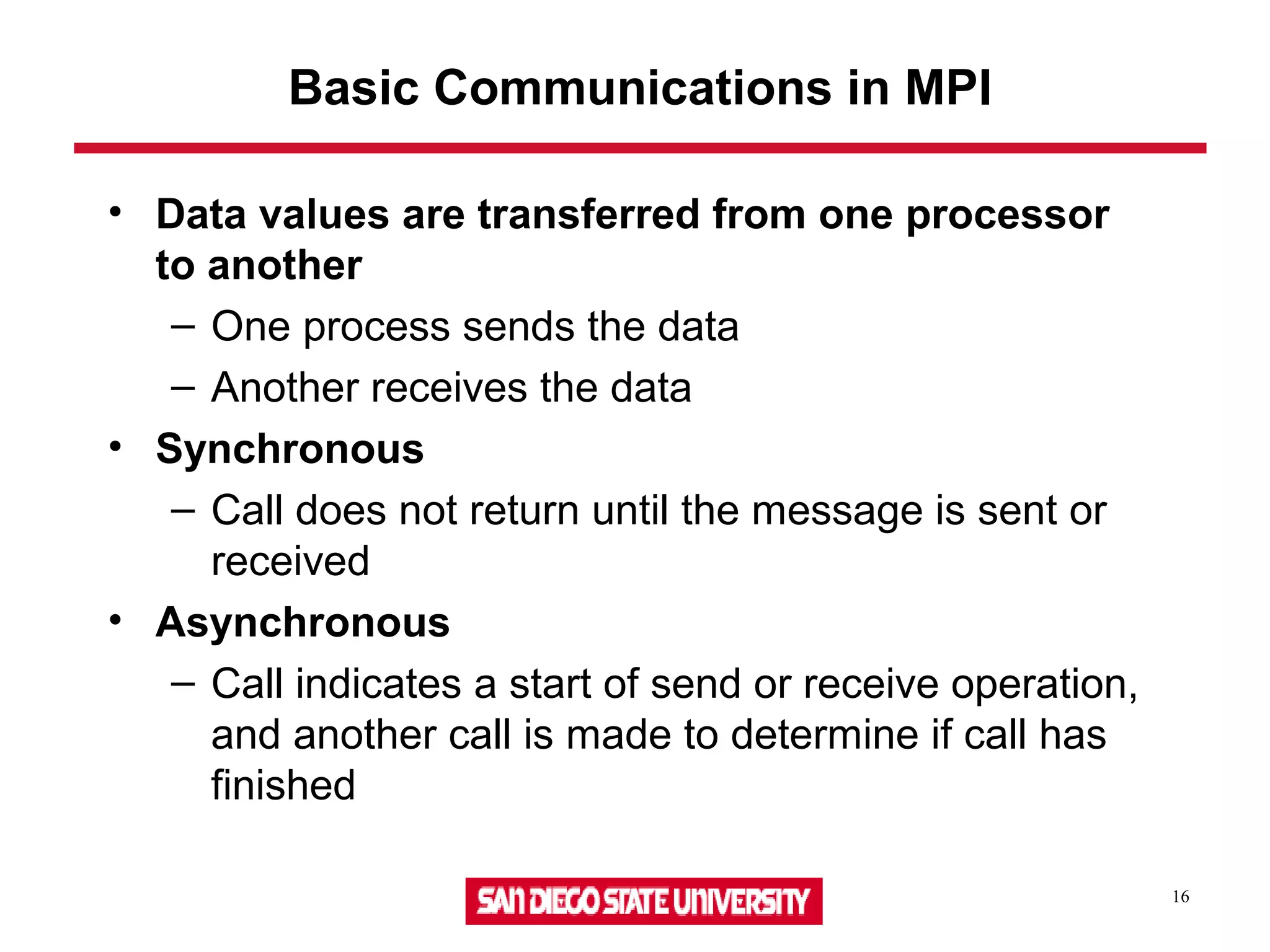

This document provides an overview of the Message Passing Interface (MPI), covering the advantages of the message-passing programming model, background on MPI, key concepts, and examples of basic MPI communication. It describes the six basic MPI calls in C and Fortran: MPI_Init, MPI_Comm_rank, MPI_Comm_size, MPI_Send, MPI_Recv, and MPI_Finalize. A simple example program demonstrates a basic send and receive of an integer between two processes.

![14

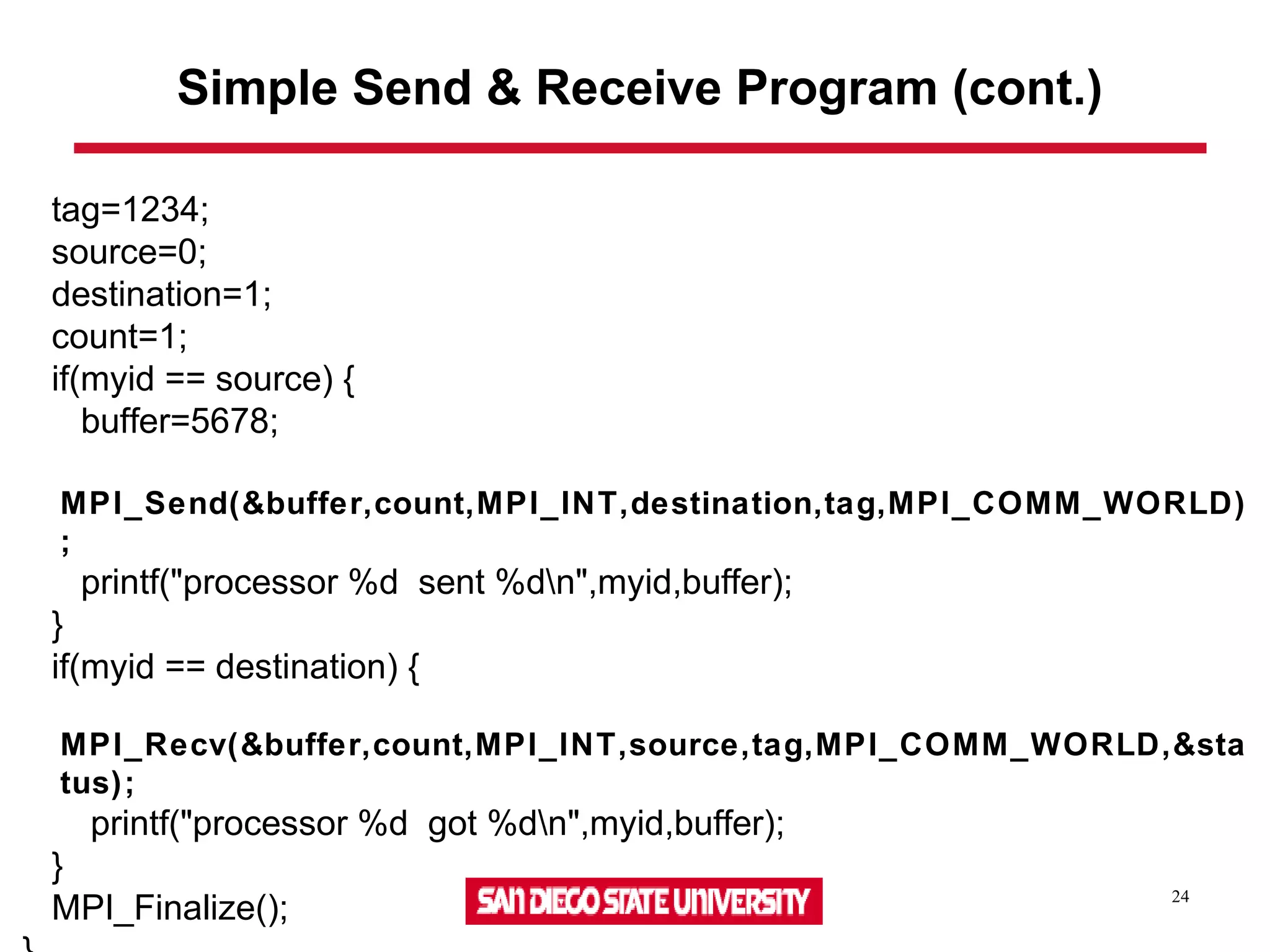

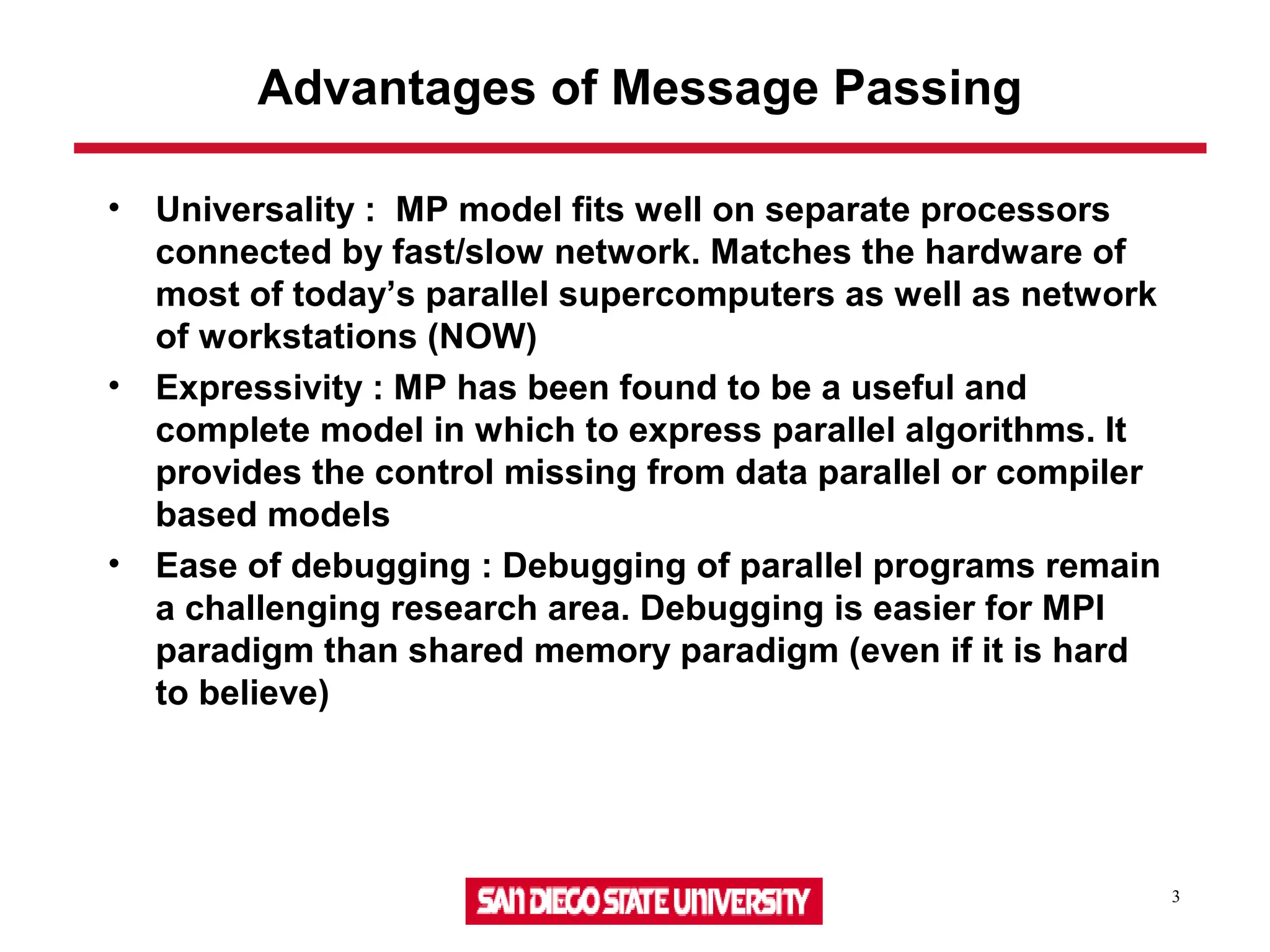

#include <stdio.h>

#include <math.h>

#include <mpi.h>

int main(argc, argv)

int argc;

char *argv[ ];

{

int myid, numprocs;

MPI_Init (&argc, &argv) ;

MPI_Comm_size(MPI_COMM_WORLD, &numprocs) ;

MPI_Comm_rank(MPI_COMM_WORLD, &myid) ;

printf(“Hello from %dn”, myid) ;

printf(“Numprocs is %dn”, numprocs) ;

MPI_Finalize():

}

C/MPI version of “Hello, World”](https://image.slidesharecdn.com/lecture9-150702053544-lva1-app6892/75/Lecture9-14-2048.jpg)

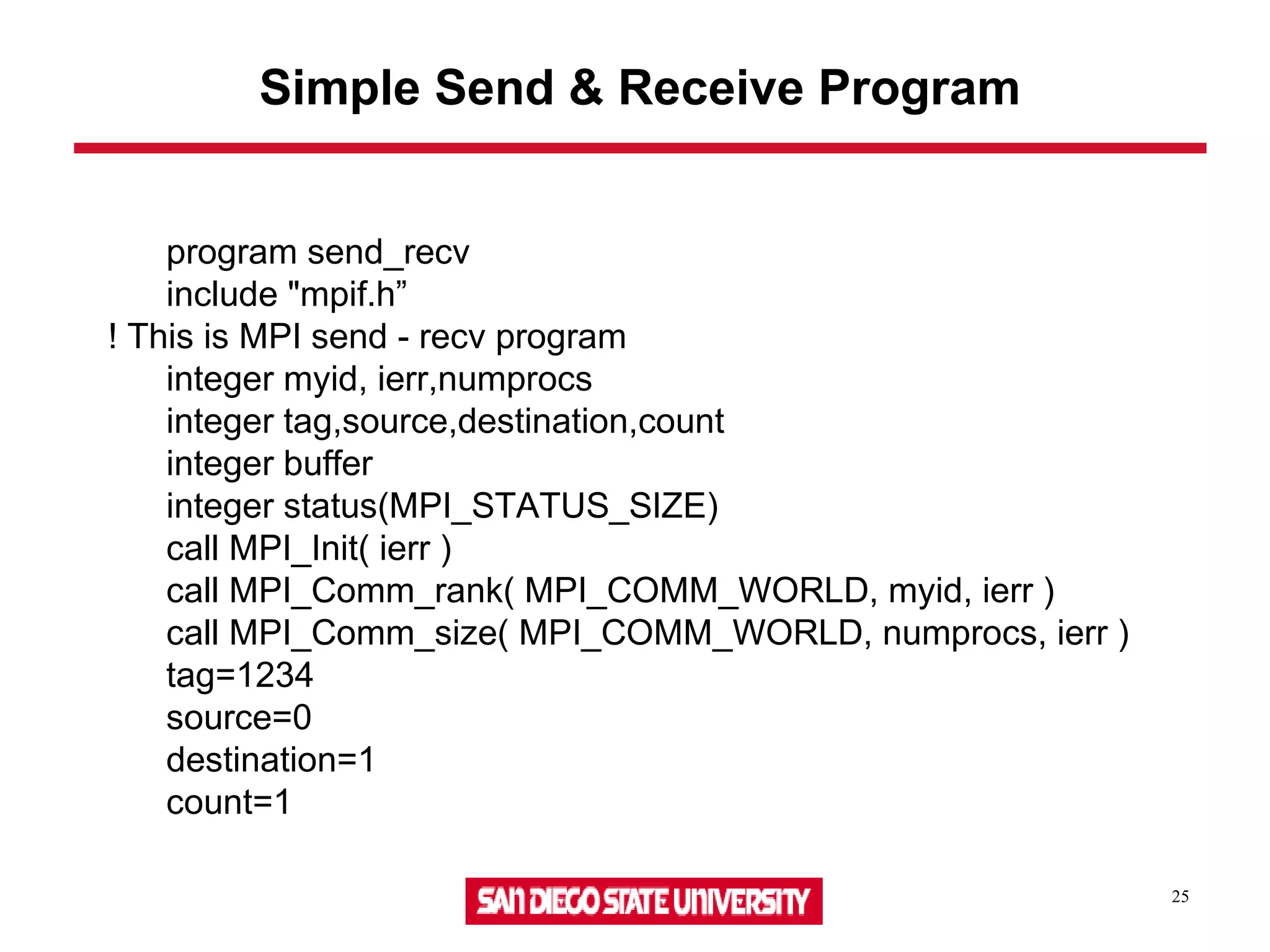

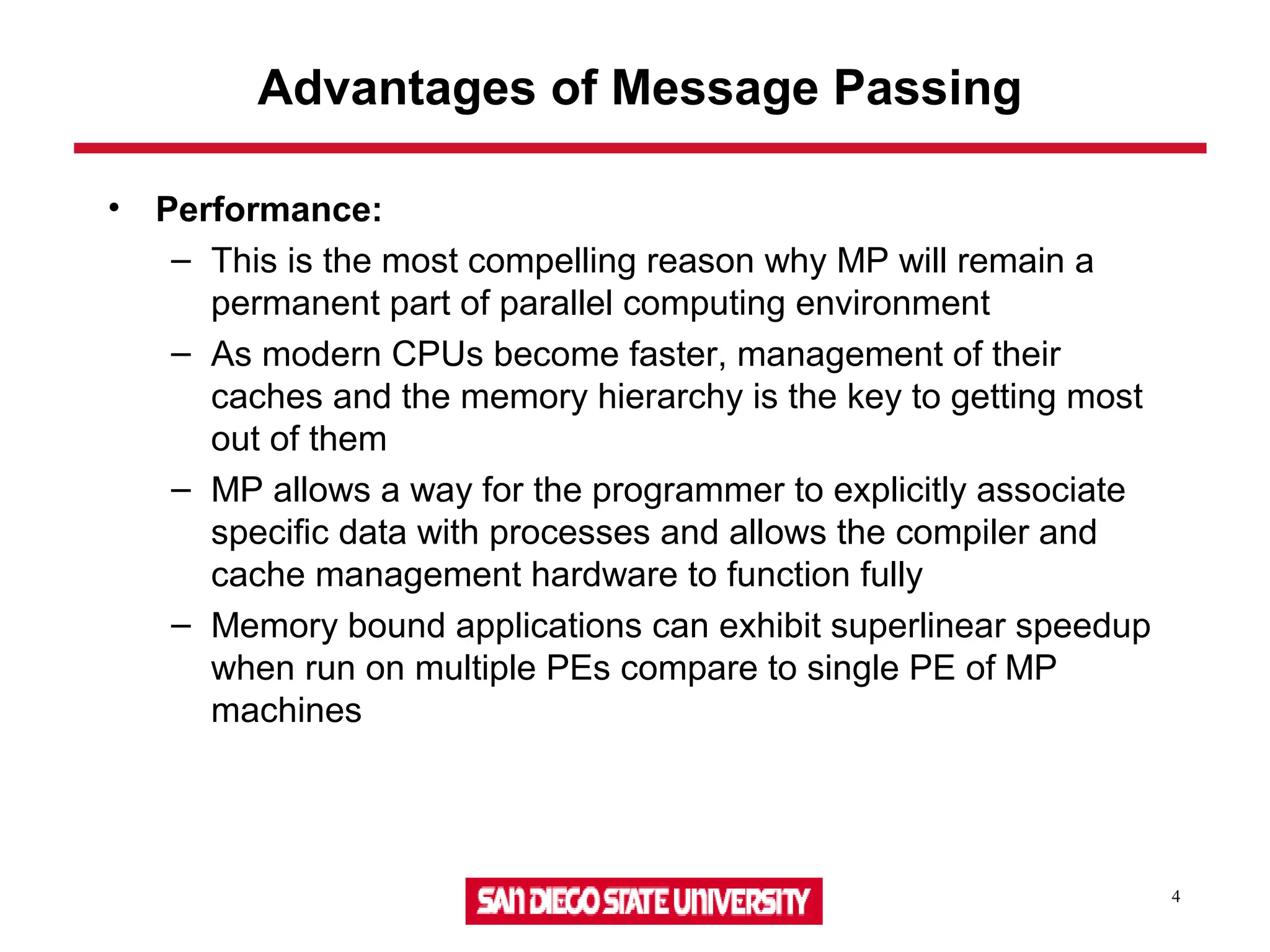

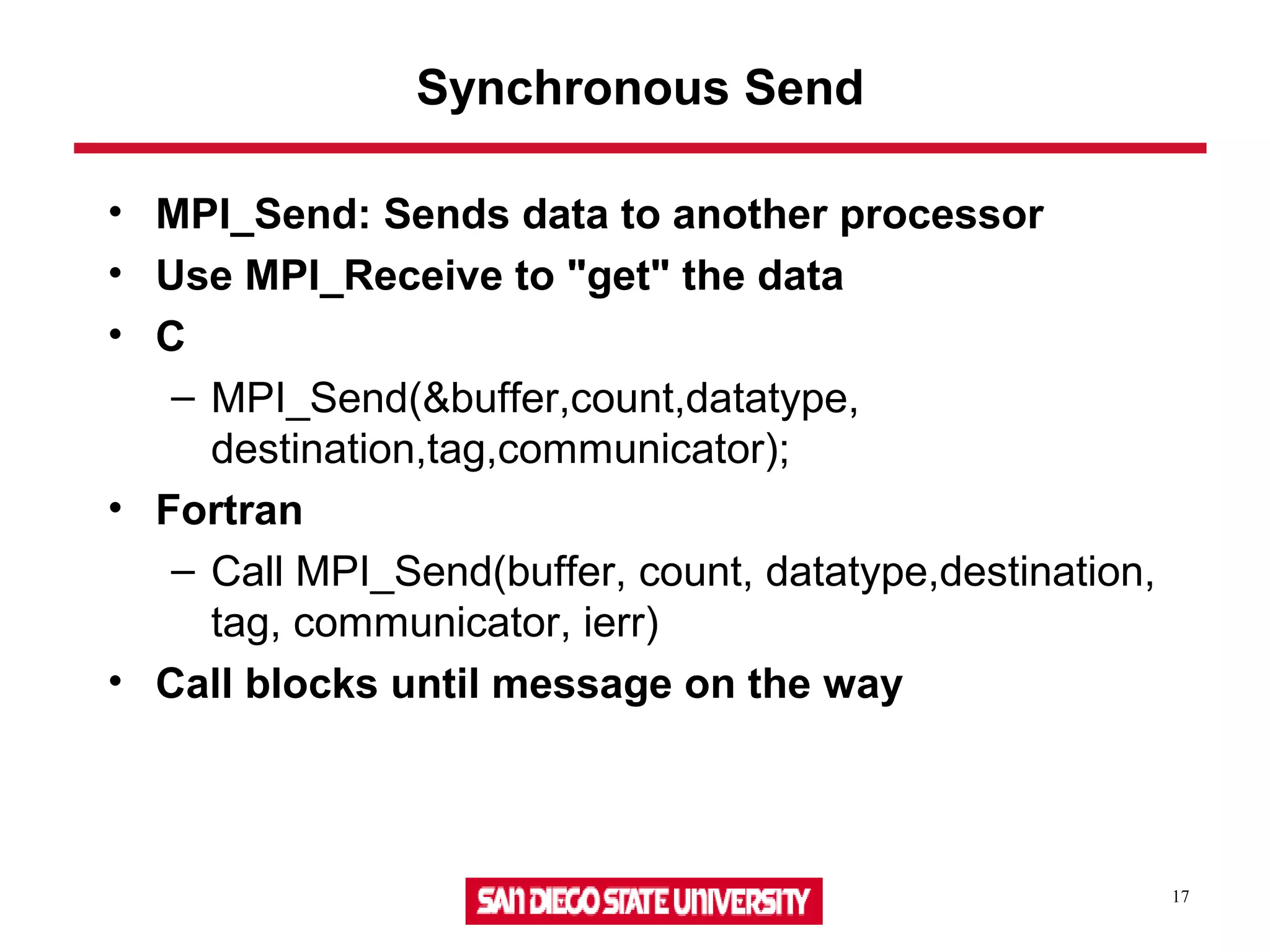

![Simple Send & Receive Program](https://image.slidesharecdn.com/lecture9-150702053544-lva1-app6892/75/Lecture9-23-2048.jpg)

    #include <stdio.h>
    #include "mpi.h"
    #include <math.h>

    /************************************************************
    This is a simple send/receive program in MPI
    ************************************************************/
    int main(int argc, char *argv[])
    {
        int myid, numprocs;
        int tag, source, destination, count;
        int buffer;
        MPI_Status status;

        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
        MPI_Comm_rank(MPI_COMM_WORLD, &myid);
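
The slide excerpt above stops right after the rank query, so the actual send and receive are not shown here. The following is a minimal sketch of how such a program could continue. It is not the original slide's code. It reuses the variable names declared above, but the tag value, the payload value, the choice of rank 0 as sender and rank 1 as receiver, and the check for at least two processes are all assumptions made for illustration.

```c
#include <stdio.h>
#include <mpi.h>

int main(int argc, char *argv[])
{
    int myid, numprocs;
    int tag, source, destination, count;
    int buffer;
    MPI_Status status;

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);

    /* Assumed values, chosen for illustration only. */
    tag = 1234;        /* arbitrary message tag; sender and receiver must agree */
    source = 0;        /* rank that sends */
    destination = 1;   /* rank that receives */
    count = 1;         /* one integer is transferred */

    /* Assumed guard: the exchange needs two distinct ranks. */
    if (numprocs < 2) {
        if (myid == 0)
            fprintf(stderr, "This example needs at least 2 processes.\n");
        MPI_Finalize();
        return 1;
    }

    if (myid == source) {
        buffer = 5678;  /* hypothetical payload */
        MPI_Send(&buffer, count, MPI_INT, destination, tag, MPI_COMM_WORLD);
        printf("Process %d sent %d\n", myid, buffer);
    } else if (myid == destination) {
        /* Blocks until a matching message from `source` with `tag` arrives. */
        MPI_Recv(&buffer, count, MPI_INT, source, tag, MPI_COMM_WORLD, &status);
        printf("Process %d received %d\n", myid, buffer);
    }
    /* Any other ranks take no part in the exchange. */

    MPI_Finalize();
    return 0;
}
```

With common MPI installations such as MPICH or Open MPI, a program like this is typically built with the `mpicc` compiler wrapper and launched on two processes with `mpirun -np 2`. The exact commands depend on the local installation.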