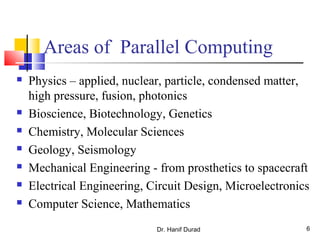

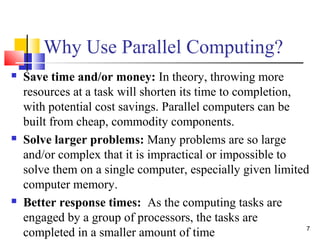

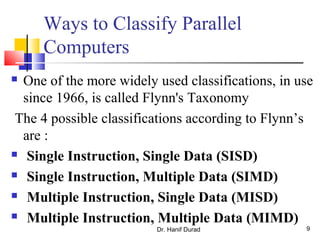

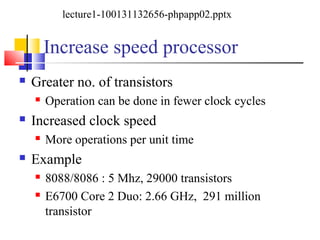

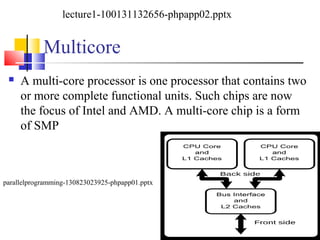

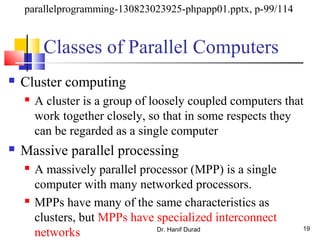

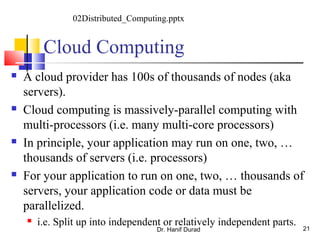

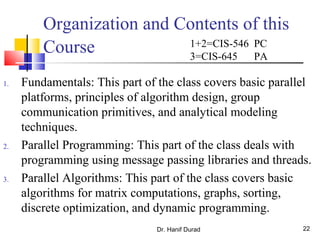

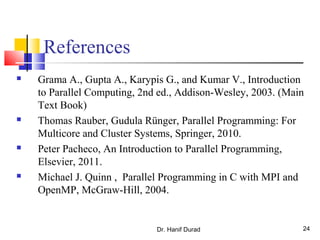

This document outlines the policies and contents of an introductory course on parallel computing. The course is divided into three sections: fundamentals of parallel platforms, parallel programming using message passing and threads, and parallel algorithms. It also introduces concepts such as multicore processing, GPGPU computing, and parallel programming models. References for further reading on parallel and distributed computing are provided.