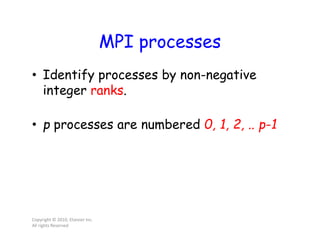

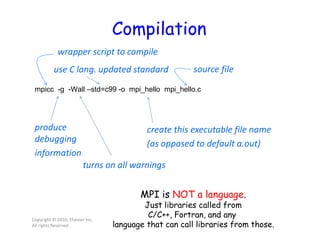

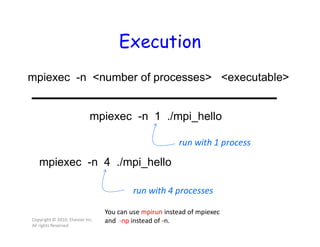

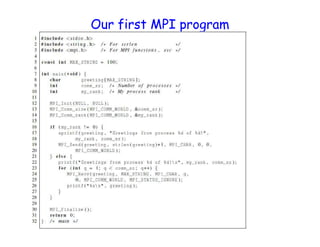

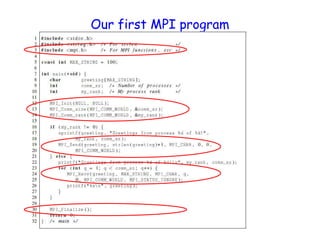

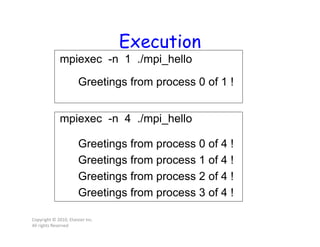

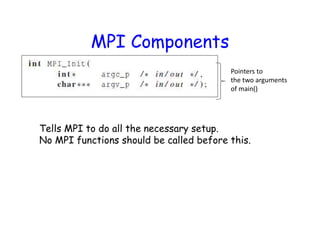

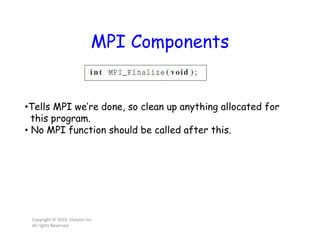

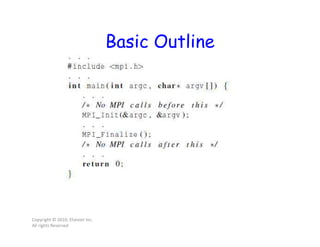

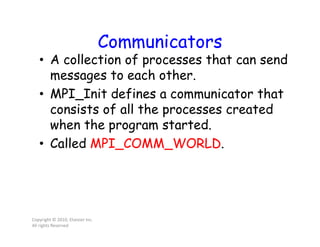

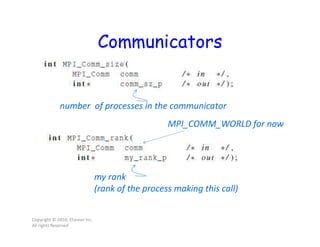

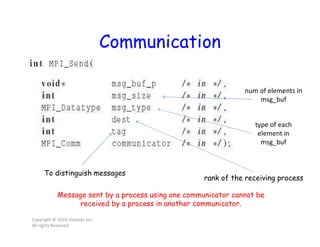

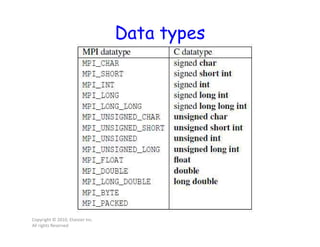

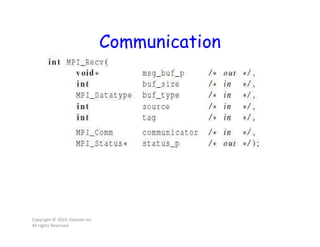

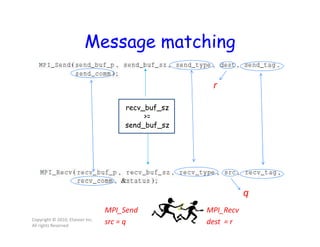

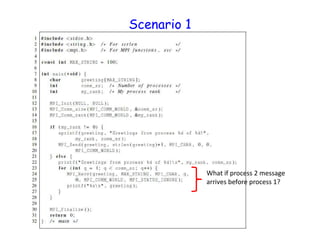

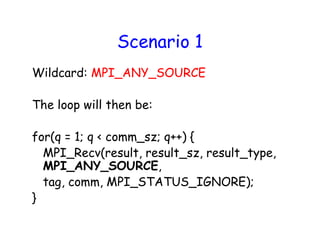

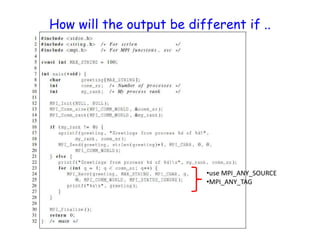

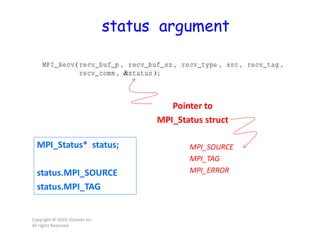

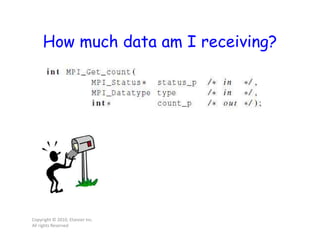

This document introduces parallel computing with MPI (Message Passing Interface). It covers the core MPI concepts of processes, communicators, and data types, along with point-to-point communication using `MPI_Send` and `MPI_Recv`. Code examples show how to initialize MPI, identify each process by its rank, compile and run MPI programs, and send and receive messages between processes. It also discusses the non-deterministic order in which messages can arrive and how wildcards such as `MPI_ANY_SOURCE` and `MPI_ANY_TAG` are used to handle it.