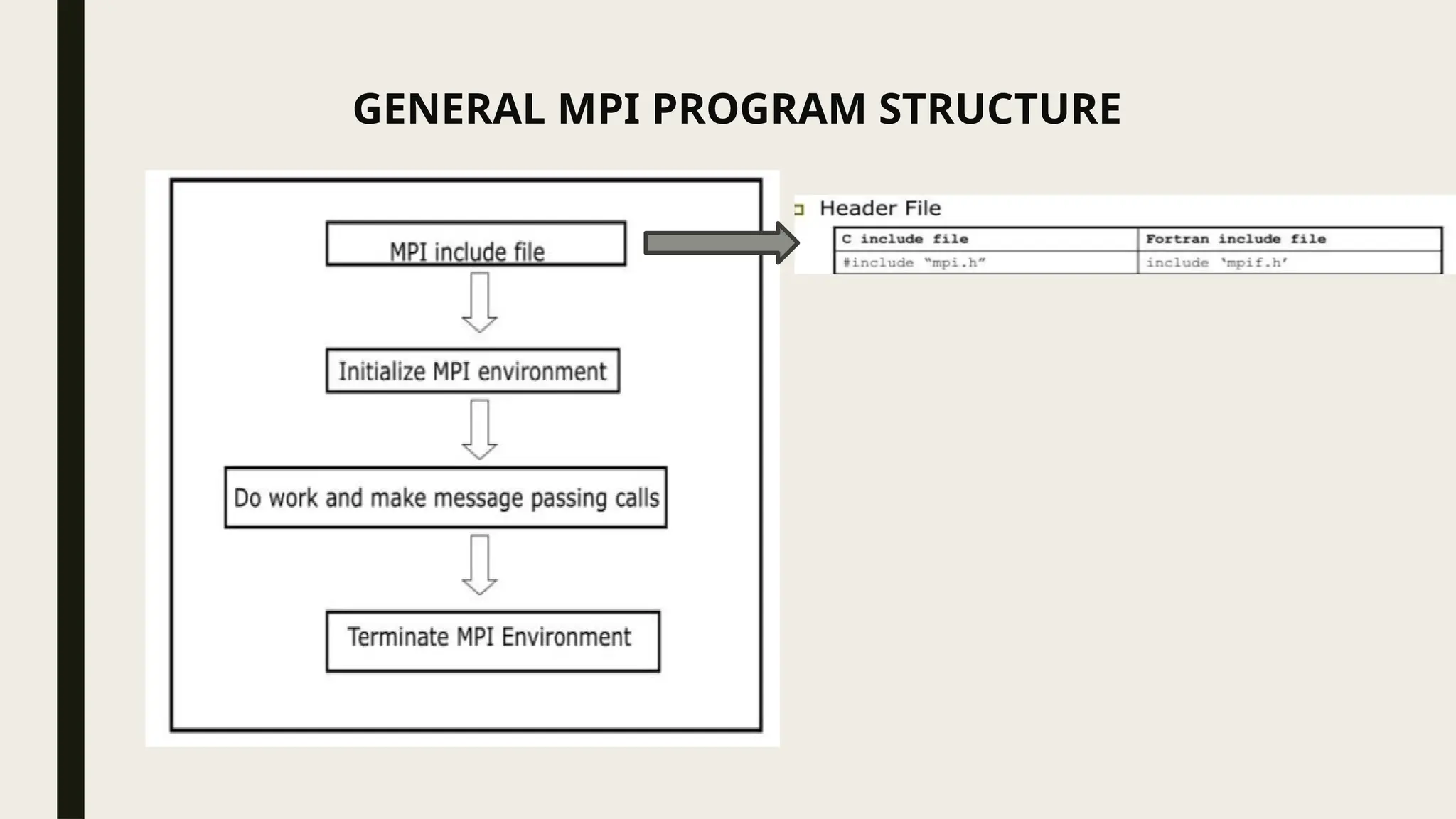

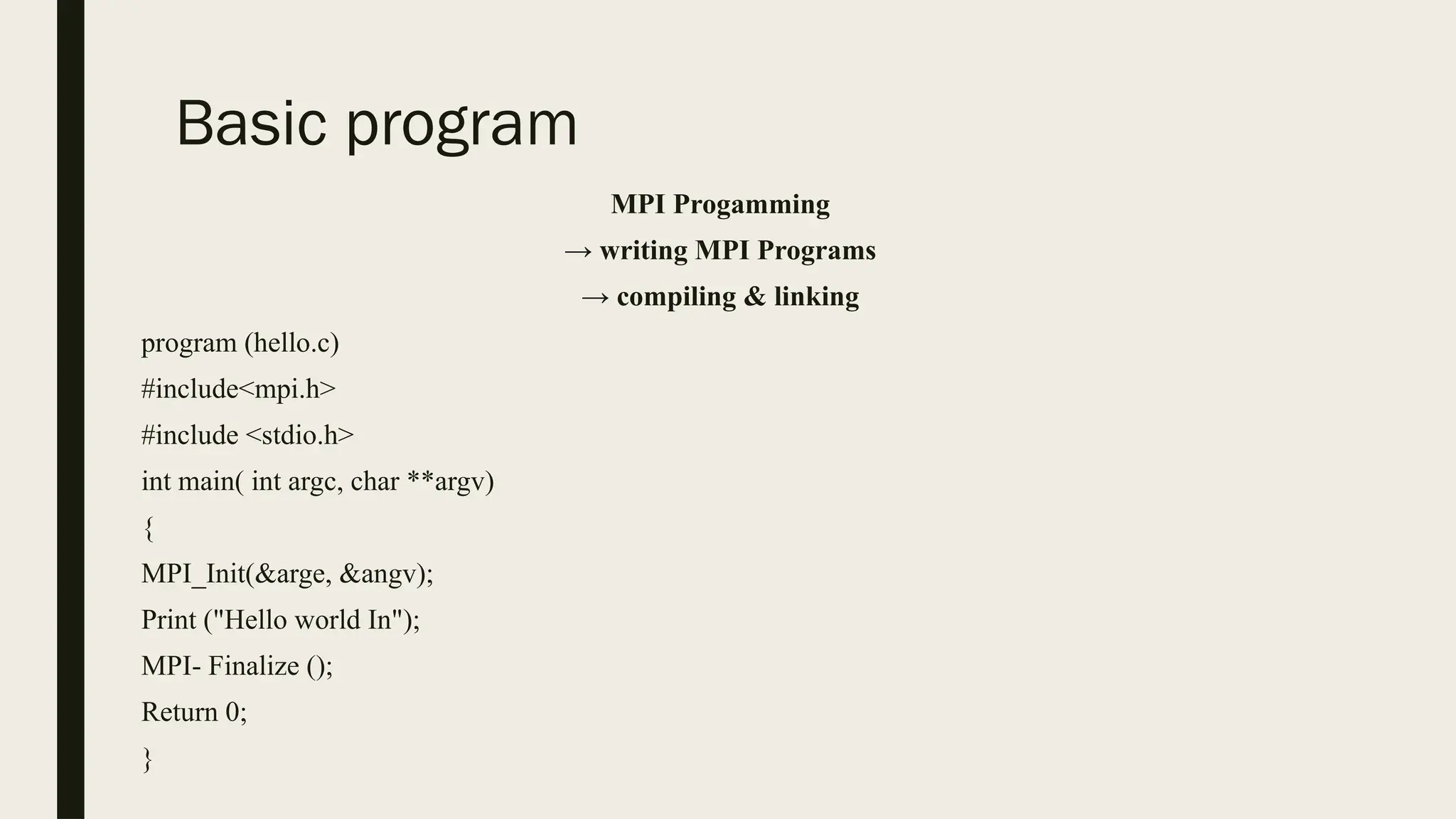

The document provides an overview of the Message Passing Interface (MPI), a standardized library interface for communication among parallel processes in distributed computing. It covers key concepts such as message passing and inter-process communication, explains how MPI programs scale across a range of hardware platforms, and surveys common MPI implementations and their benefits. It also outlines the MPI communication modes, the core message-passing operations, and the basic structure of an MPI program.