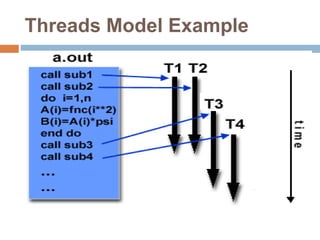

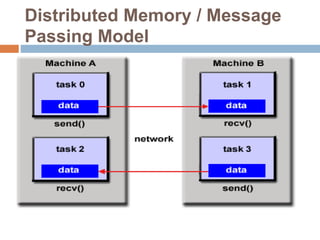

The document discusses several parallel programming models, including the shared memory, message passing, threads, and data-parallel models. It outlines the advantages and disadvantages of each model, describing how each handles task management, data locality, and communication between processes. It also covers specific implementations such as POSIX threads, OpenMP, and the Message Passing Interface (MPI), highlighting their features and typical use.
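
To make the contrast between the shared memory/threads model and the message passing model concrete, here is a minimal sketch in Python. It is an illustration only, not code from the document: the function names `shared_memory_sum` and `message_passing_sum` are hypothetical, and the message-passing half uses threads with a `queue.Queue` to imitate the send/receive style rather than a real MPI library.

```python
import queue
import threading


def shared_memory_sum(data, num_workers=4):
    """Threads / shared-memory style: all workers update one shared
    variable, so access must be serialized with a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)   # local work, no synchronization needed
        with lock:             # protect the shared total
            total += partial

    # Strided split of the data into one chunk per worker.
    chunks = [data[i::num_workers] for i in range(num_workers)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(data, num_workers=4):
    """Message-passing style (imitated with a queue, NOT real MPI):
    workers never touch shared state; each sends its partial result
    as a message, and the receiver combines them."""
    mailbox = queue.Queue()

    def worker(chunk):
        mailbox.put(sum(chunk))   # "send" the partial sum

    chunks = [data[i::num_workers] for i in range(num_workers)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    # "Receive" exactly one message per worker and combine them.
    result = sum(mailbox.get() for _ in range(num_workers))
    for t in threads:
        t.join()
    return result
```

In the first function the programmer is responsible for protecting shared data with a lock; in the second, all cooperation happens through explicit messages, which is the trade-off the two models are usually compared on.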