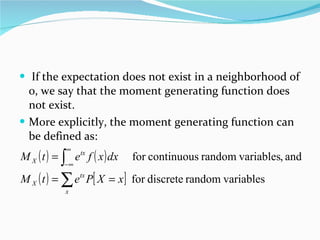

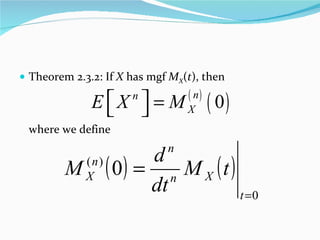

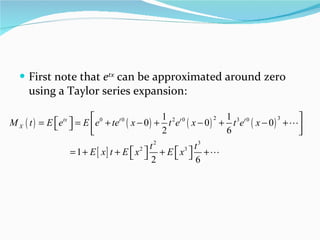

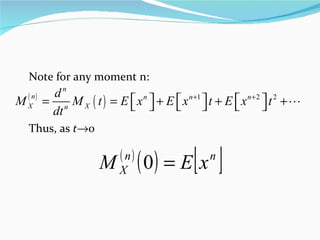

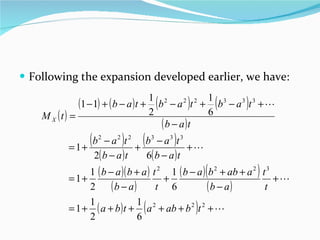

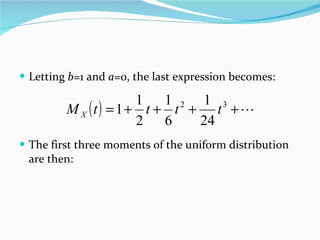

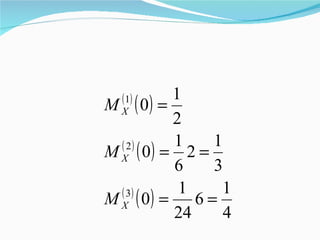

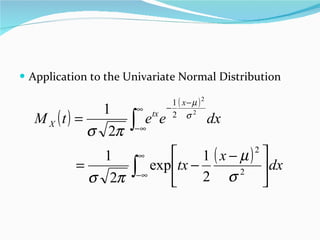

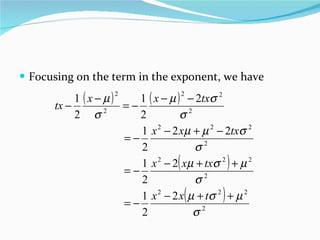

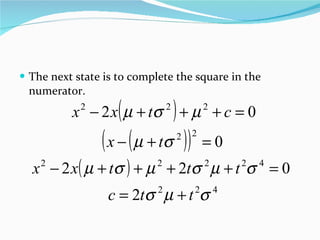

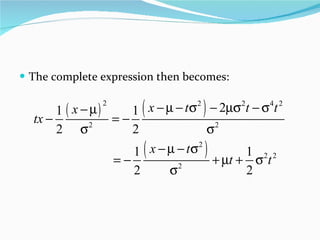

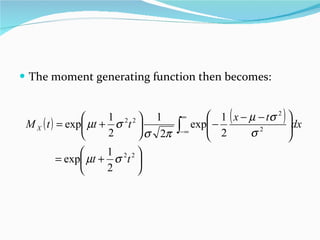

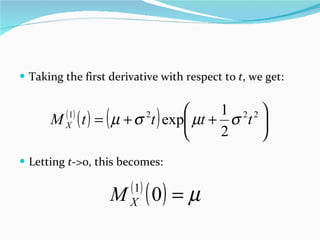

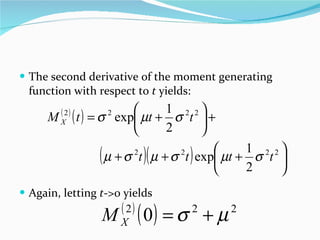

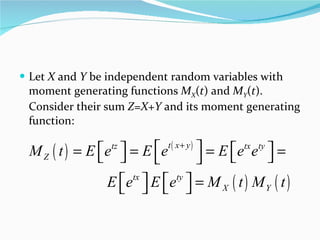

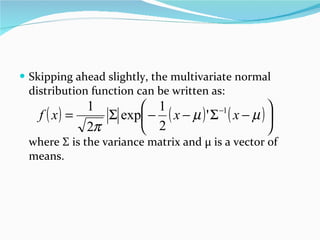

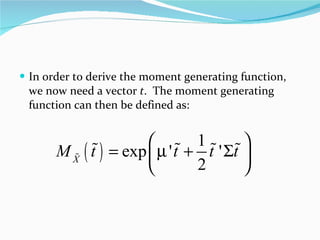

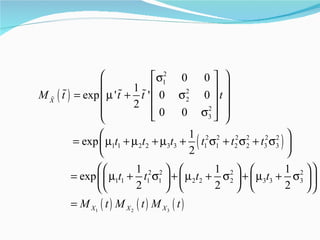

The document defines the moment generating function (MGF) of a random variable $X$ as $M_X(t) = E[e^{tX}]$, provided this expectation exists for all $t$ in some neighborhood of 0. When the MGF exists, it uniquely determines the distribution of $X$. It also generates the moments: $E[X^n] = M_X^{(n)}(0)$, the $n$-th derivative evaluated at $t = 0$. For the uniform distribution on $[0, 1]$, the MGF is $(e^t - 1)/t$ for $t \neq 0$, with $M_X(0) = 1$. For the normal distribution with mean $\mu$ and variance $\sigma^2$, the MGF is $e^{\mu t + \sigma^2 t^2/2}$. If $X$ and $Y$ are independent, the MGF of their sum is the product of their individual MGFs: $M_{X+Y}(t) = M_X(t)\,M_Y(t)$.
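As a rough numerical check of the formulas summarized above, the following Python sketch (standard library only) compares the closed-form uniform MGF against a midpoint-rule approximation of $E[e^{tX}]$, recovers the first two moments from each MGF by finite differences at $t = 0$, and checks the product rule on a sum of two independent normals. The helper names, the midpoint rule, and the finite-difference step size are illustrative assumptions, not anything taken from the slides.

```python
import math
from typing import Callable

# Hypothetical helper names chosen for illustration; not from the original slides.

def mgf_uniform01(t: float) -> float:
    """Closed-form MGF of Uniform[0, 1]: (e^t - 1)/t, extended by M(0) = 1."""
    if t == 0.0:
        return 1.0
    return math.expm1(t) / t  # expm1 avoids cancellation for small |t|

def mgf_normal(t: float, mu: float, sigma: float) -> float:
    """Closed-form MGF of N(mu, sigma^2): exp(mu*t + sigma^2 * t^2 / 2)."""
    return math.exp(mu * t + 0.5 * sigma**2 * t**2)

def mgf_numeric_uniform01(t: float, n: int = 10_000) -> float:
    """Approximate E[e^{tX}] for X ~ Uniform[0, 1] with the midpoint rule on [0, 1]."""
    h = 1.0 / n
    return h * sum(math.exp(t * (i + 0.5) * h) for i in range(n))

def moment_from_mgf(mgf: Callable[[float], float], k: int, h: float = 1e-3) -> float:
    """Approximate E[X^k] = M^(k)(0) by a central finite difference (k = 1 or 2 only)."""
    if k == 1:
        return (mgf(h) - mgf(-h)) / (2 * h)
    if k == 2:
        return (mgf(h) - 2 * mgf(0.0) + mgf(-h)) / h**2
    raise ValueError("this sketch only handles k = 1 or k = 2")

if __name__ == "__main__":
    # Closed form vs. numerical expectation for the uniform MGF at t = 0.7.
    print(mgf_uniform01(0.7), mgf_numeric_uniform01(0.7))

    # Moments of Uniform[0, 1]; the exact values are E[X] = 1/2 and E[X^2] = 1/3.
    print(moment_from_mgf(mgf_uniform01, 1), moment_from_mgf(mgf_uniform01, 2))

    # Moments of N(2, 3^2); the exact values are E[X] = 2 and E[X^2] = 2^2 + 3^2 = 13.
    normal = lambda t: mgf_normal(t, 2.0, 3.0)
    print(moment_from_mgf(normal, 1), moment_from_mgf(normal, 2))

    # Product rule: for independent X ~ N(1, 2^2) and Y ~ N(-0.5, 1.5^2),
    # X + Y ~ N(0.5, 2^2 + 1.5^2), so M_X(t) * M_Y(t) should equal M_{X+Y}(t).
    t = 0.4
    lhs = mgf_normal(t, 1.0, 2.0) * mgf_normal(t, -0.5, 1.5)
    rhs = mgf_normal(t, 0.5, math.sqrt(2.0**2 + 1.5**2))
    print(math.isclose(lhs, rhs, rel_tol=1e-9))
```

Finite differences only approximate $M_X^{(n)}(0)$: the step `h` trades truncation error against floating-point cancellation, which is why this sketch stops at the second moment.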

![Definition 2.3.3. Let X be a random variable with cdf F_X. The moment generating function (mgf) of X (or F_X), denoted M_X(t), is M_X(t) = E[e^{tX}], provided that the expectation exists for t in some neighborhood of 0. That is, there is an h > 0 such that, for all t in -h < t < h, E[e^{tX}] exists.](https://image.slidesharecdn.com/momentgeneratingfunction-111202085240-phpapp01/85/Moment-generating-function-2-320.jpg)
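As a worked example of Definition 2.3.3, take $X \sim \text{Uniform}[0, 1]$, the case quoted in the summary. The derivation below computes $M_X(t)$ directly from the definition and then reads off every moment by matching the power series of $M_X(t)$ against $\sum_k E[X^k]\, t^k / k!$. The series-matching step is a standard route chosen here for illustration; the slides may obtain the moments by differentiation instead.

$$
\begin{aligned}
M_X(t) &= E[e^{tX}] = \int_0^1 e^{tx}\,dx = \left[\frac{e^{tx}}{t}\right]_0^1 = \frac{e^t - 1}{t}, \qquad t \neq 0, \qquad M_X(0) = 1, \\
\frac{e^t - 1}{t} &= \frac{1}{t}\sum_{n=1}^{\infty} \frac{t^n}{n!} = \sum_{k=0}^{\infty} \frac{t^k}{(k+1)!}, \\
\sum_{k=0}^{\infty} E[X^k]\,\frac{t^k}{k!} &= \sum_{k=0}^{\infty} \frac{t^k}{(k+1)!}
\quad\Longrightarrow\quad
E[X^k] = M_X^{(k)}(0) = \frac{k!}{(k+1)!} = \frac{1}{k+1}.
\end{aligned}
$$

This agrees with the direct computation $E[X^k] = \int_0^1 x^k\,dx = 1/(k+1)$; for example, $E[X] = 1/2$ and $E[X^2] = 1/3$.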