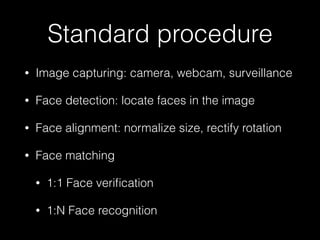

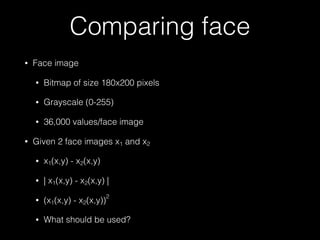

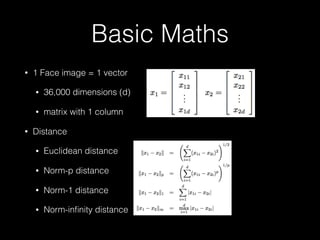

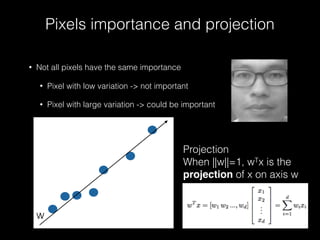

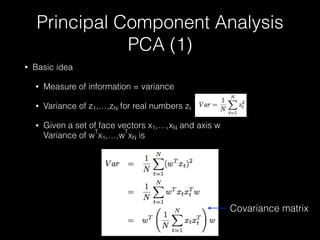

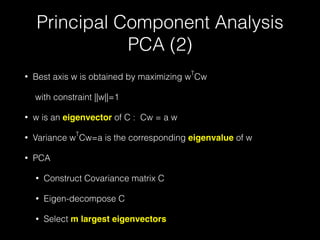

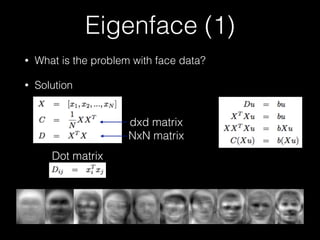

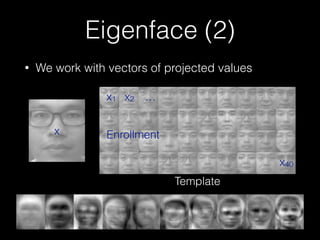

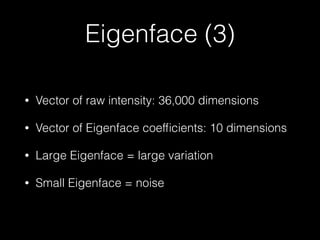

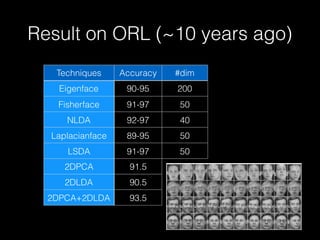

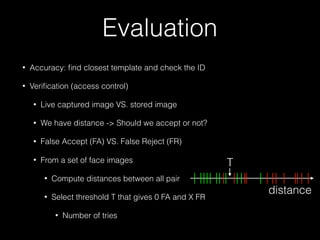

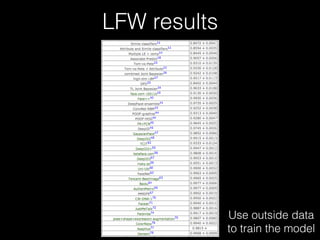

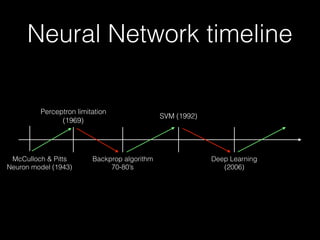

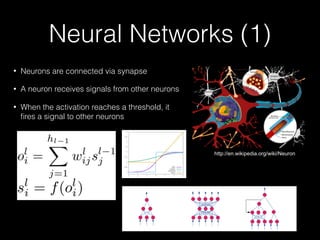

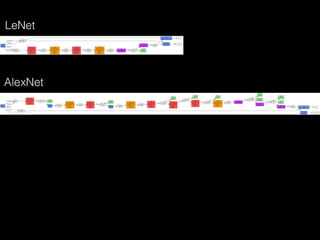

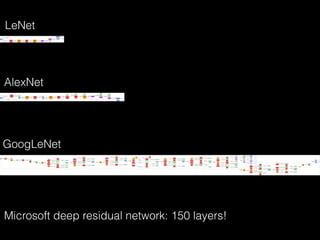

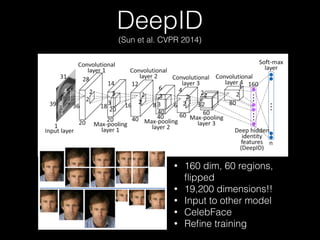

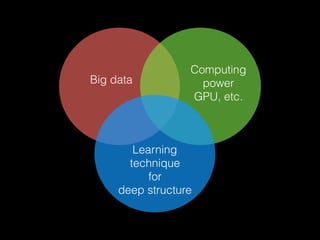

The document outlines the standard stages of a face recognition pipeline (image capture, face detection, alignment, and matching) and the role deep learning plays in it. It discusses principal component analysis (PCA), the eigenfaces method built on it, and more advanced techniques for improving recognition accuracy under difficult conditions such as occlusion, varying lighting, and pose changes. It also covers developments in deep neural networks, in particular convolutional neural networks (CNNs), and explains why they are central to modern face recognition systems.
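The eigenfaces approach mentioned above can be illustrated with a short sketch. It assumes faces have already been detected, aligned, and cropped to equal-sized grayscale images, then flattened into row vectors. The function names (`fit_eigenfaces`, `project`, `match`) are hypothetical, and matching by Euclidean nearest neighbour in the eigenface space is one common choice, not necessarily the exact method used in the slides.

```python
import numpy as np


def fit_eigenfaces(faces: np.ndarray, k: int):
    """Learn the mean face and the top-k eigenfaces.

    faces: array of shape (n_samples, n_pixels), one flattened aligned face per row.
    """
    mean = faces.mean(axis=0)
    centered = faces - mean
    # Rows of vt are the principal axes of the centered data, i.e. the
    # eigenvectors of the covariance matrix, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    eigenfaces = vt[:k]
    return mean, eigenfaces


def project(faces: np.ndarray, mean: np.ndarray, eigenfaces: np.ndarray) -> np.ndarray:
    """Map faces to their k-dimensional eigenface coefficients."""
    return (faces - mean) @ eigenfaces.T


def match(probe: np.ndarray, gallery_codes: np.ndarray,
          mean: np.ndarray, eigenfaces: np.ndarray):
    """Return (index, distance) of the closest gallery face to a single probe face."""
    code = project(probe[None, :], mean, eigenfaces)[0]
    dists = np.linalg.norm(gallery_codes - code, axis=1)
    best = int(np.argmin(dists))
    return best, float(dists[best])


if __name__ == "__main__":
    # Synthetic stand-in data: 10 "faces" of 8x8 = 64 pixels each.
    rng = np.random.default_rng(0)
    gallery = rng.normal(size=(10, 64))
    mean, eigenfaces = fit_eigenfaces(gallery, k=5)
    gallery_codes = project(gallery, mean, eigenfaces)

    # A slightly perturbed copy of gallery face 3 should match identity 3.
    probe = gallery[3] + rng.normal(scale=1e-3, size=64)
    print(match(probe, gallery_codes, mean, eigenfaces))
```

Using the SVD of the centered data avoids forming the full pixel-by-pixel covariance matrix, which matters for real images with thousands of pixels. In practice a distance threshold would also be needed to reject faces that are not in the gallery at all.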