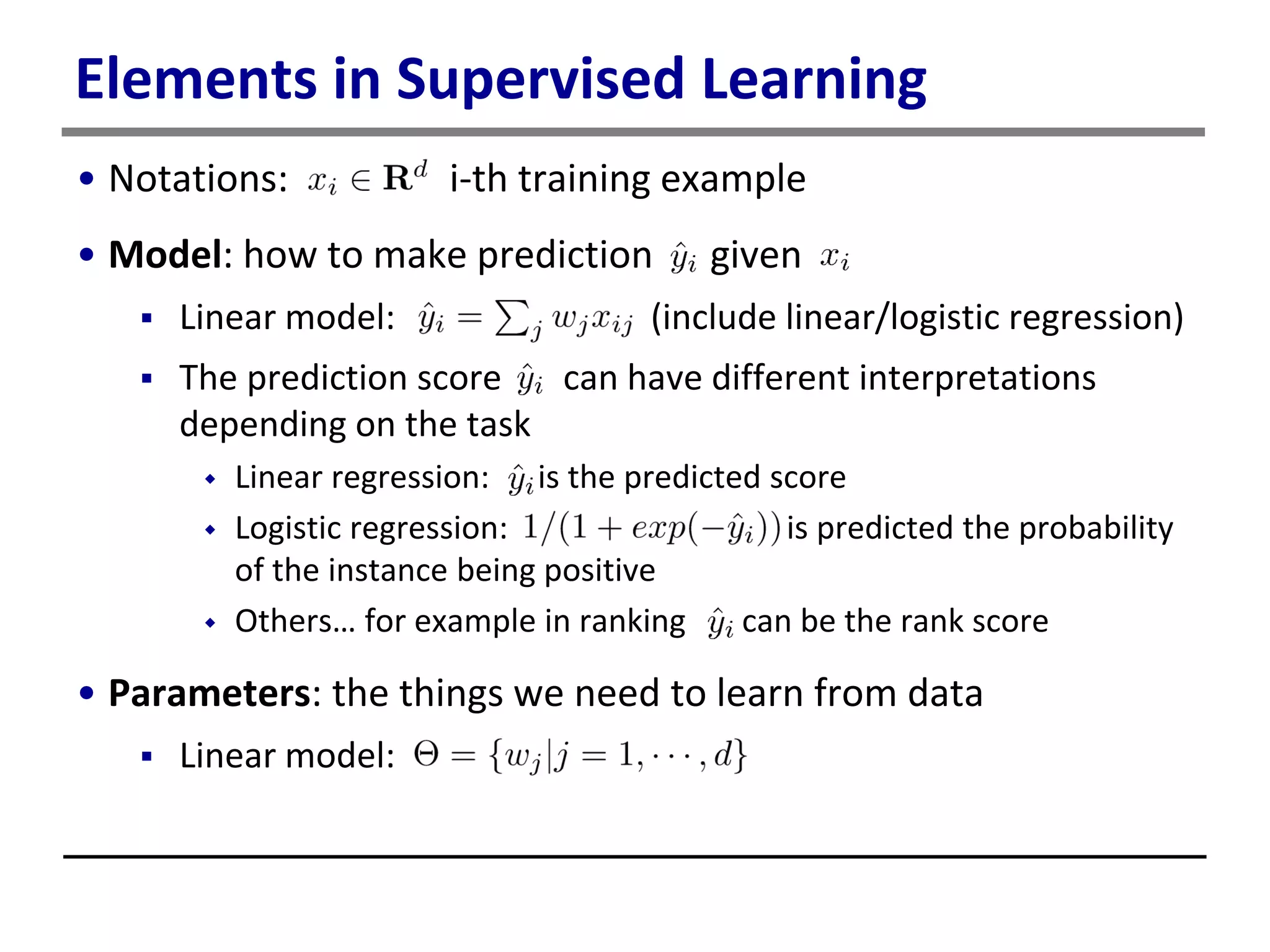

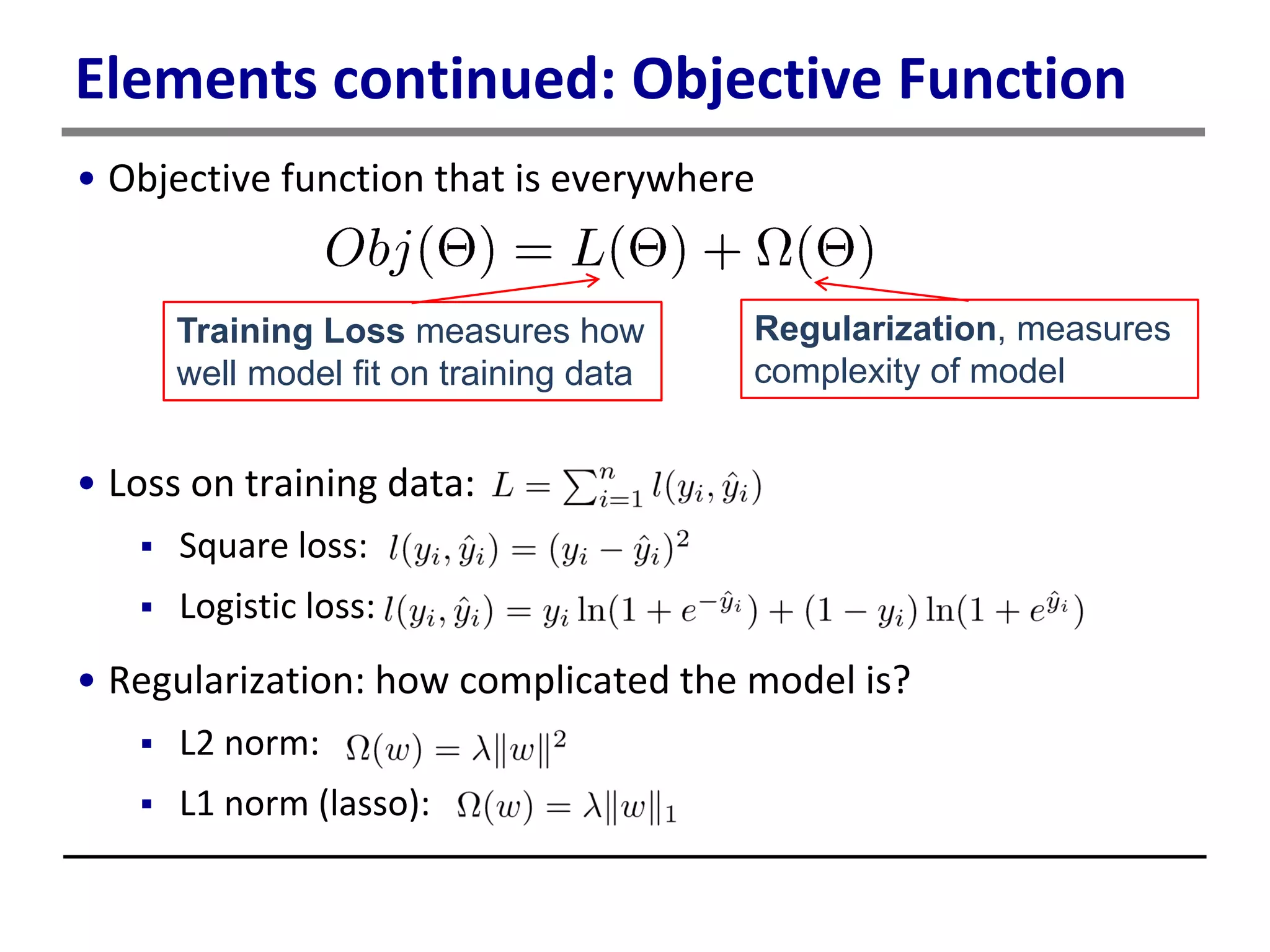

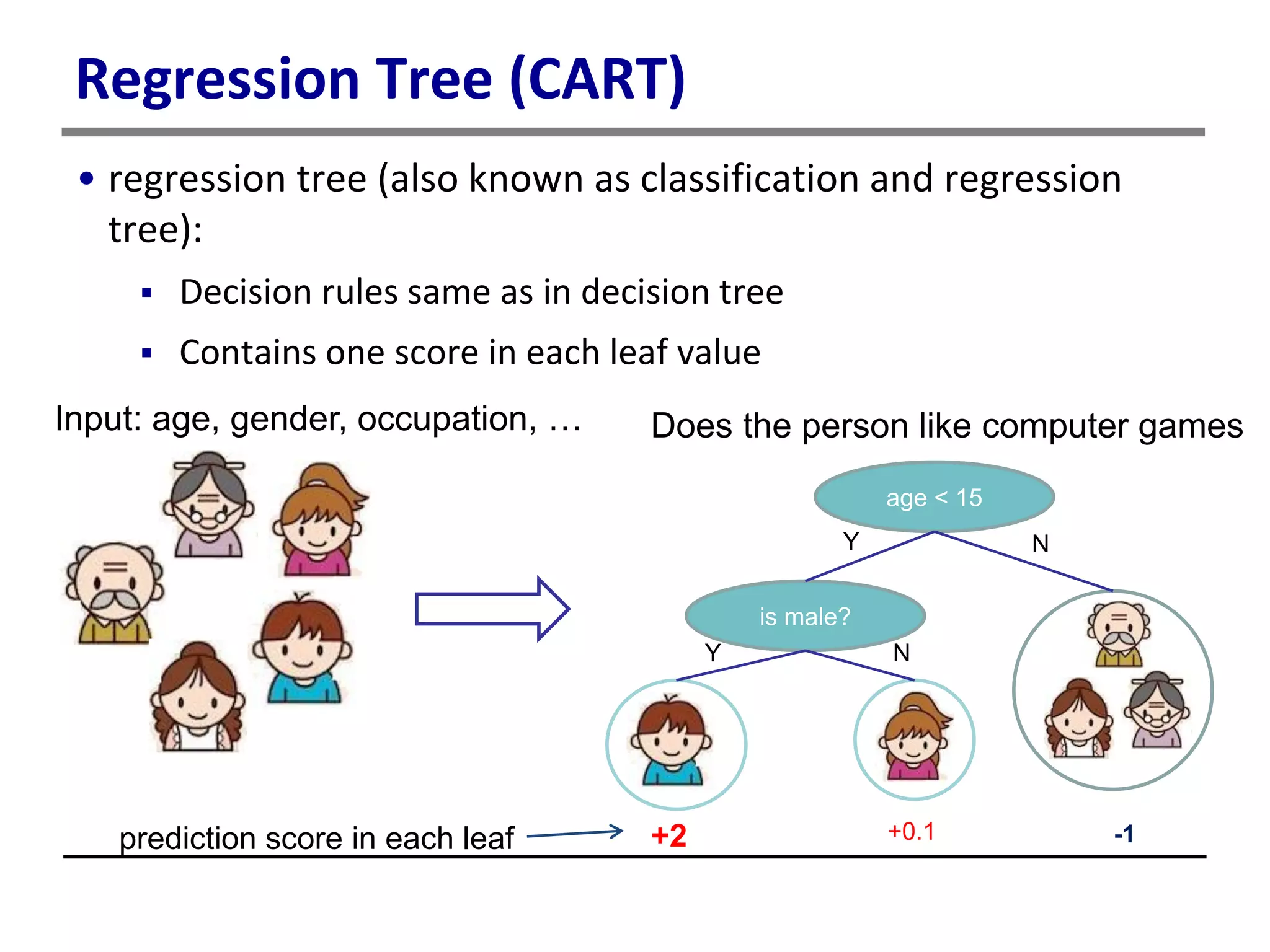

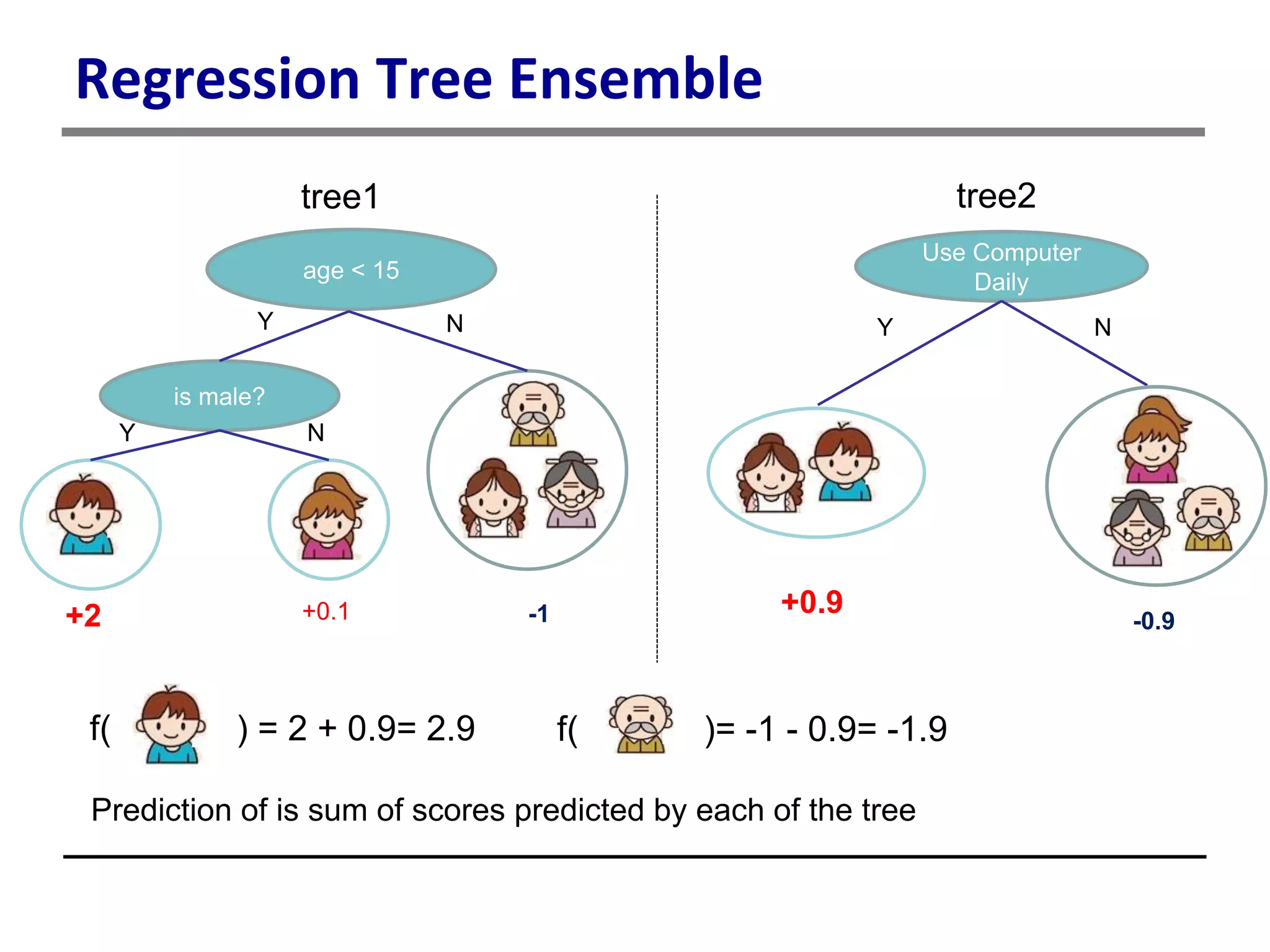

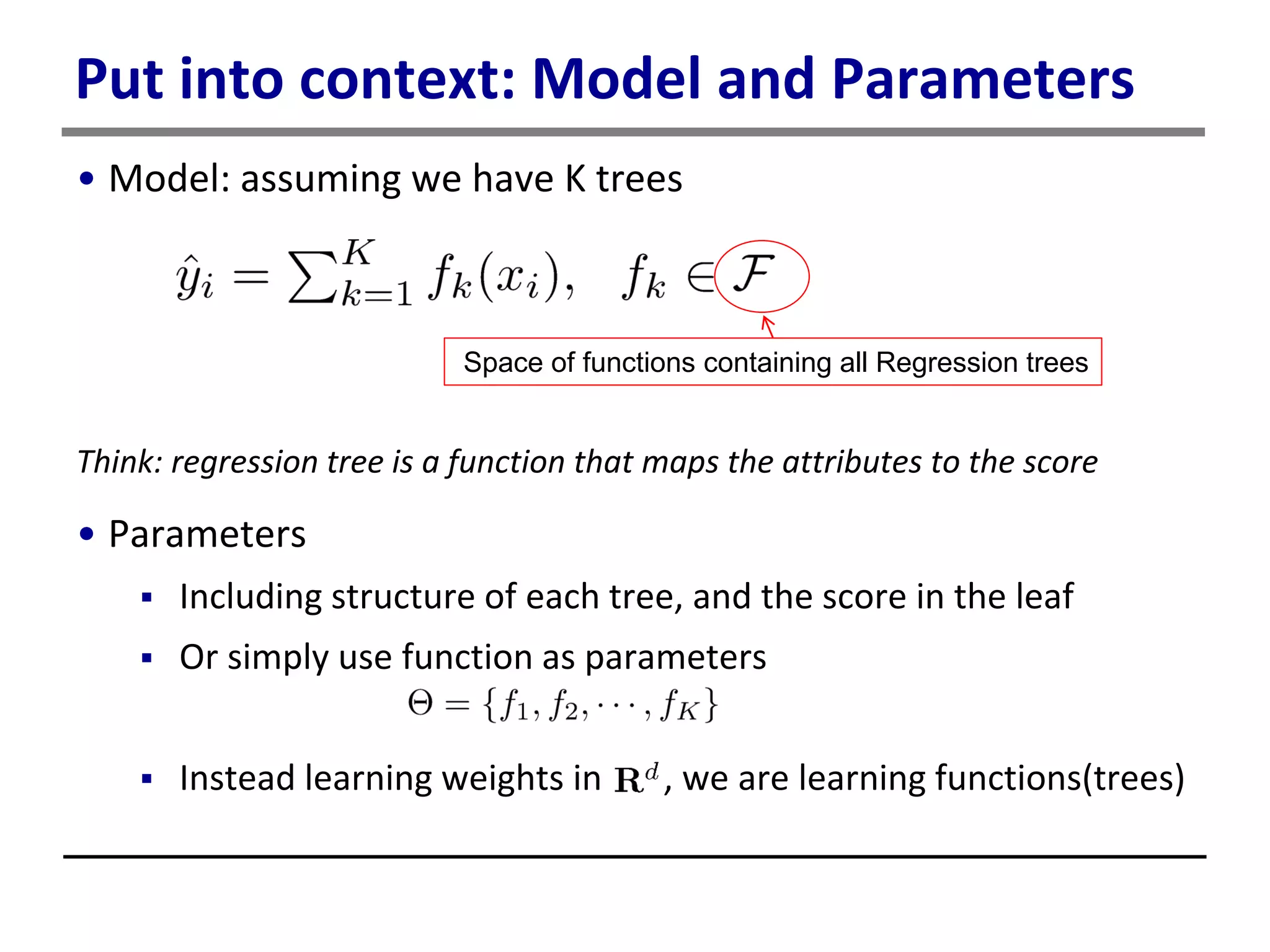

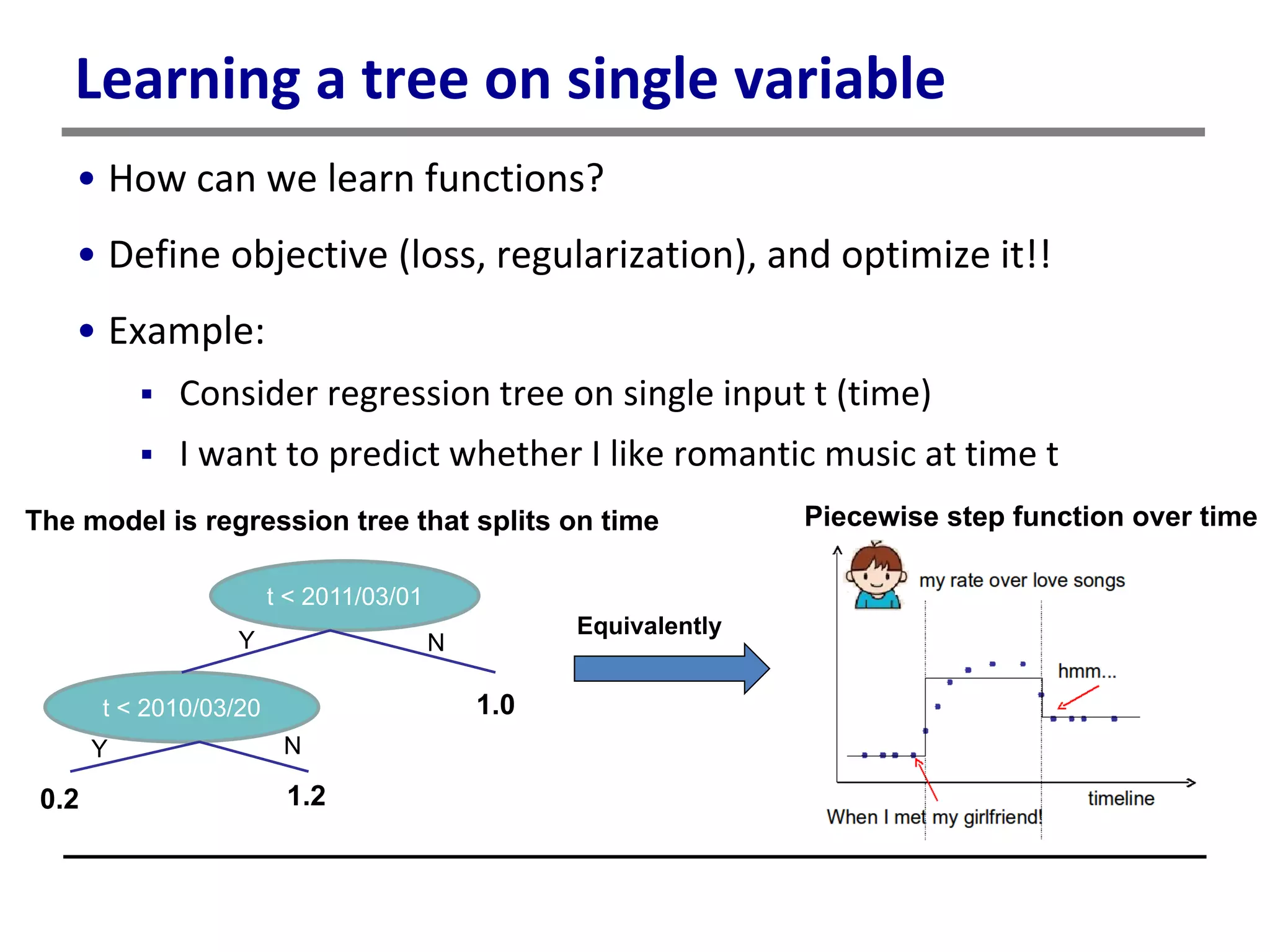

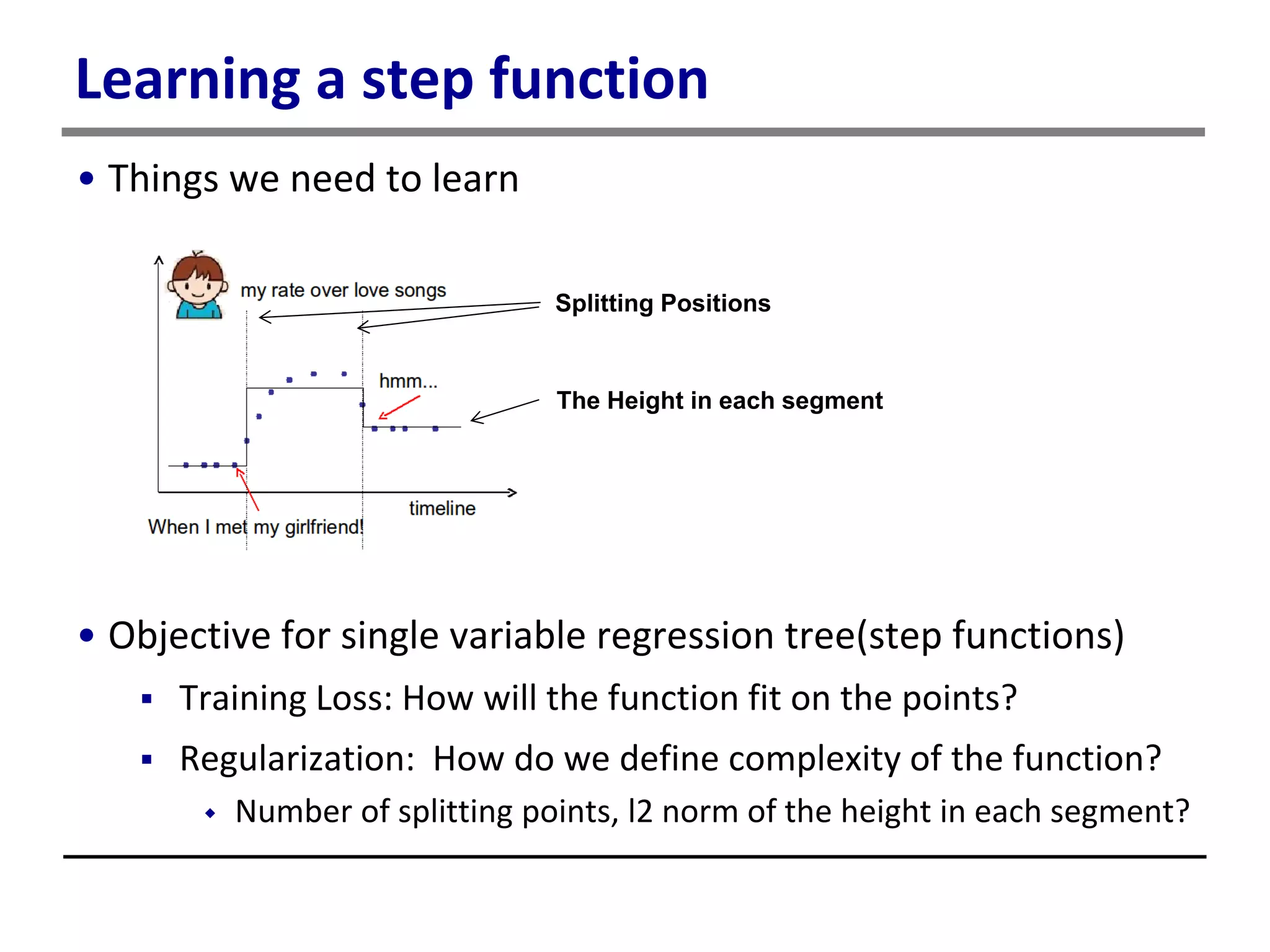

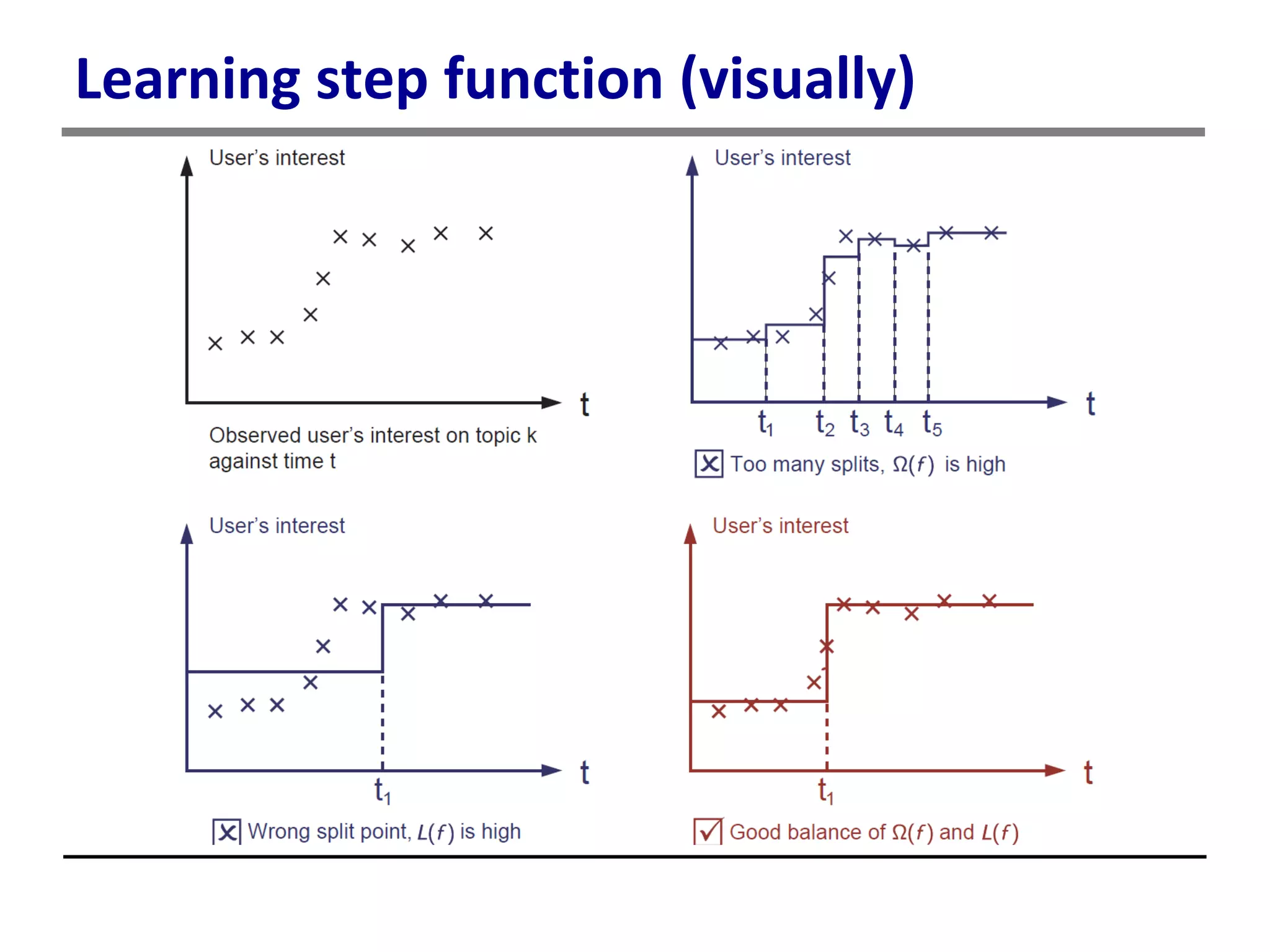

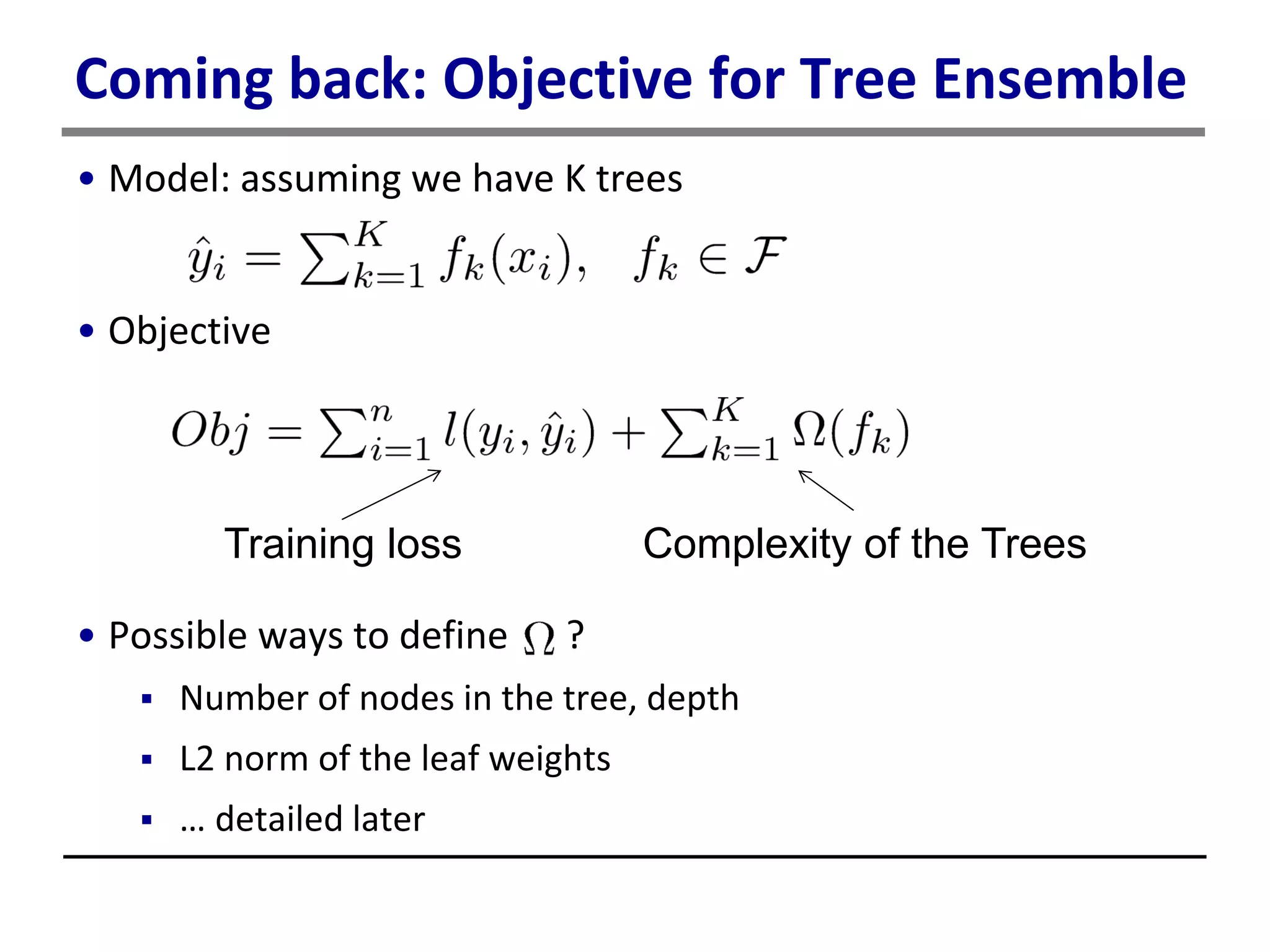

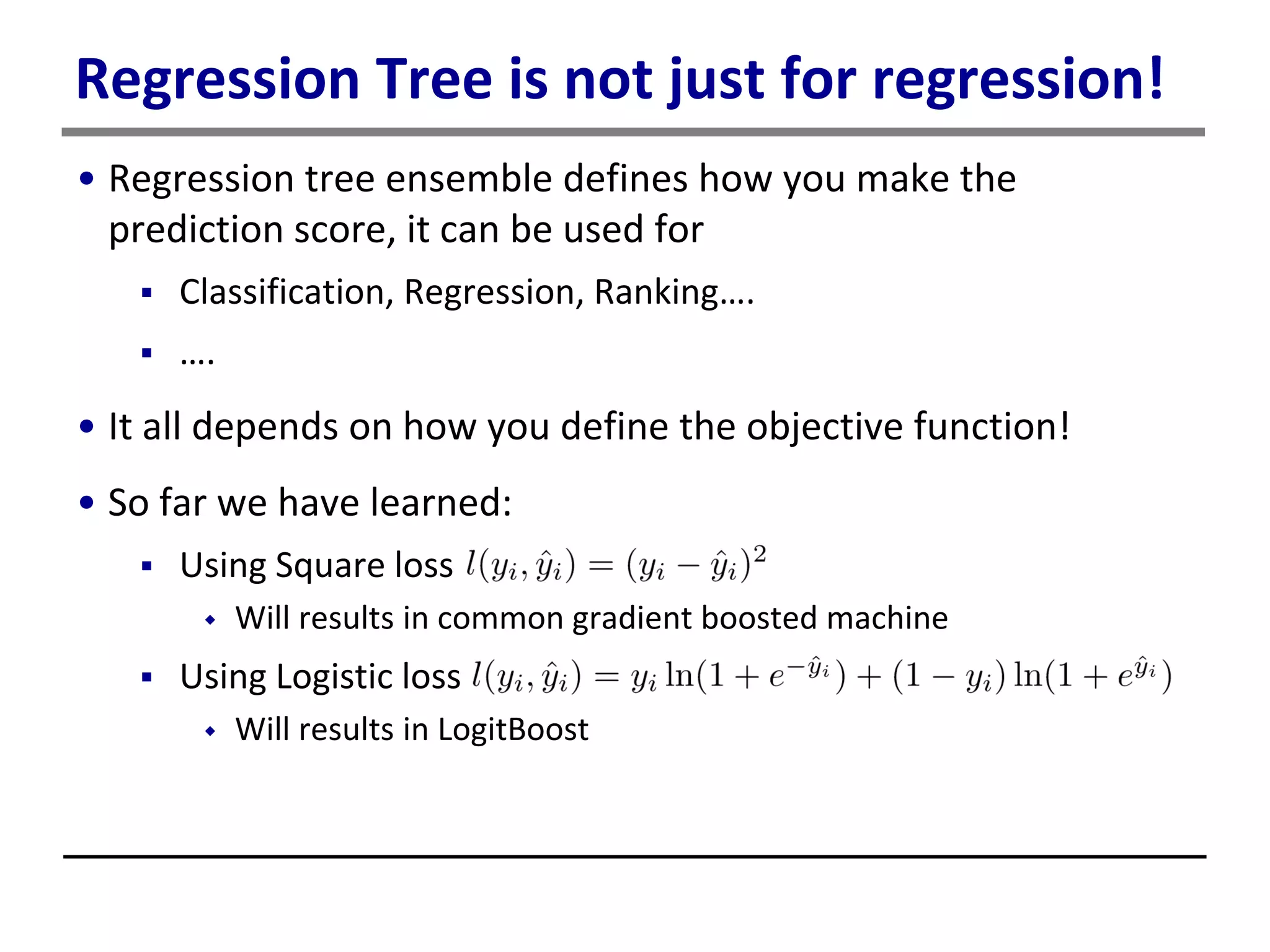

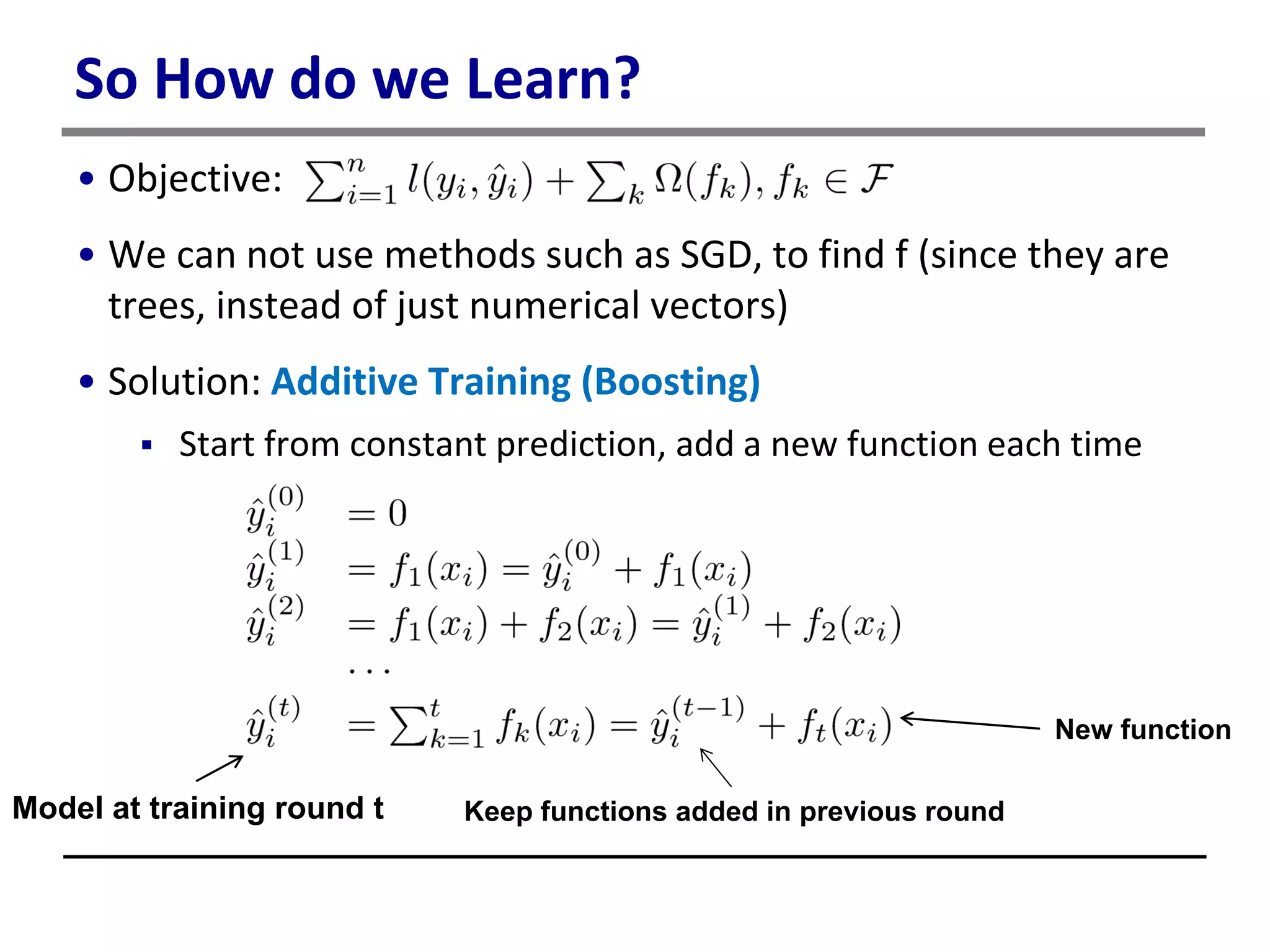

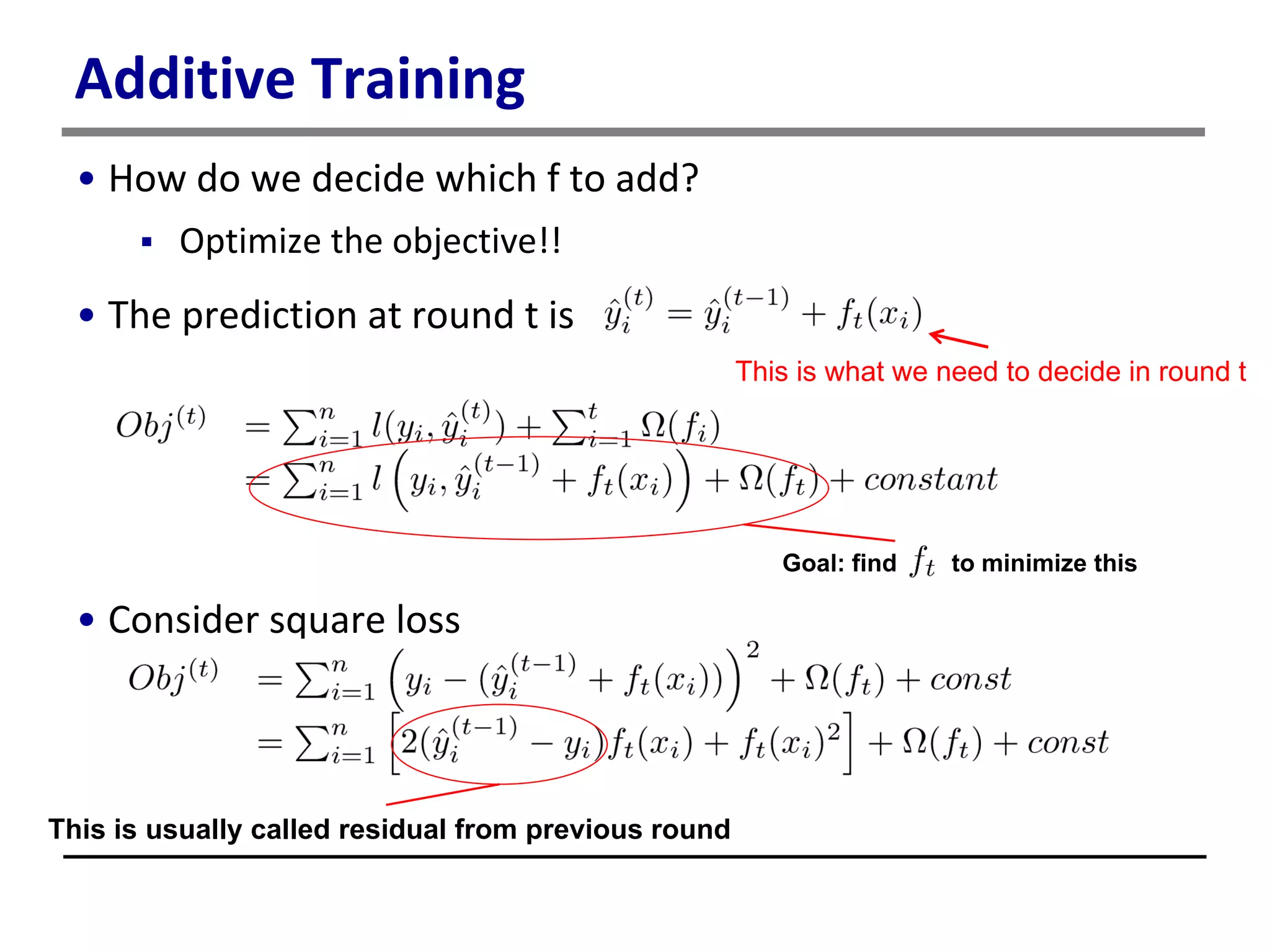

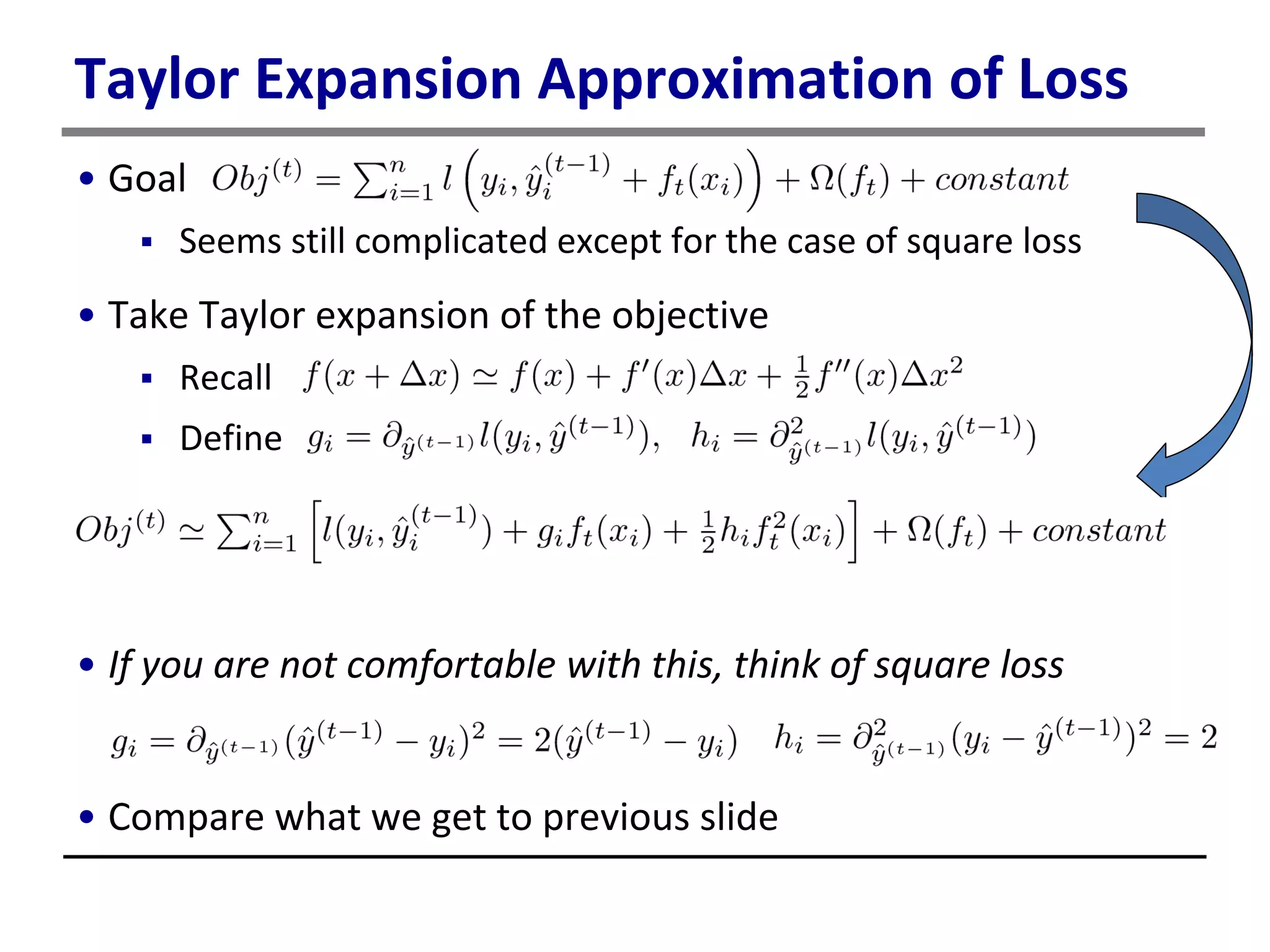

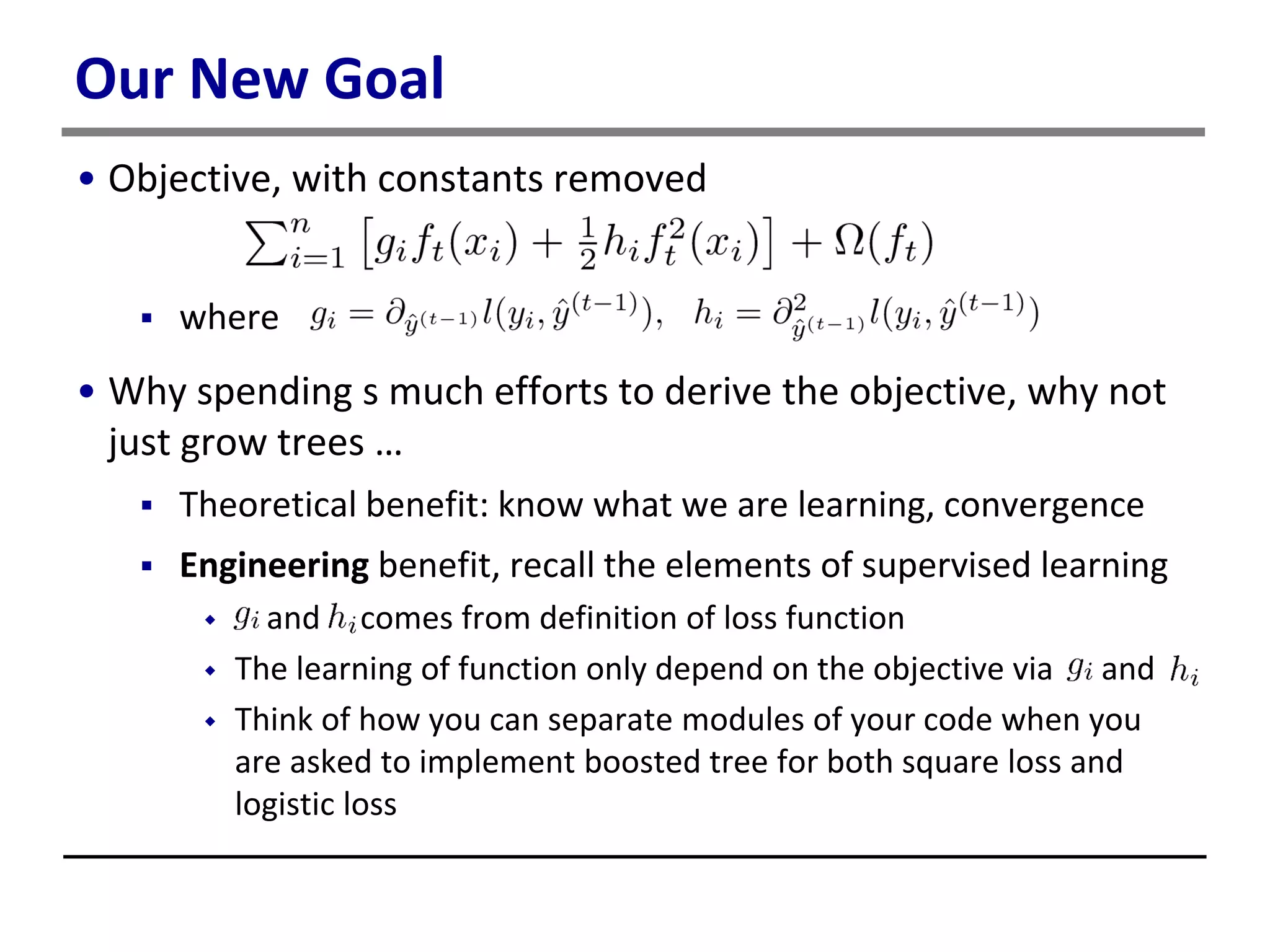

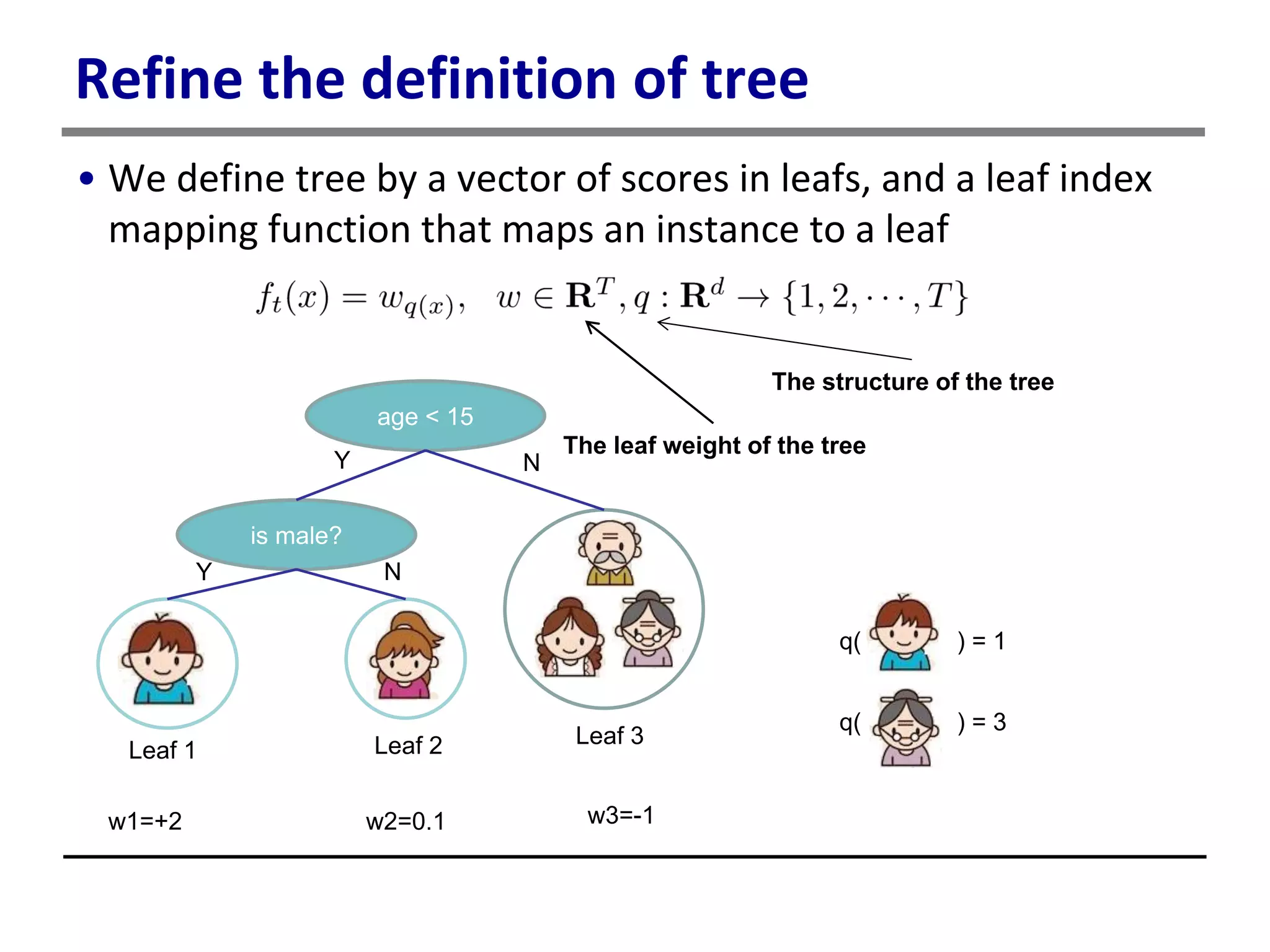

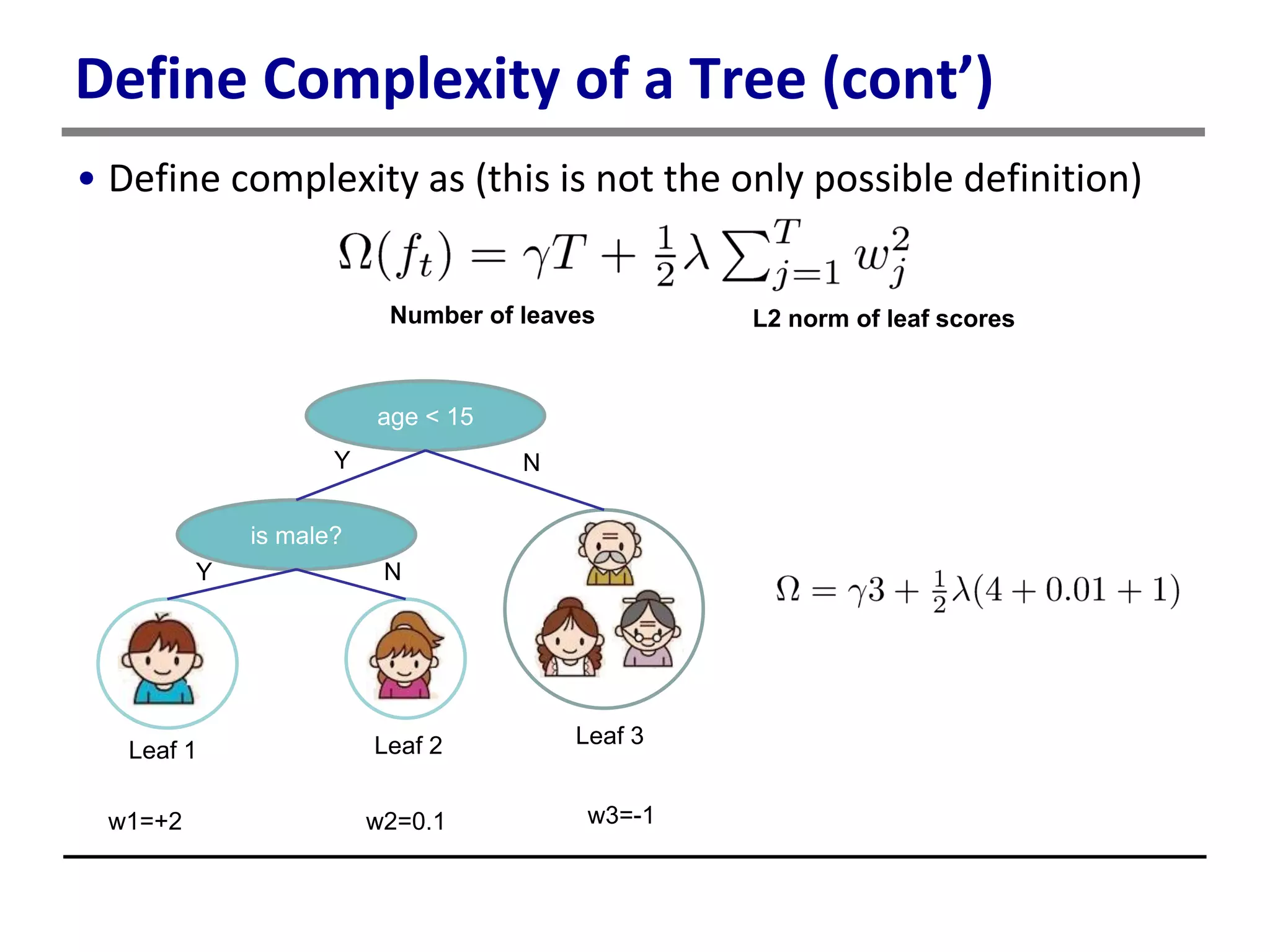

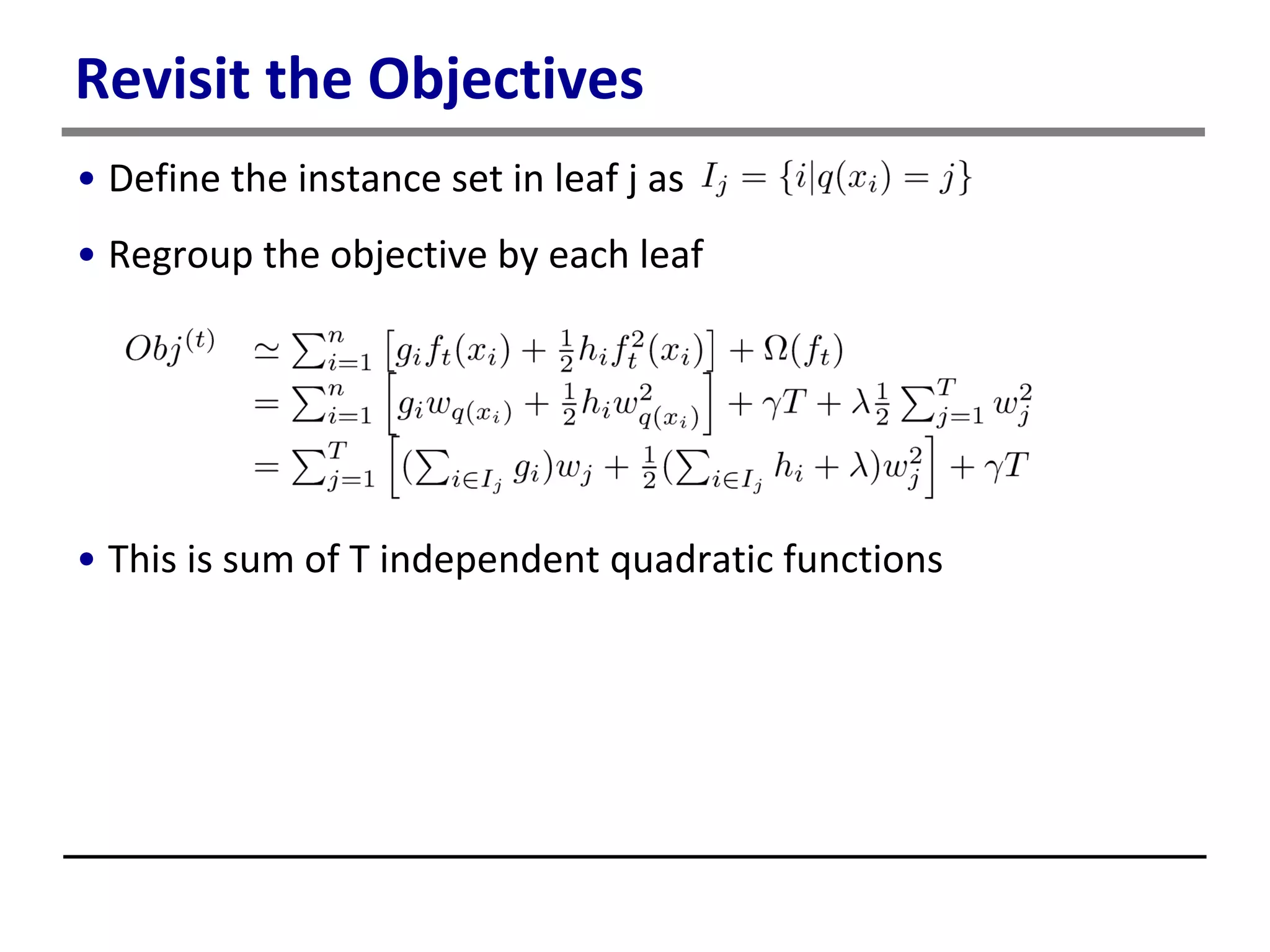

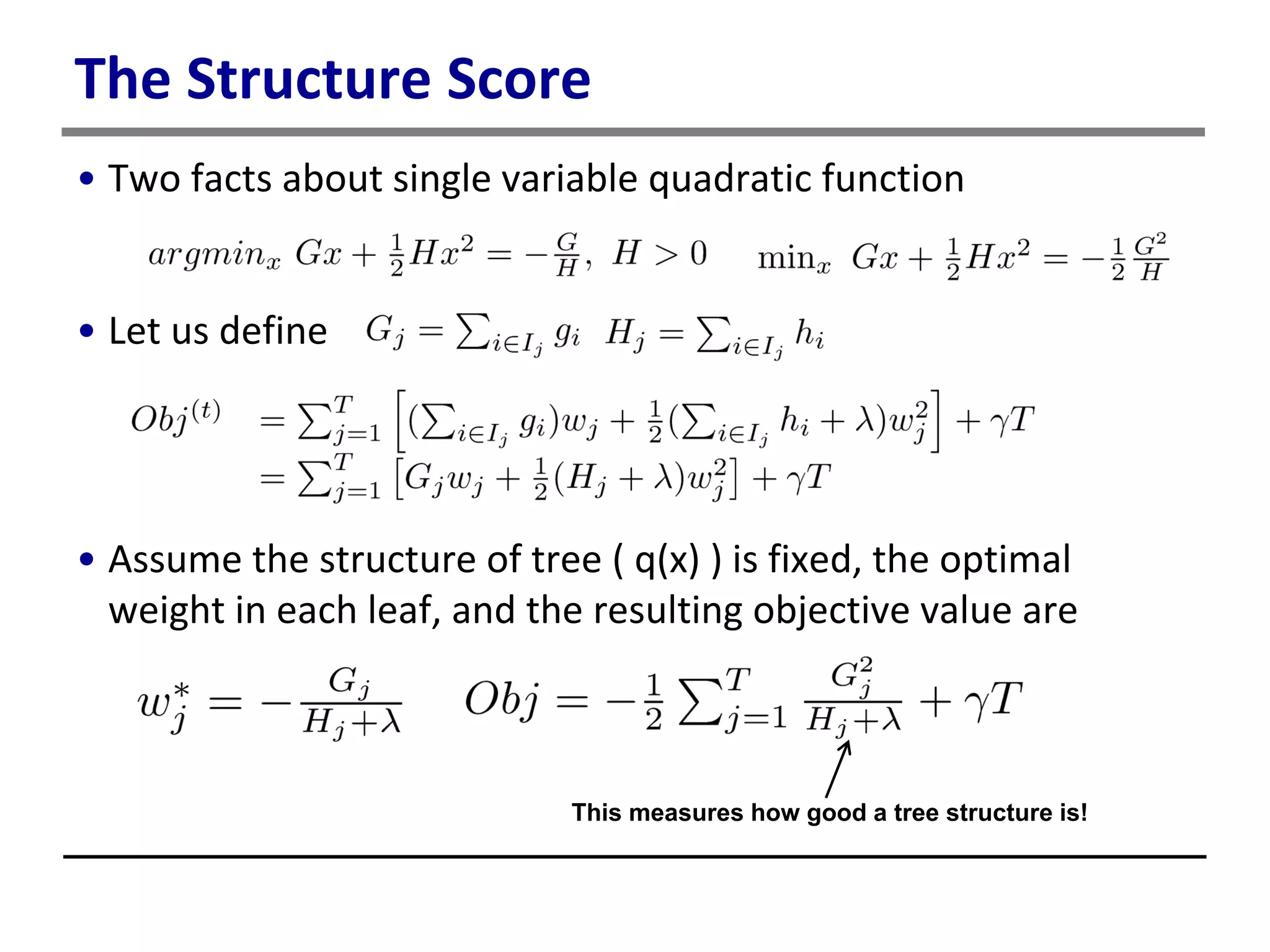

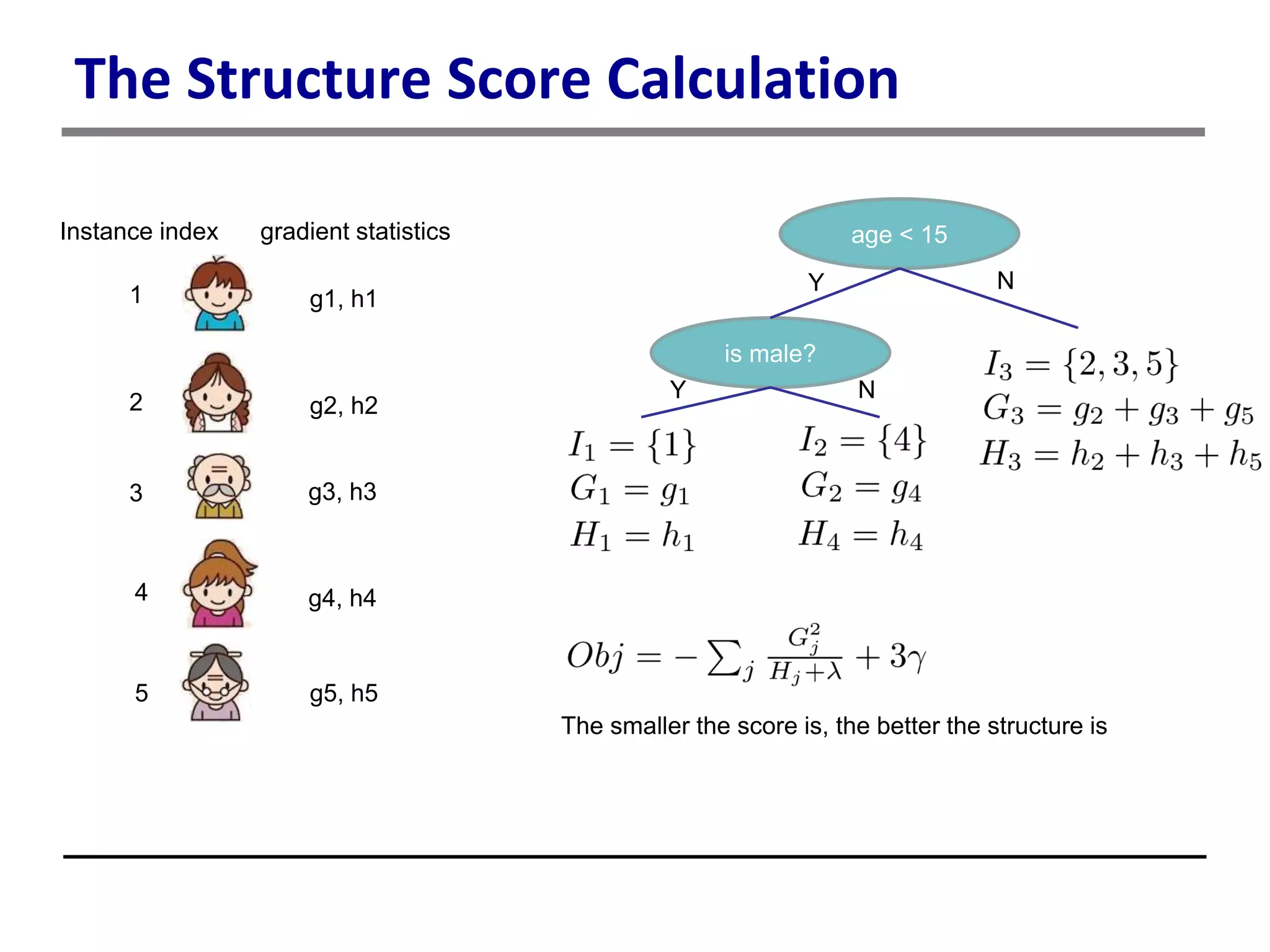

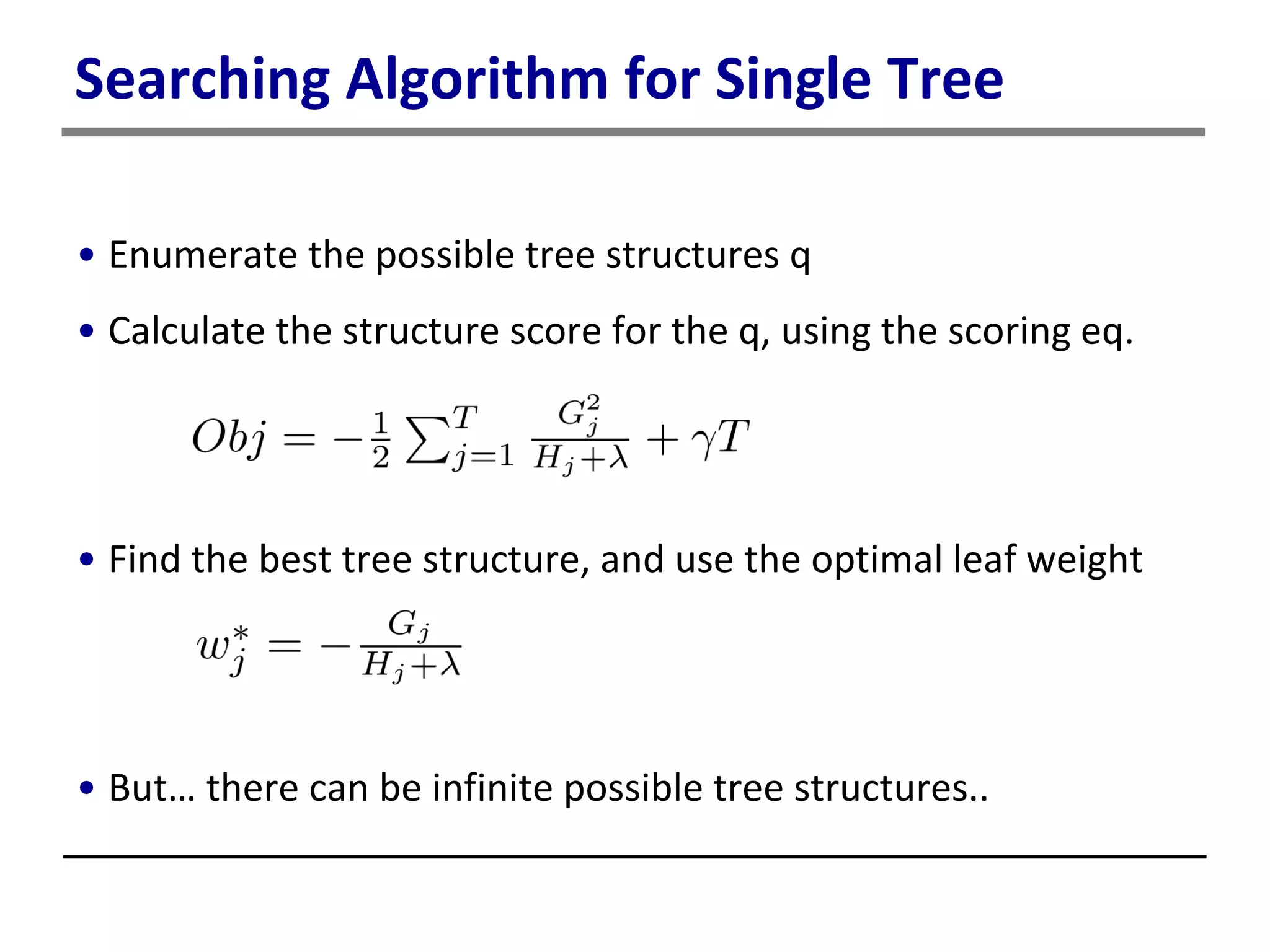

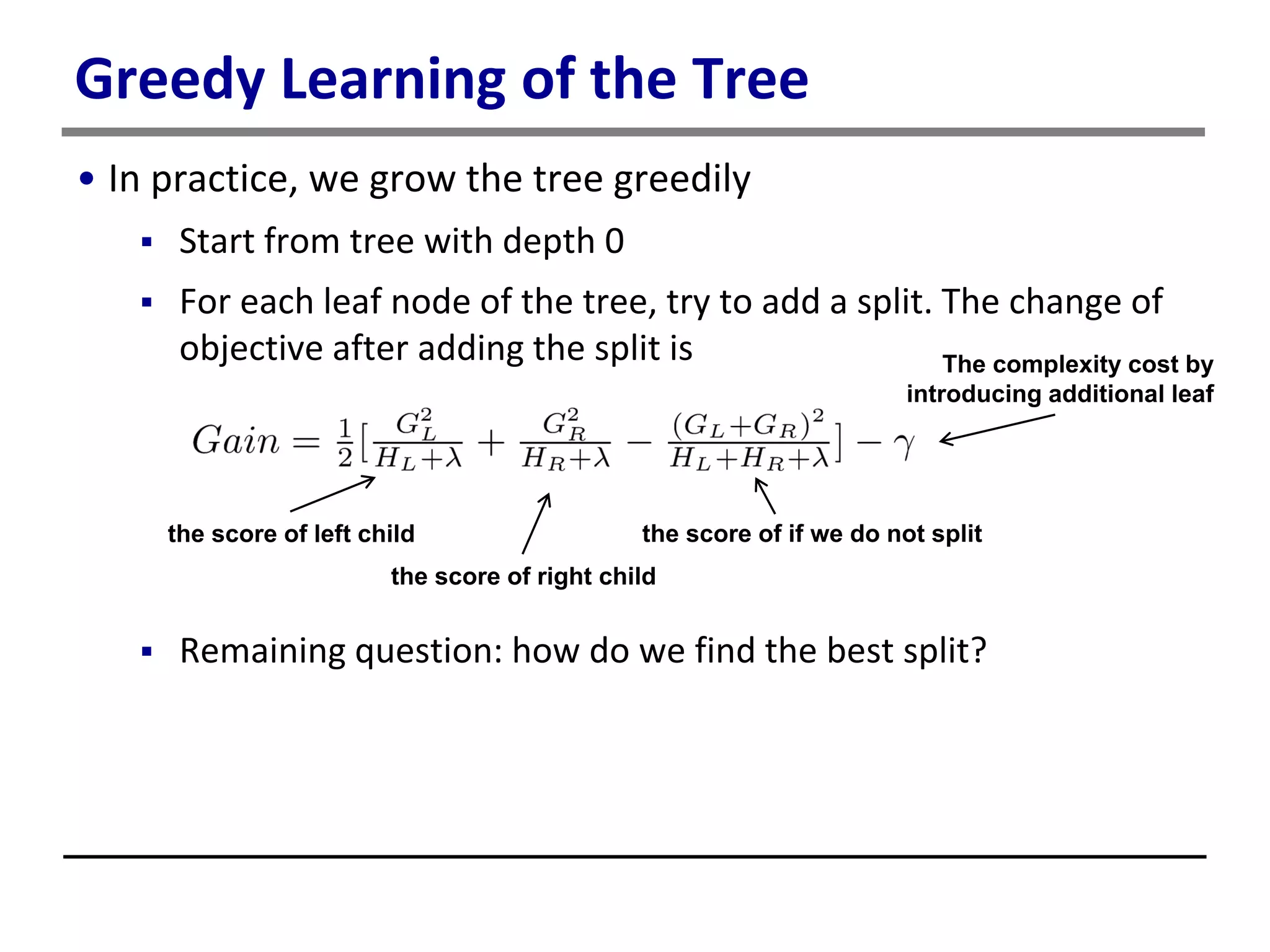

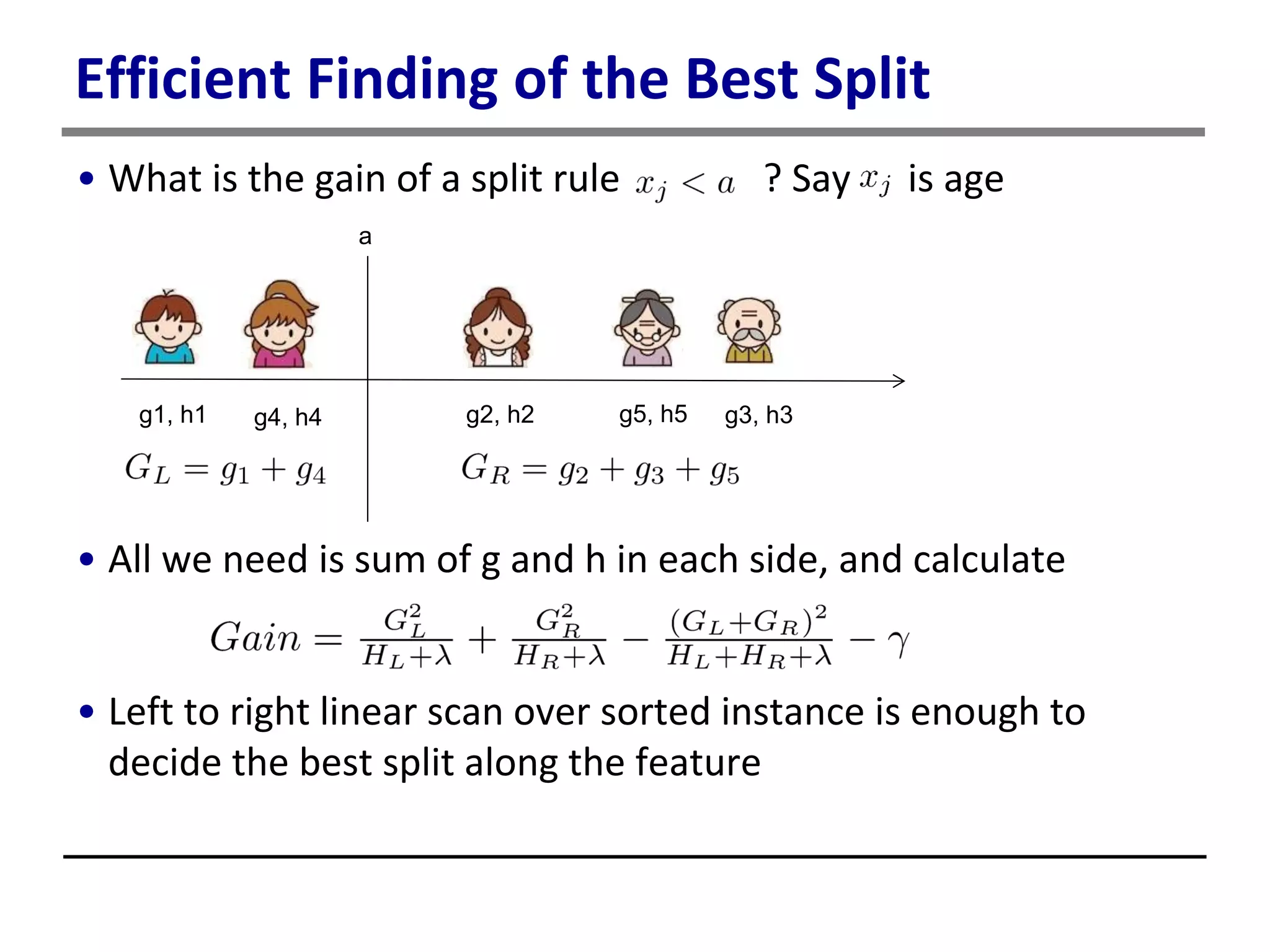

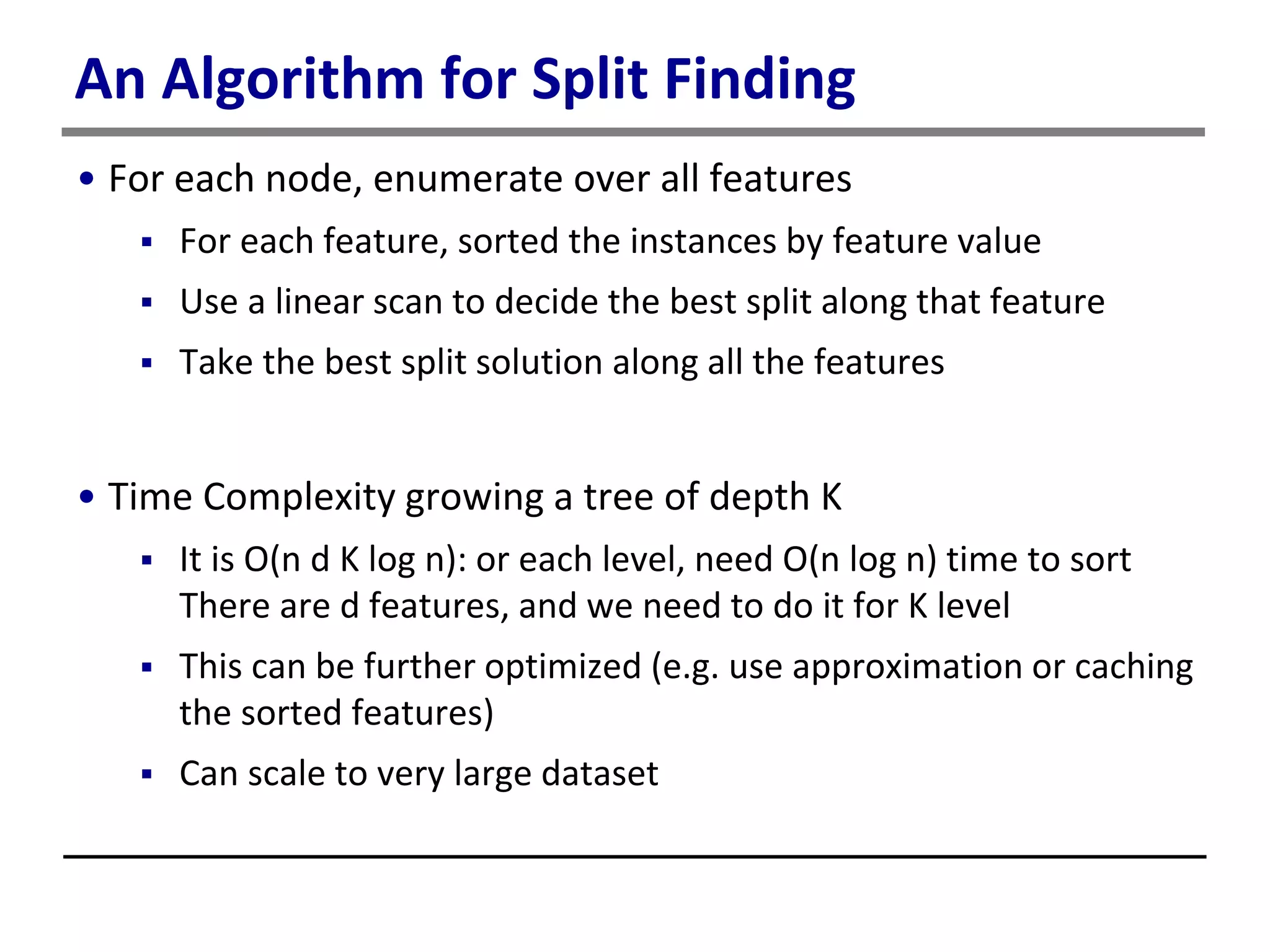

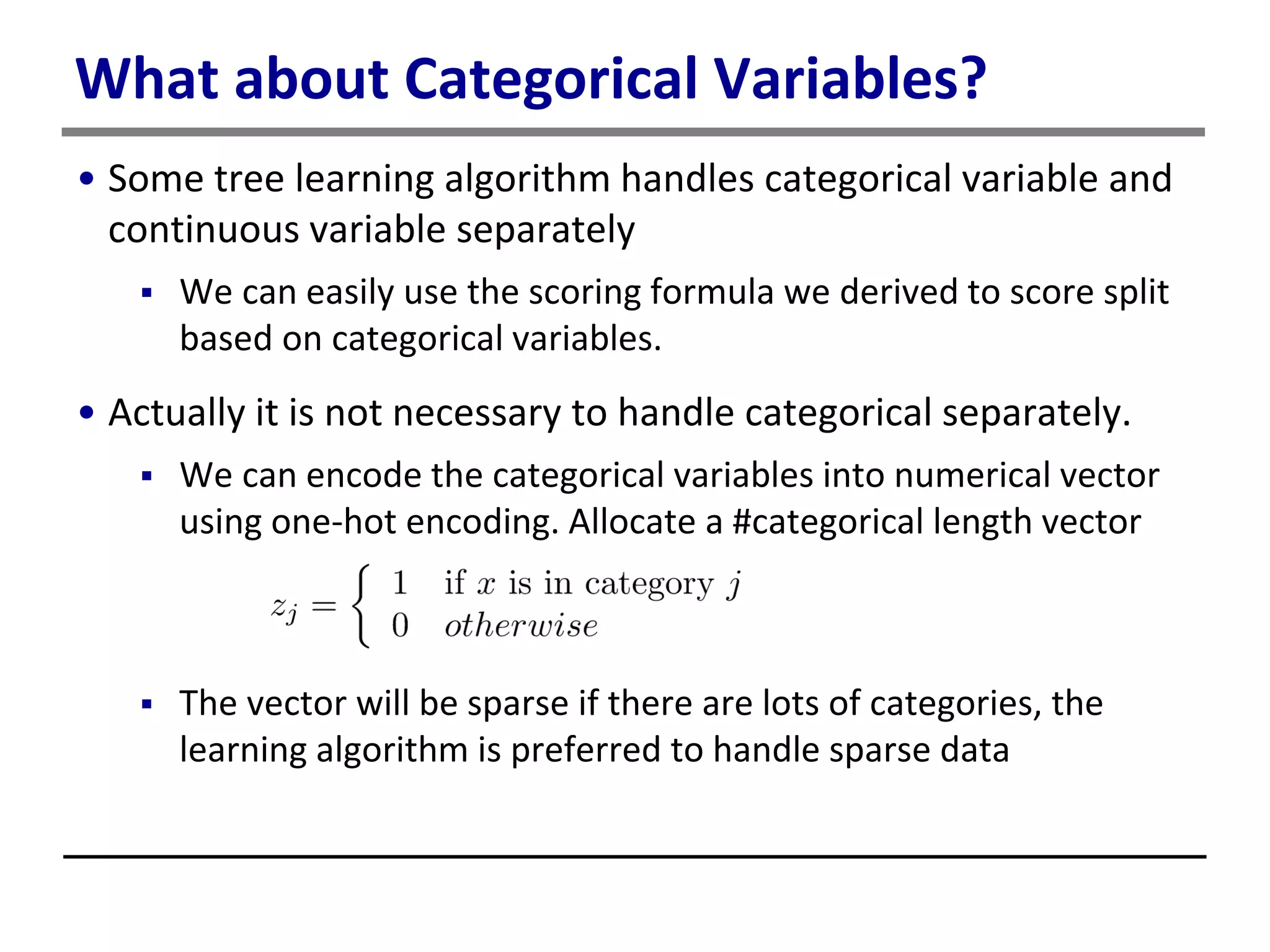

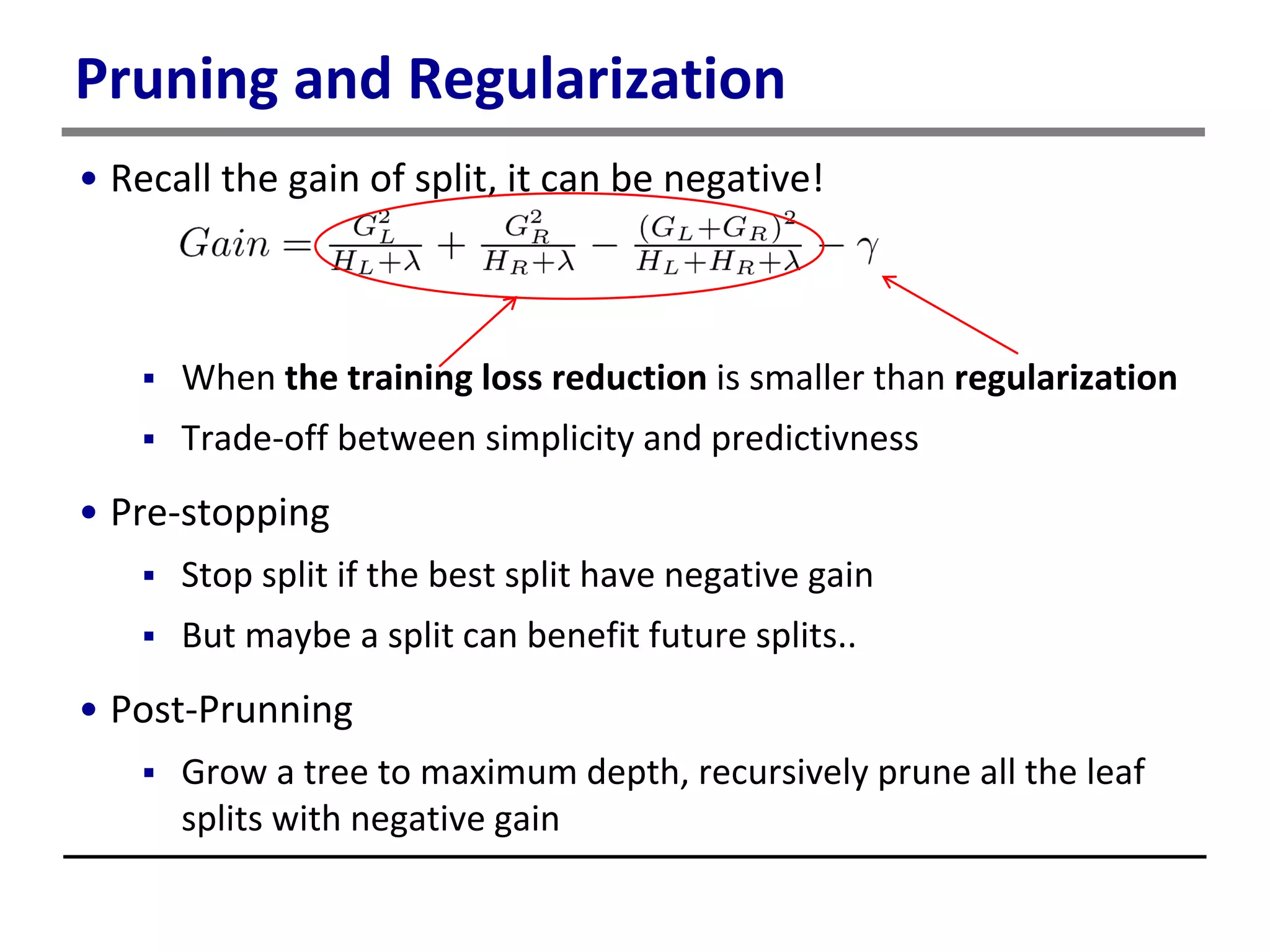

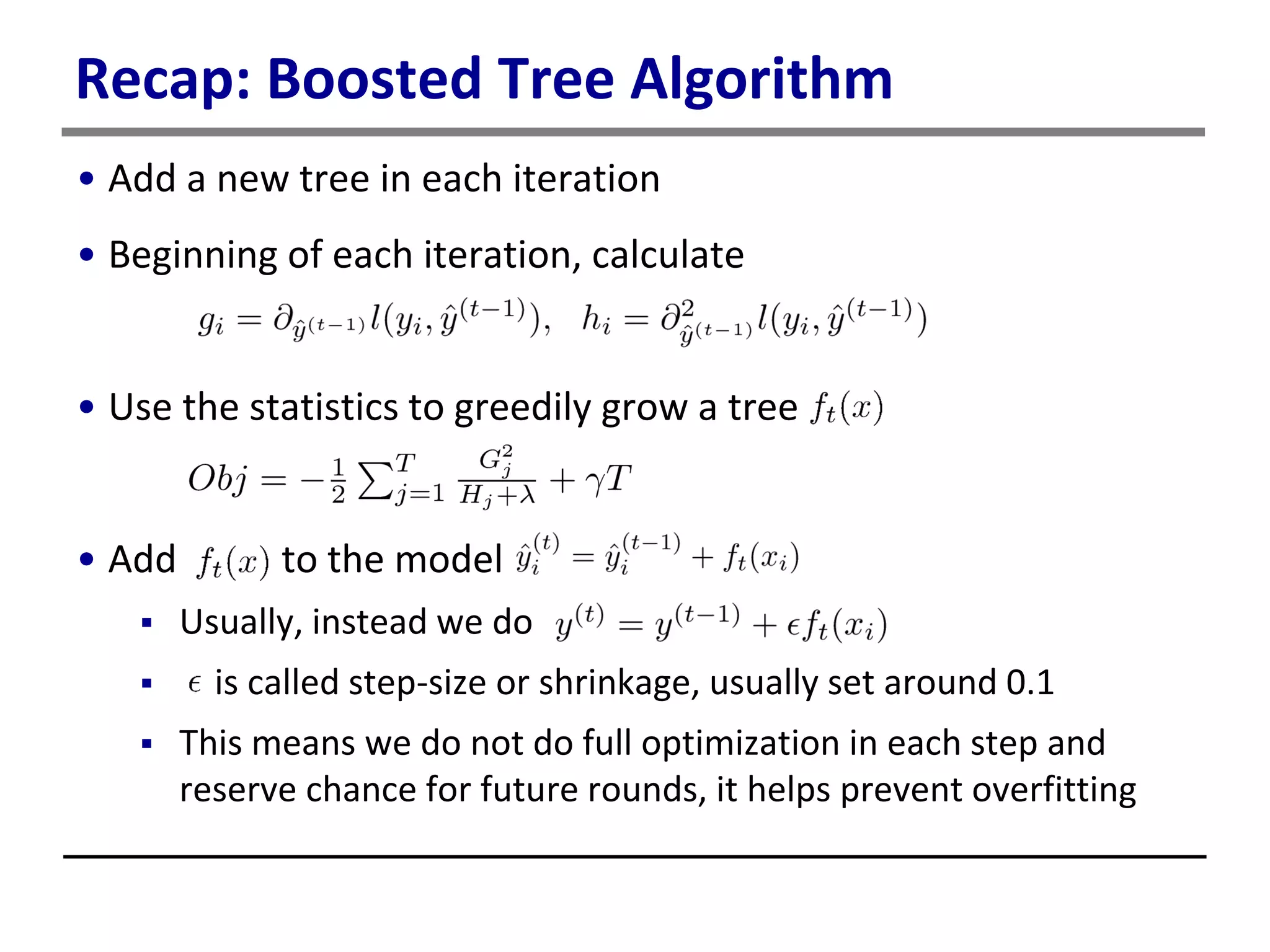

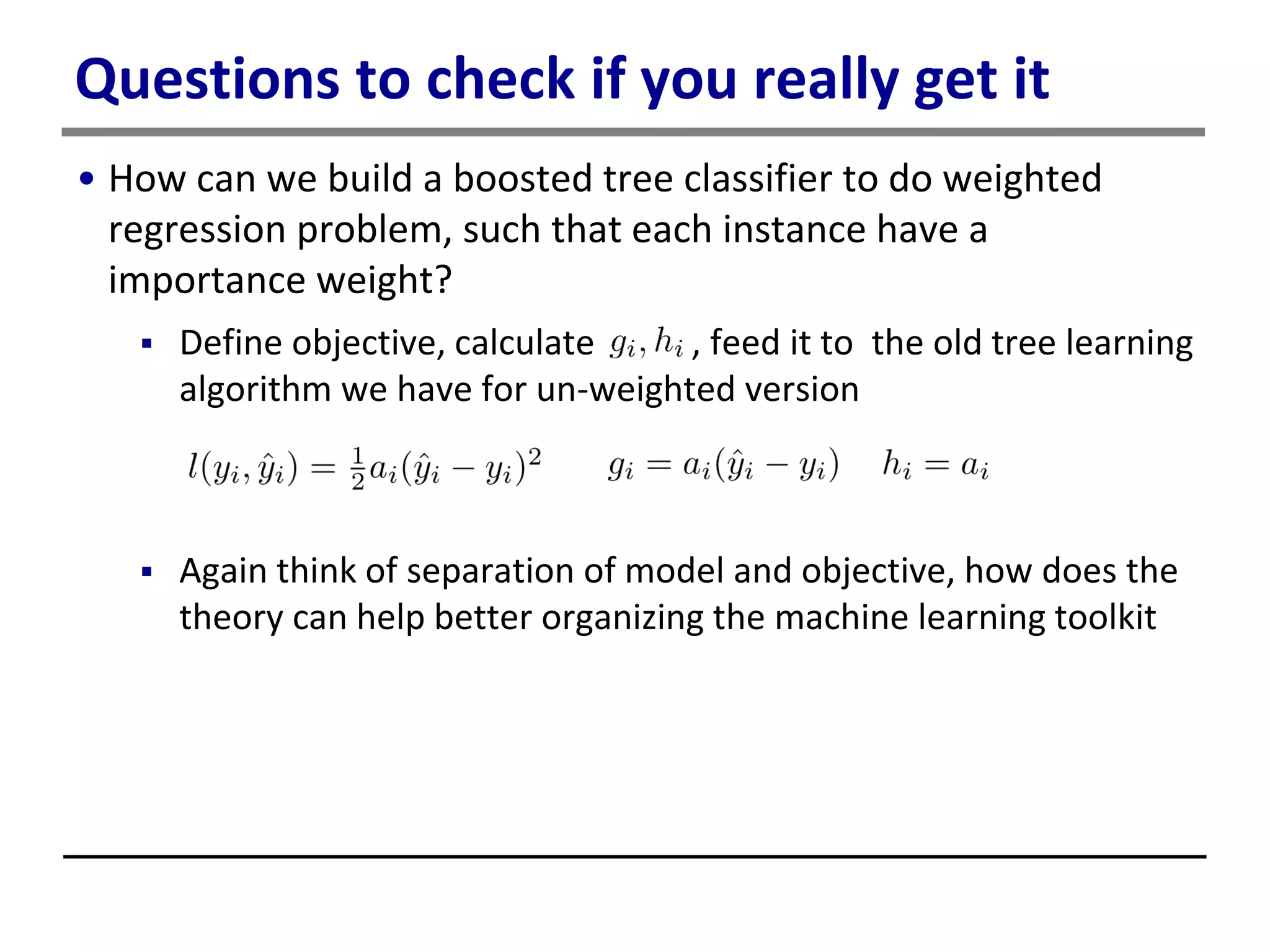

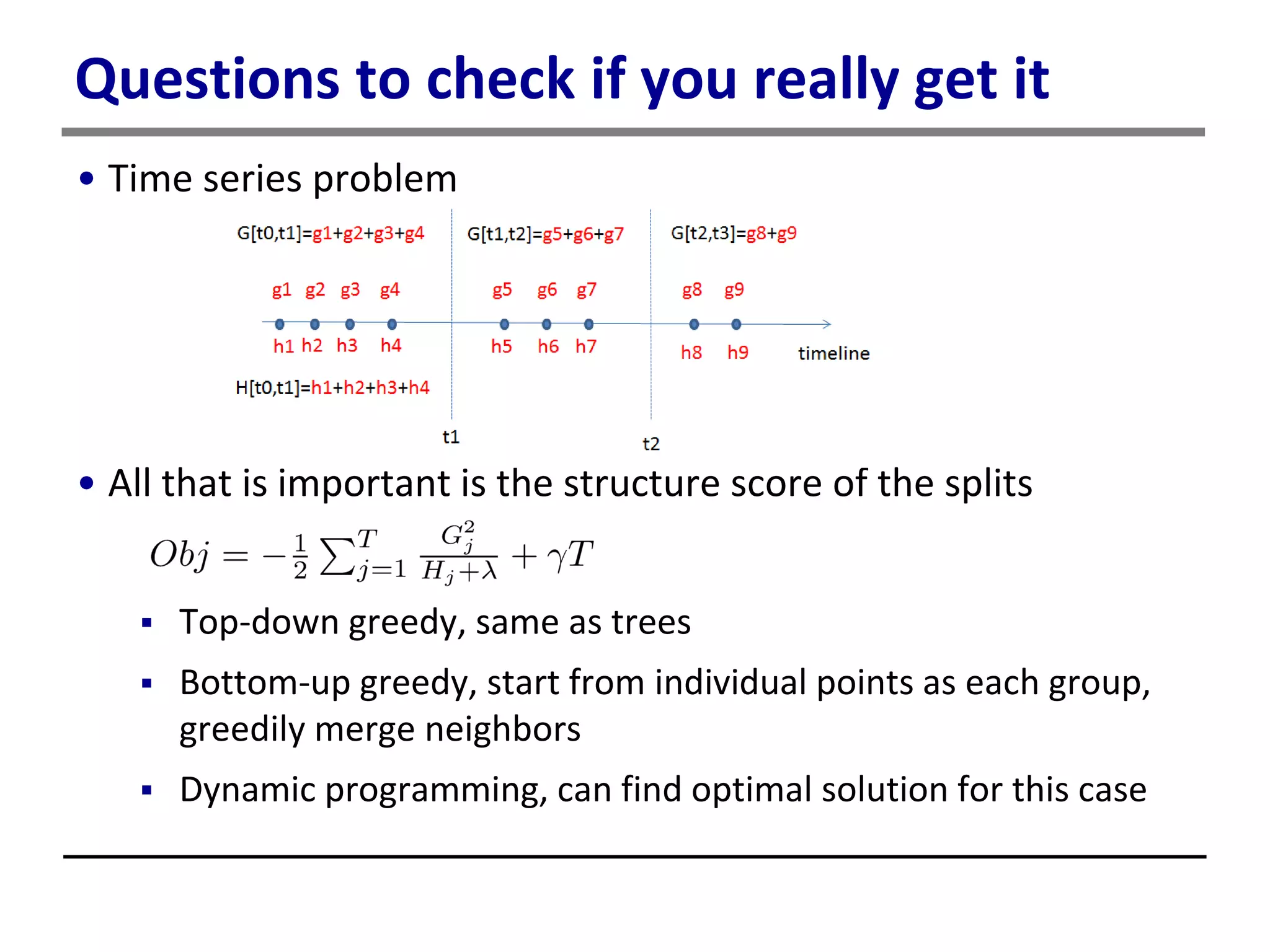

This document introduces boosted trees. It reviews key concepts in supervised learning, such as loss functions and regularization. A regression tree makes a prediction by routing an input to one of its leaves and returning the score stored in that leaf. Gradient boosting learns an ensemble of regression trees additively to minimize a loss function: it adds trees one at a time, greedily choosing each new tree to improve the model's fit on the training data, guided by the gradient of the loss. Each tree is grown by choosing, at every node, the split that most reduces a "structure score", which measures how good the resulting partition of the data is.
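The paragraph above compresses the whole algorithm into a few sentences. The sketch below makes the pieces concrete, under stated assumptions: it uses the XGBoost-style formulation (a second-order approximation of the loss with per-example gradients `g` and Hessians `h`, an L2 penalty `lam` on leaf scores, and a per-leaf penalty `gamma`), squared-error loss, and depth-1 trees (stumps) to keep it short. The names `Stump`, `fit_stump`, `boost`, and `predict` are hypothetical illustrations, not any library's API.

```python
"""Minimal gradient-boosted regression stumps with an XGBoost-style structure score.

Illustrative assumptions (not the document's code): squared-error loss,
depth-1 trees, L2 penalty `lam` on leaf scores, per-leaf penalty `gamma`.
"""
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Stump:
    """A depth-1 regression tree: each of its two leaves holds a score."""
    feature: int
    threshold: float
    left_score: float
    right_score: float

    def predict(self, x: Sequence[float]) -> float:
        # Route the example to a leaf and return that leaf's score.
        return self.left_score if x[self.feature] < self.threshold else self.right_score


def leaf_score(G: float, H: float, lam: float) -> float:
    # Optimal leaf score w* = -G / (H + lambda) for gradient sum G, Hessian sum H.
    return -G / (H + lam)


def split_gain(GL: float, HL: float, GR: float, HR: float, lam: float, gamma: float) -> float:
    # Reduction in structure score when one leaf is split into left/right children.
    def term(G: float, H: float) -> float:
        return G * G / (H + lam)
    return 0.5 * (term(GL, HL) + term(GR, HR) - term(GL + GR, HL + HR)) - gamma


def fit_stump(X, g, h, lam: float, gamma: float) -> Optional[Stump]:
    """Greedy split finding: scan every feature and threshold, keep the best gain."""
    G, H = sum(g), sum(h)
    best, best_gain = None, 0.0
    for f in range(len(X[0])):
        order = sorted(range(len(X)), key=lambda i: X[i][f])
        GL = HL = 0.0
        for k in range(len(order) - 1):
            i, j = order[k], order[k + 1]
            GL += g[i]
            HL += h[i]
            if X[i][f] == X[j][f]:
                continue  # cannot place a threshold between equal feature values
            gain = split_gain(GL, HL, G - GL, H - HL, lam, gamma)
            if gain > best_gain:
                best_gain = gain
                best = Stump(f, (X[i][f] + X[j][f]) / 2,
                             leaf_score(GL, HL, lam), leaf_score(G - GL, H - HL, lam))
    return best  # None when no split has positive gain


def boost(X, y, n_rounds: int = 50, eta: float = 0.3,
          lam: float = 1.0, gamma: float = 0.0) -> List[Stump]:
    """Additive training: each round fits one new tree to the current gradients."""
    trees: List[Stump] = []
    pred = [0.0] * len(y)
    for _ in range(n_rounds):
        # Squared loss l = (yhat - y)^2 / 2 gives g = yhat - y and h = 1.
        g = [p - t for p, t in zip(pred, y)]
        h = [1.0] * len(y)
        tree = fit_stump(X, g, h, lam, gamma)
        if tree is None:
            break  # no split improves the structure score; stop adding trees
        # Shrink the new tree's leaf scores by the learning rate eta.
        tree = Stump(tree.feature, tree.threshold,
                     eta * tree.left_score, eta * tree.right_score)
        trees.append(tree)
        pred = [p + tree.predict(x) for p, x in zip(pred, X)]
    return trees


def predict(trees: List[Stump], x: Sequence[float]) -> float:
    # The ensemble's prediction is the sum of the trees' leaf scores.
    return sum(t.predict(x) for t in trees)
```

For instance, `boost([[1.0], [2.0], [3.0], [4.0]], [0.0, 0.0, 1.0, 1.0])` places its first split at 2.5, and each later round shrinks the remaining error on the right leaf. Raising `gamma` above the best achievable gain makes `boost` return no trees at all, which is how the per-leaf penalty discourages splits that do not pay for their added complexity.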