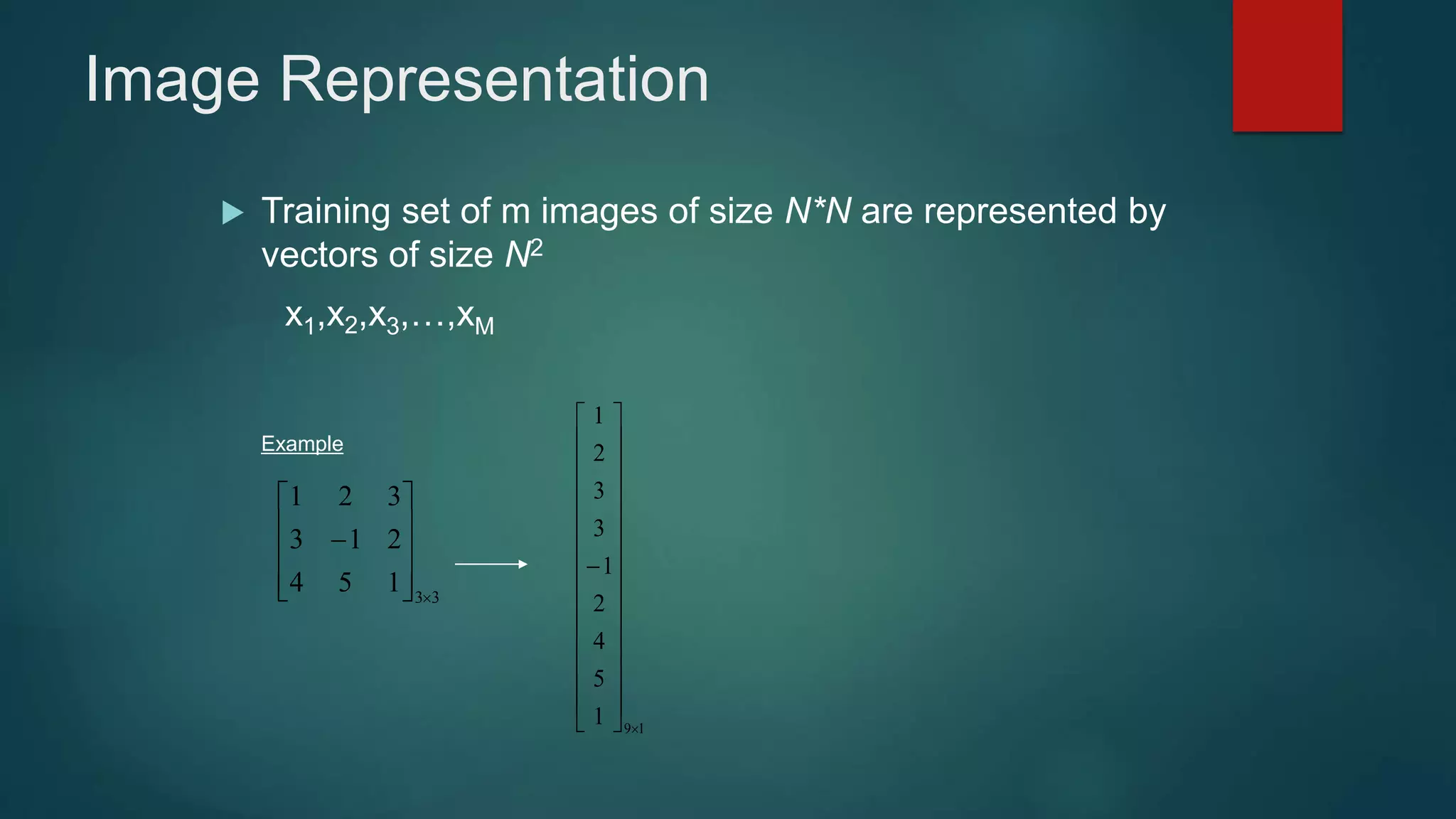

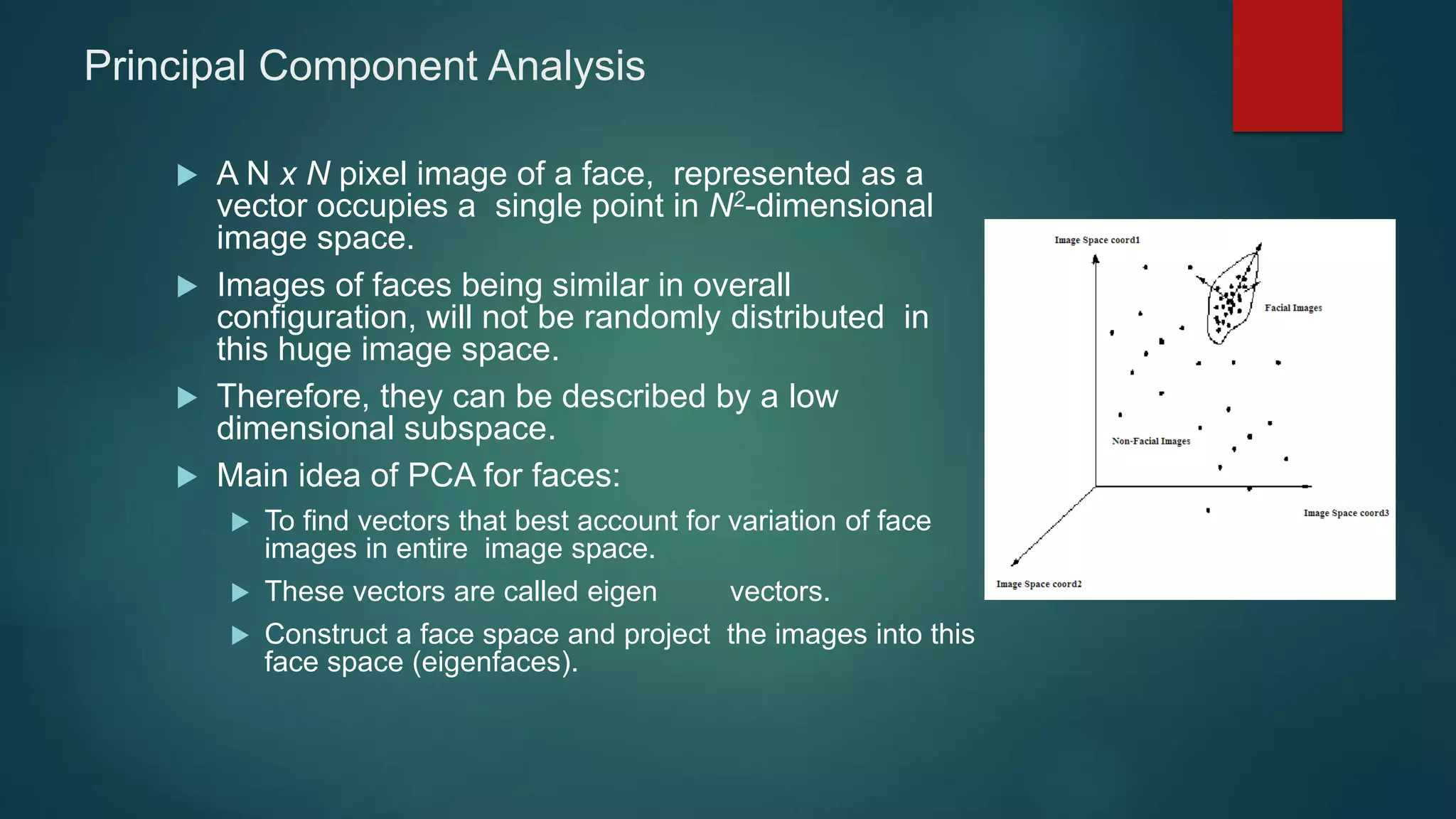

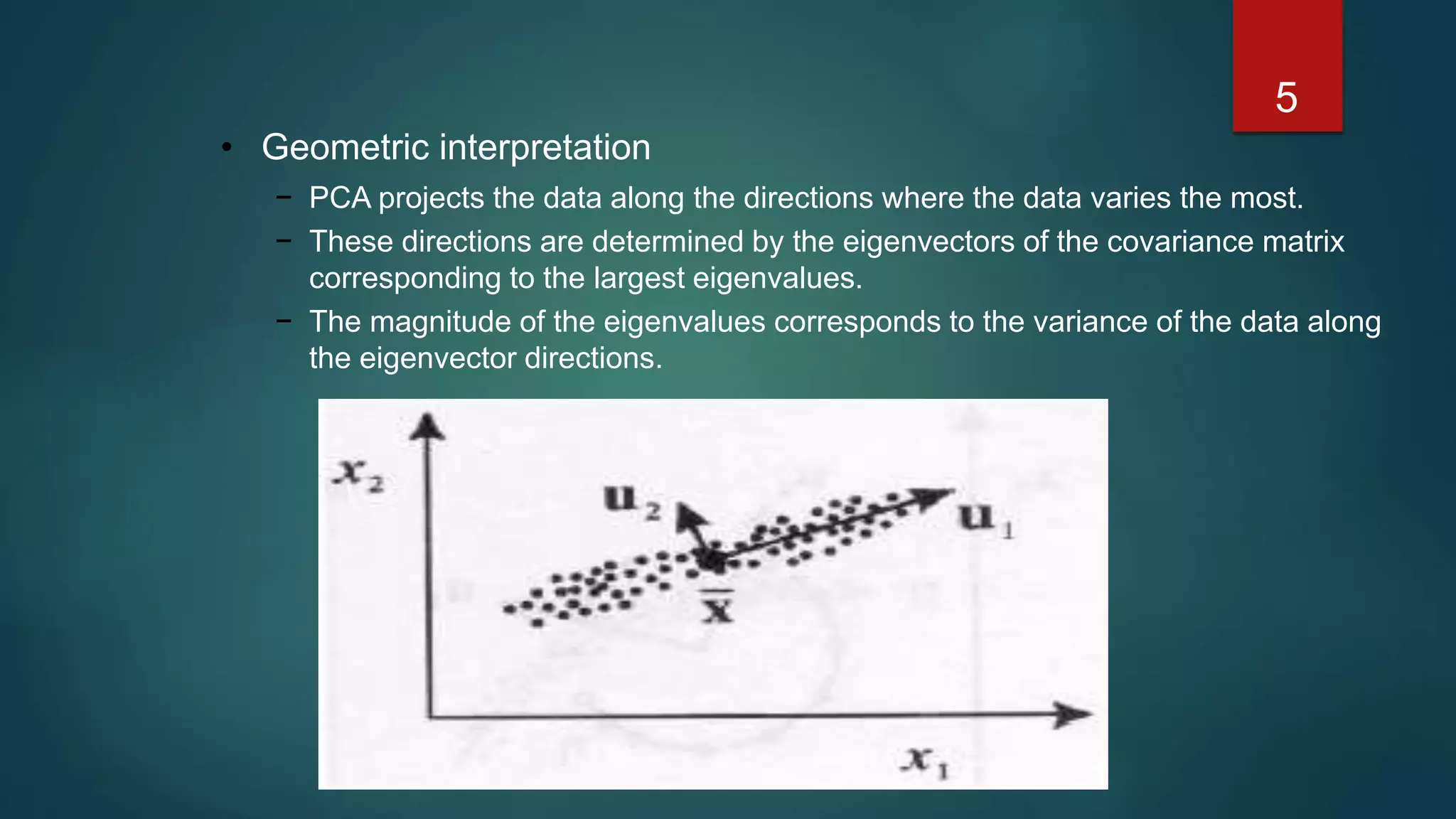

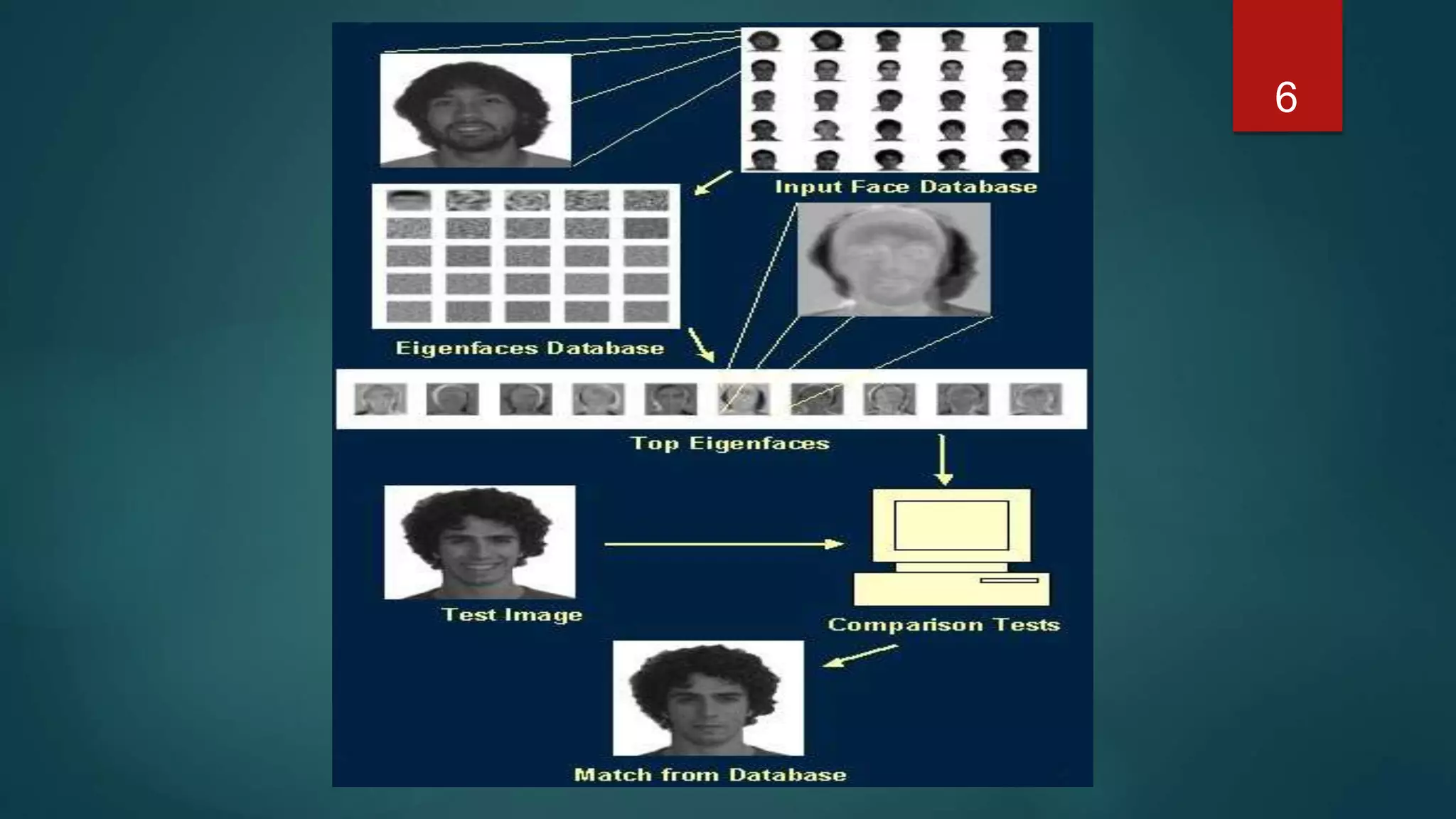

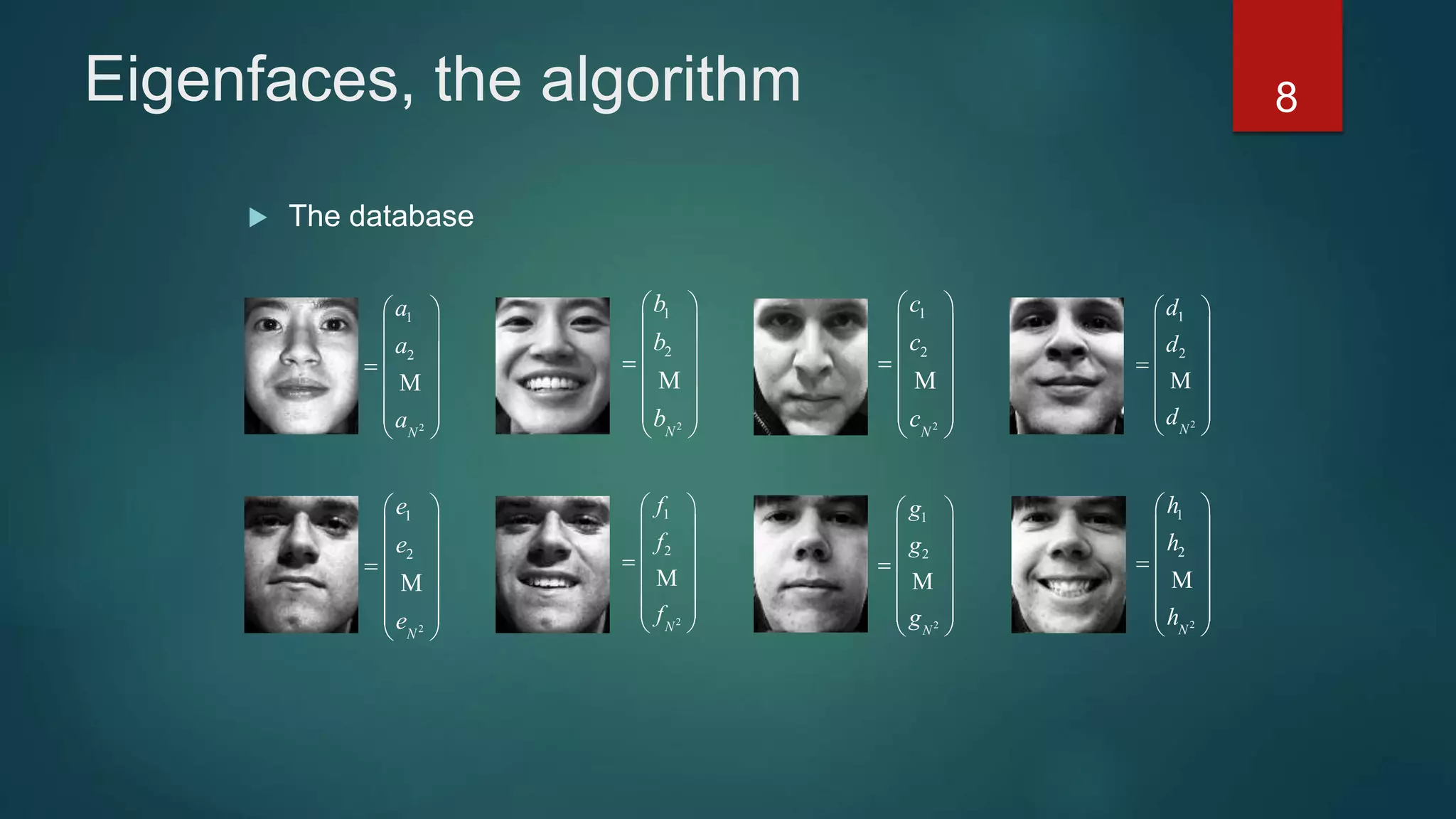

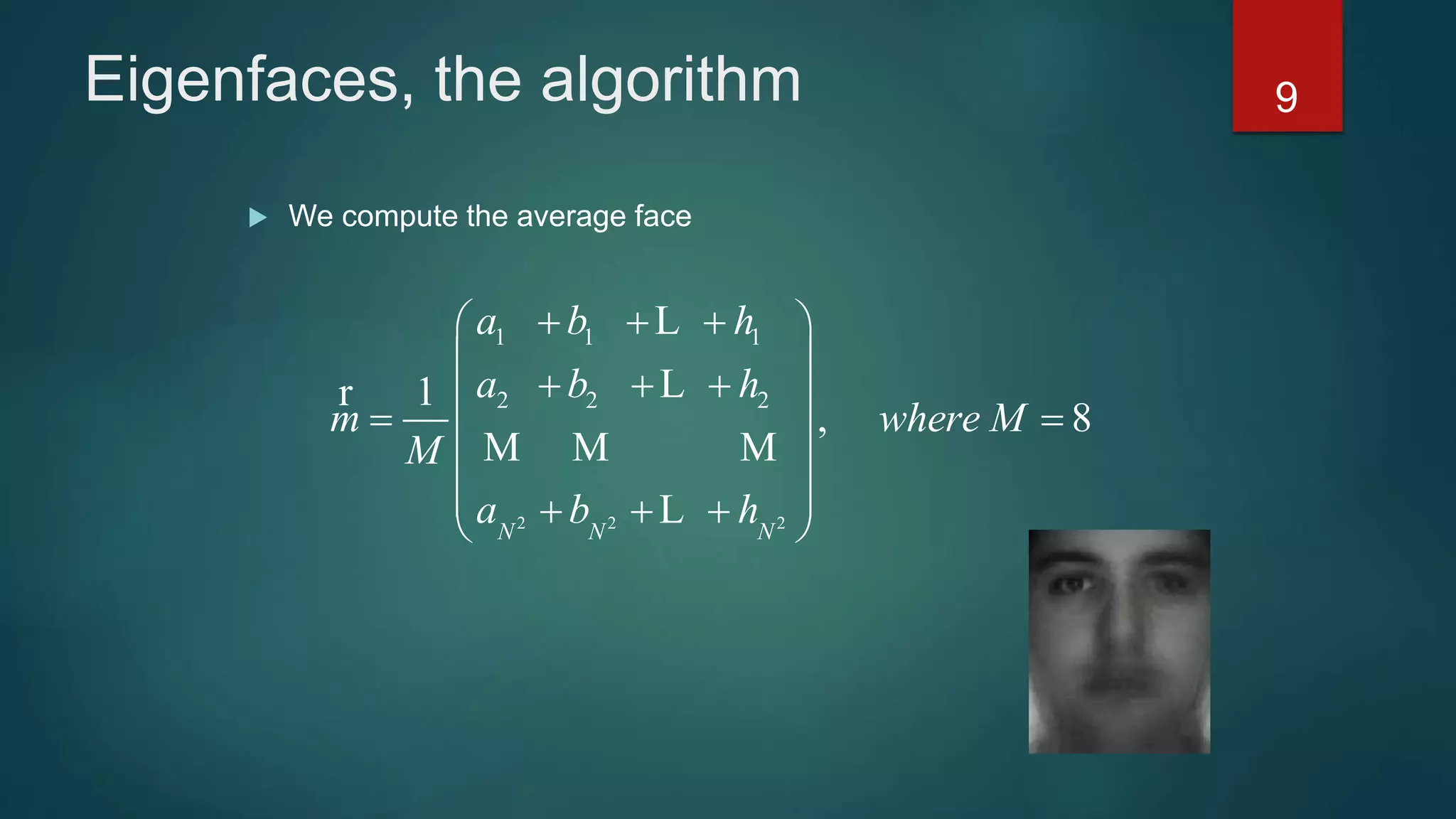

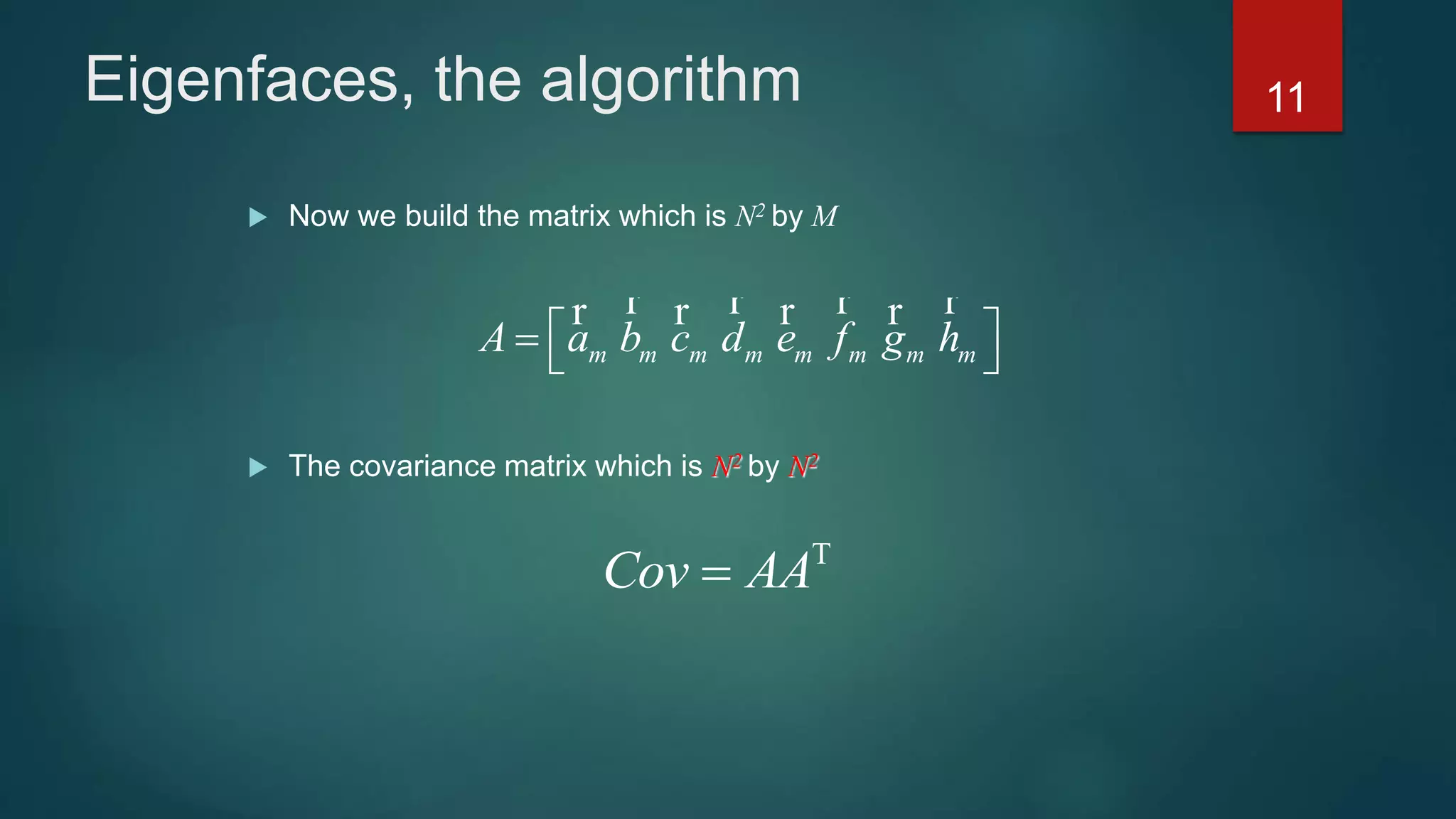

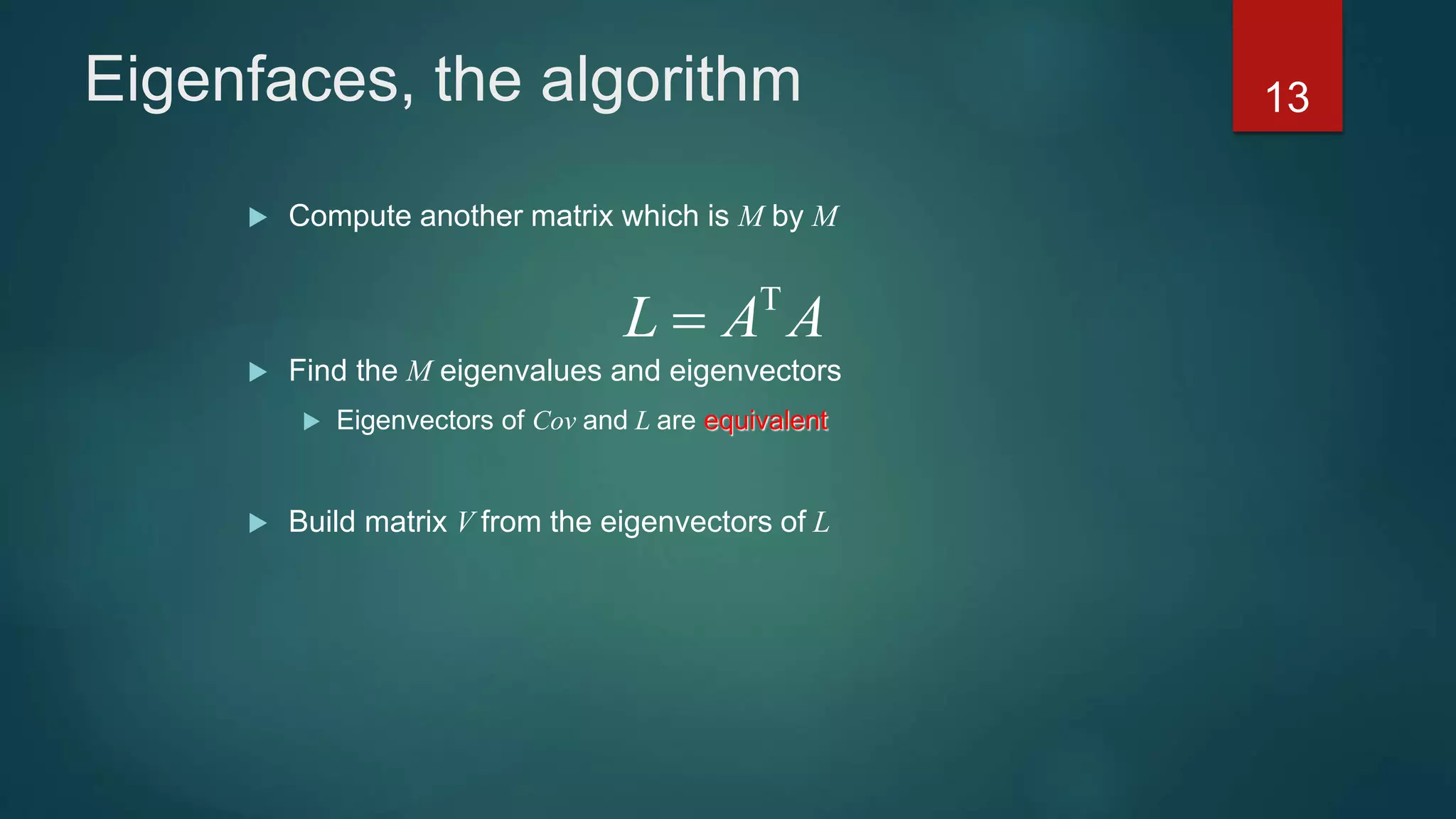

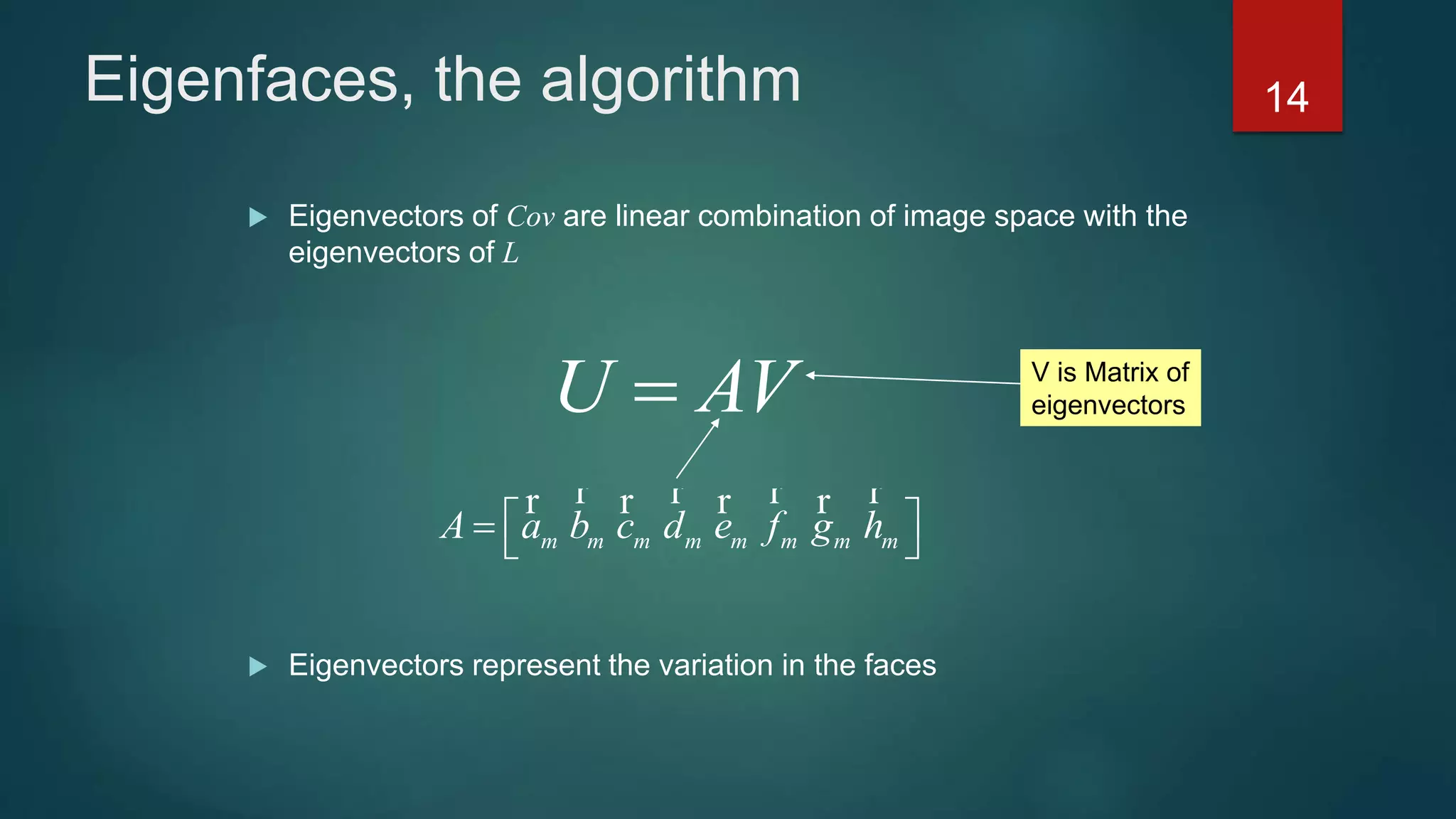

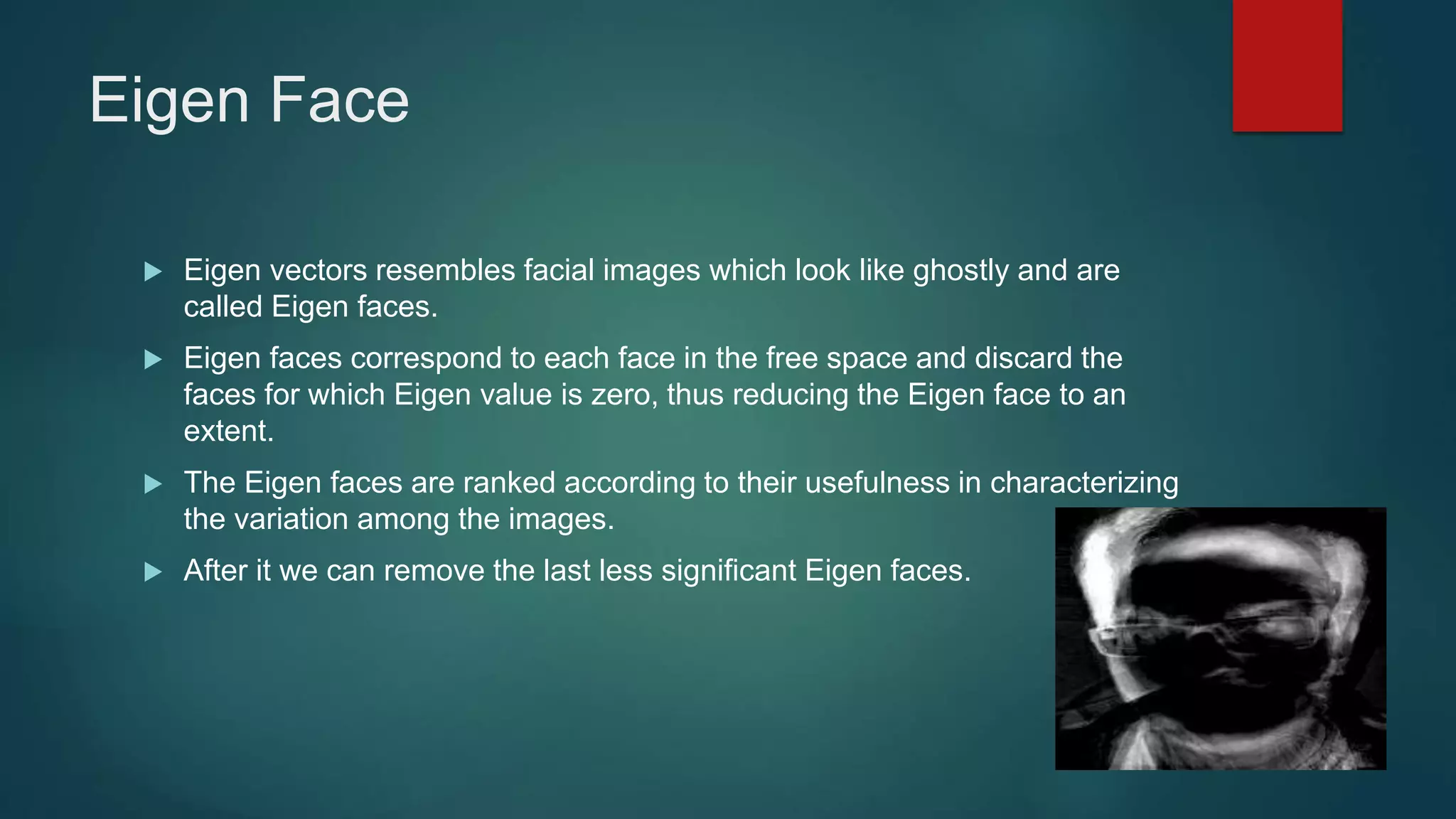

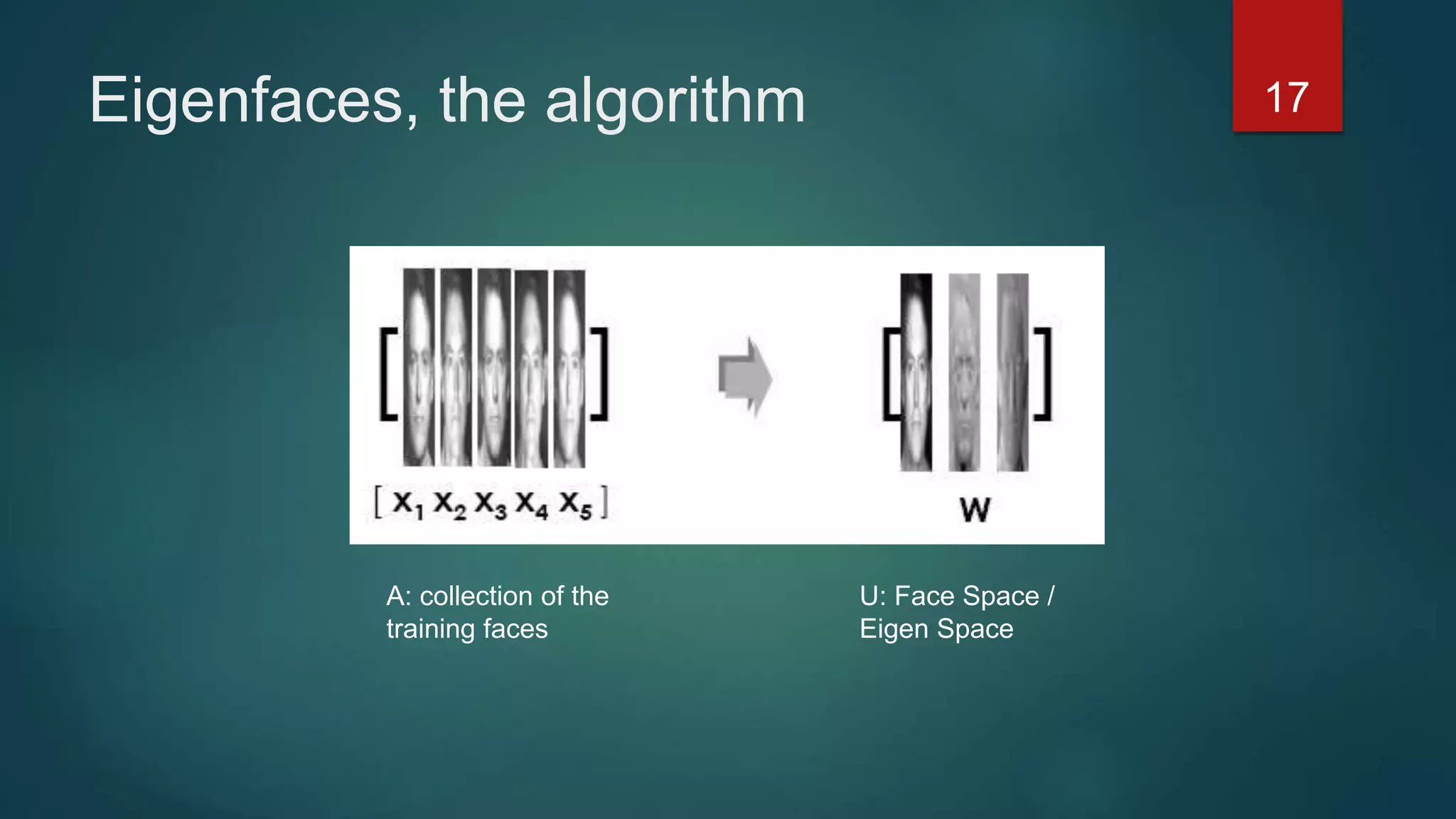

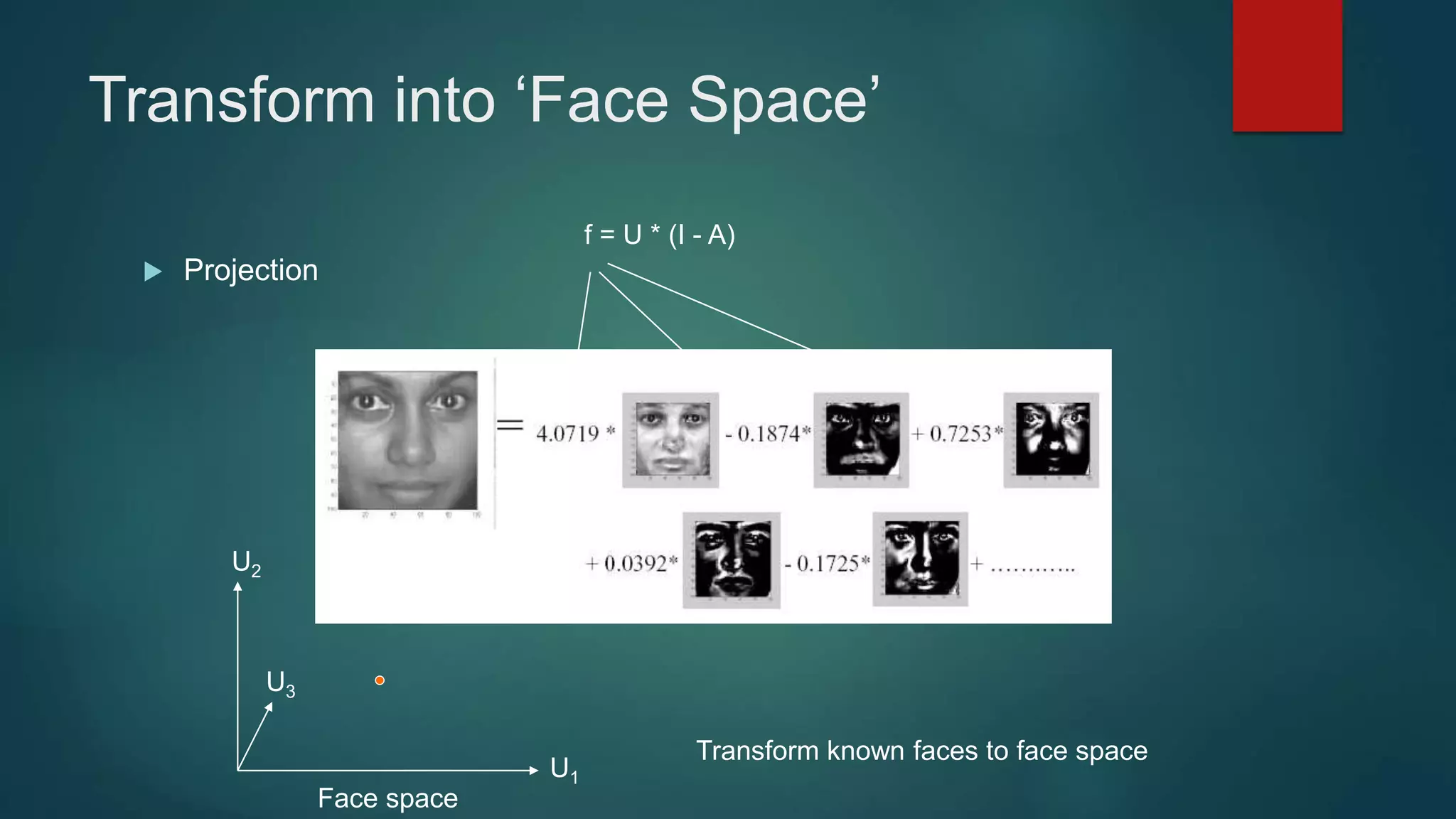

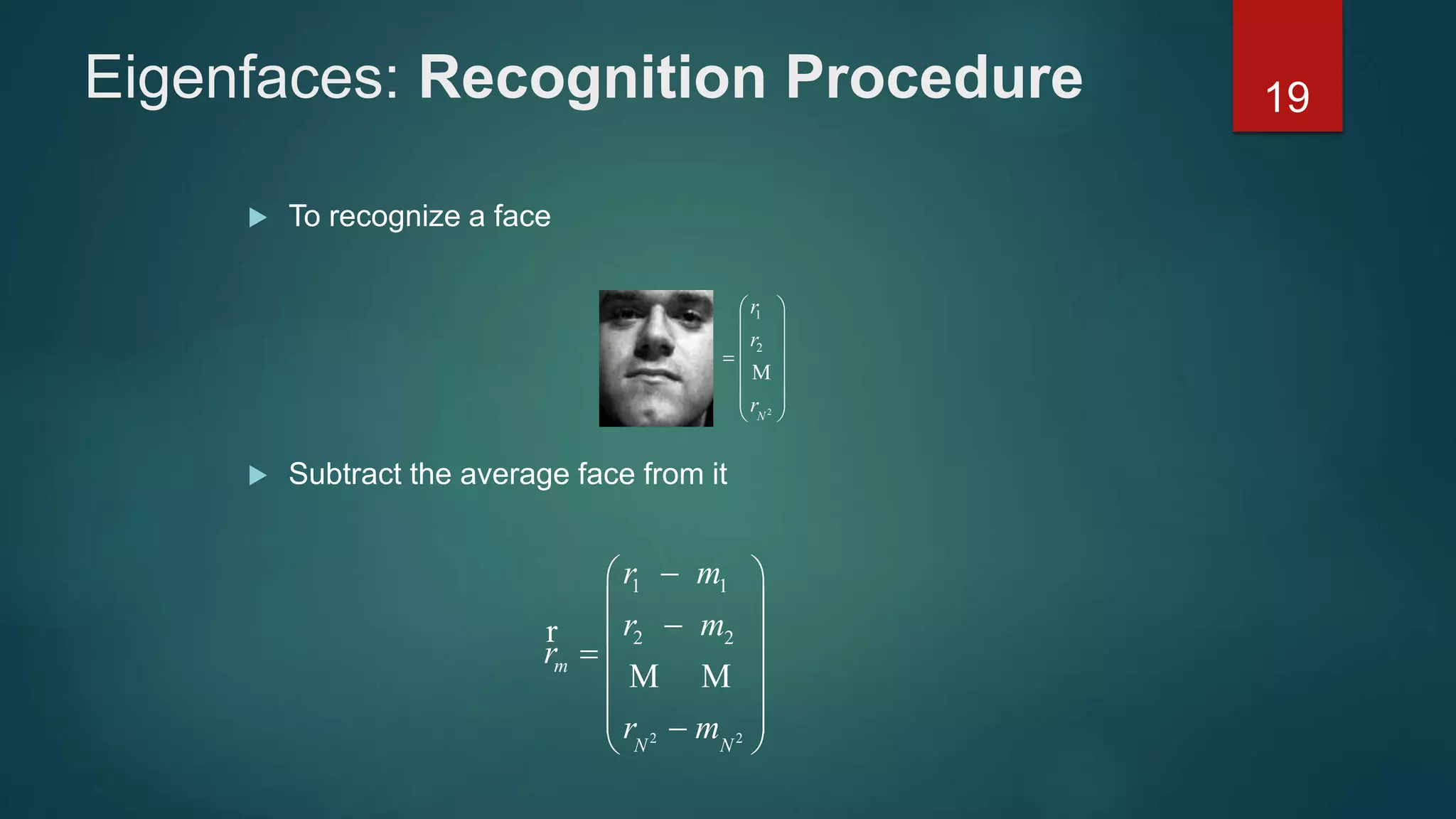

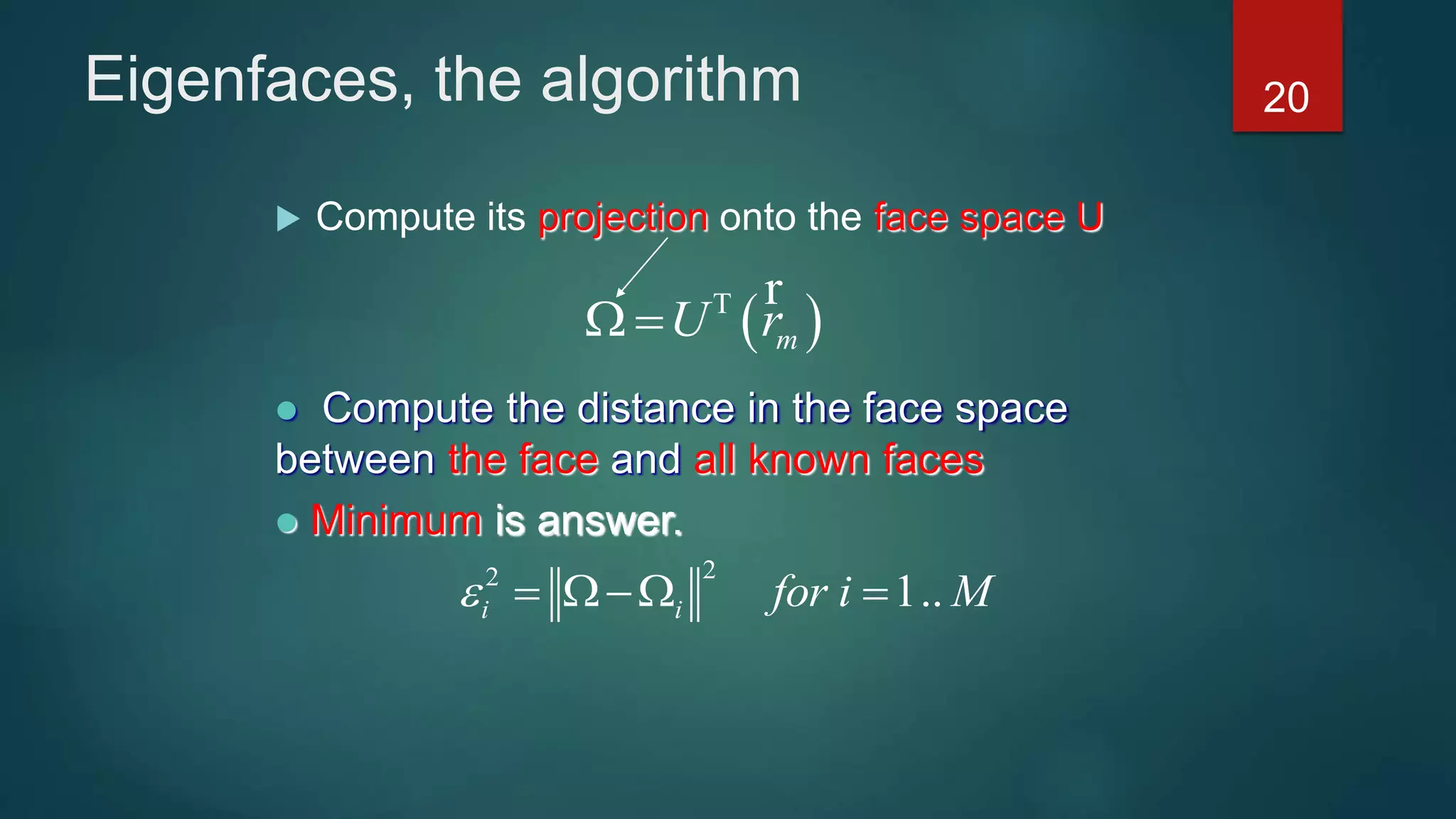

Principal component analysis (PCA) reduces the dimensionality of image data by finding the directions, called principal components, that account for the most variance in the data. It constructs a feature space from the eigenvectors of the covariance matrix of the images. Each image is then represented as a vector in this lower-dimensional feature space rather than in the original high-dimensional pixel space. The eigenvectors with the largest eigenvalues are the principal components, called "eigenfaces" when the images are faces, and they best represent the variation between images. A new image can be classified by projecting it into this eigenface space and comparing its coordinates with those of known images.
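The procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the method used in the slides: the function names (`fit_eigenfaces`, `project`, `classify`), the synthetic 64-pixel "images", and the nearest-neighbour classification rule are all assumptions made for the example. The eigenvectors are obtained from an SVD of the mean-centered data, which yields the same directions as an eigendecomposition of the covariance matrix.

```python
import numpy as np


def fit_eigenfaces(images, n_components):
    """Fit PCA on flattened images of shape (n_samples, n_pixels)."""
    mean = images.mean(axis=0)
    centered = images - mean
    # Rows of vt are eigenvectors of the covariance matrix of the data,
    # ordered by decreasing singular value (and so decreasing eigenvalue).
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    eigenvalues = s[:n_components] ** 2 / (images.shape[0] - 1)
    return mean, components, eigenvalues


def project(images, mean, components):
    """Map images from pixel space into the eigenface feature space."""
    return (images - mean) @ components.T


def classify(image, mean, components, train_coords, train_labels):
    """Assumed rule: label of the nearest training image in feature space."""
    coords = project(image[None, :], mean, components)
    distances = np.linalg.norm(train_coords - coords, axis=1)
    return train_labels[int(np.argmin(distances))]


# Synthetic stand-in for image data: two classes of noisy 64-pixel vectors.
rng = np.random.default_rng(0)
proto_a = rng.normal(size=64)
proto_b = rng.normal(size=64)
train = np.vstack([
    proto_a + 0.05 * rng.normal(size=(5, 64)),
    proto_b + 0.05 * rng.normal(size=(5, 64)),
])
labels = np.array(["a"] * 5 + ["b"] * 5)

mean, components, eigenvalues = fit_eigenfaces(train, n_components=3)
train_coords = project(train, mean, components)  # shape (10, 3)

query = proto_b + 0.05 * rng.normal(size=64)
predicted = classify(query, mean, components, train_coords, labels)
```

Here each 64-dimensional vector is reduced to 3 coordinates, and the query is labelled by its closest training vector in that reduced space. With real face images, each row of `images` would be a flattened grayscale image, and the number of retained components would typically be chosen from how quickly the eigenvalues decay.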