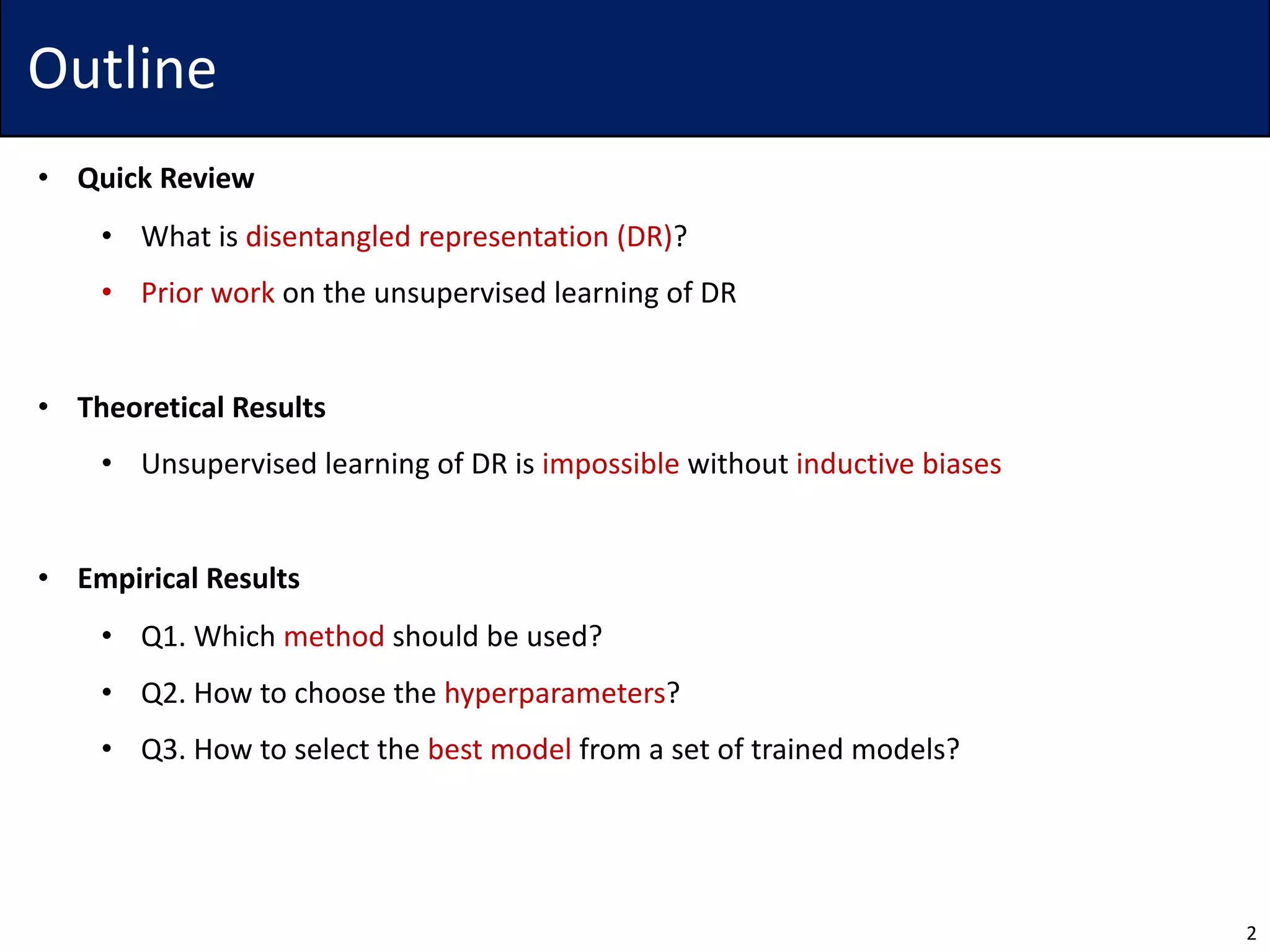

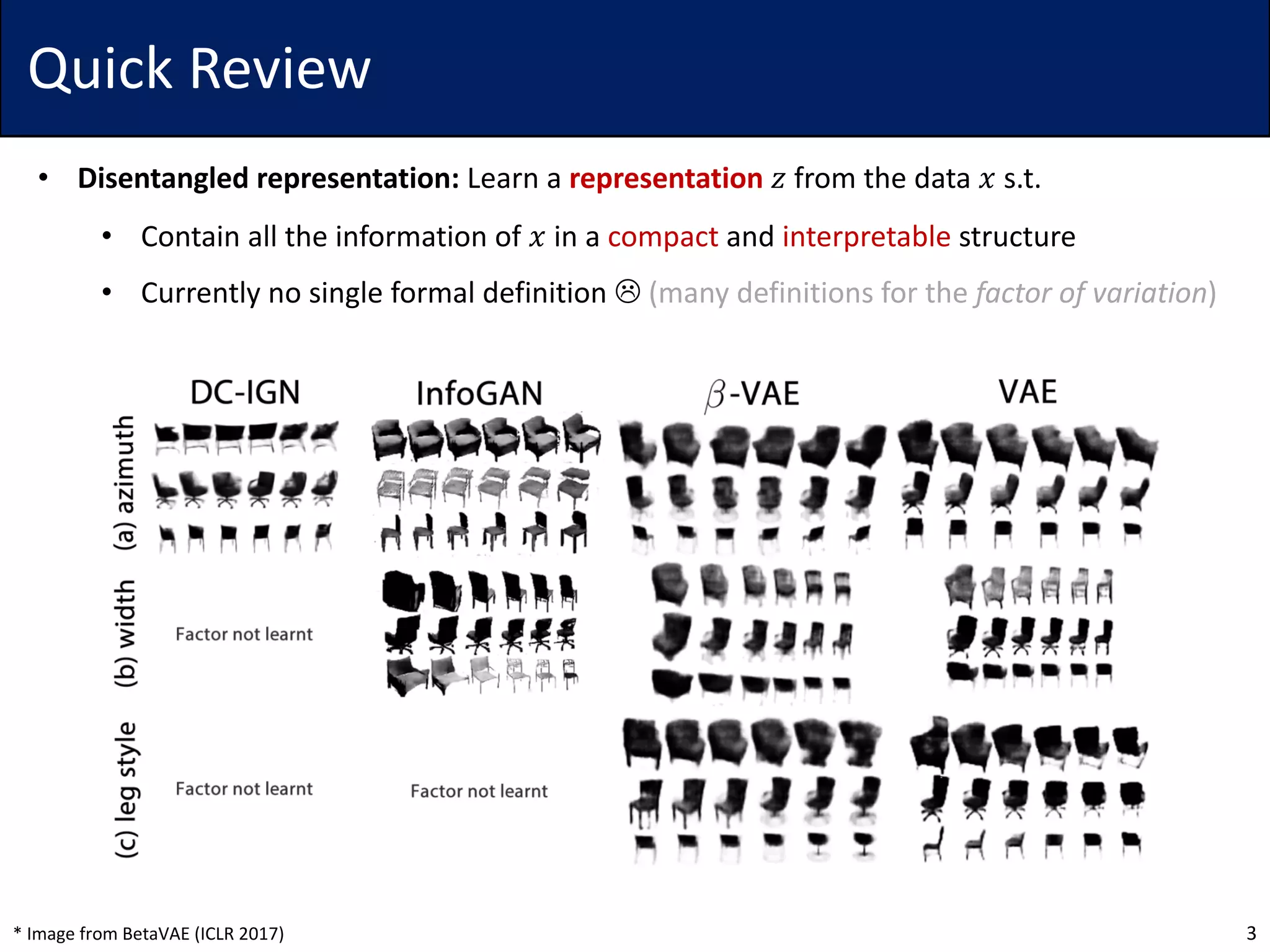

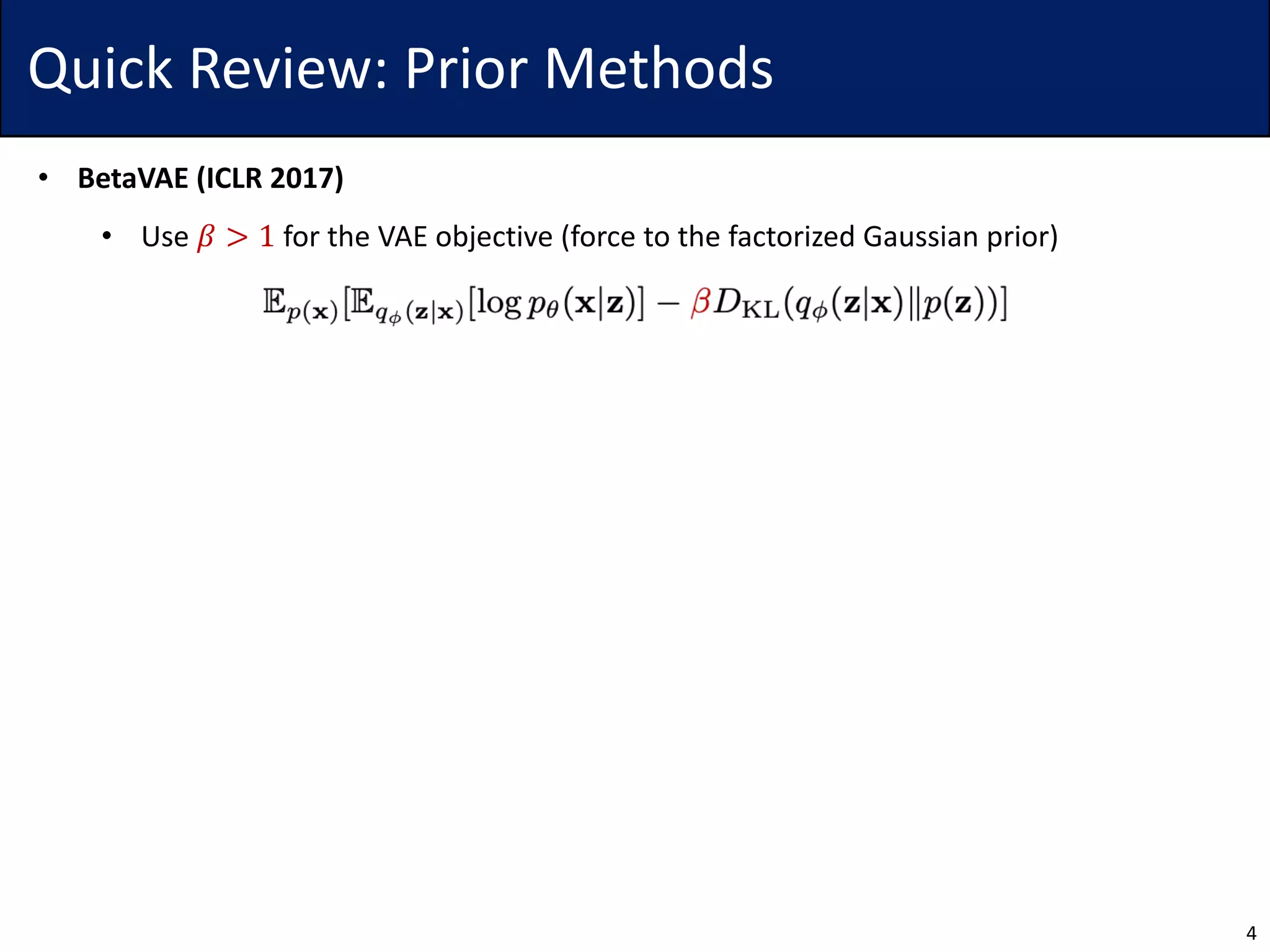

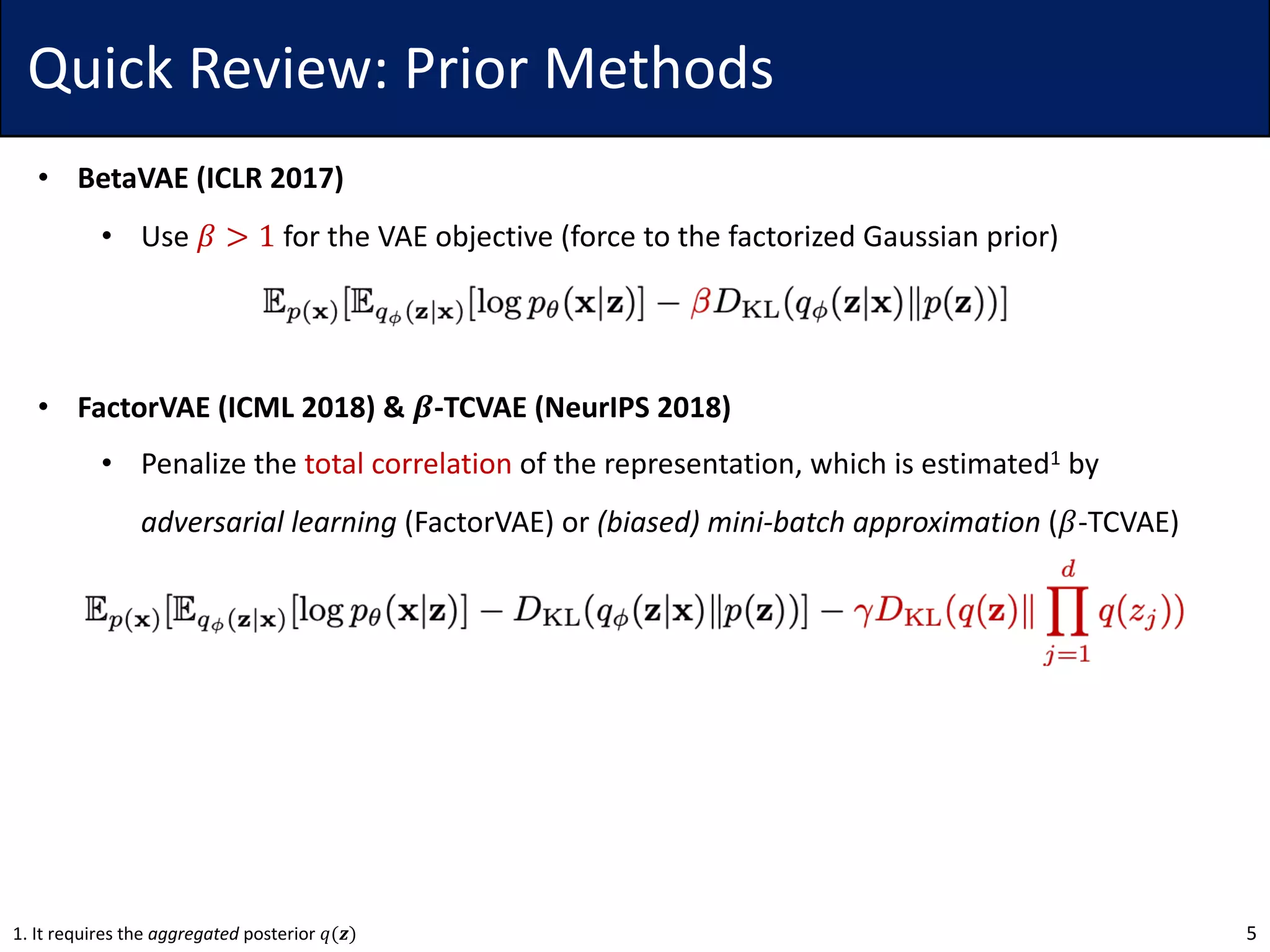

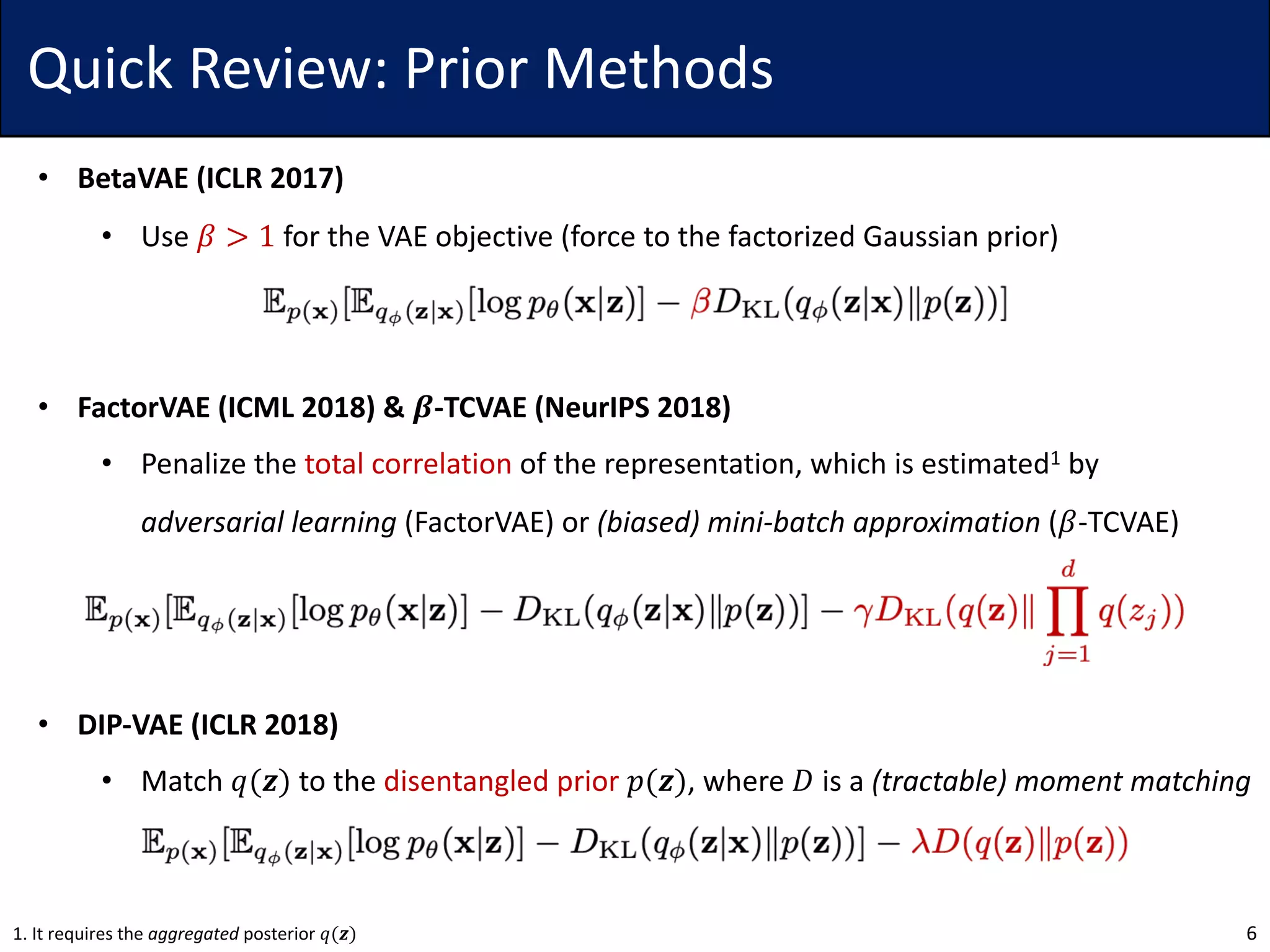

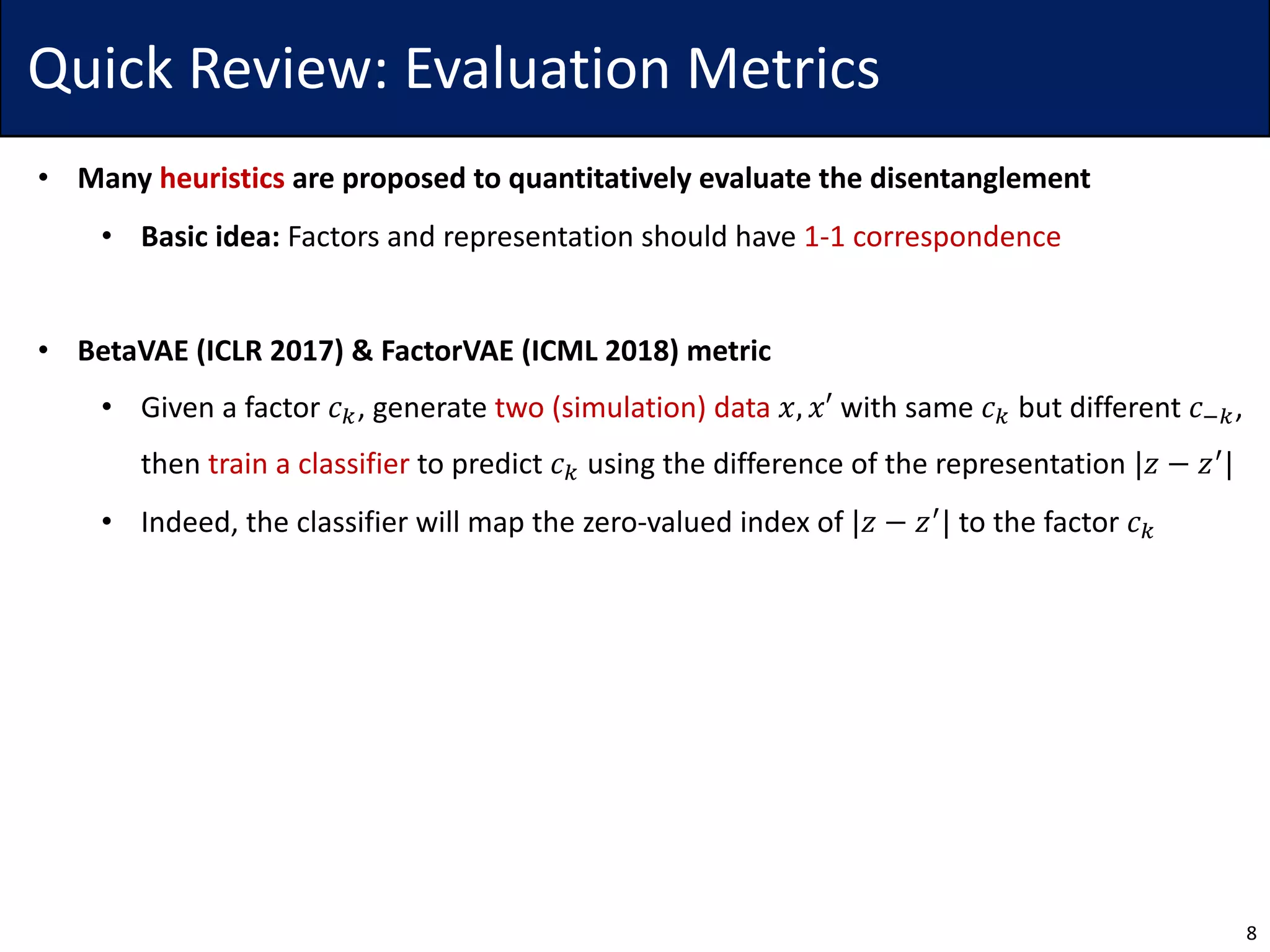

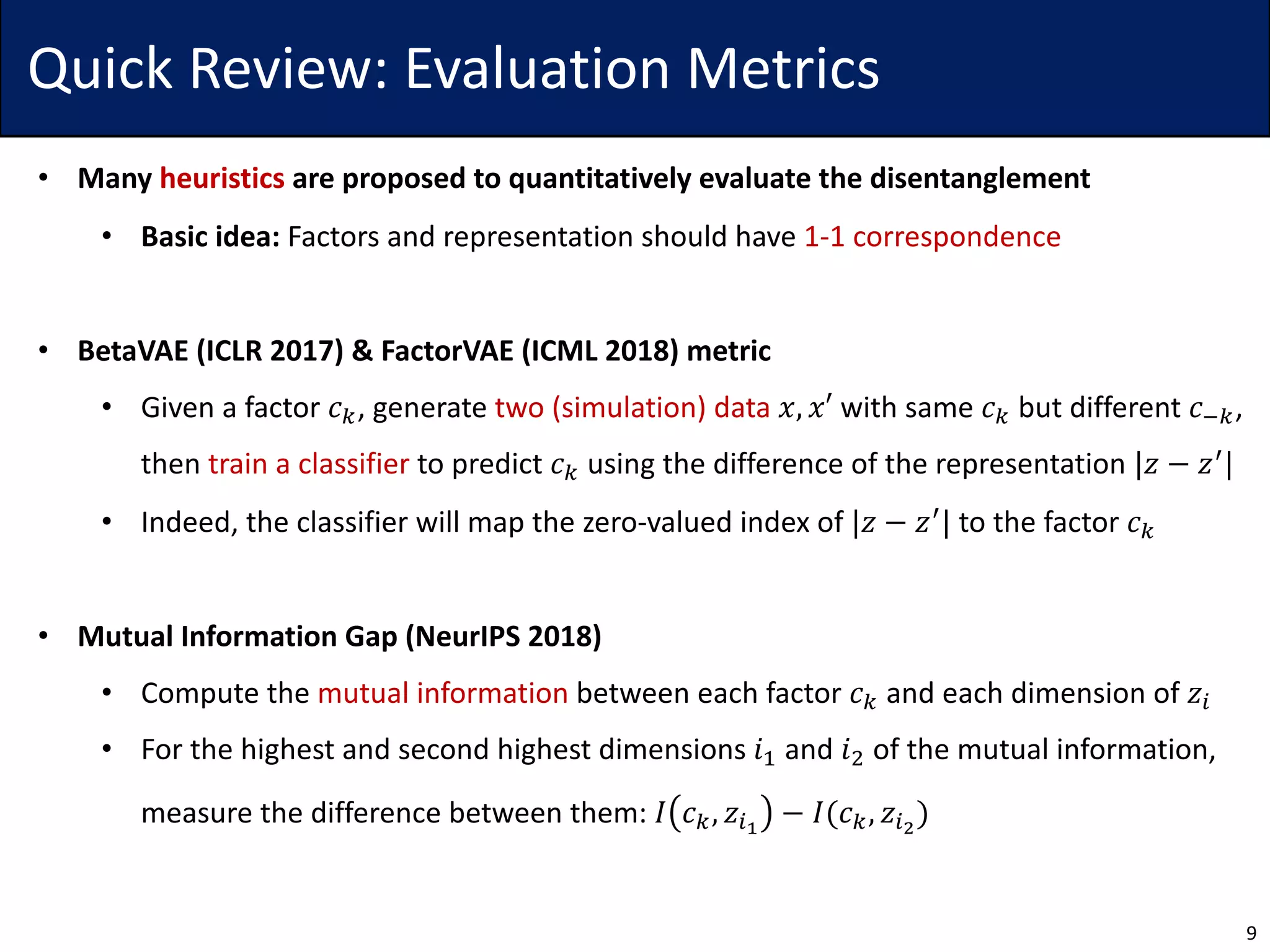

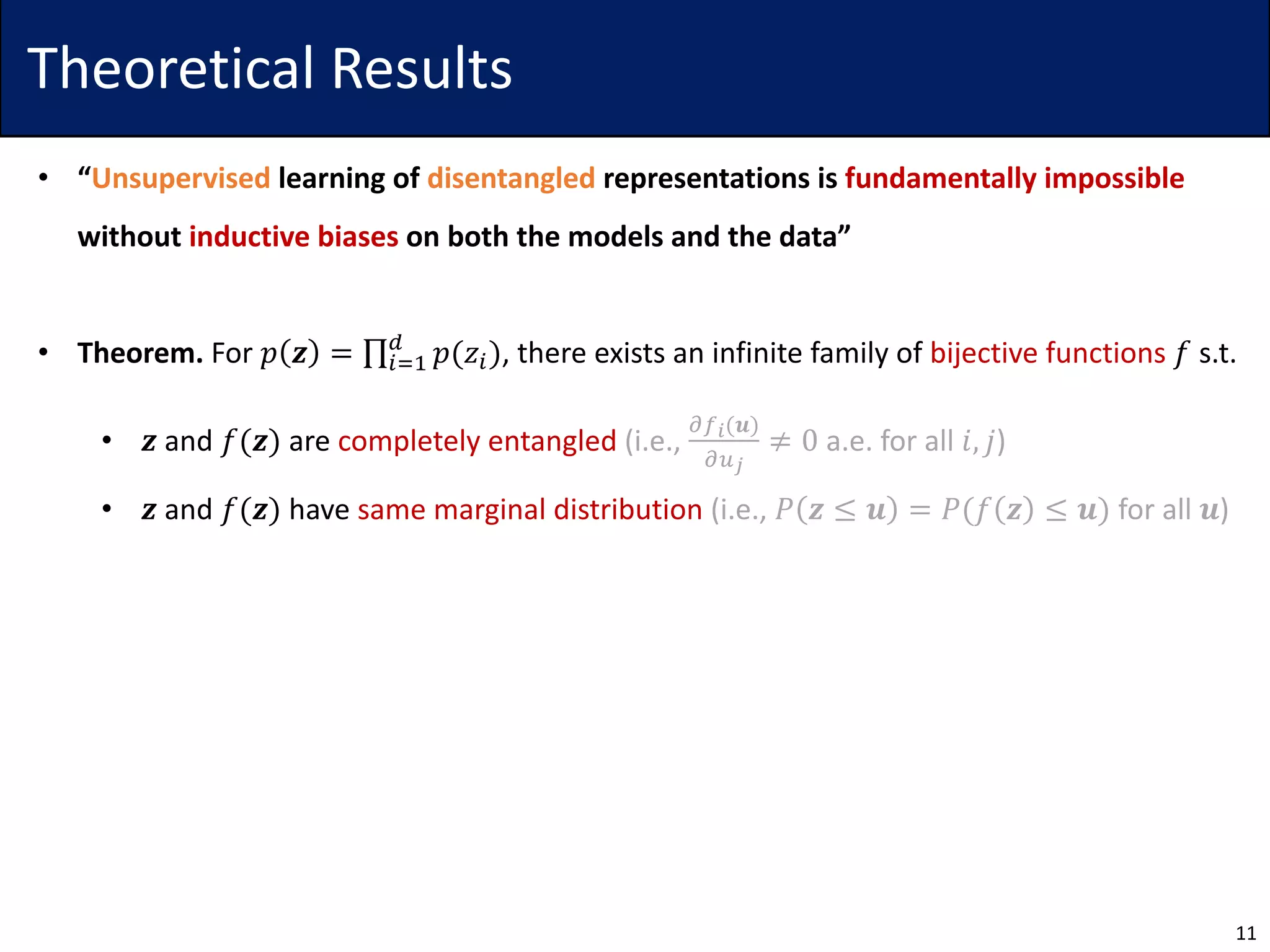

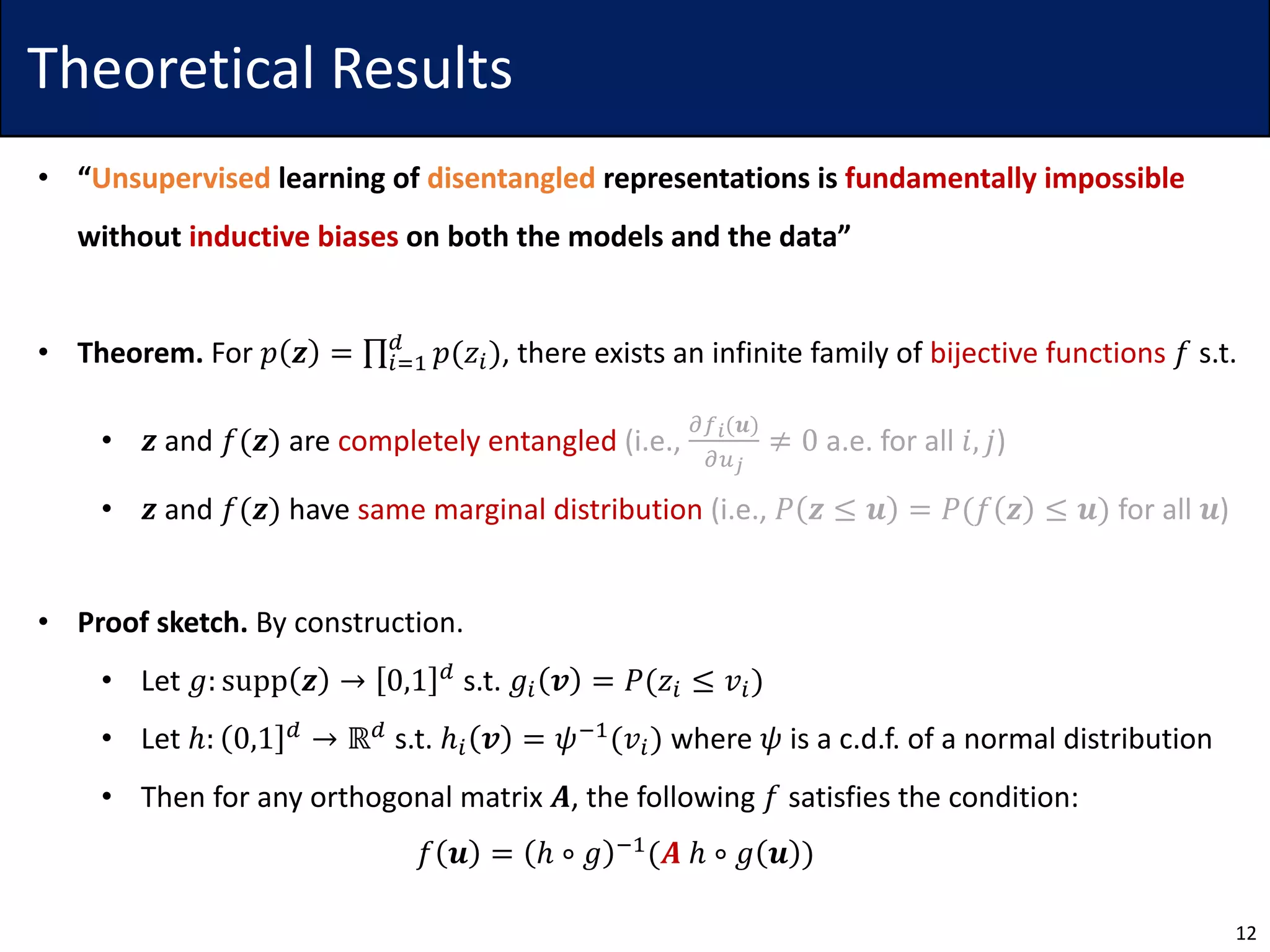

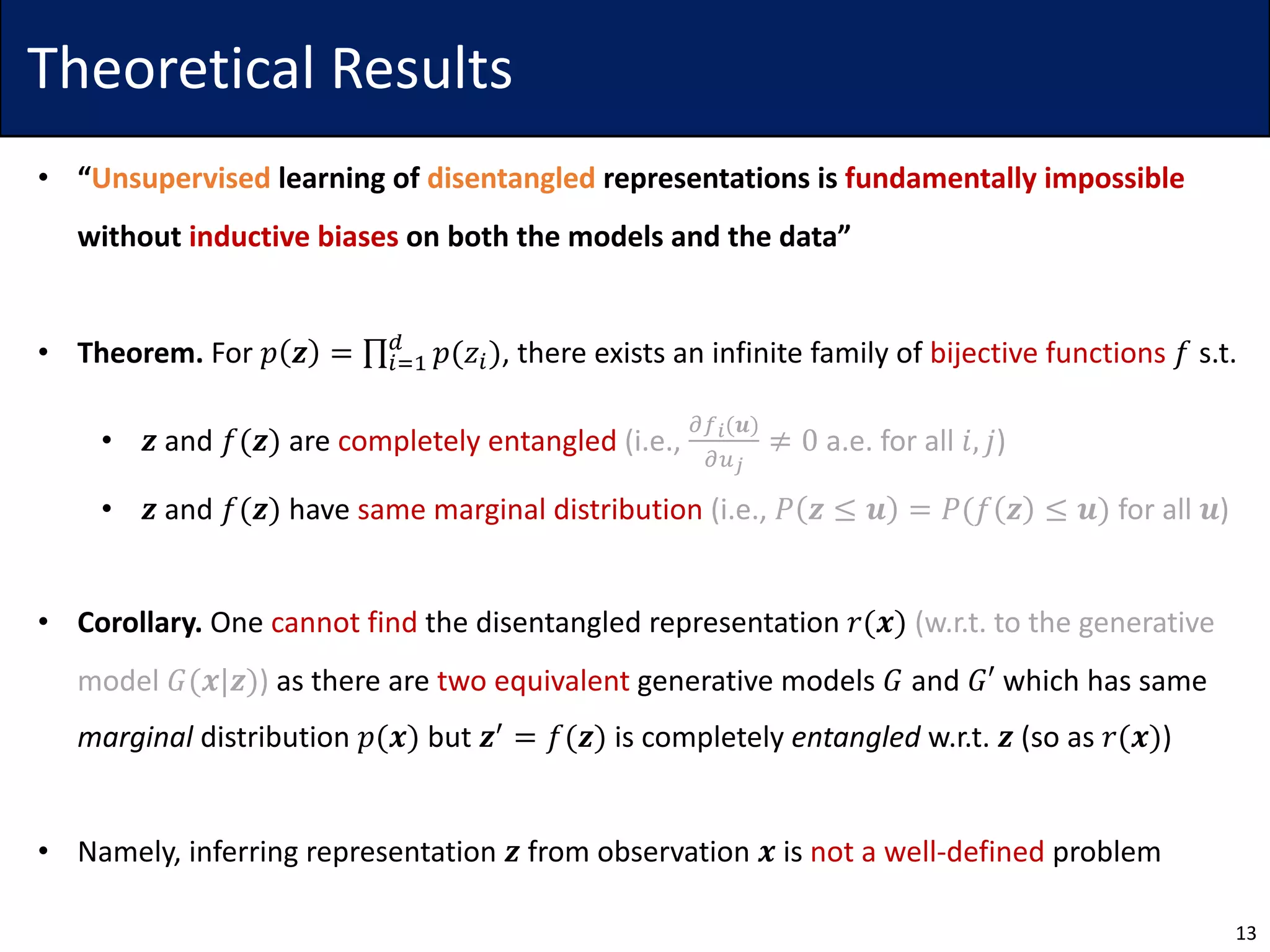

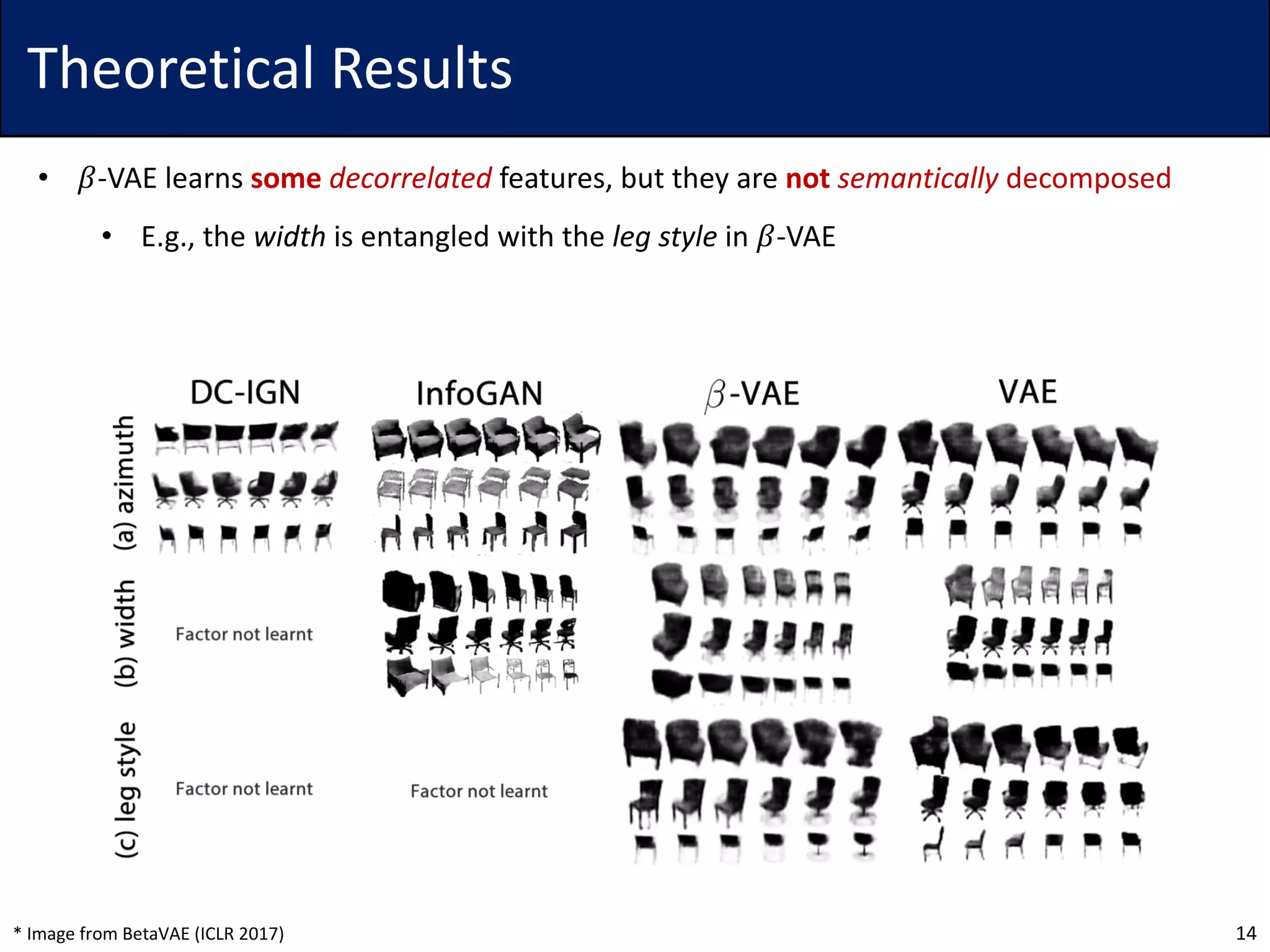

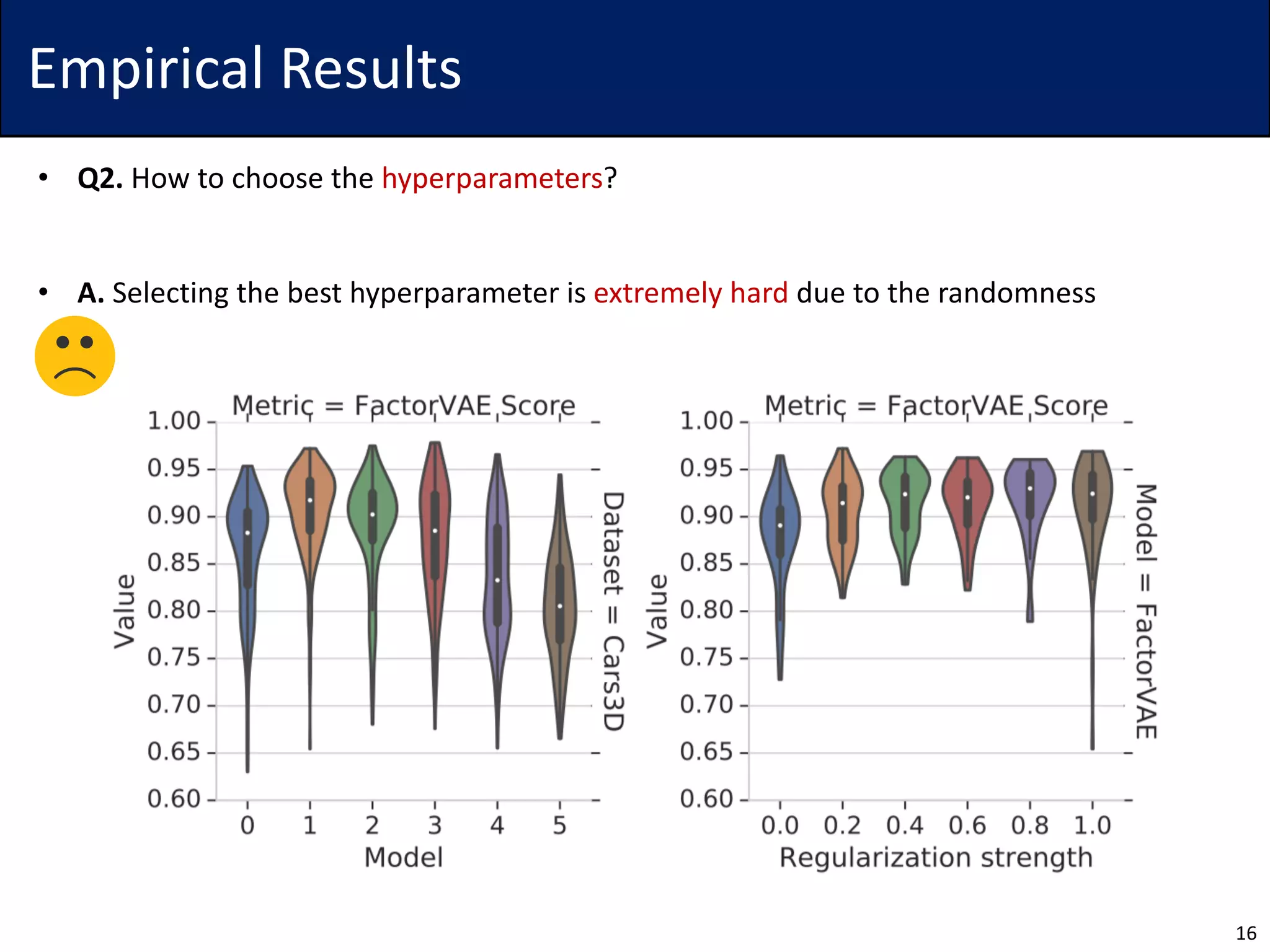

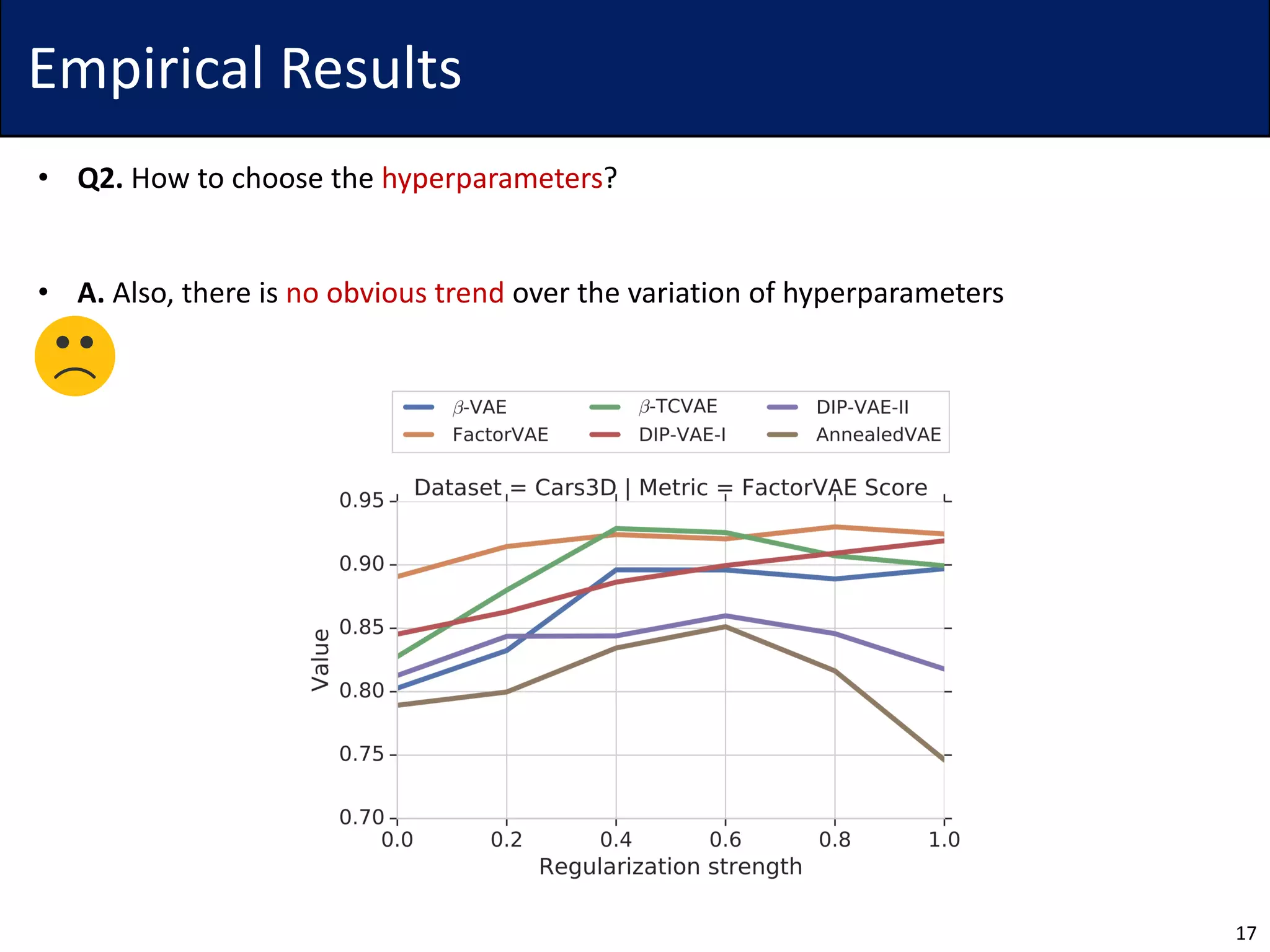

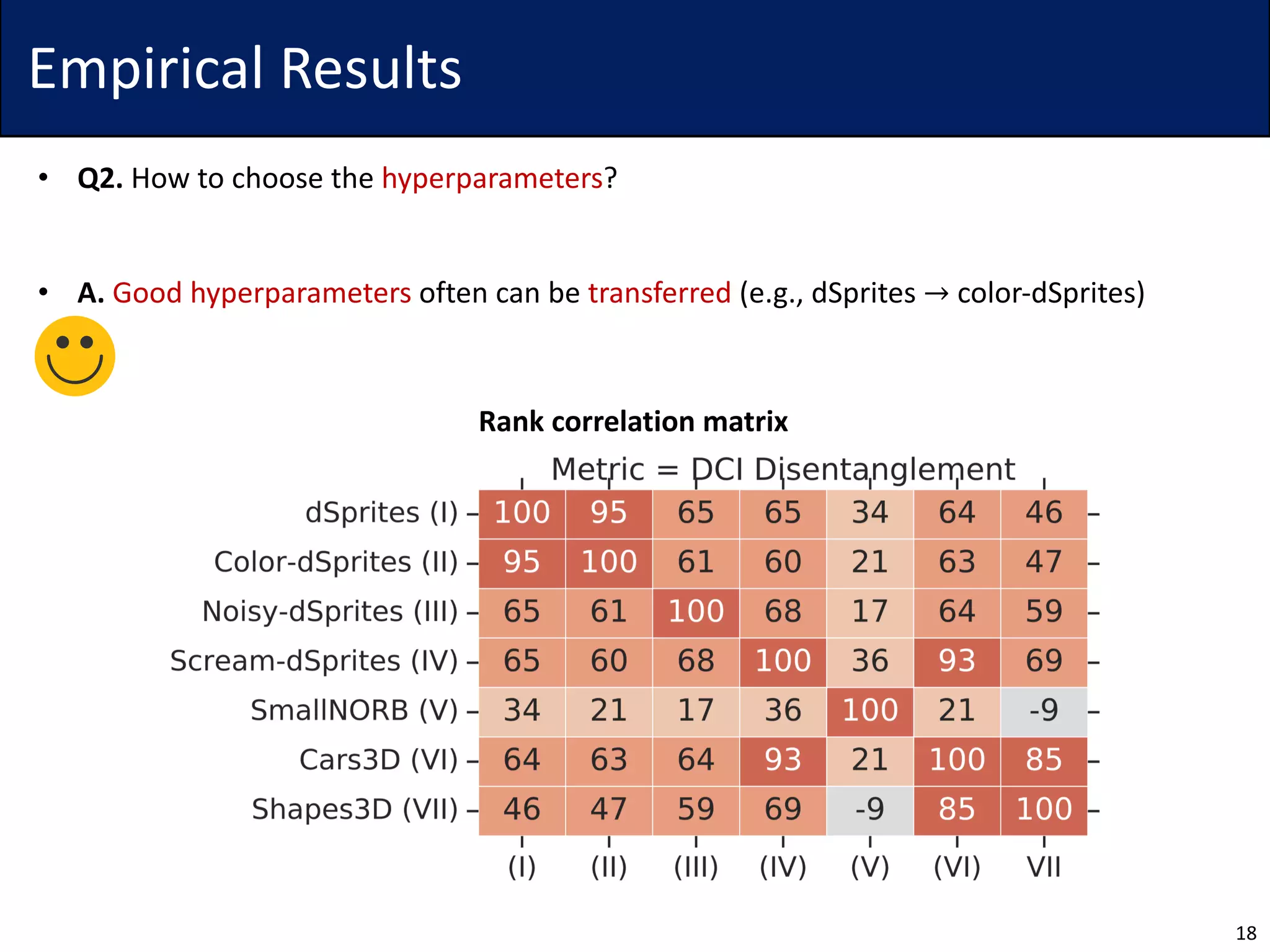

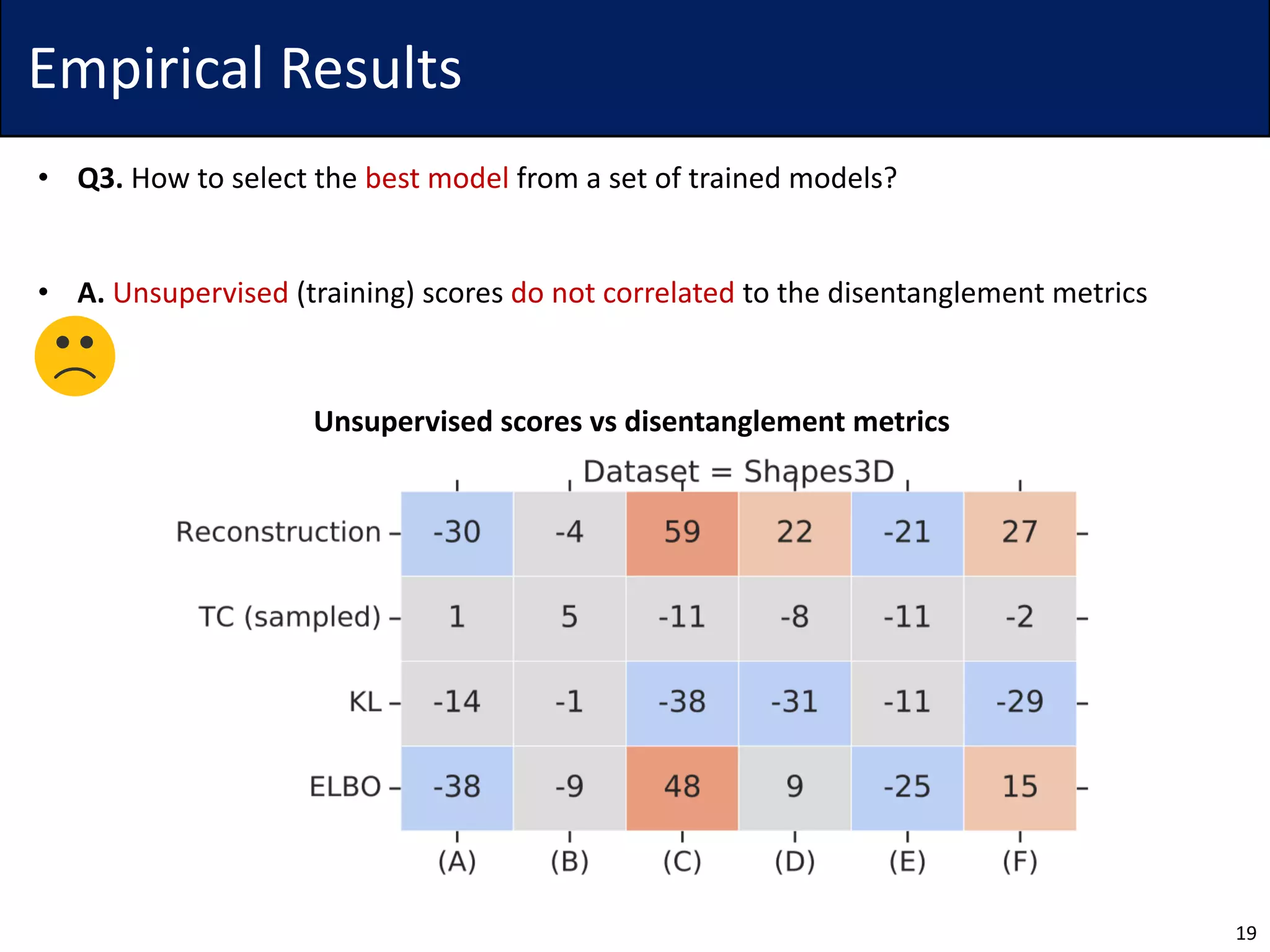

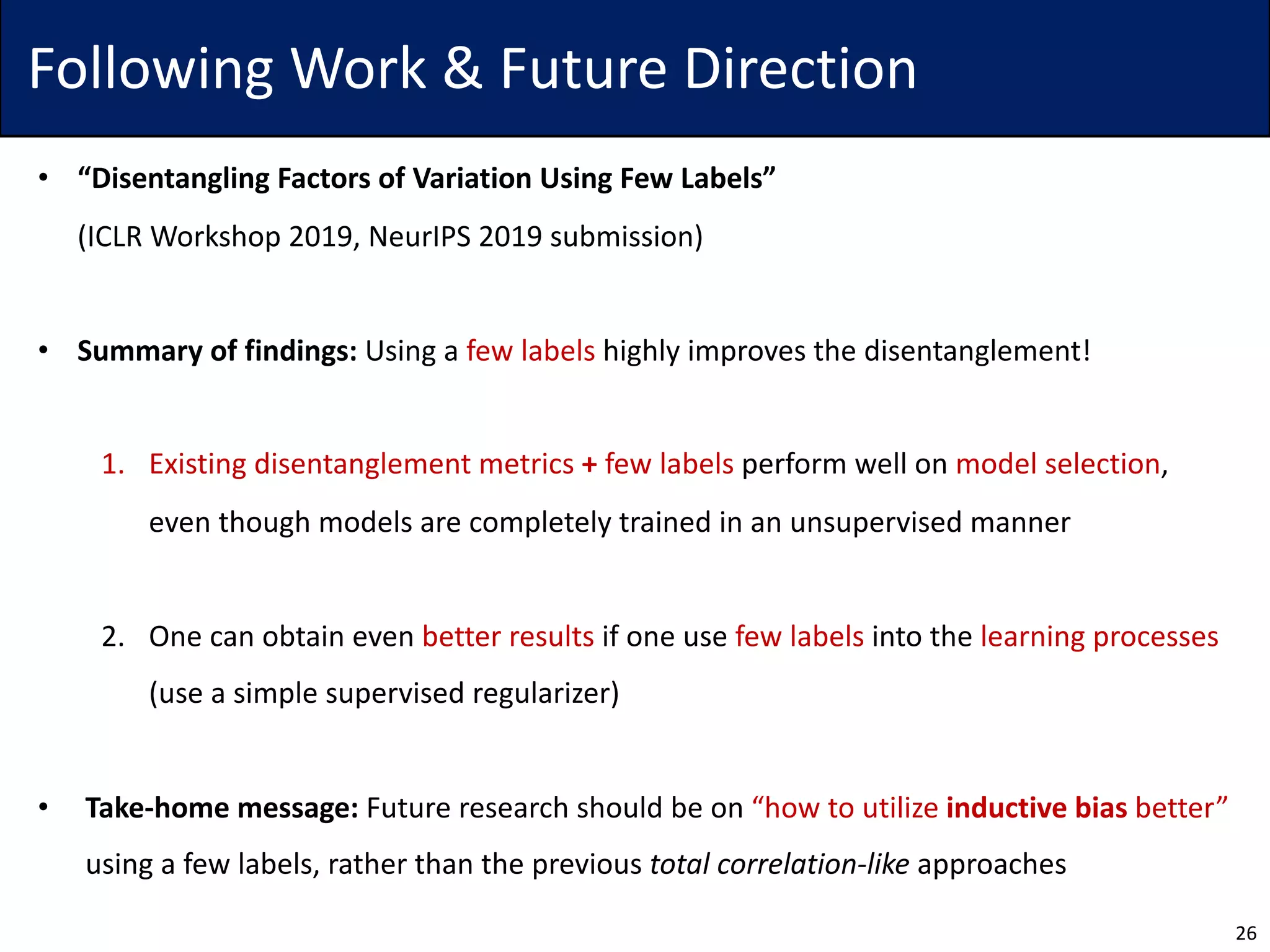

The document discusses the challenges of learning disentangled representations without supervision, emphasizing that such learning is fundamentally impossible without inductive biases. It reviews existing methods and evaluation metrics, and presents theoretical results that highlight the limitations of current approaches. Empirical findings suggest that hyperparameter choices significantly affect results and that methods require rigorous validation. The document closes with recommendations for future research, including improving disentanglement using only a few labels.