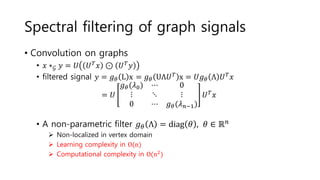

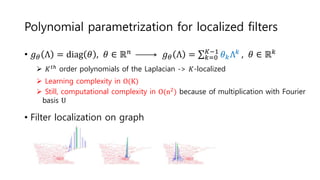

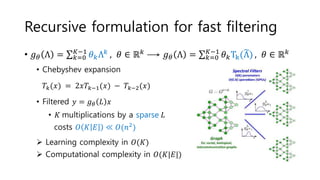

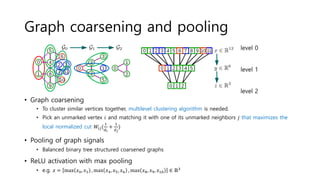

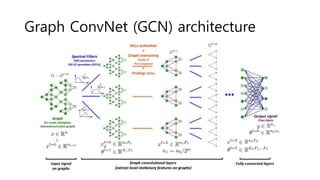

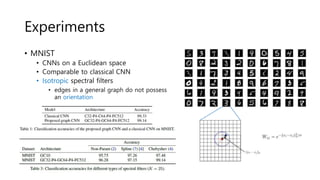

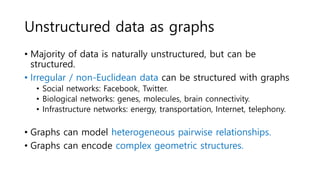

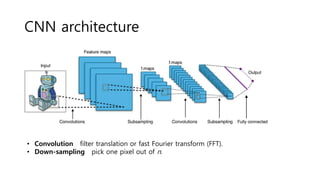

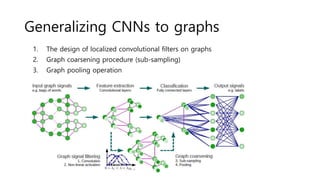

The document discusses how to apply convolutional neural networks (CNNs) to graphs, which model unstructured data such as social, biological, and infrastructure networks. It covers how to formulate convolution and down-sampling on graphs, including the design of localized convolutional filters and graph coarsening for pooling. Its central claim is that spectral graph theory yields efficient graph filtering and pooling, and the proposed filters can be evaluated with computational complexity linear in the number of graph edges.
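The summary mentions localized filters with linear complexity. Below is a minimal sketch of why a polynomial filter has both properties. It assumes a weighted undirected graph given as an edge list and the combinatorial Laplacian L = D − W. A filter y = Σ_{k=0}^{K} θ_k L^k x only mixes values within K hops. It can also be evaluated with K Laplacian-vector products, each of which touches every edge once. The function names are hypothetical. The plain monomial basis is a simplification: a Chebyshev polynomial basis is commonly used instead for better numerical behavior.

```python
from typing import List, Tuple

# (i, j, weight) for one undirected edge; hypothetical input format.
Edge = Tuple[int, int, float]


def laplacian_matvec(n: int, edges: List[Edge], x: List[float]) -> List[float]:
    """Compute L x for the combinatorial Laplacian L = D - W in O(n + |E|) time."""
    y = [0.0] * n
    for i, j, w in edges:
        # (L x)_i = sum_j w_ij * (x_i - x_j): each edge adds to both endpoints.
        diff = w * (x[i] - x[j])
        y[i] += diff
        y[j] -= diff
    return y


def polynomial_filter(n: int, edges: List[Edge], x: List[float],
                      theta: List[float]) -> List[float]:
    """Apply y = sum_k theta[k] * L^k x using len(theta) - 1 Laplacian products."""
    y = [theta[0] * v for v in x]
    power = list(x)  # holds L^k x, starting at k = 0
    for coeff in theta[1:]:
        power = laplacian_matvec(n, edges, power)  # L^(k+1) x from L^k x
        y = [a + coeff * b for a, b in zip(y, power)]
    return y


# Usage: a 5-node path graph 0-1-2-3-4 with unit weights and an impulse at node 0.
path_edges = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0), (3, 4, 1.0)]
impulse = [1.0, 0.0, 0.0, 0.0, 0.0]
y = polynomial_filter(5, path_edges, impulse, [0.0, 0.0, 1.0])  # y = L^2 x
print(y)  # [2.0, -3.0, 1.0, 0.0, 0.0]
```

With K = 2, the response to an impulse at node 0 is zero at nodes 3 and 4, which are more than two hops away. The total cost is K passes over the edge list, so it grows linearly with the number of edges for a fixed K.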

![Graph Fourier Transform](https://image.slidesharecdn.com/convolutionalneuralnetworksongraphs-170216024406/85/Convolutional-Neural-Networks-on-Graphs-with-Fast-Localized-Spectral-Filtering-7-320.jpg)

Graph Fourier Transform

- Eigenvalue decomposition of the graph Laplacian: $L = U \Lambda U^T$
- Graph Fourier basis: $U = [u_0, \dots, u_{n-1}]$
- Graph frequencies: $\Lambda = \begin{bmatrix} \lambda_0 & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \lambda_{n-1} \end{bmatrix}$

1. Graph signal $x : V \to \mathbb{R}$, $x \in \mathbb{R}^n$
2. Transform $\hat{x} = U^T x \in \mathbb{R}^n$
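The two steps above can be checked numerically. This is a minimal NumPy sketch under two assumptions: a small hypothetical 4-node cycle graph, and the combinatorial Laplacian L = D − W, since the slide does not say which Laplacian is used. The sketch computes the eigendecomposition and applies the forward transform x̂ = Uᵀx. It then inverts the transform with x = Ux̂, which works because U is orthogonal.

```python
import numpy as np

# Hypothetical example graph: adjacency matrix W of an unweighted 4-node cycle.
W = np.array([
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
])
D = np.diag(W.sum(axis=1))  # degree matrix
L = D - W  # combinatorial graph Laplacian (assumed choice)

# Eigendecomposition L = U Lambda U^T. eigh is for symmetric matrices and
# returns eigenvalues in ascending order with orthonormal eigenvectors as columns.
lam, U = np.linalg.eigh(L)
Lambda = np.diag(lam)  # graph frequencies on the diagonal

x = np.array([1.0, 2.0, 3.0, 4.0])  # graph signal x: V -> R, one value per node
x_hat = U.T @ x  # forward graph Fourier transform
x_rec = U @ x_hat  # inverse transform

print(np.allclose(U @ Lambda @ U.T, L))  # True: the decomposition reproduces L
print(np.allclose(x_rec, x))  # True: the transform is invertible
```

For a connected graph, the lowest frequency λ₀ is 0 and its eigenvector is constant. The coefficient x̂₀ is therefore proportional to the sum of the signal; here |x̂₀| = (1 + 2 + 3 + 4) / 2 = 5. A dense eigendecomposition like this costs O(n³), which is one reason filters that avoid computing U are attractive on large graphs.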