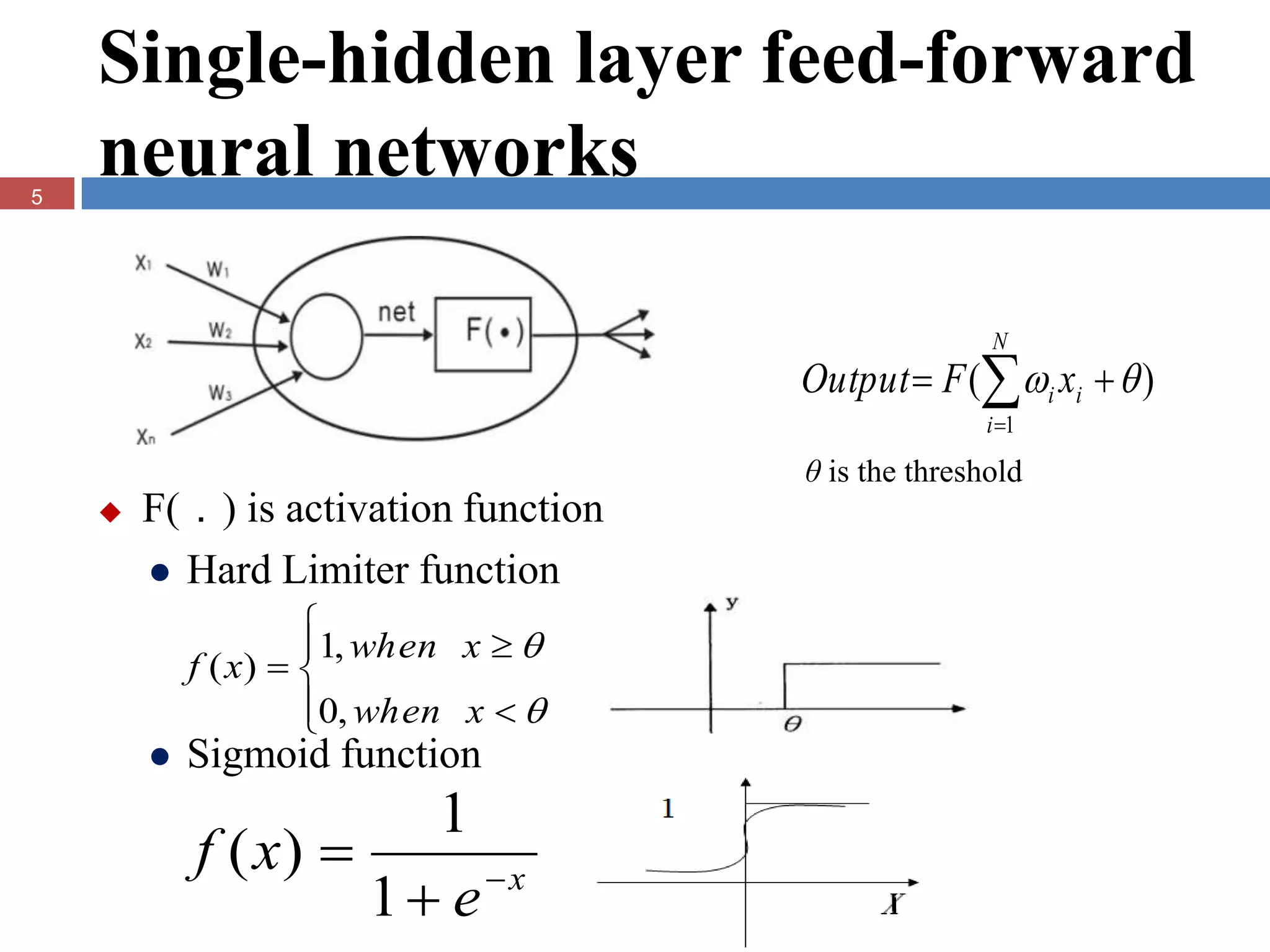

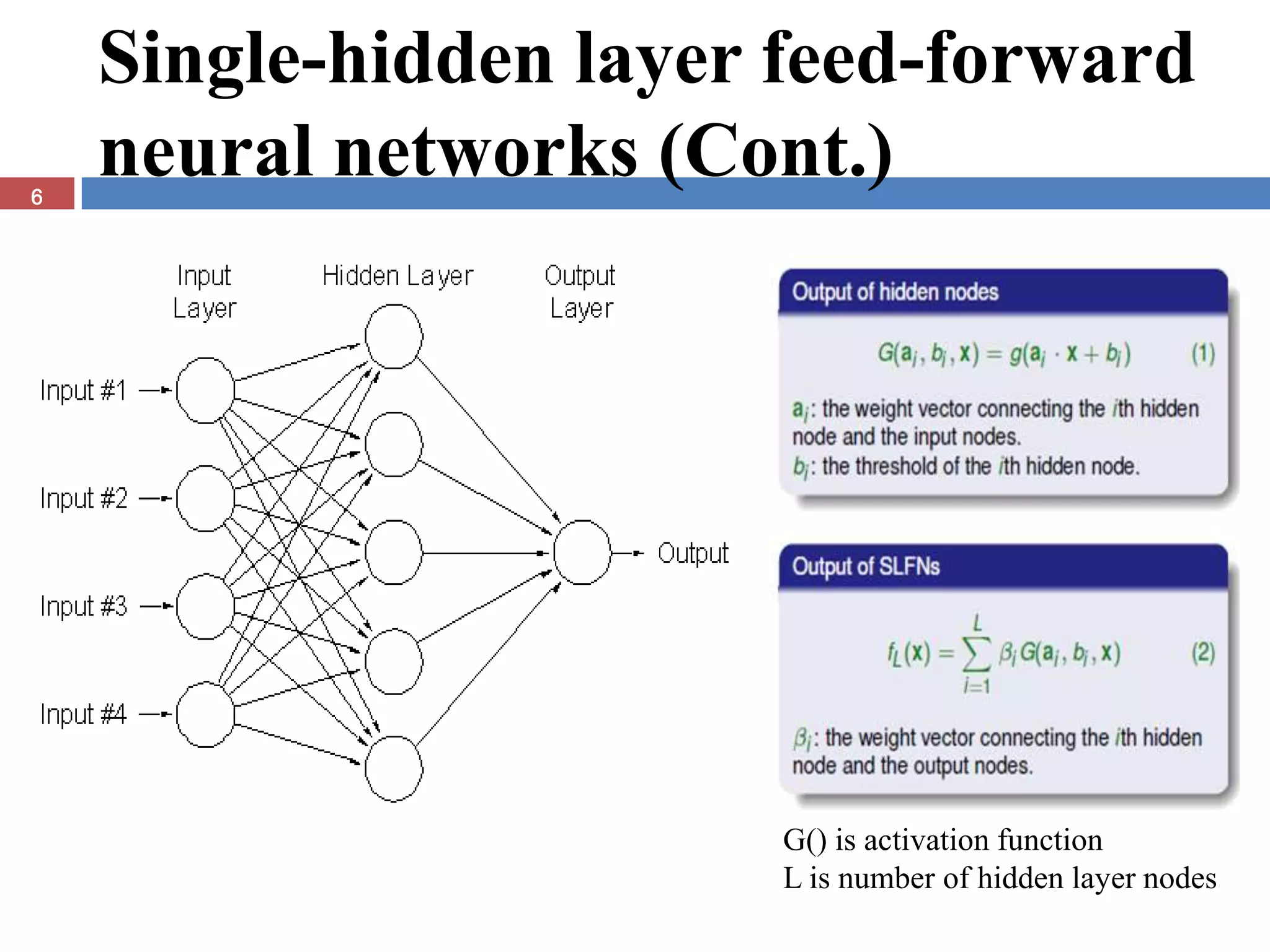

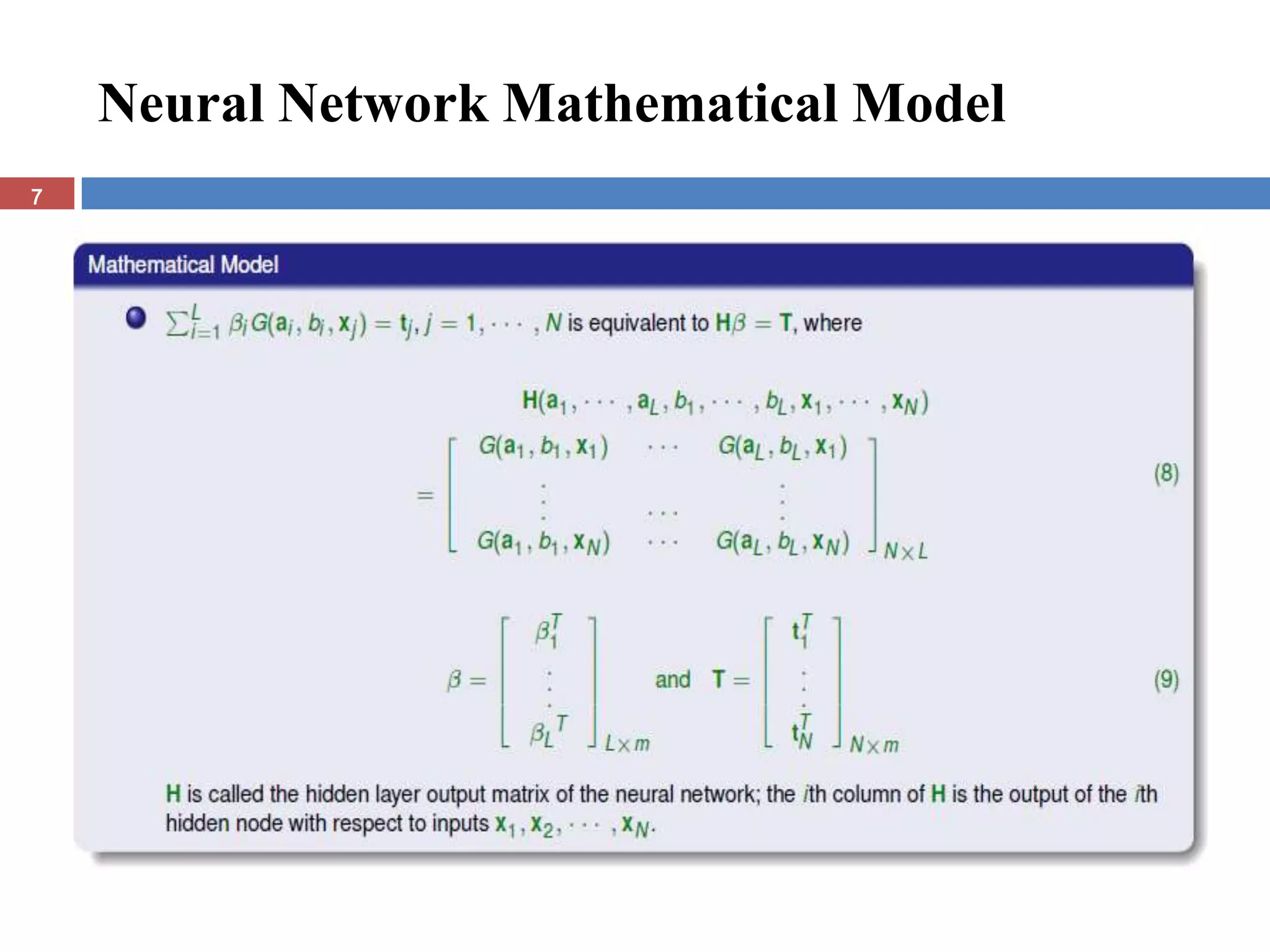

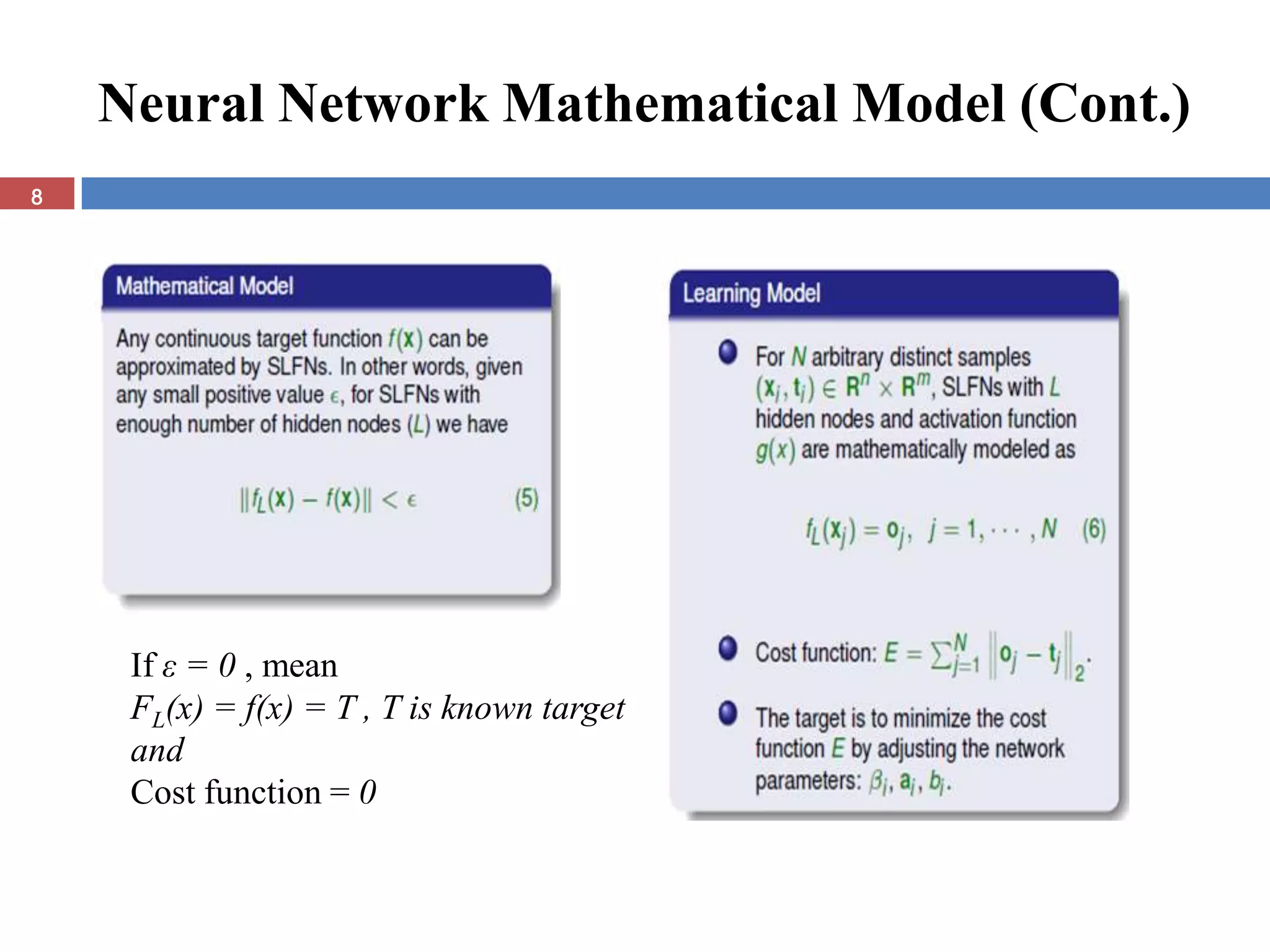

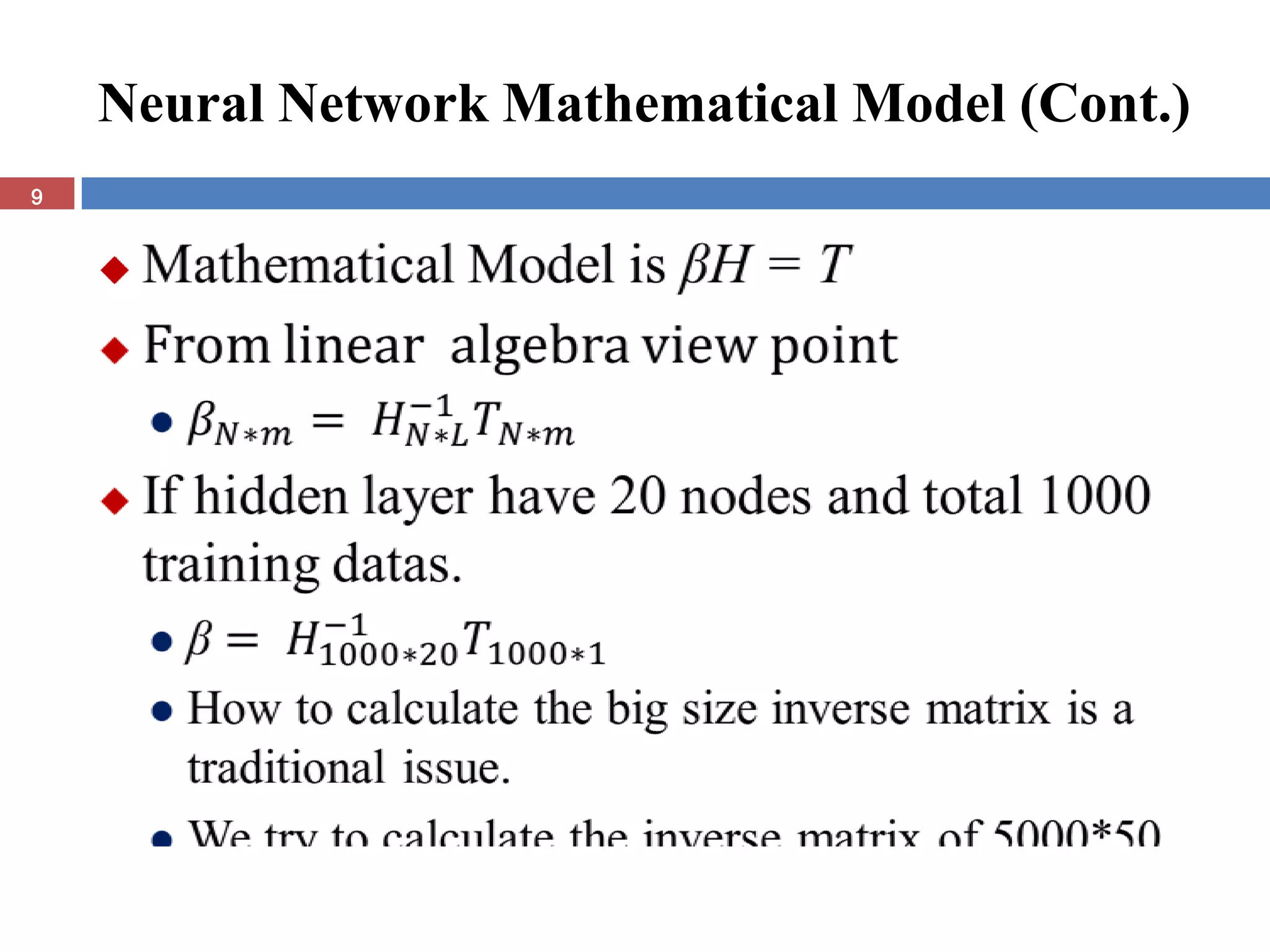

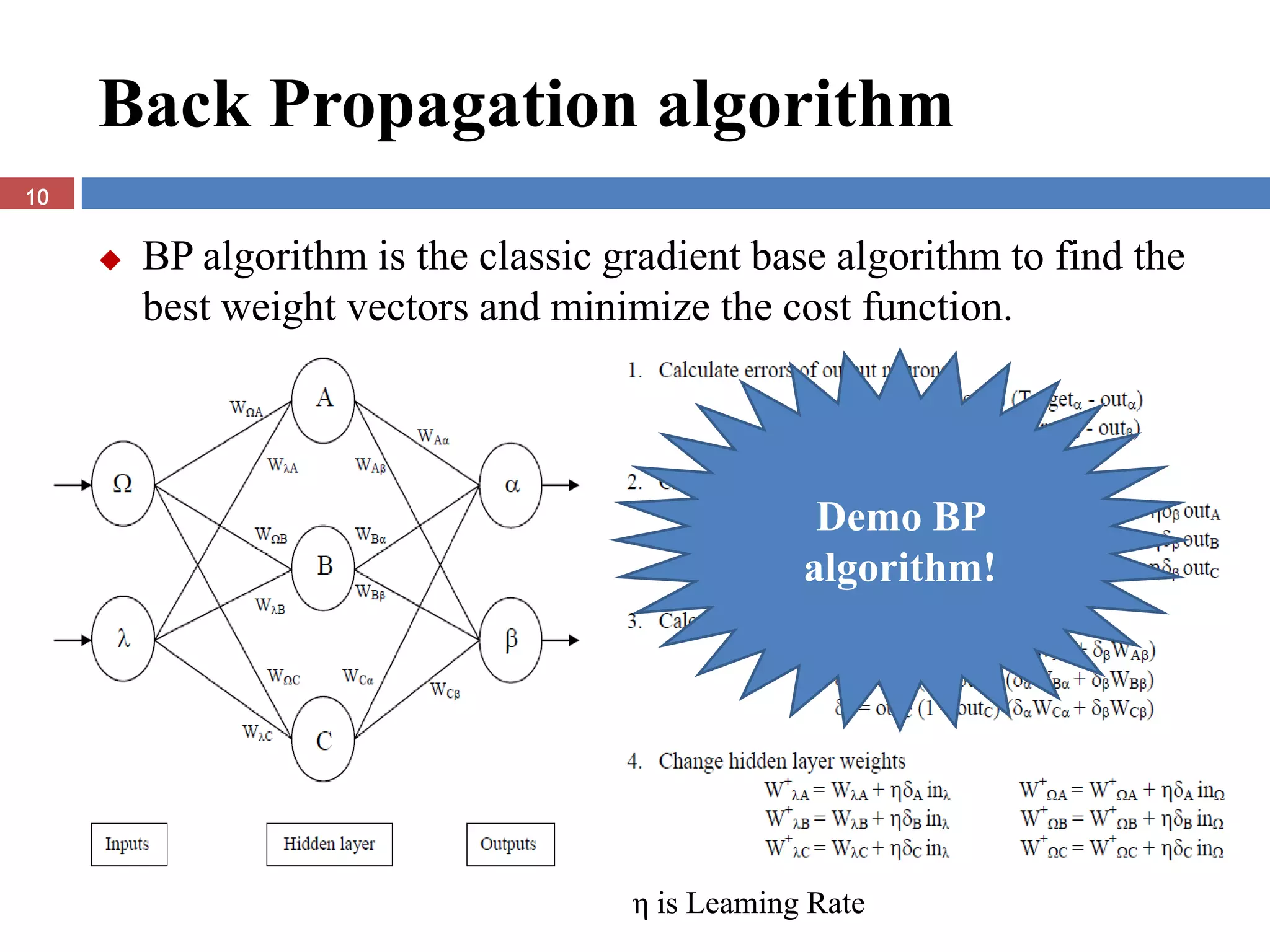

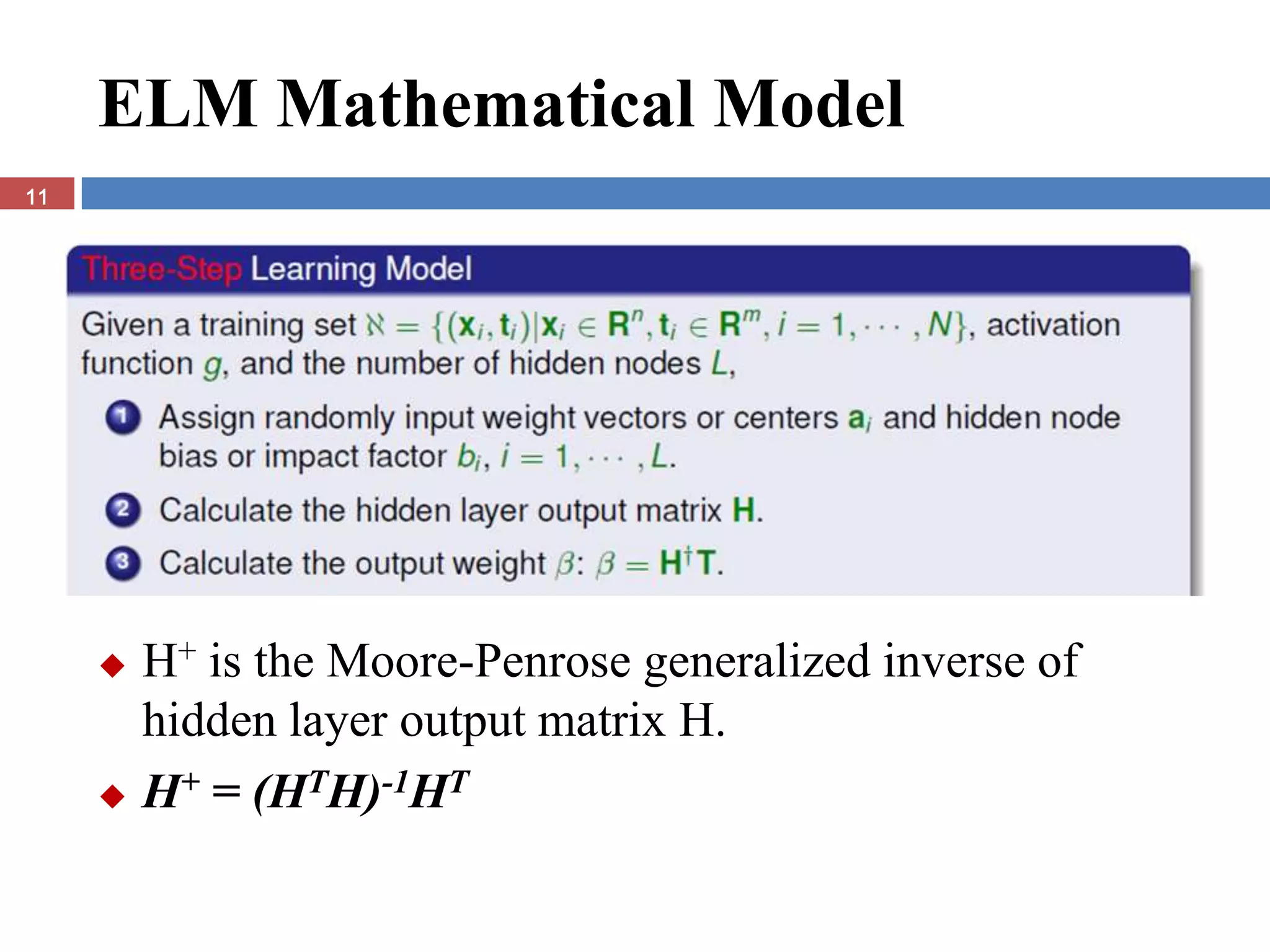

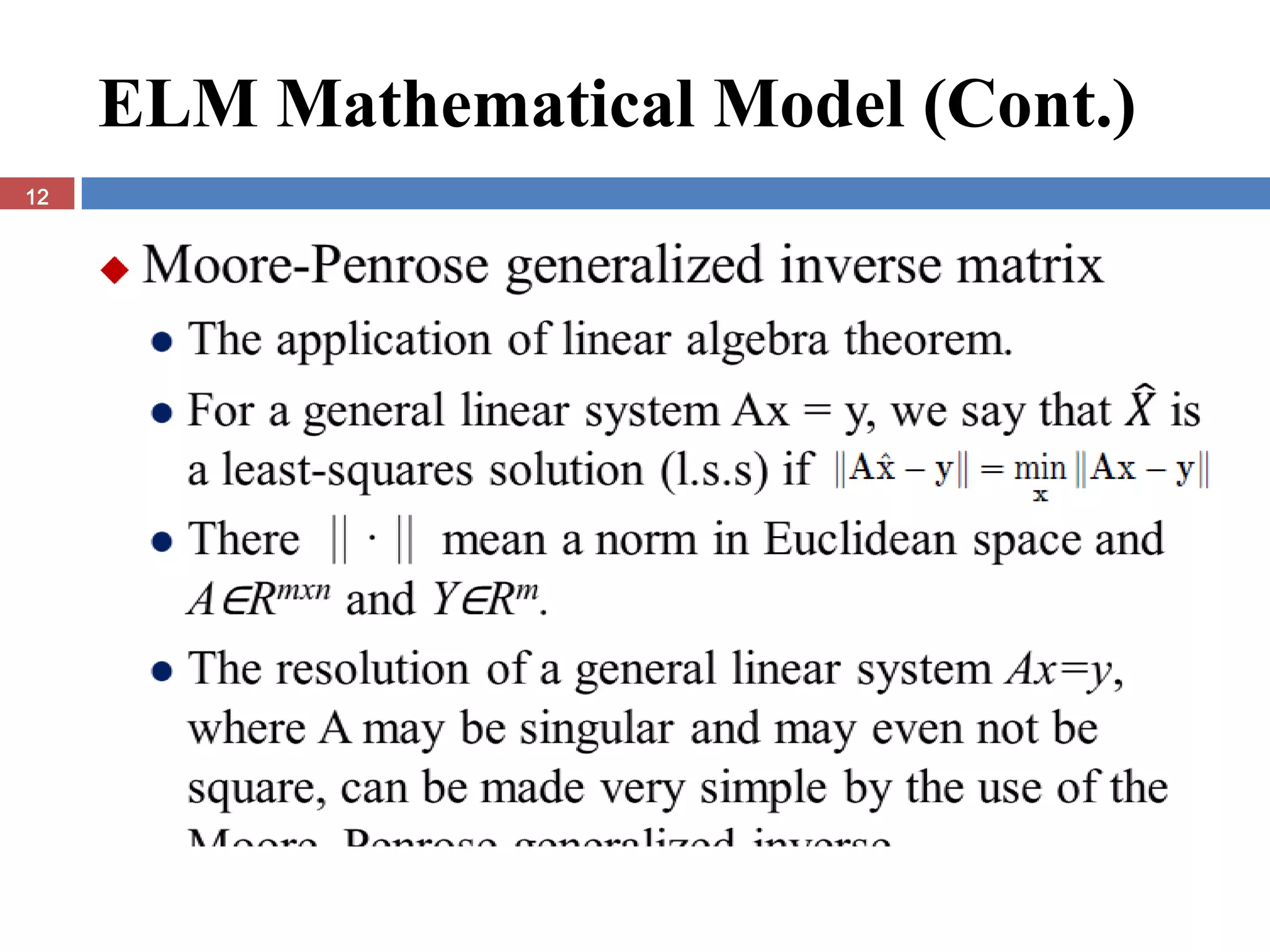

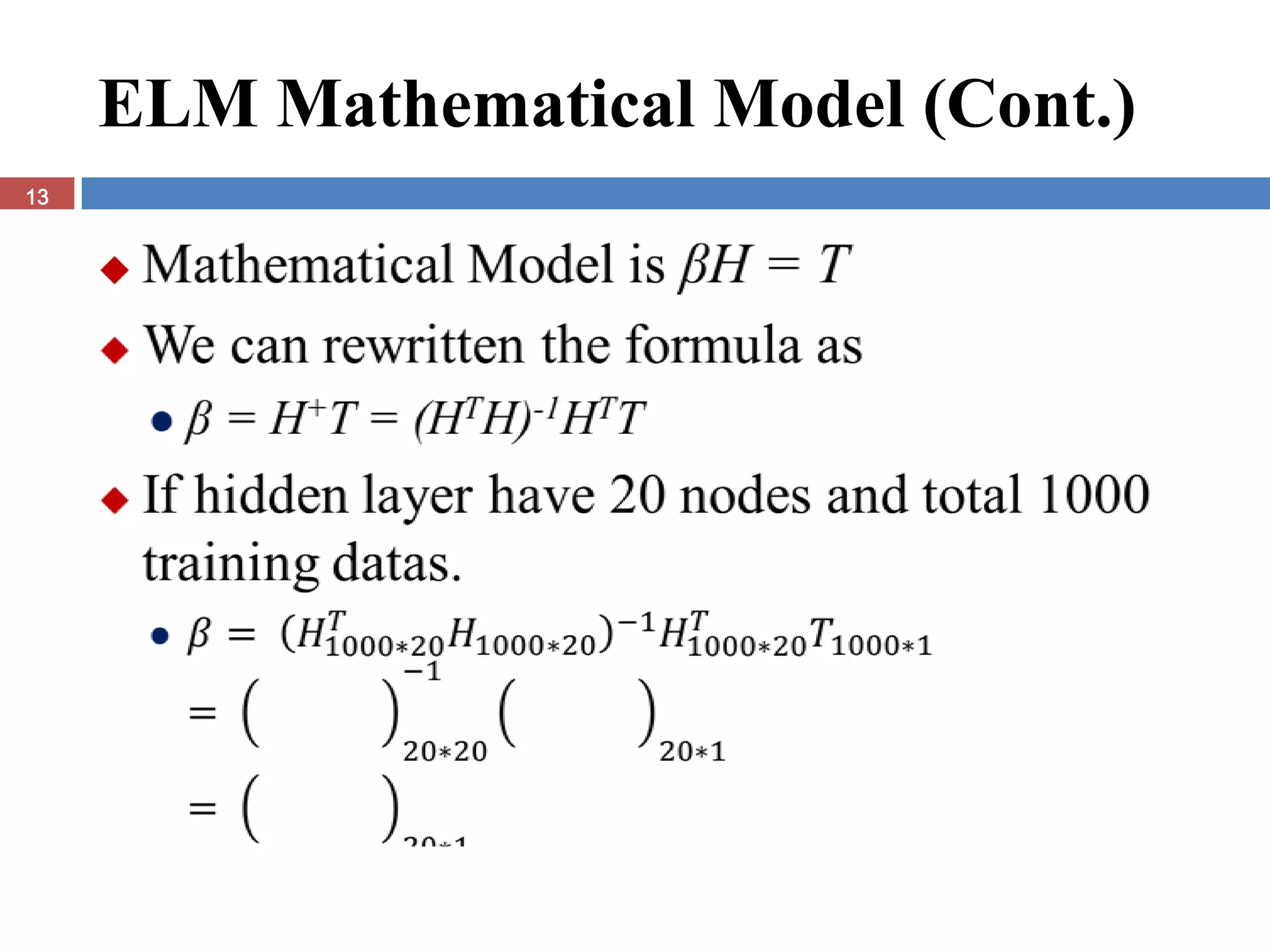

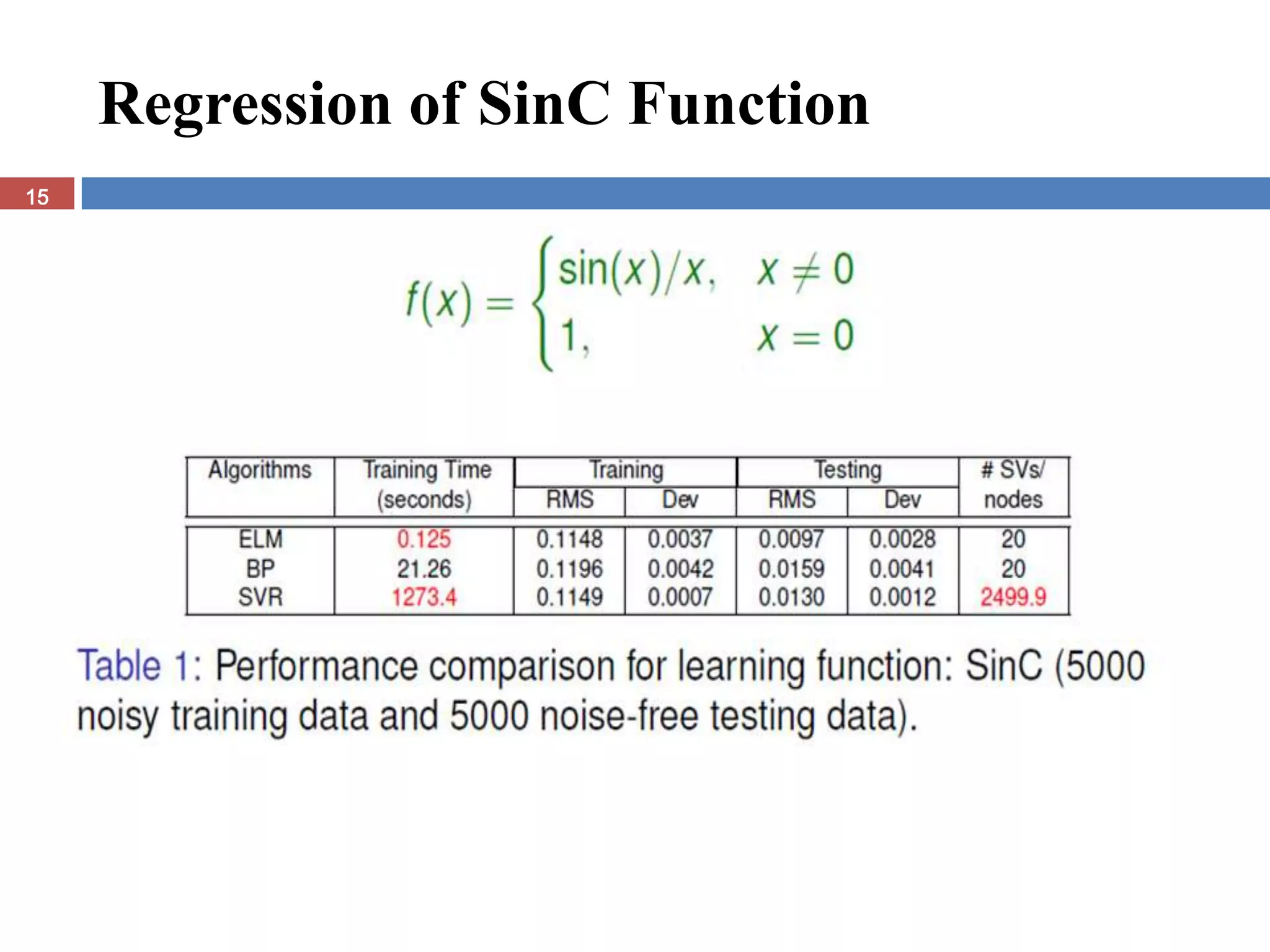

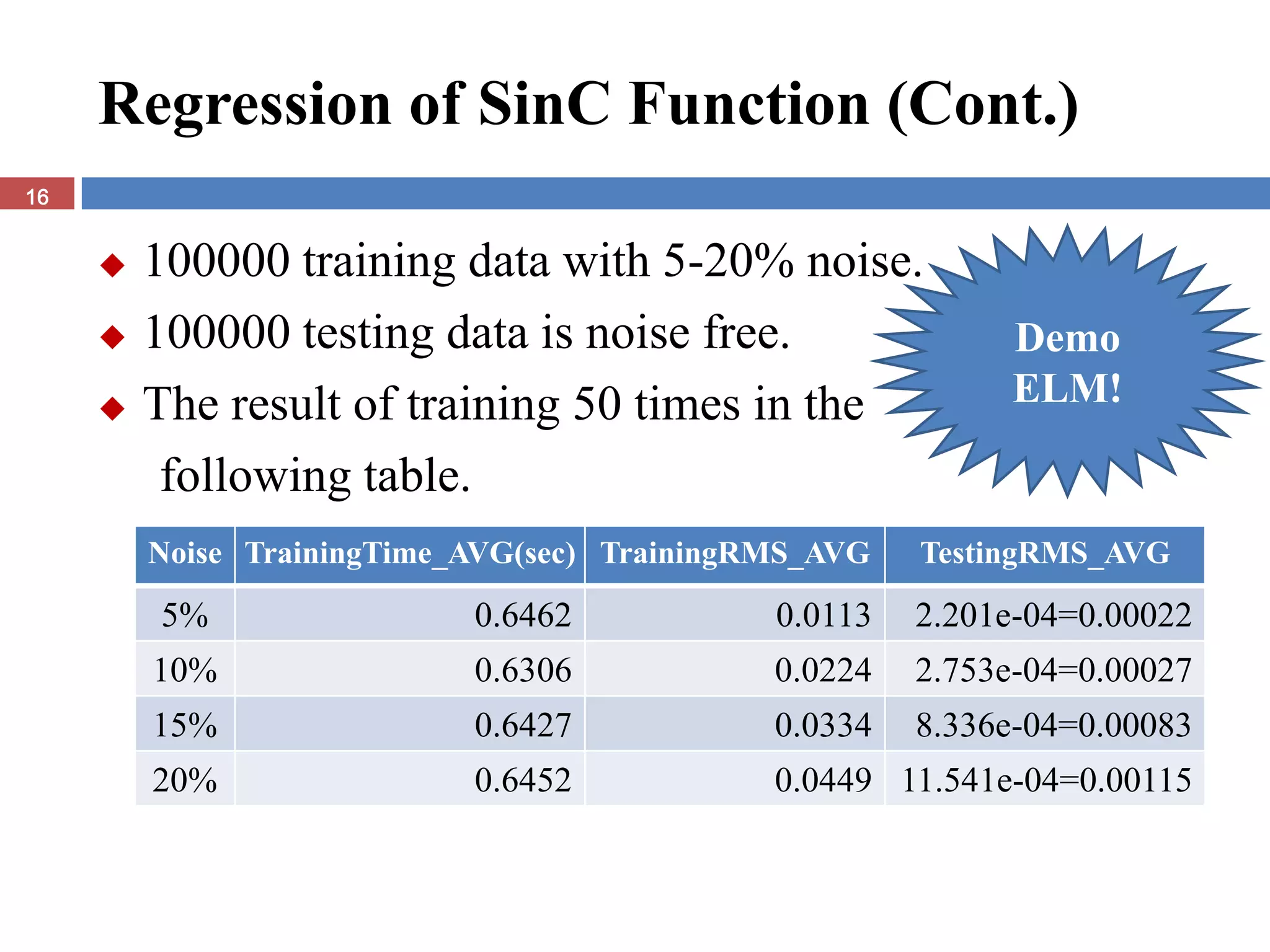

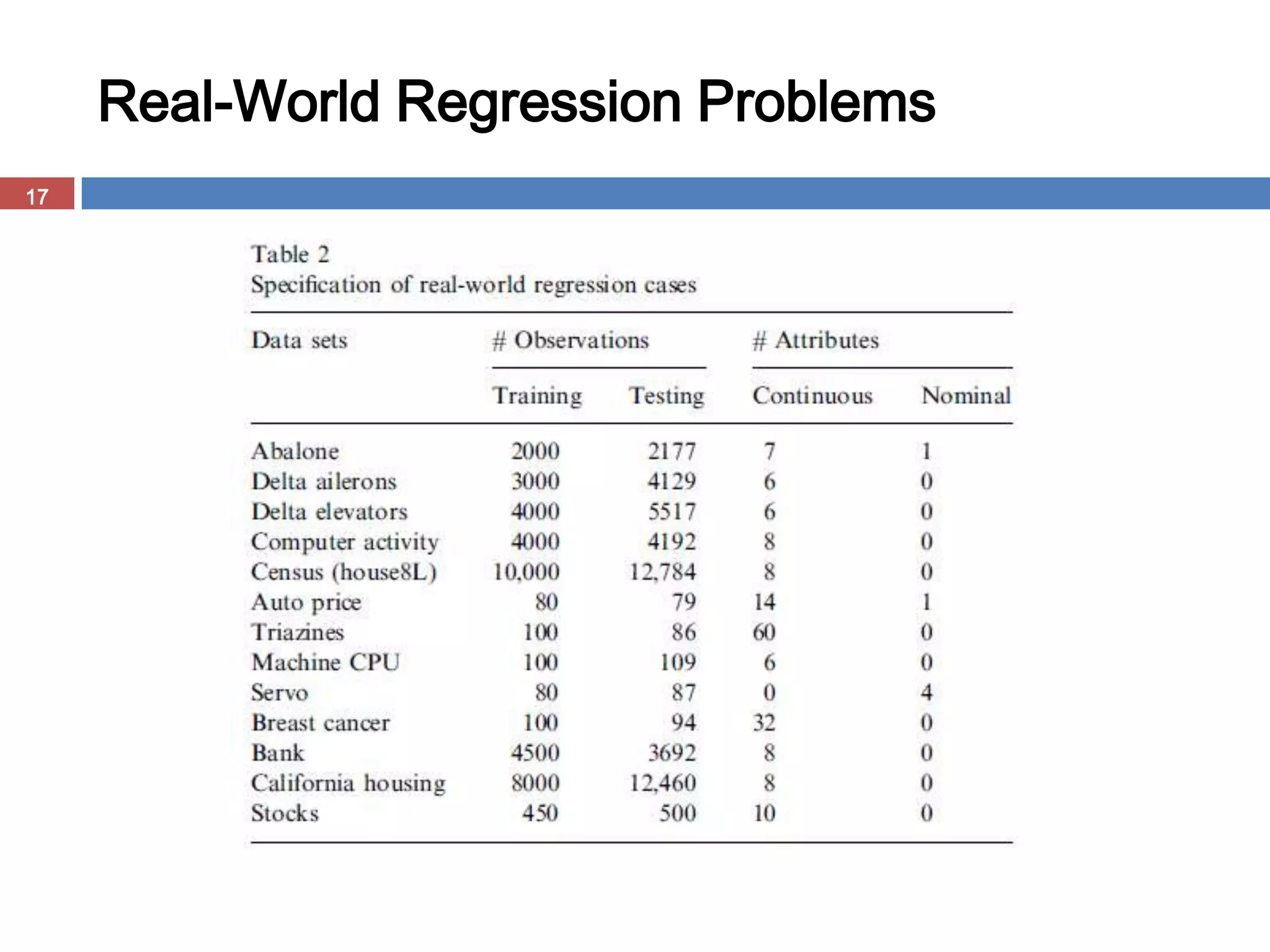

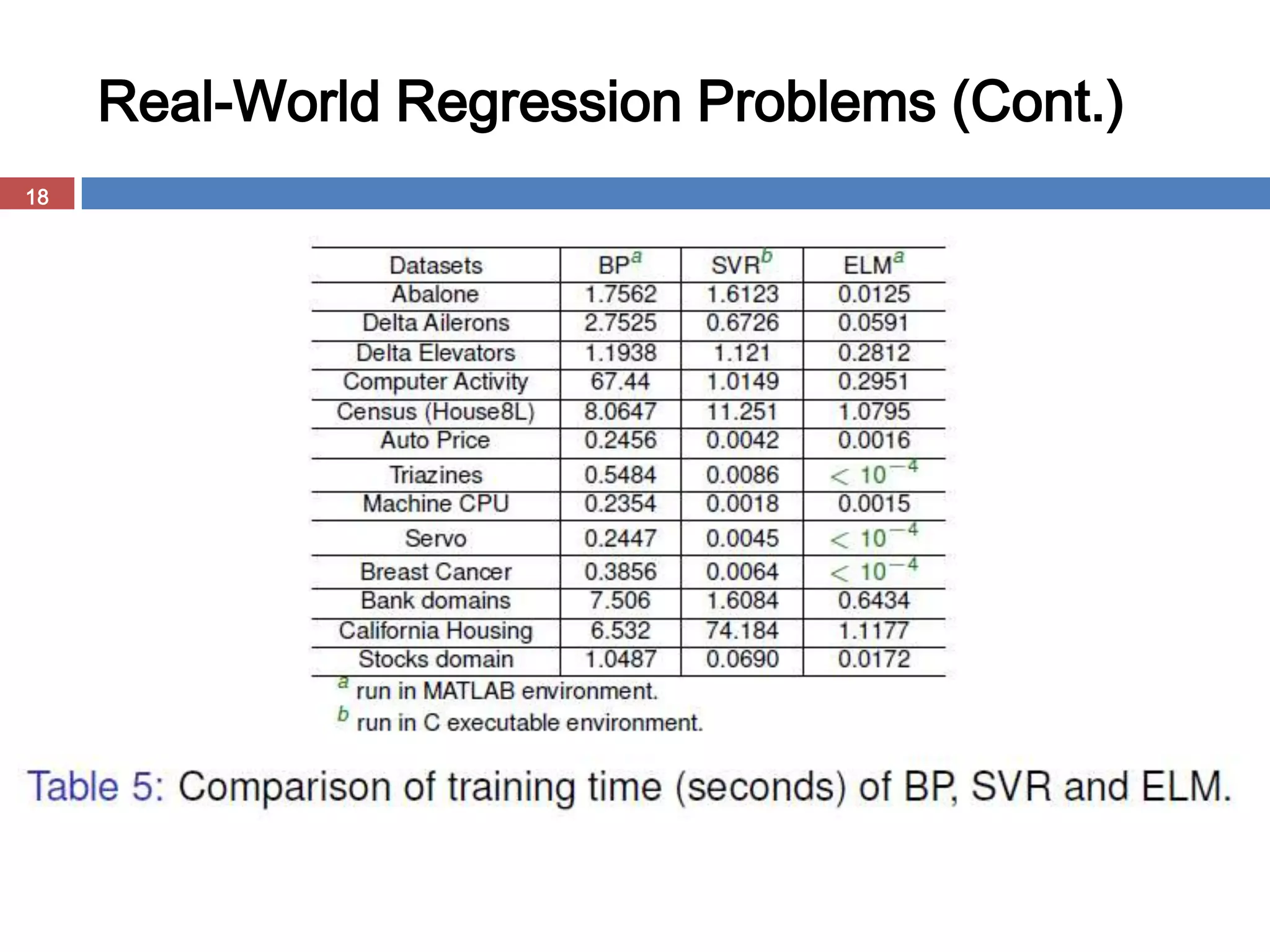

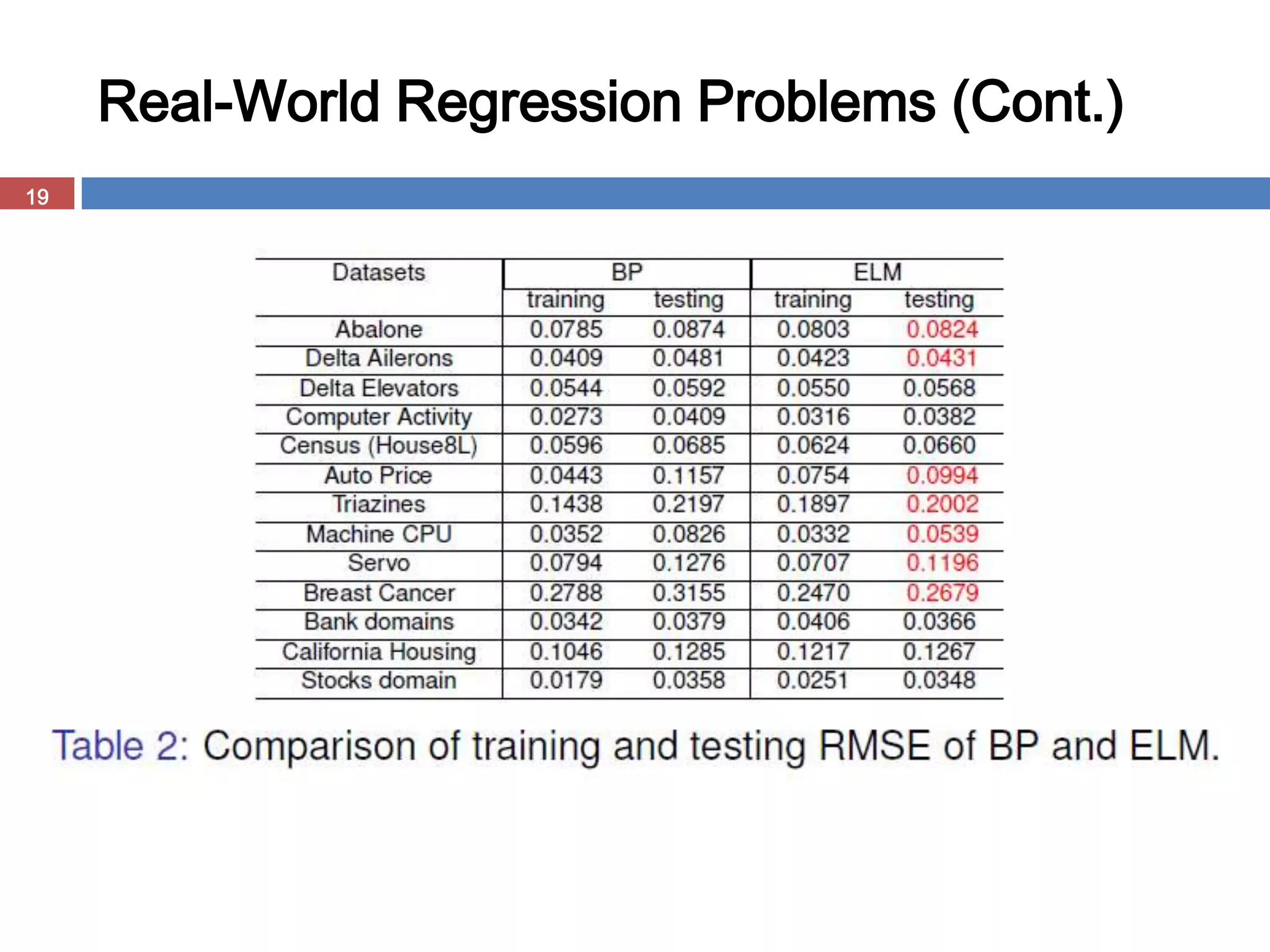

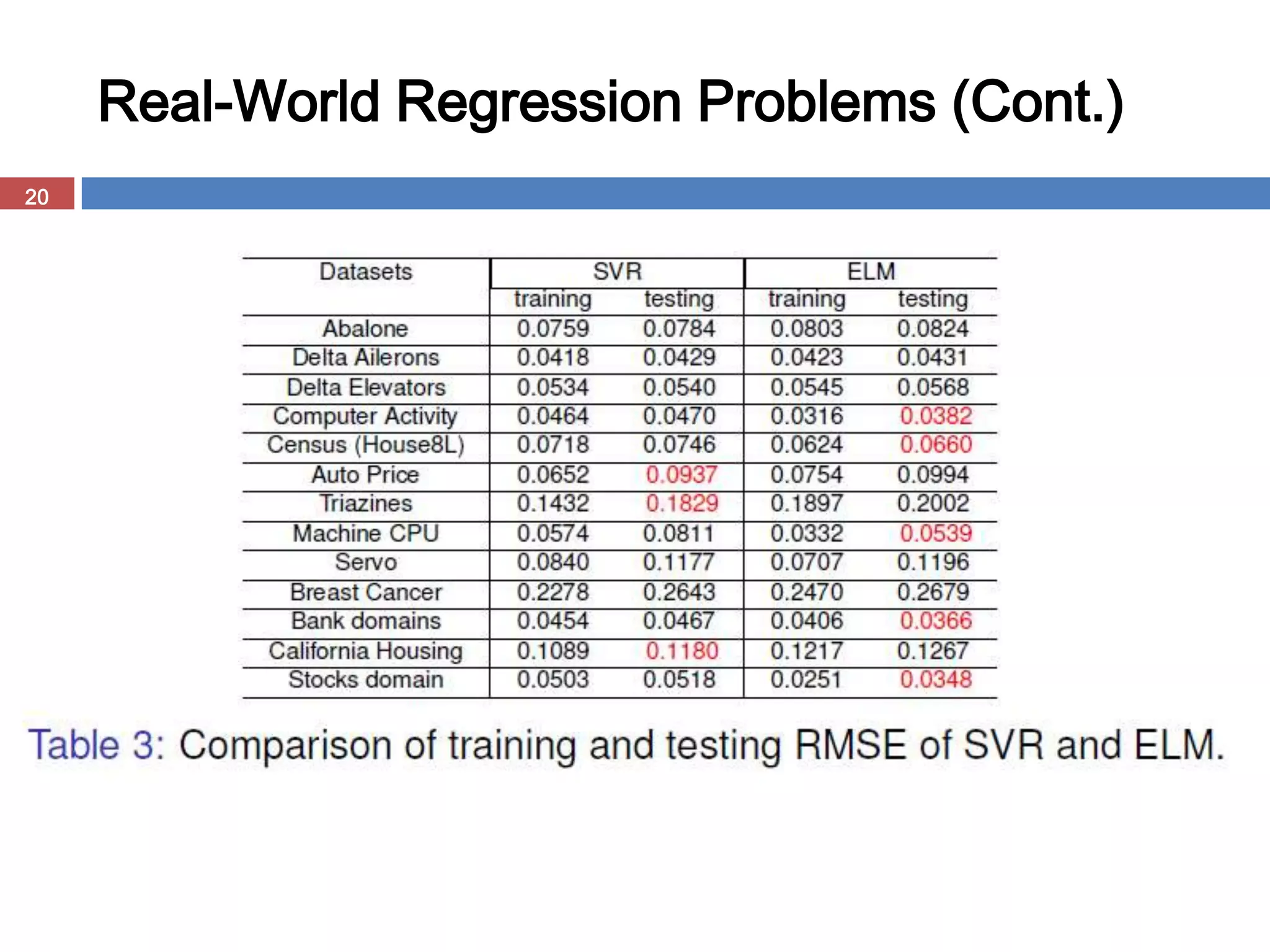

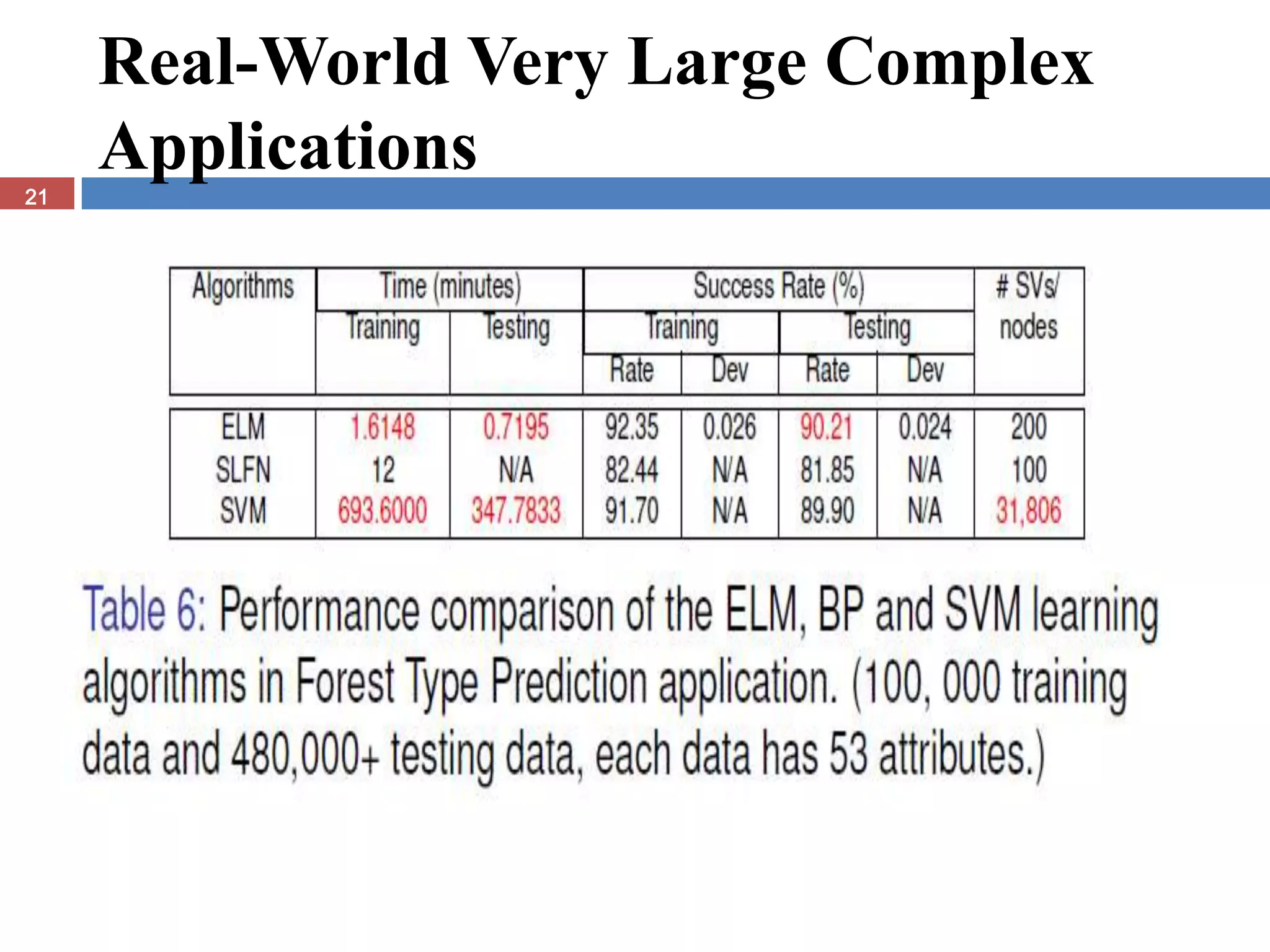

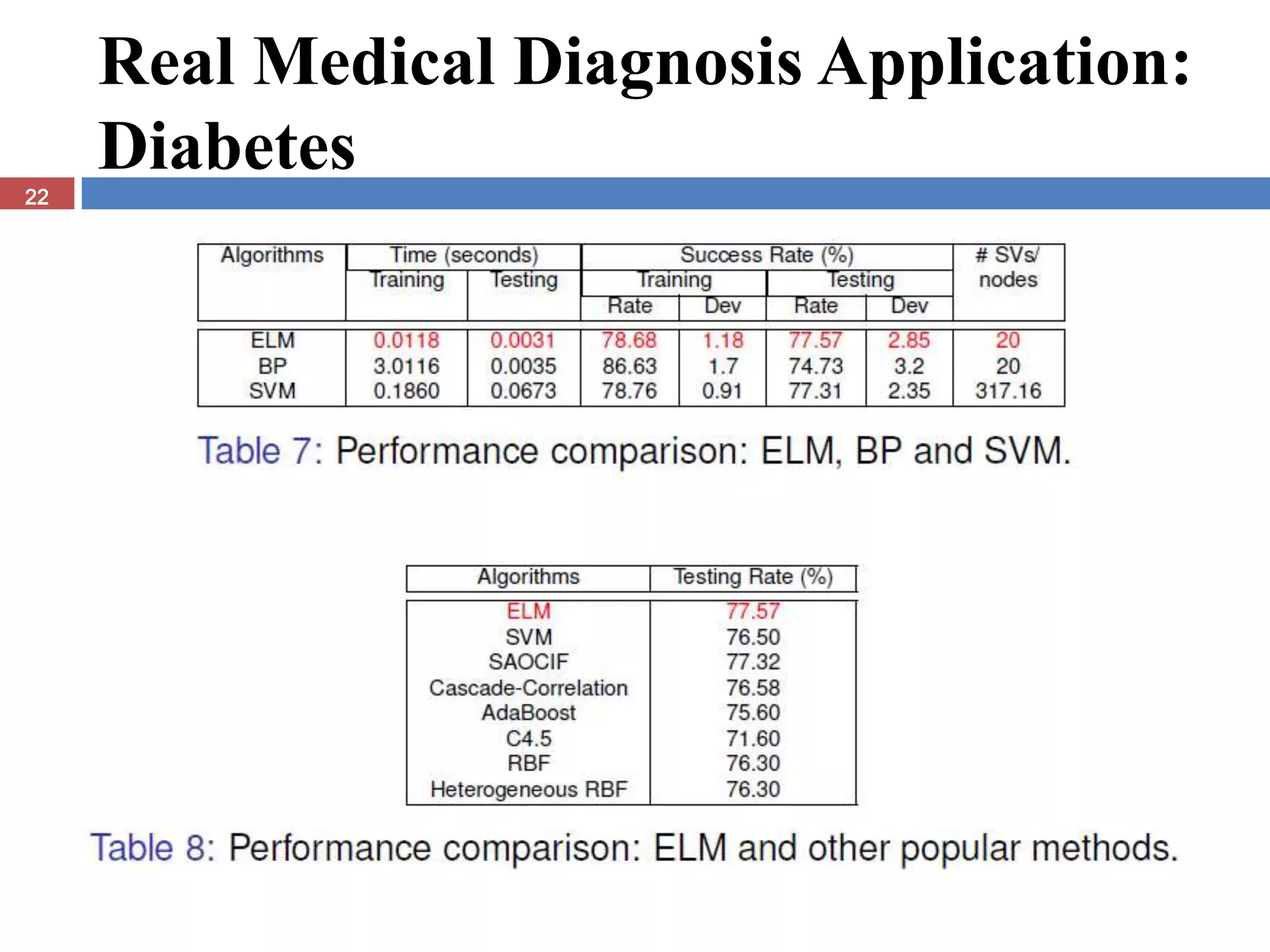

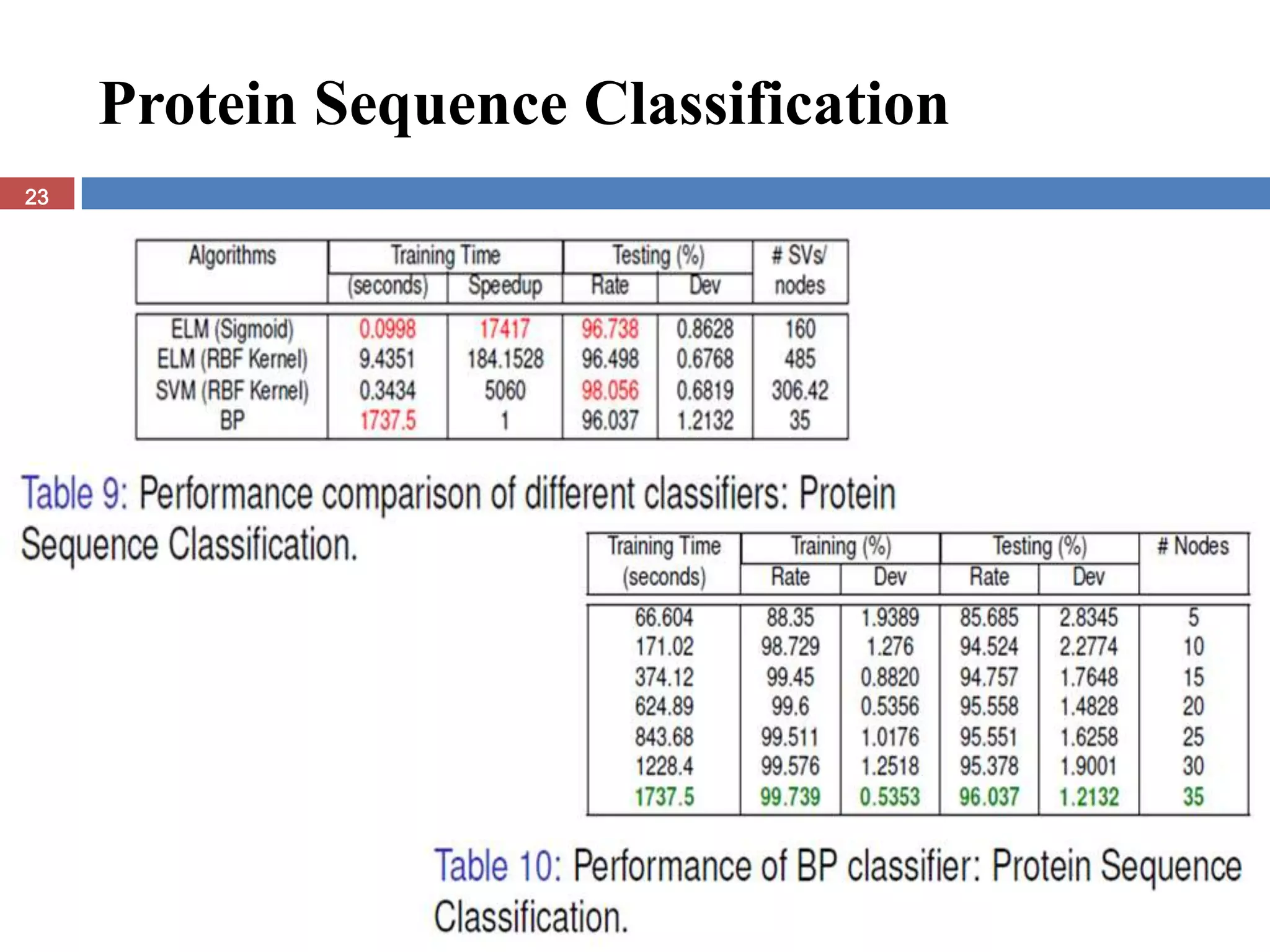

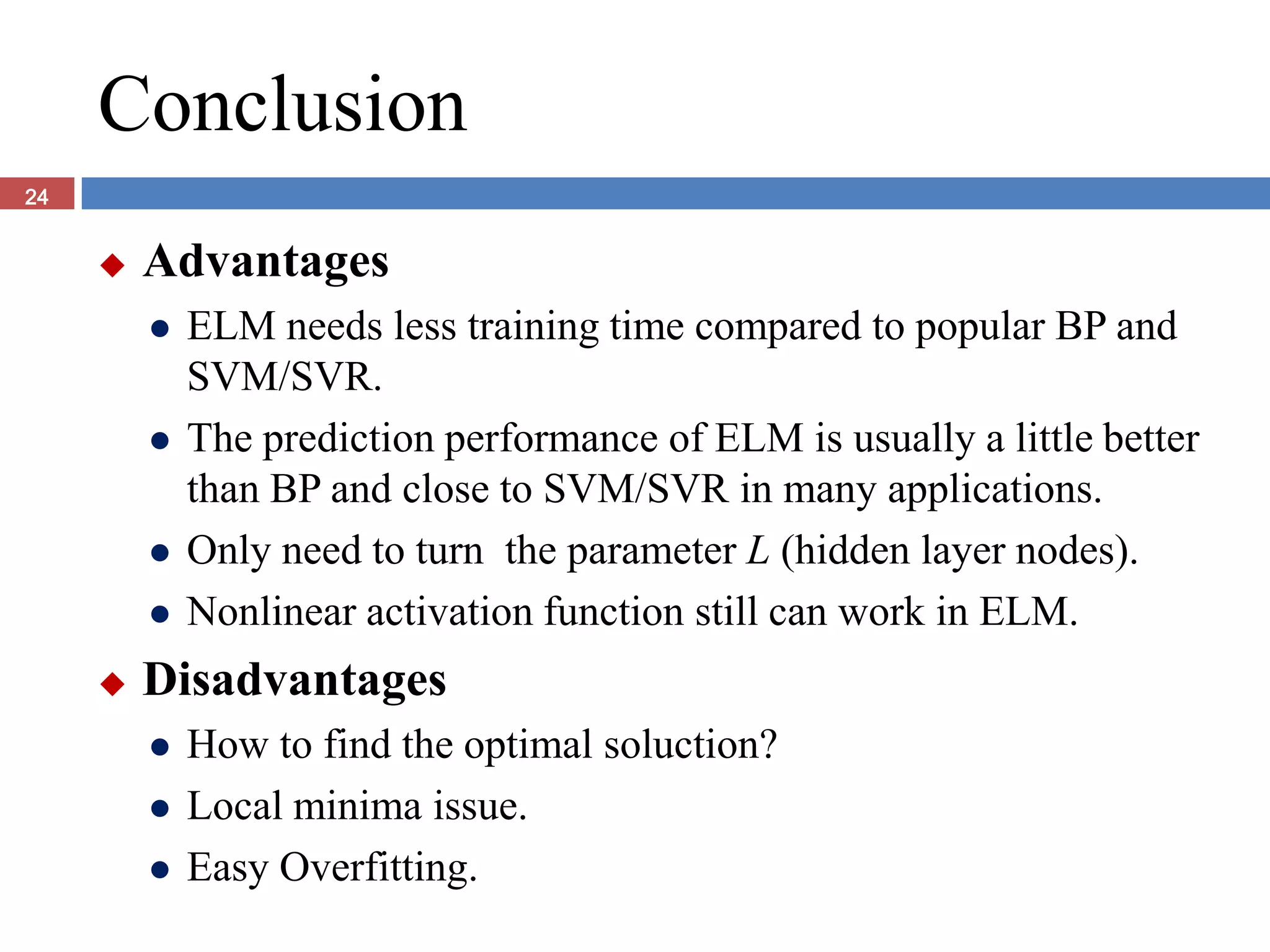

This paper presents the theory and algorithm of the extreme learning machine (ELM), a learning algorithm for single-hidden-layer feedforward neural networks (SLFNs). Unlike traditional gradient-based algorithms, which tune all network parameters iteratively, ELM assigns the input weights and hidden biases randomly and leaves them fixed. It then computes the output weights analytically, using the Moore-Penrose generalized inverse of the hidden-layer output matrix. Because training involves no iterative weight updates, it is faster than backpropagation. The paper evaluates ELM on regression and classification tasks and finds that it achieves comparable or better performance than other algorithms while requiring less training time.
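The training procedure summarized above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's reference implementation: the function names `elm_fit` and `elm_predict`, the sigmoid activation, the uniform sampling range of [-1, 1], the 20 hidden nodes, and the toy sine-regression data are all choices made here for the example.

```python
import numpy as np

def elm_fit(X, T, n_hidden, rng):
    """Fit a single-hidden-layer network the ELM way (illustrative sketch)."""
    # Assumption: input weights and biases drawn uniformly from [-1, 1].
    # They are sampled once and never updated afterwards.
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    # Hidden-layer output matrix H (assumed sigmoid activation).
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    # Output weights: minimum-norm least-squares solution of H @ beta = T,
    # obtained with the Moore-Penrose pseudoinverse of H.
    beta = np.linalg.pinv(H) @ T
    return W, b, beta

def elm_predict(X, W, b, beta):
    """Apply the fixed random hidden layer, then the learned output weights."""
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    return H @ beta

# Toy regression problem (hypothetical data): approximate sin(x) on [-3, 3].
rng = np.random.default_rng(0)
X = np.linspace(-3.0, 3.0, 200).reshape(-1, 1)
T = np.sin(X)

W, b, beta = elm_fit(X, T, n_hidden=20, rng=rng)
pred = elm_predict(X, W, b, beta)
rmse = float(np.sqrt(np.mean((pred - T) ** 2)))
print(f"training RMSE: {rmse:.6f}")
```

The only learned quantity is `beta`, and it comes from a single linear solve rather than from repeated gradient steps, which is where the training-time advantage over backpropagation comes from in this sketch.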