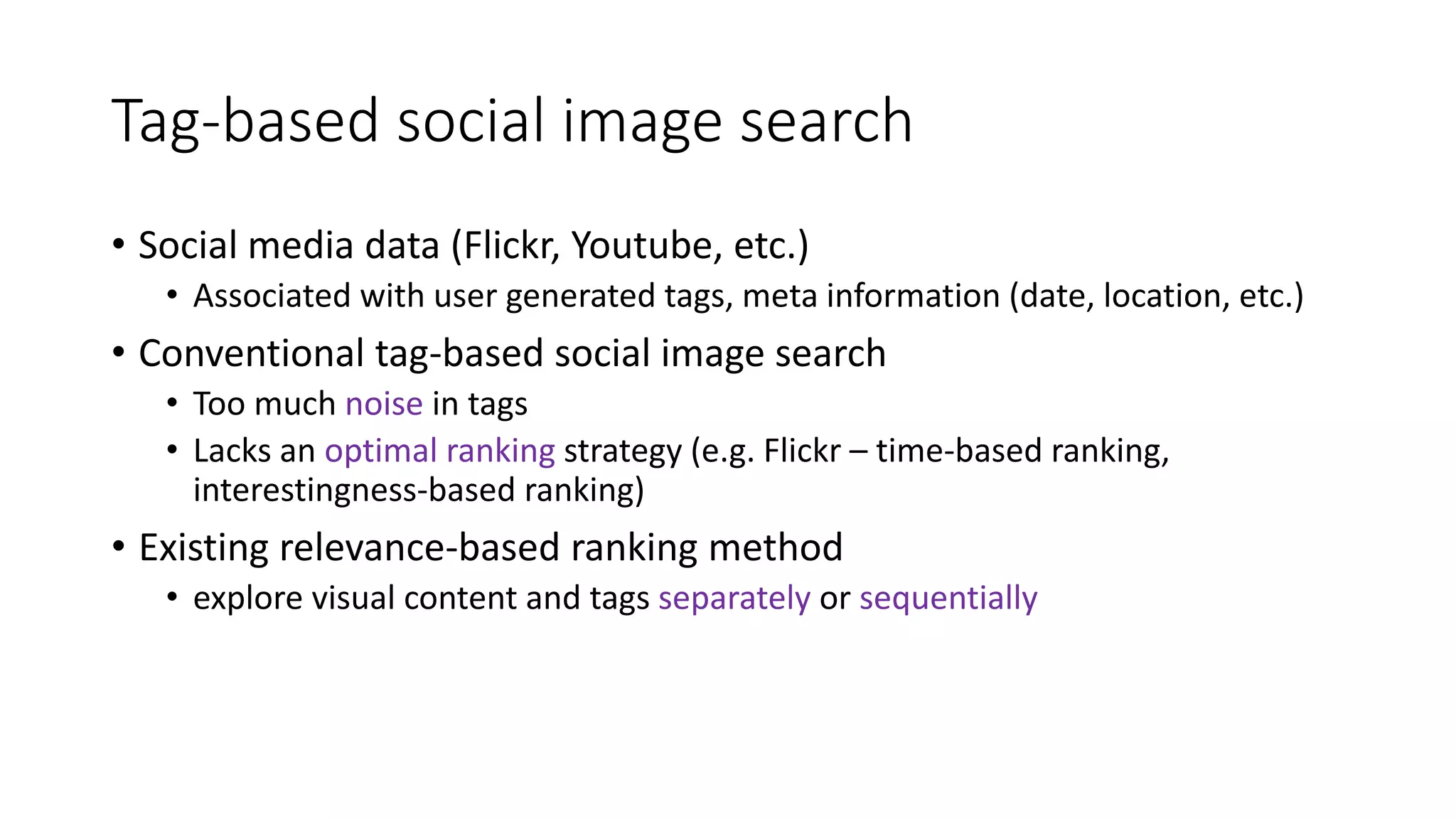

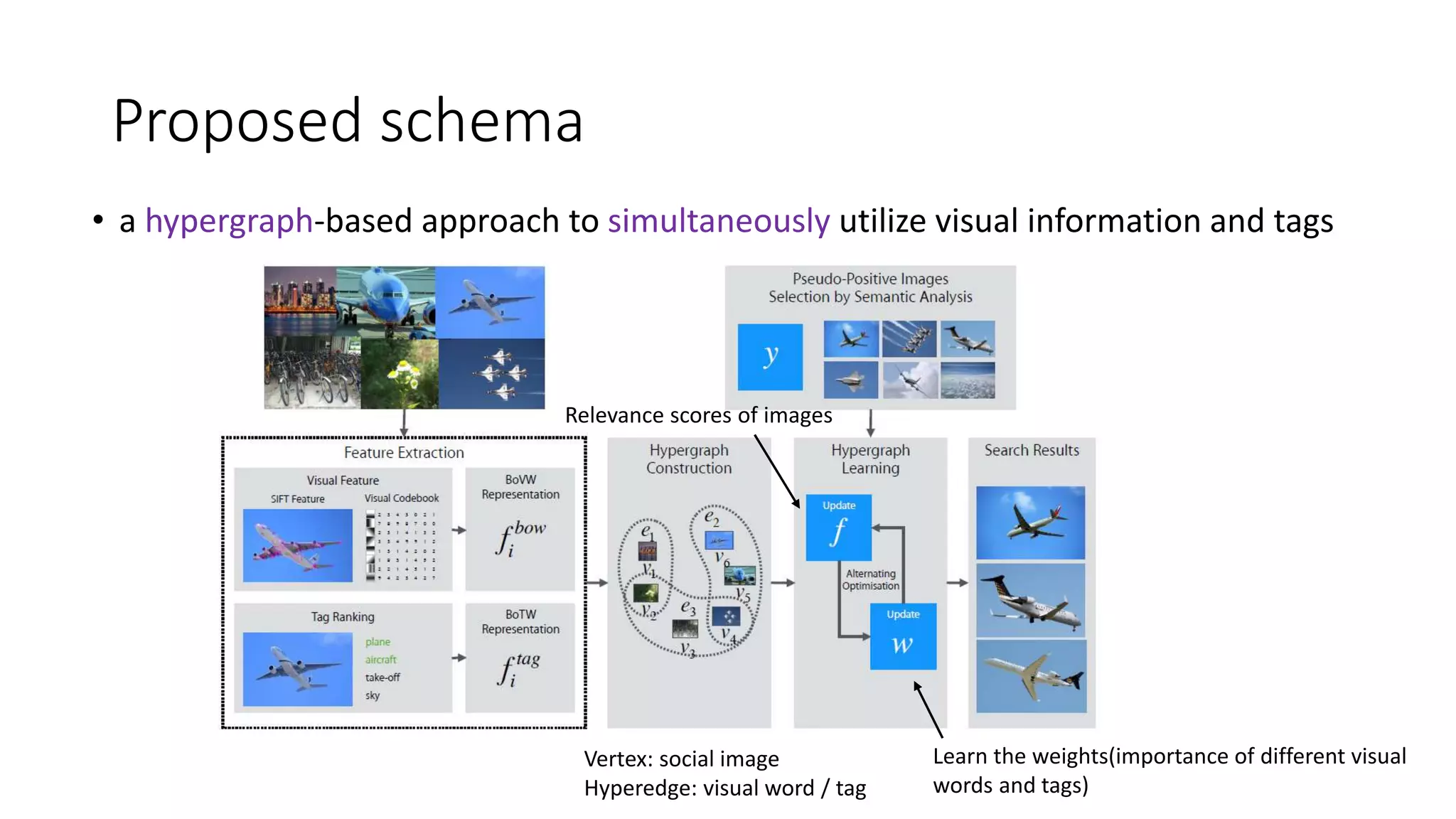

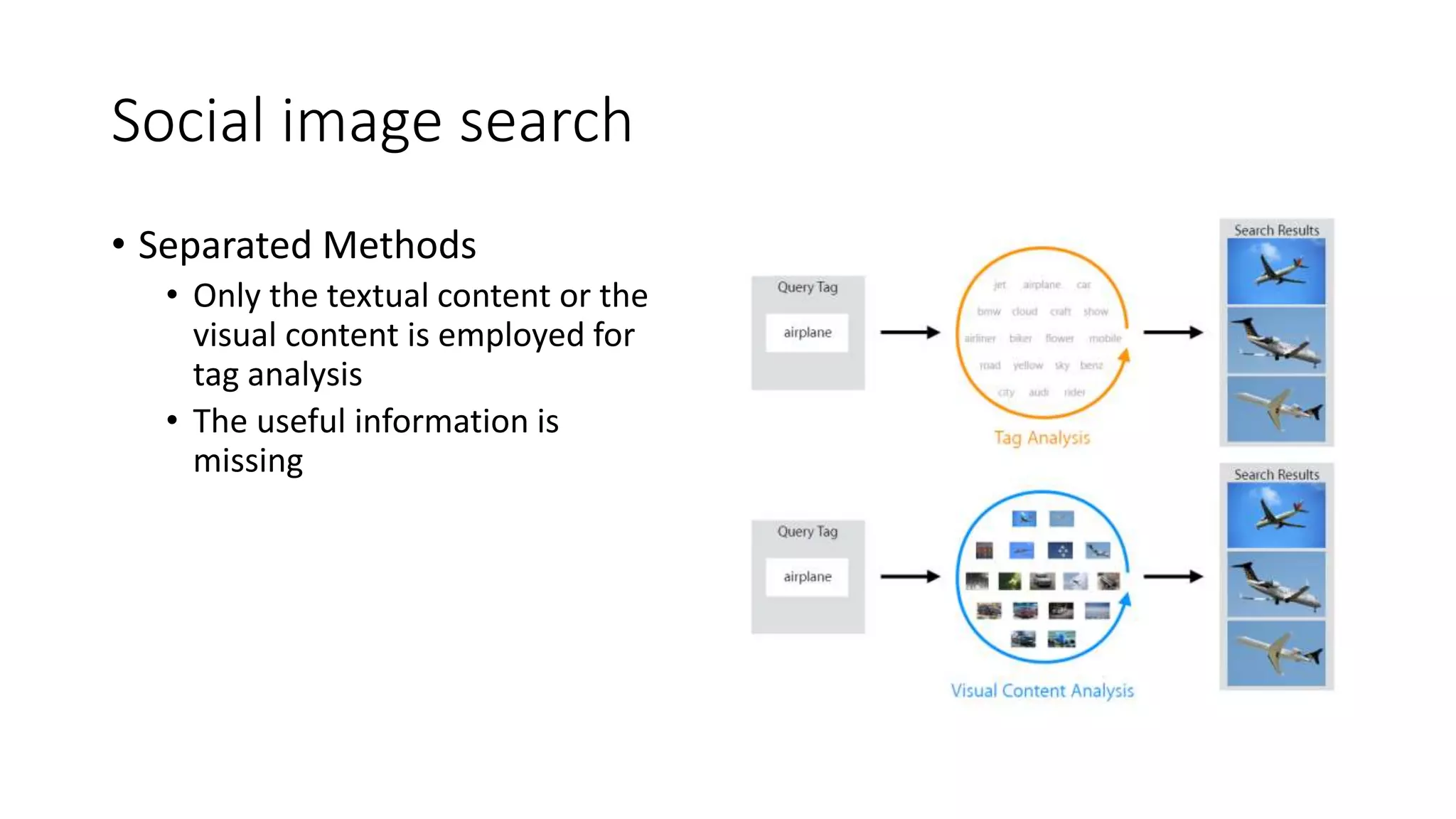

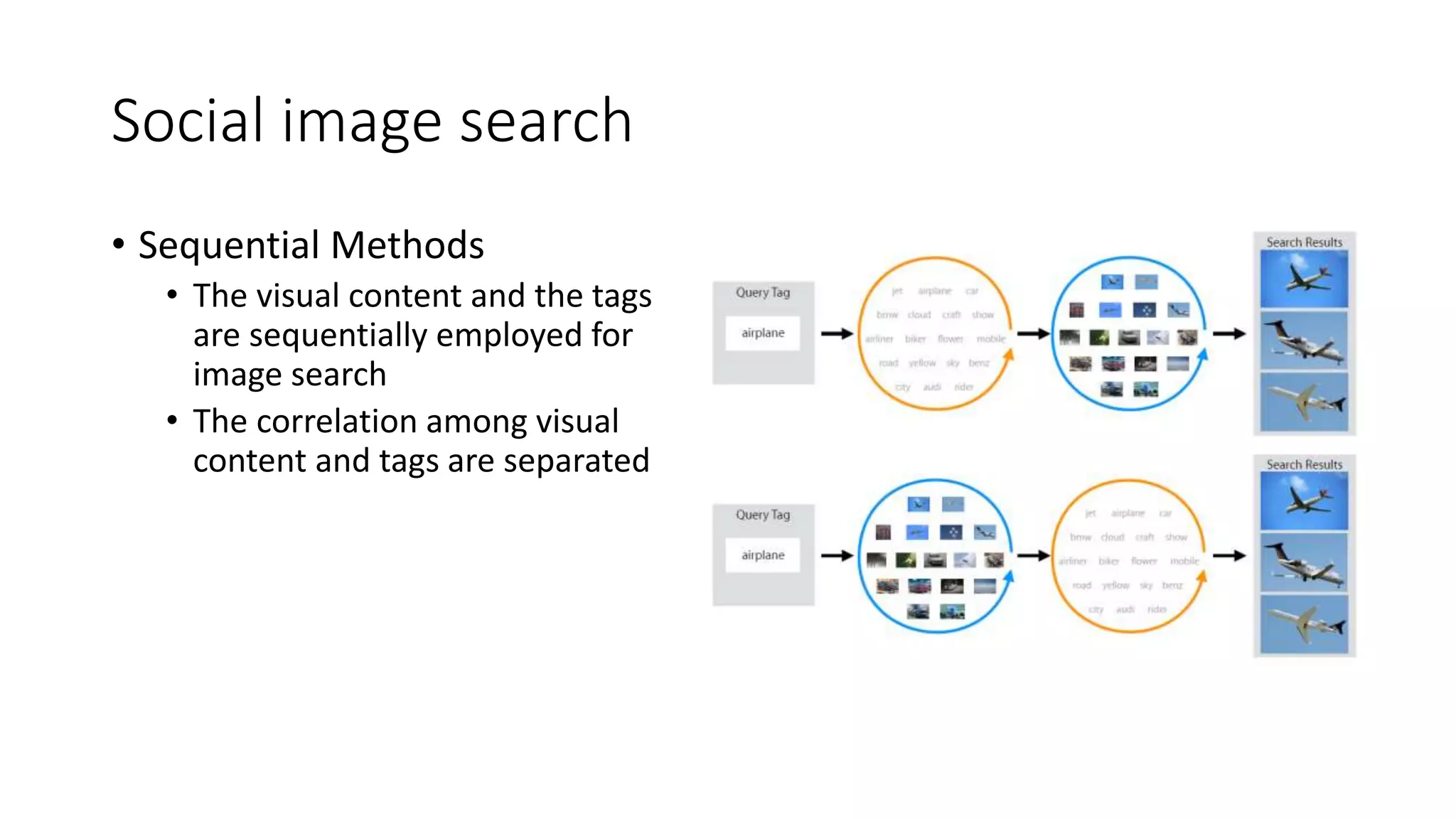

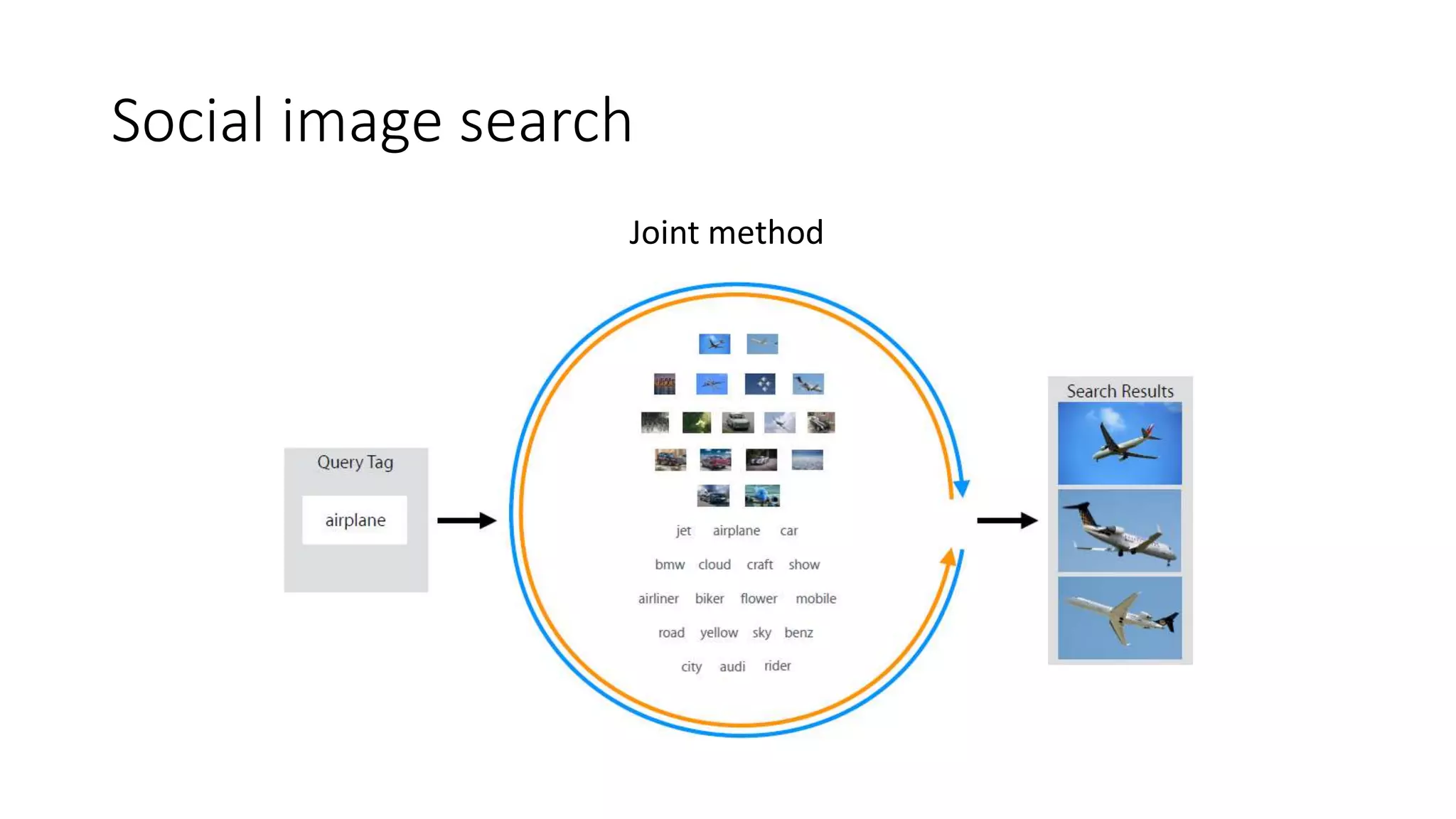

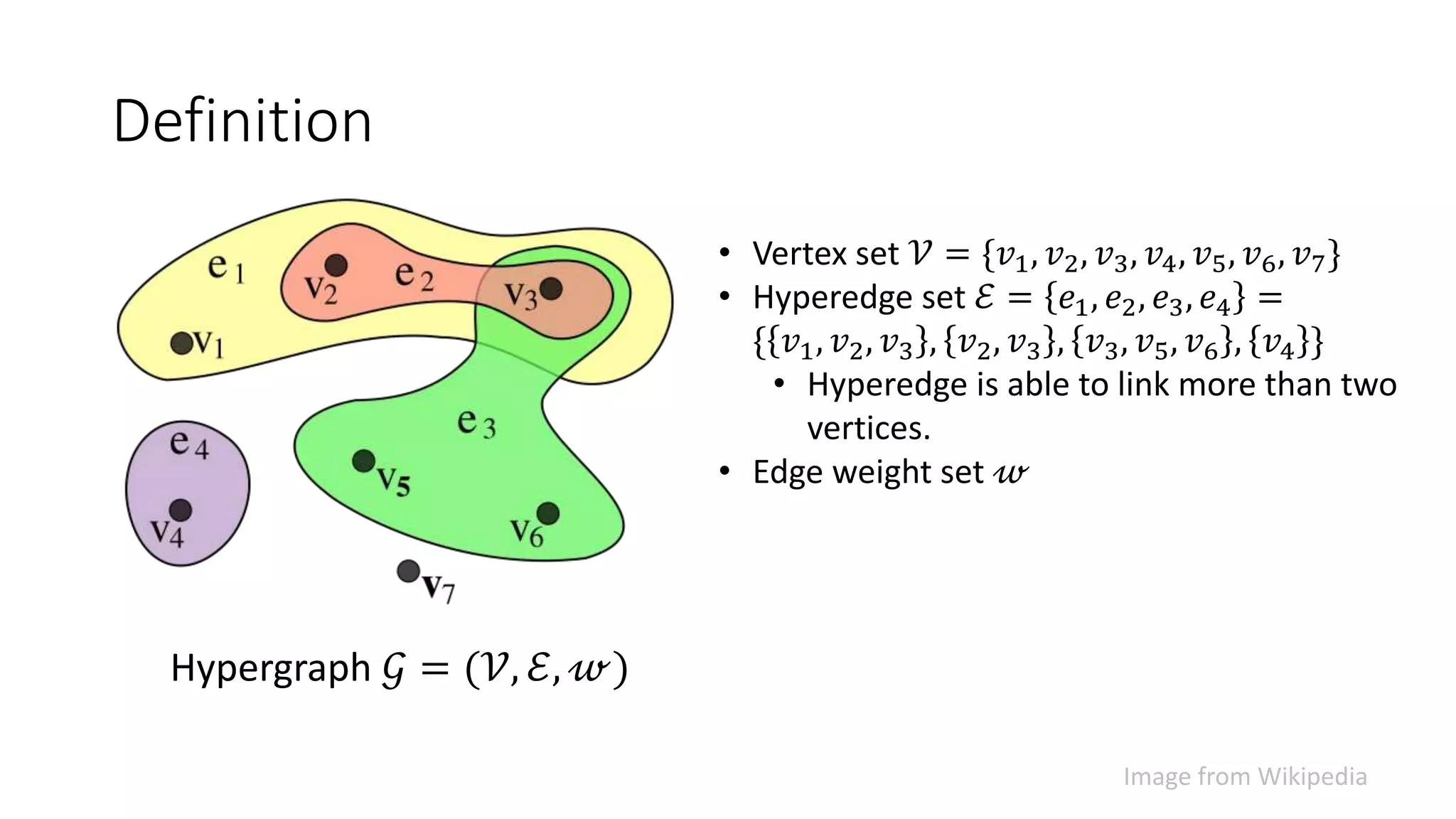

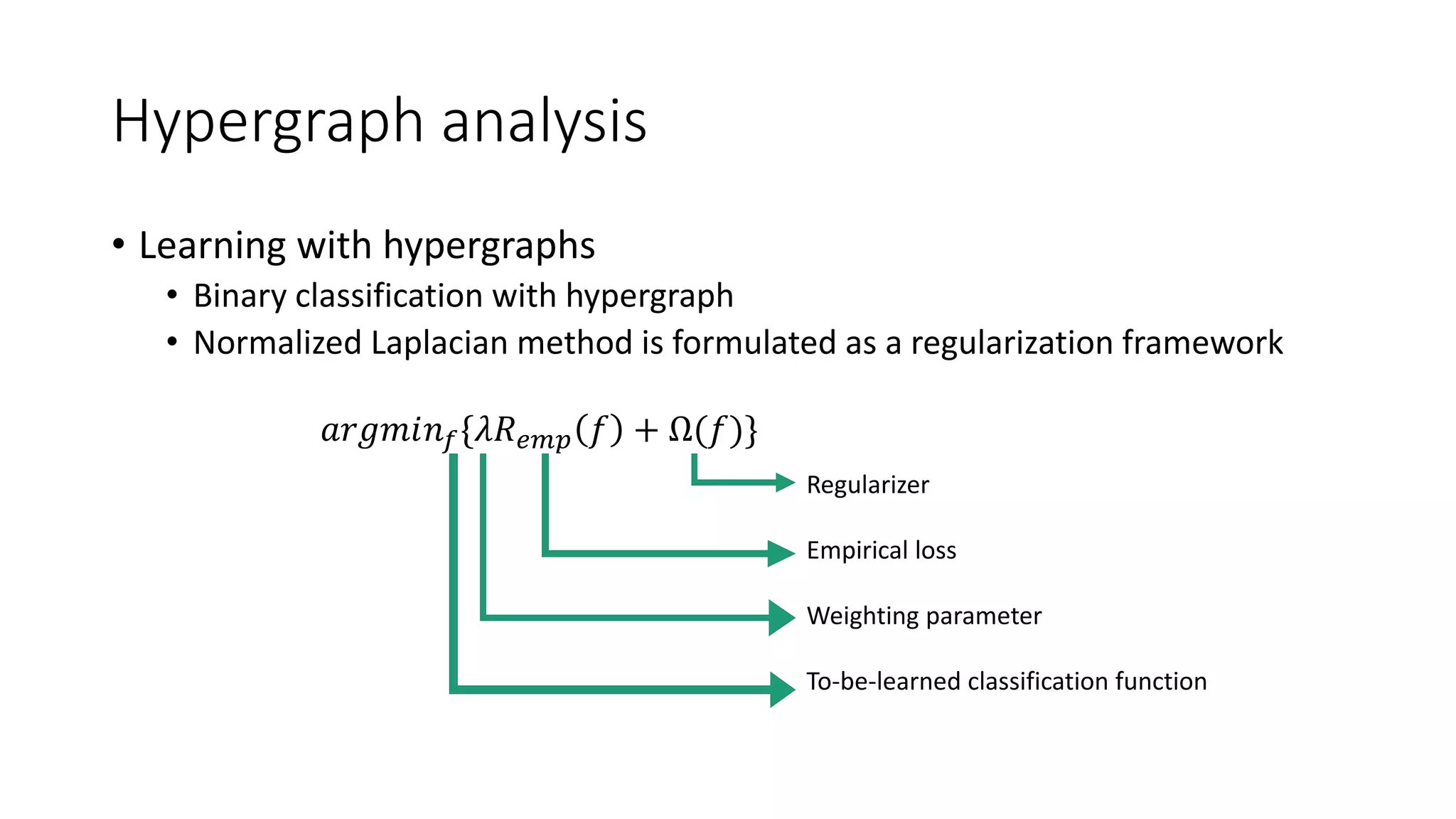

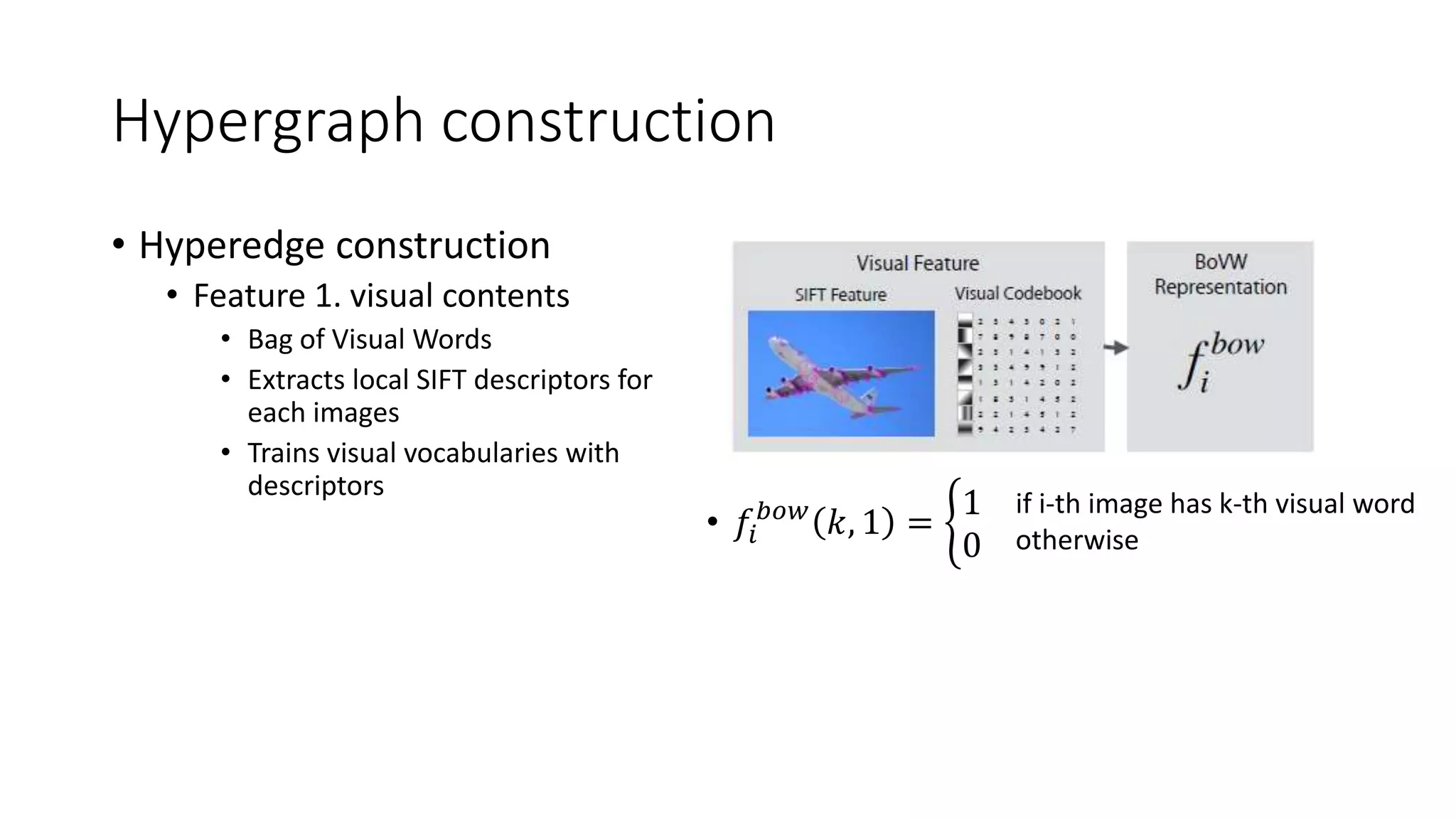

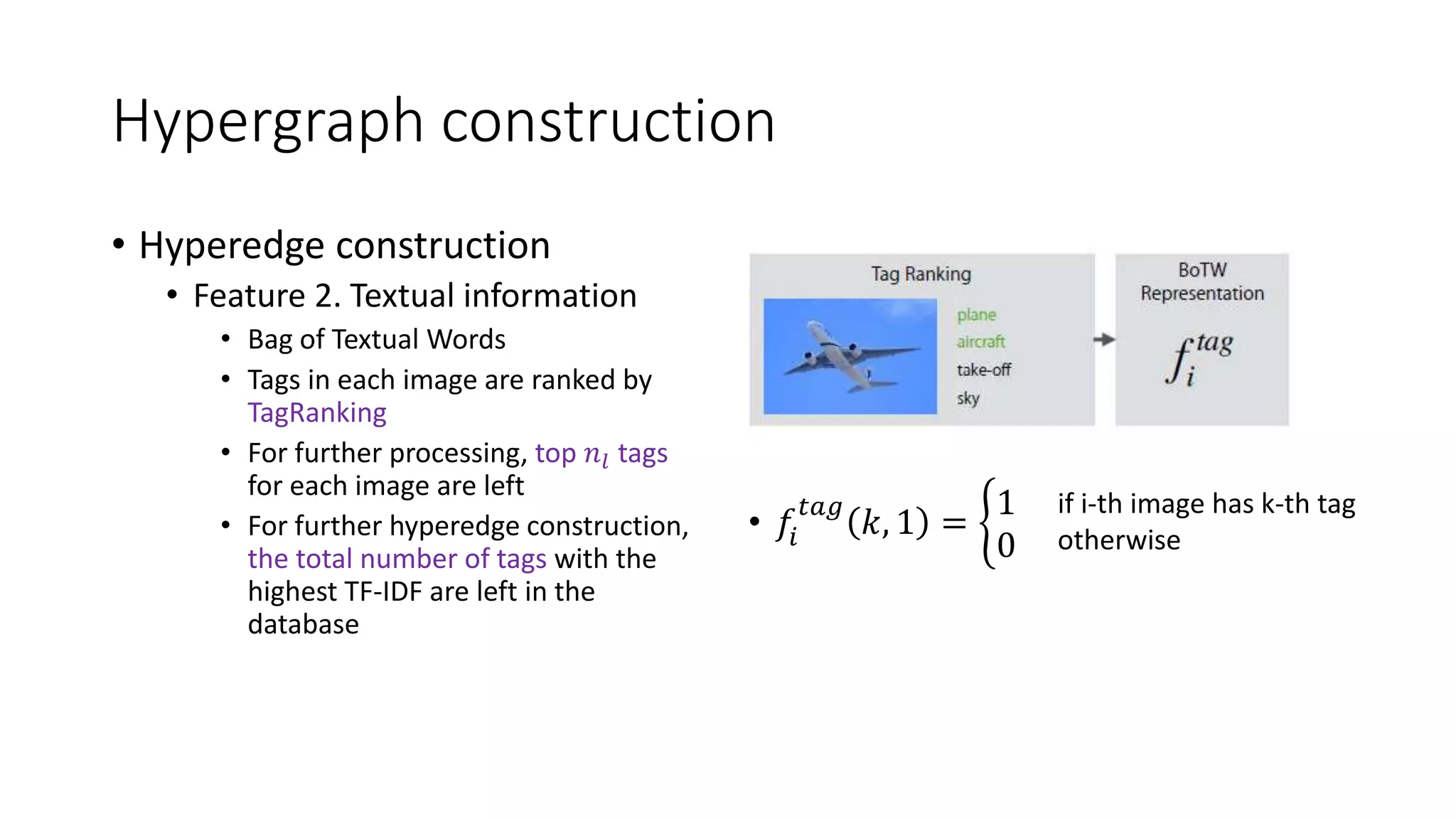

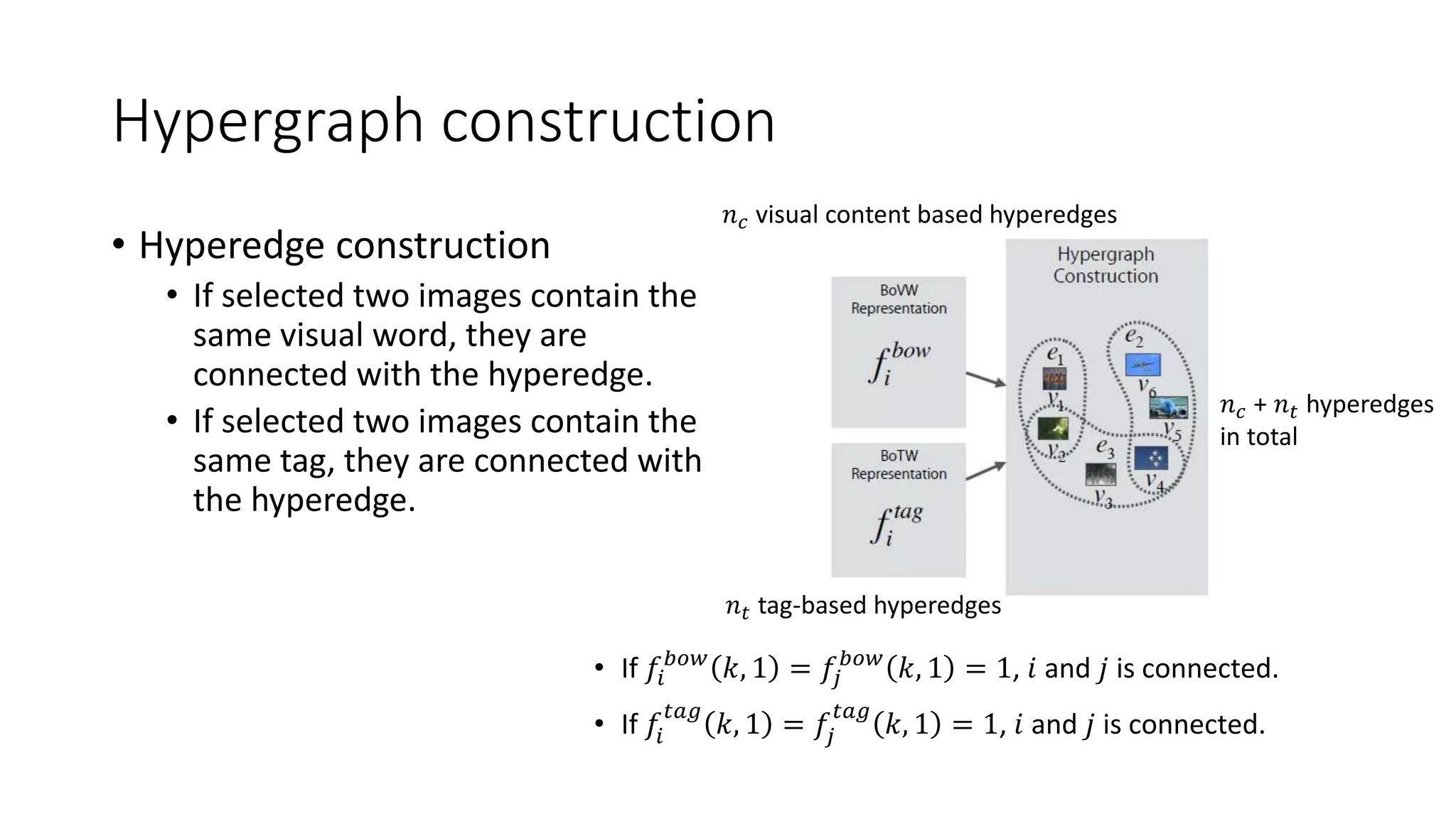

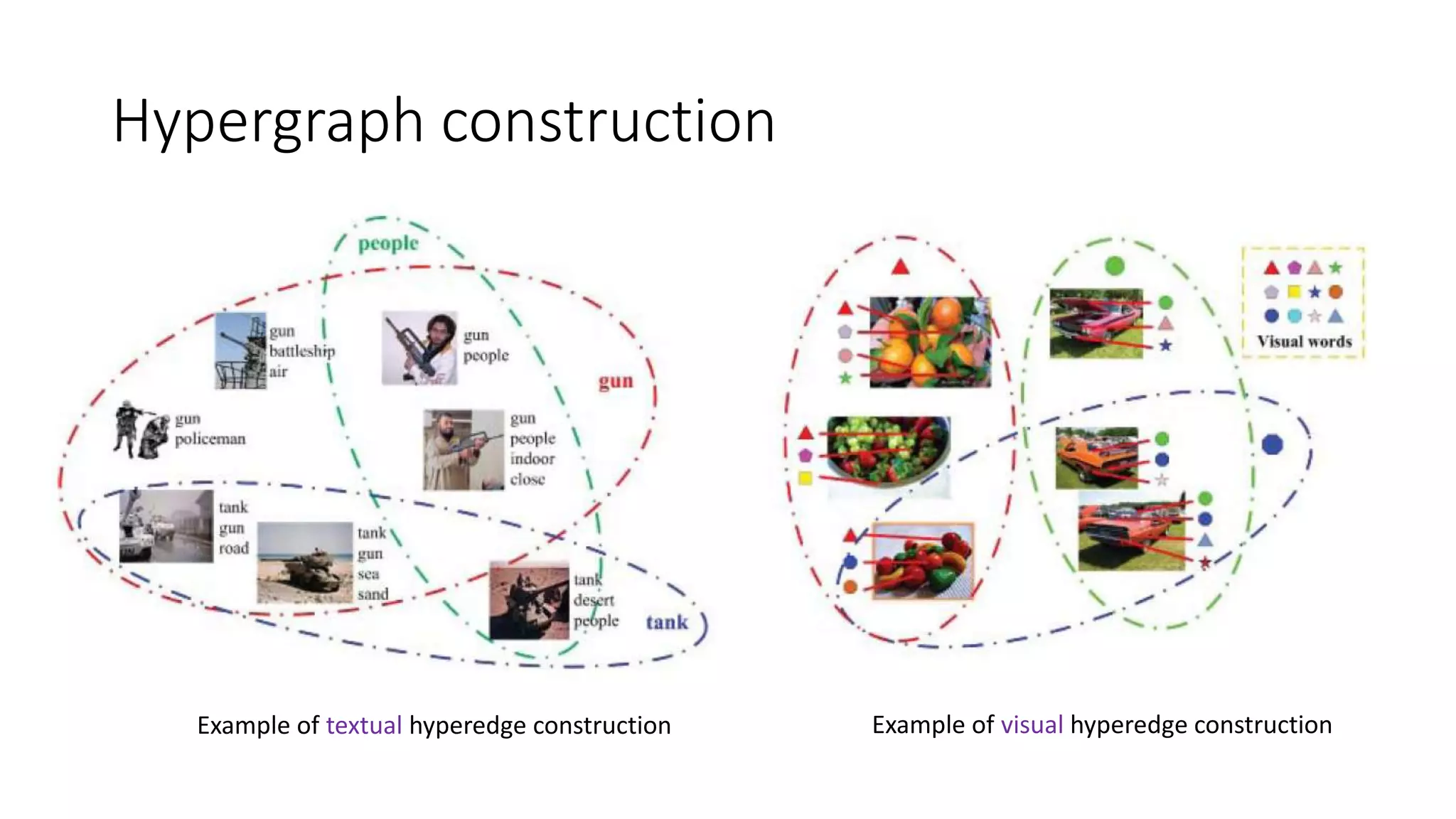

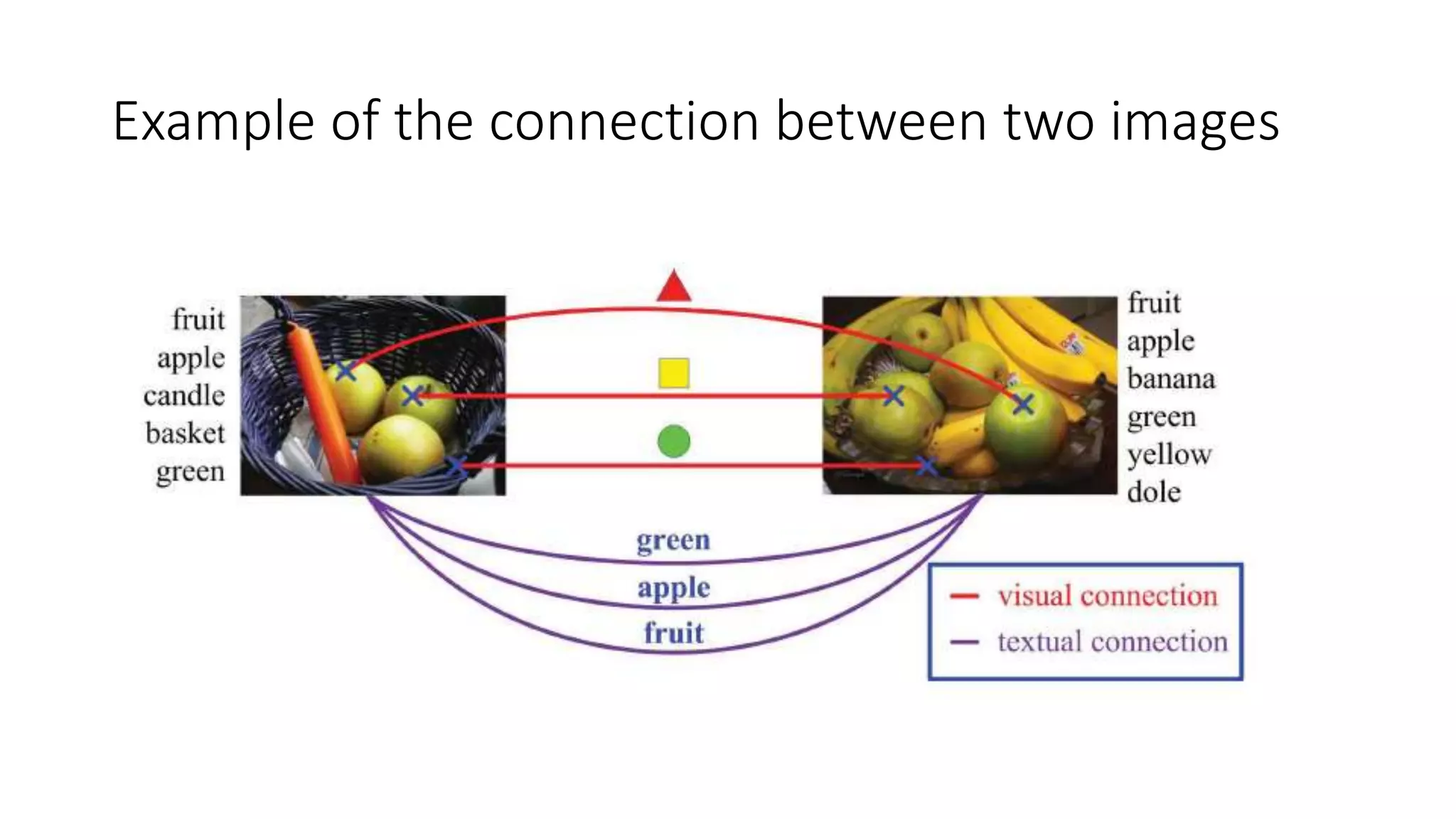

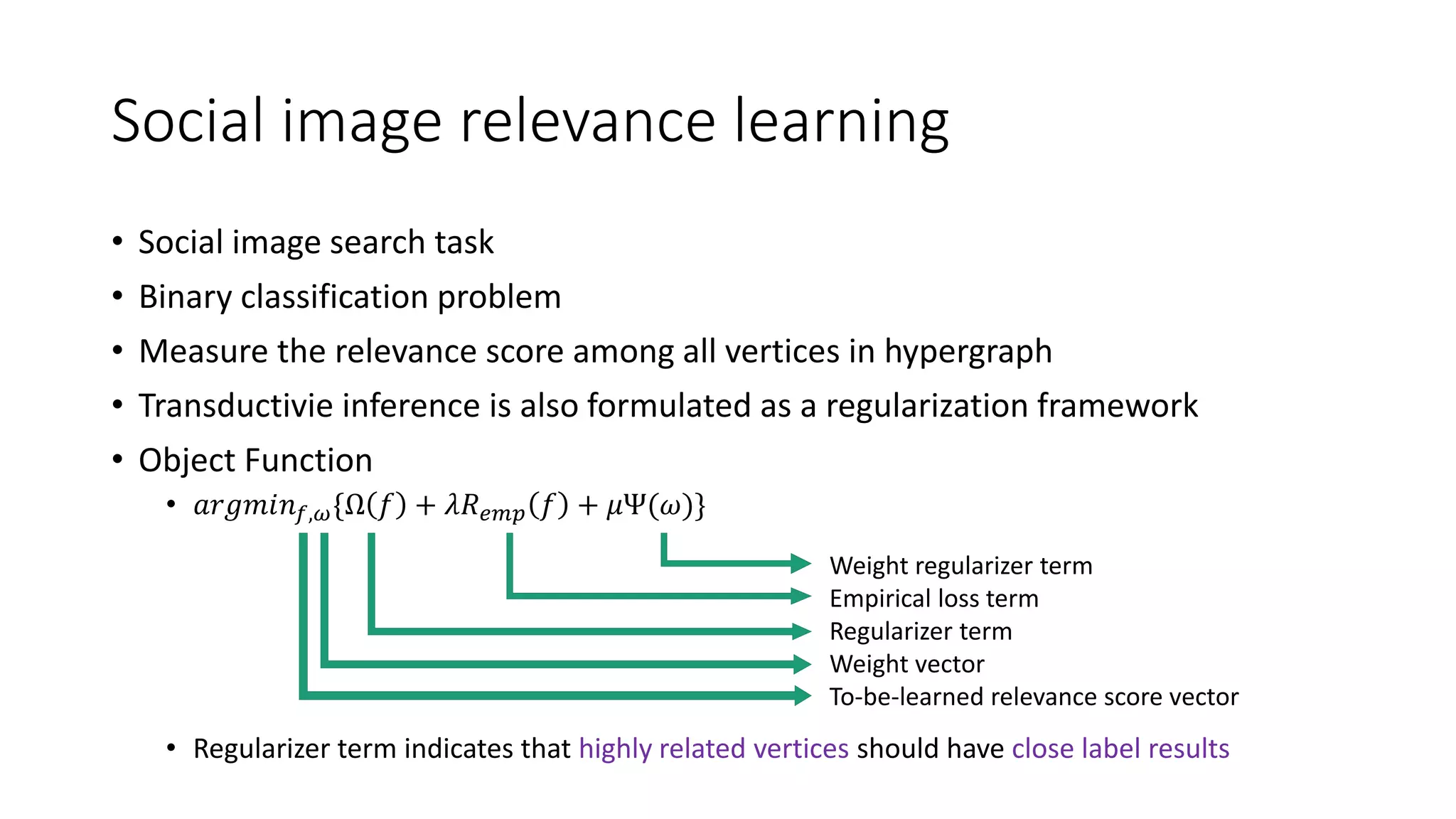

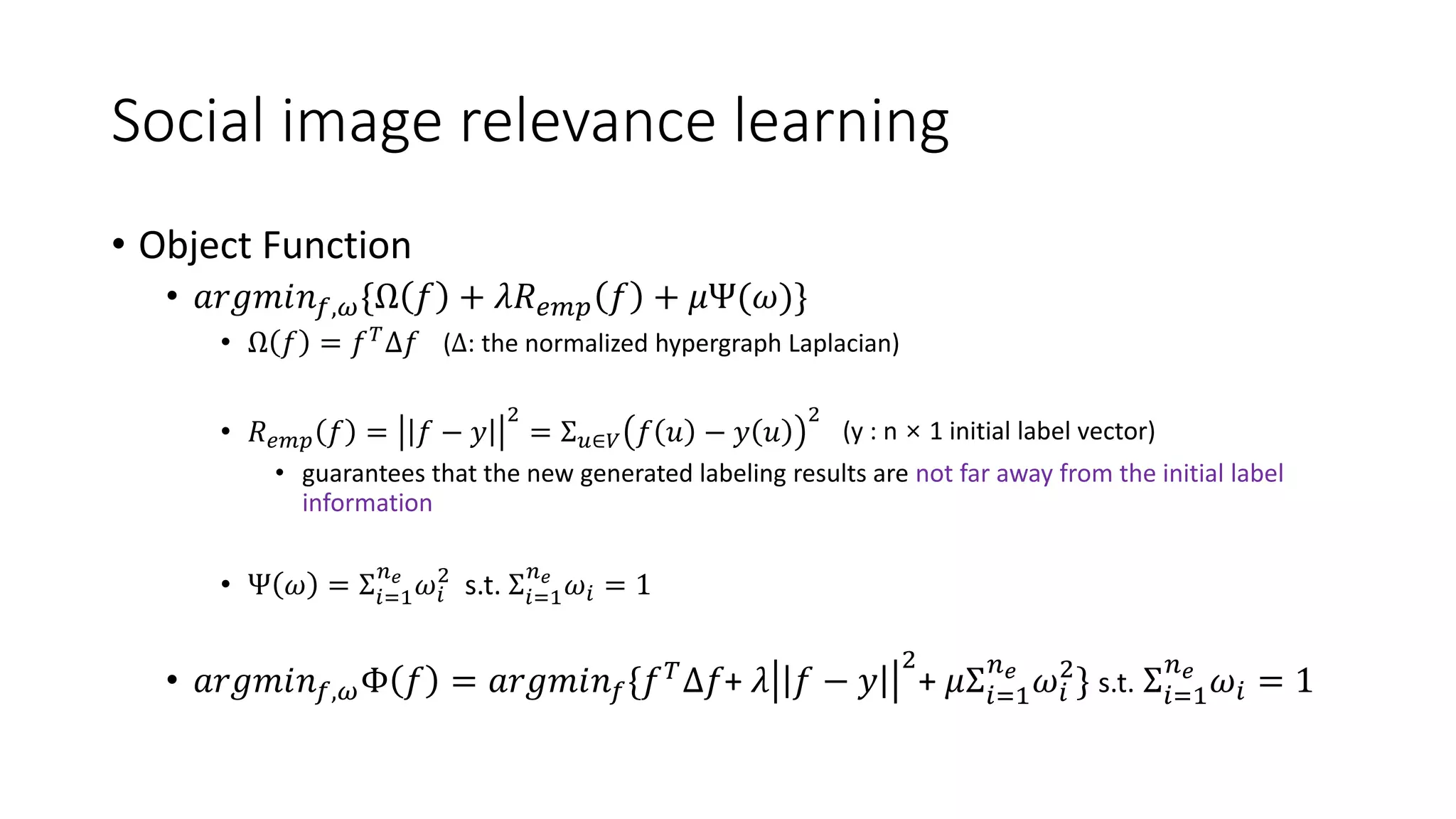

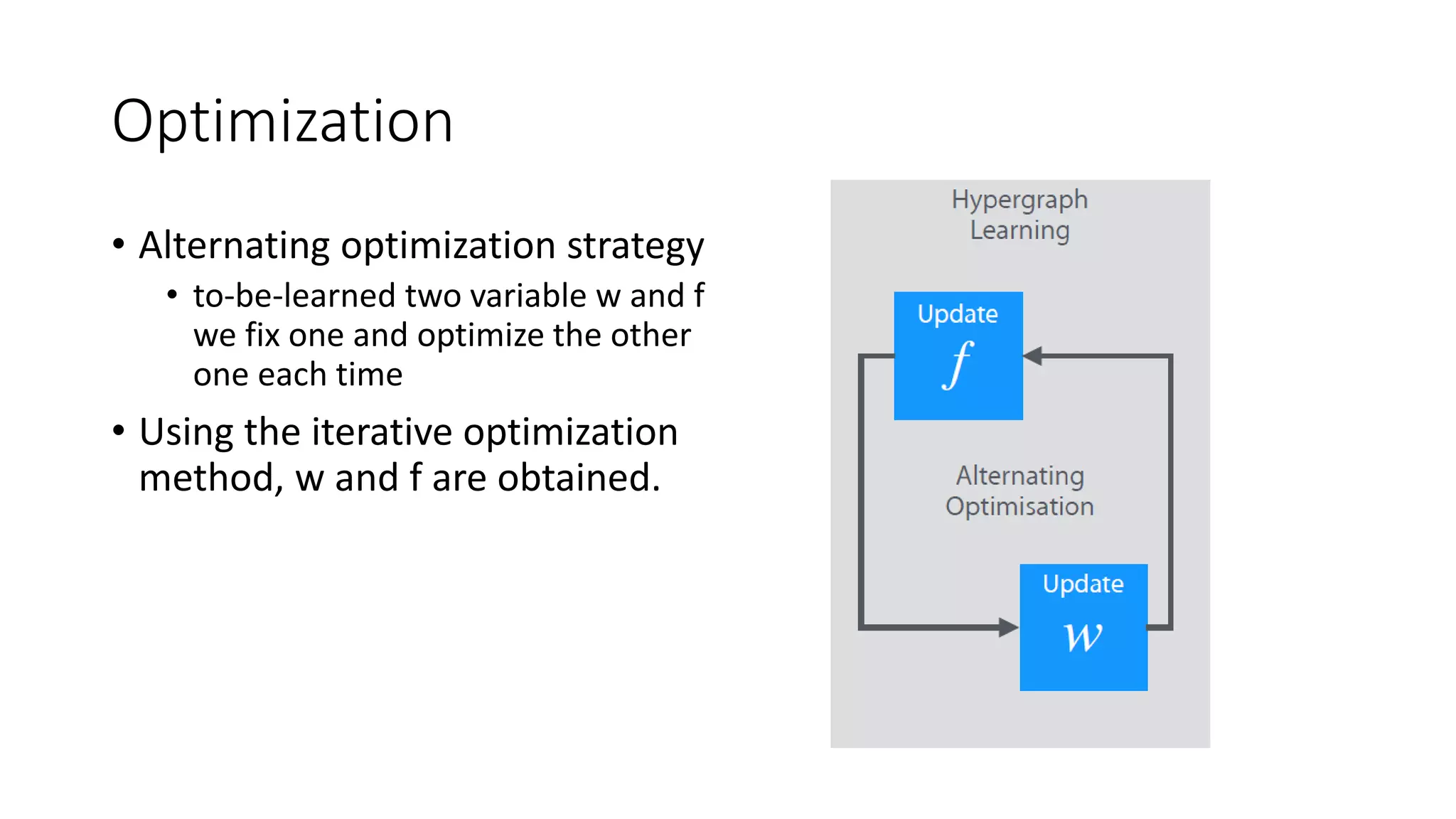

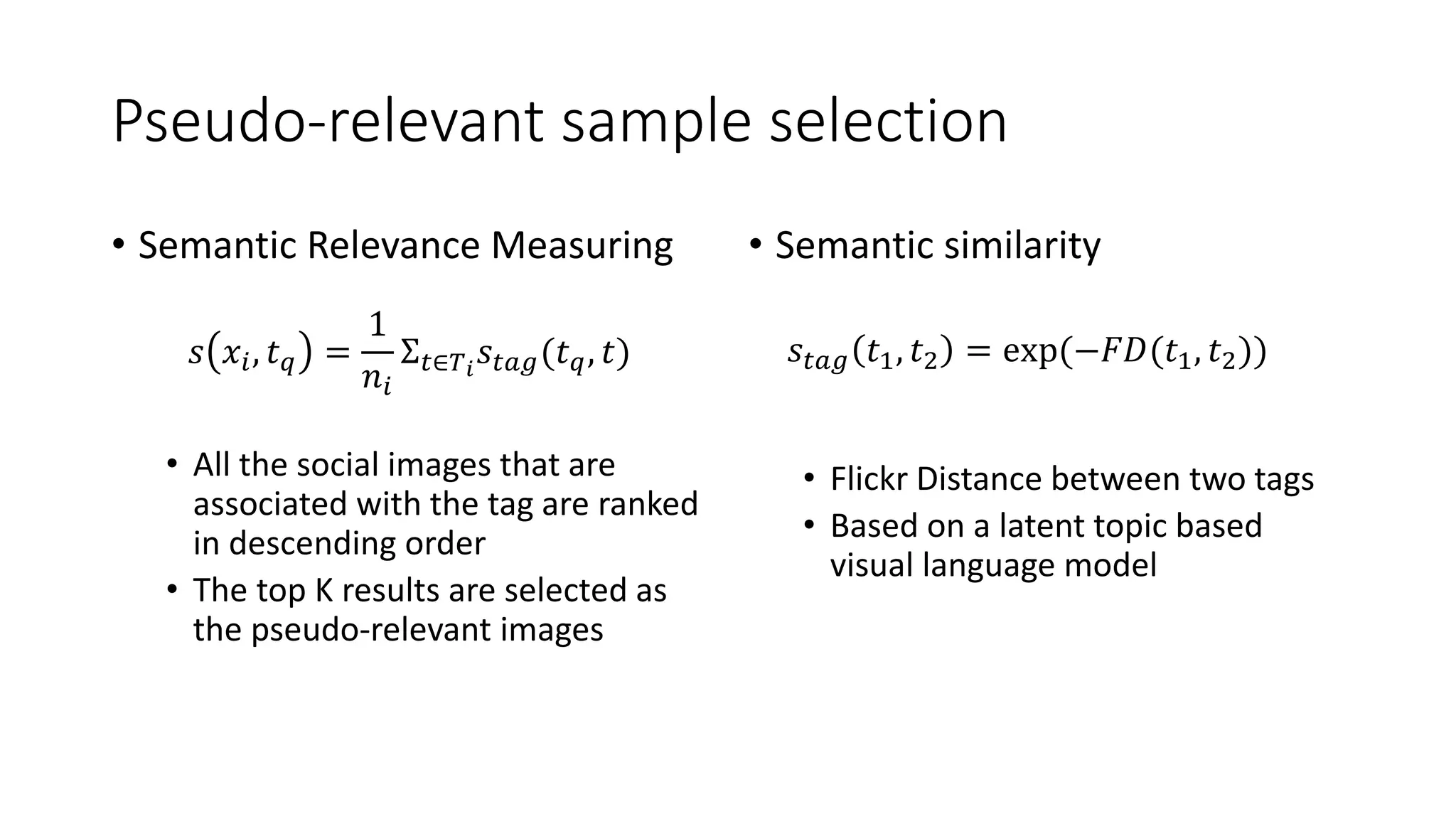

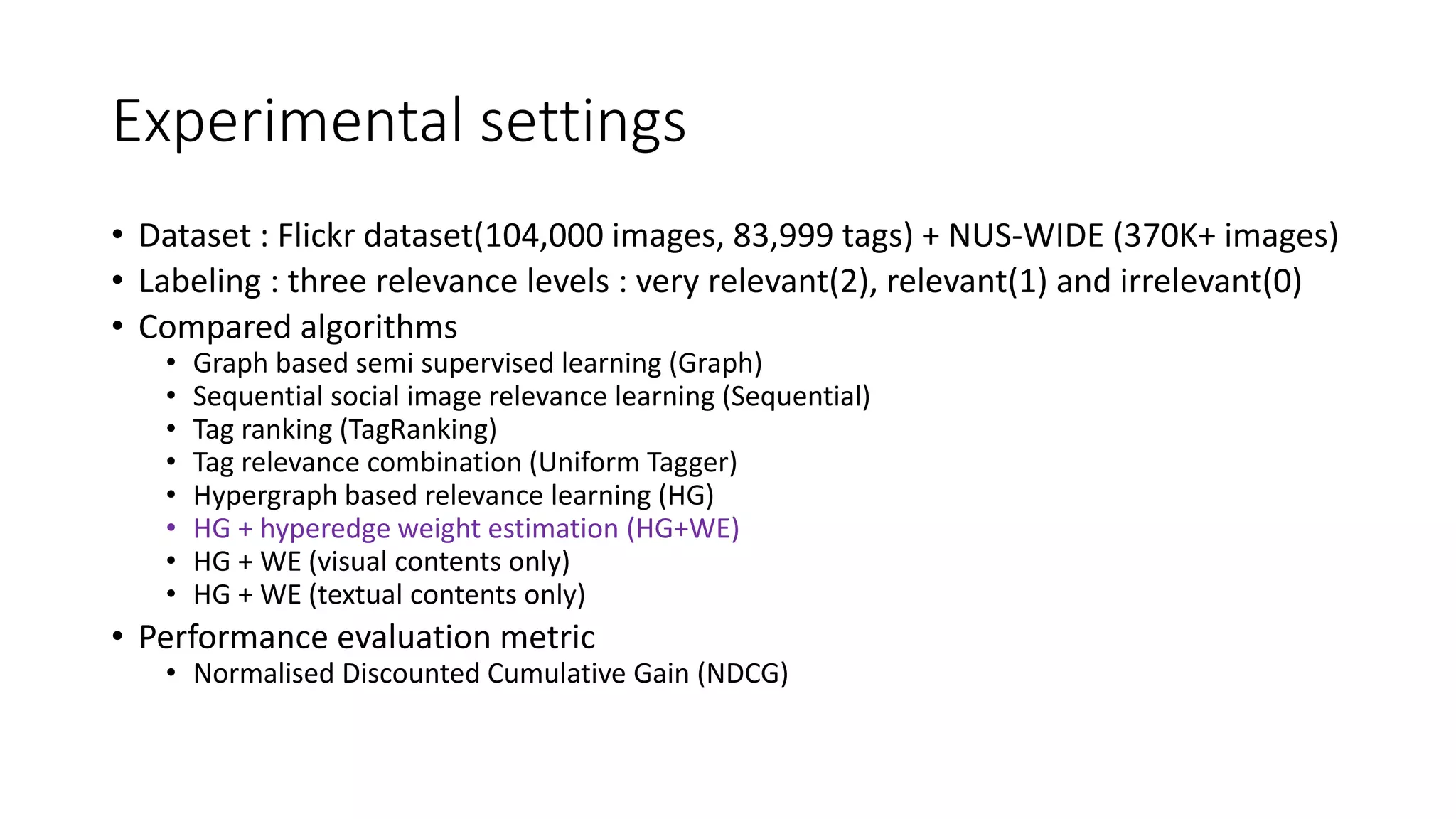

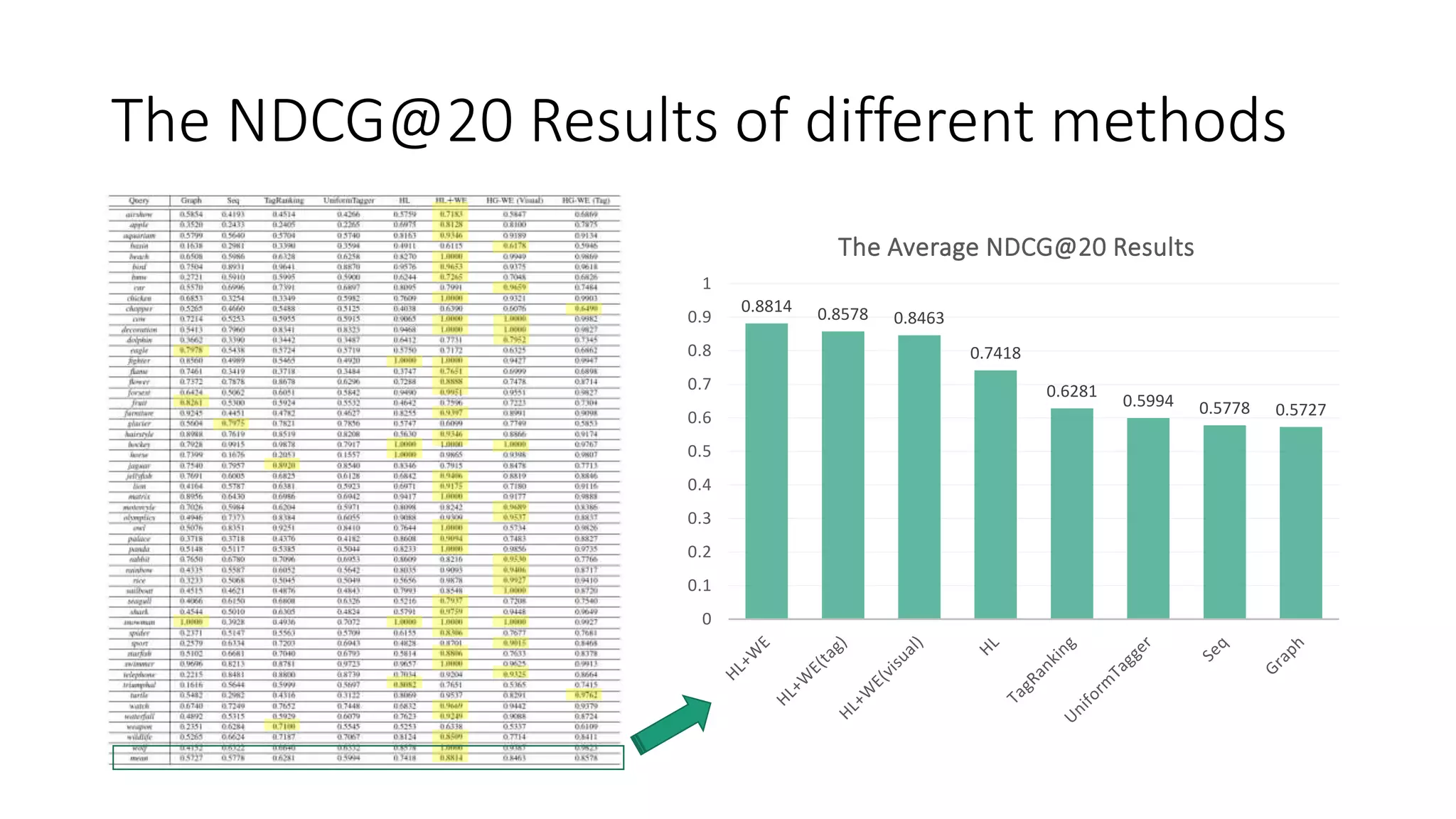

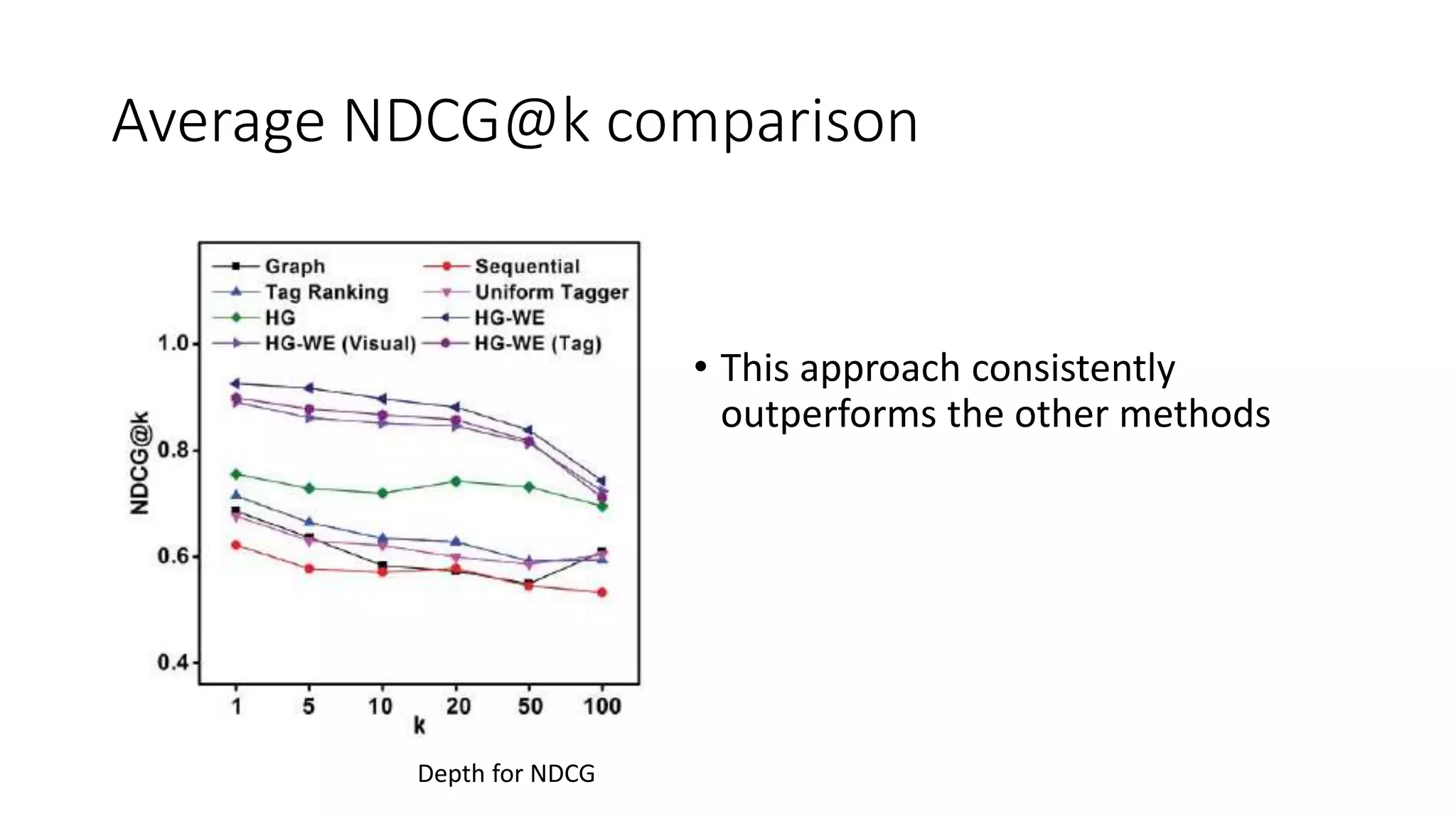

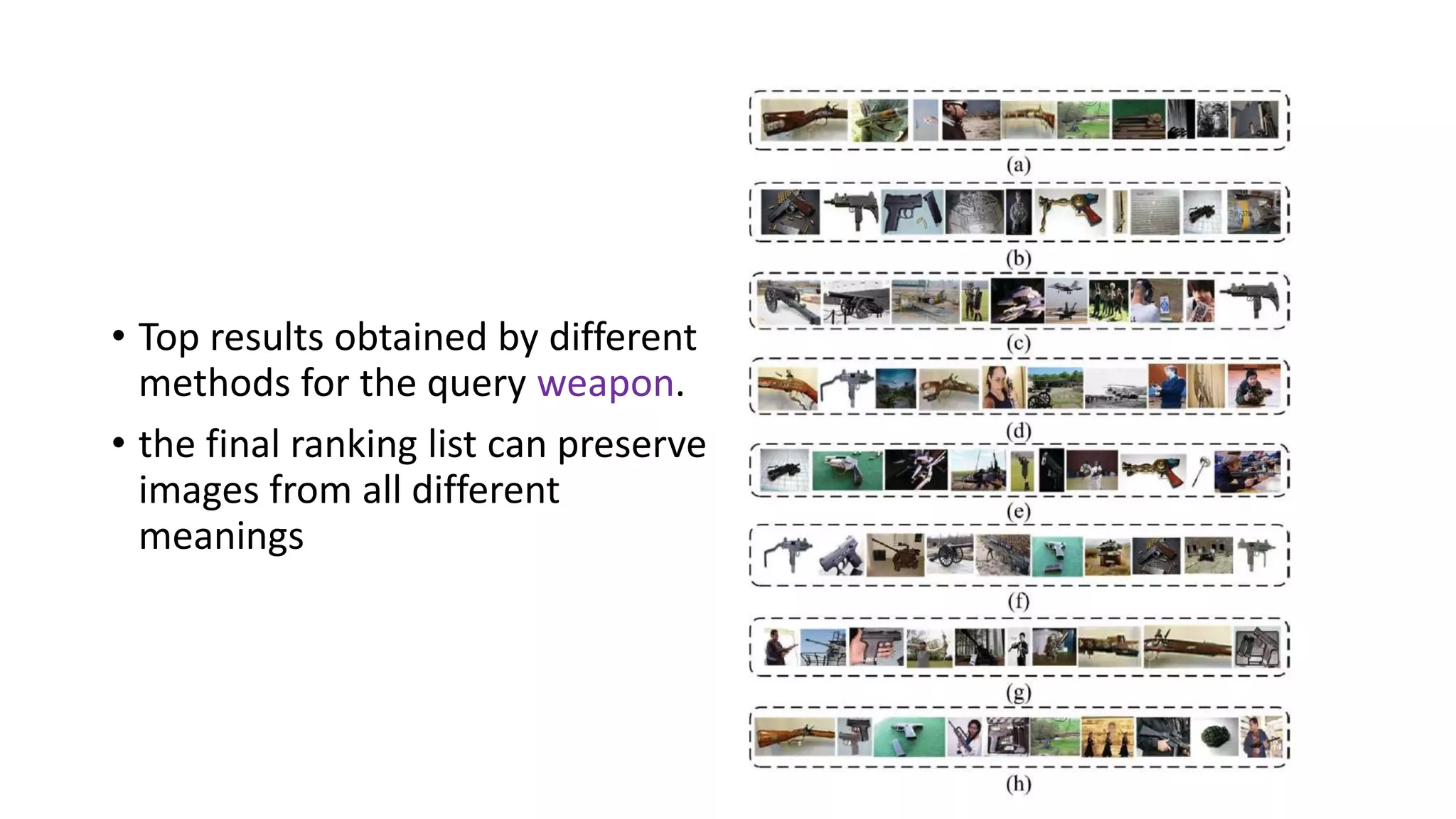

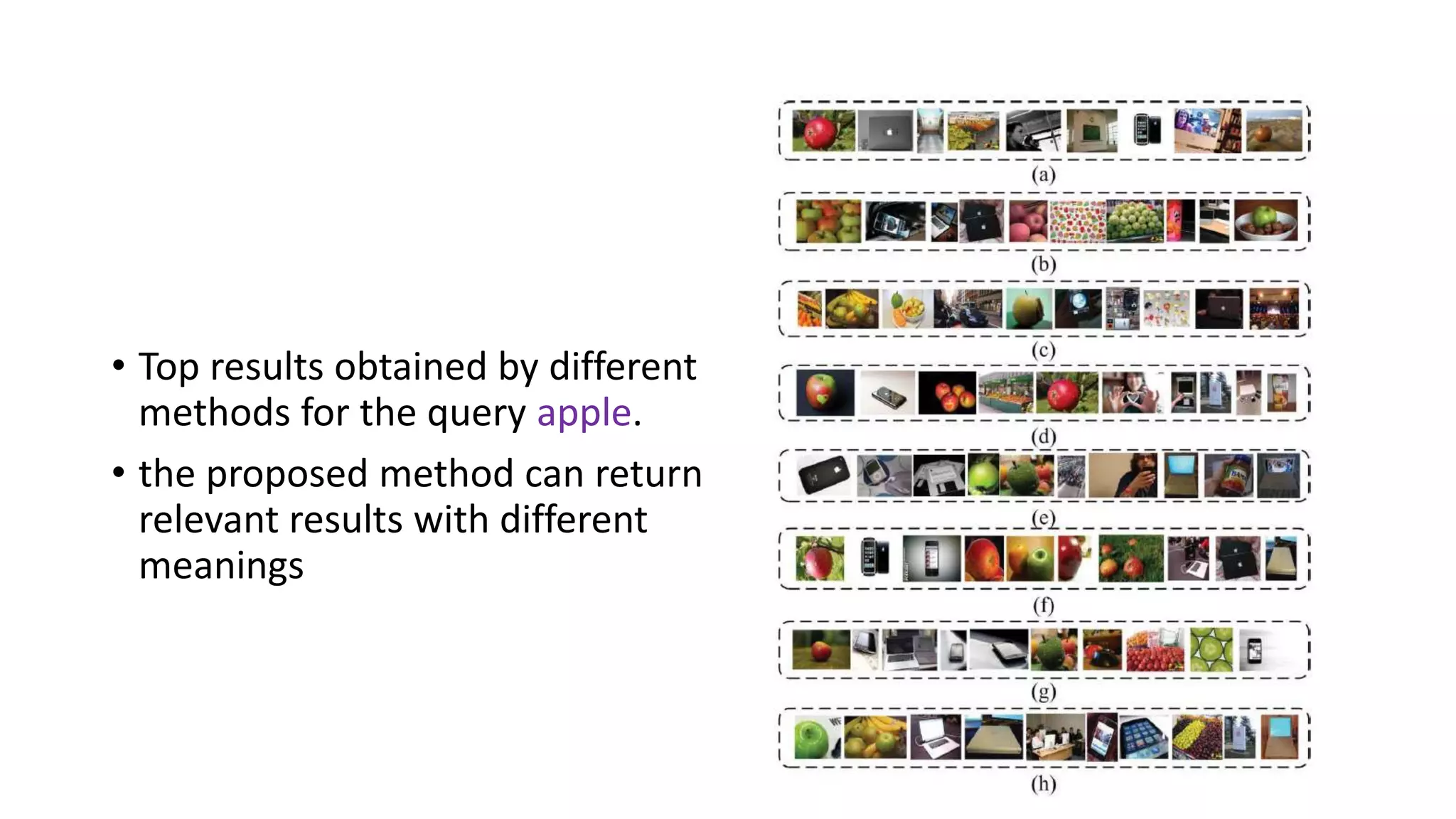

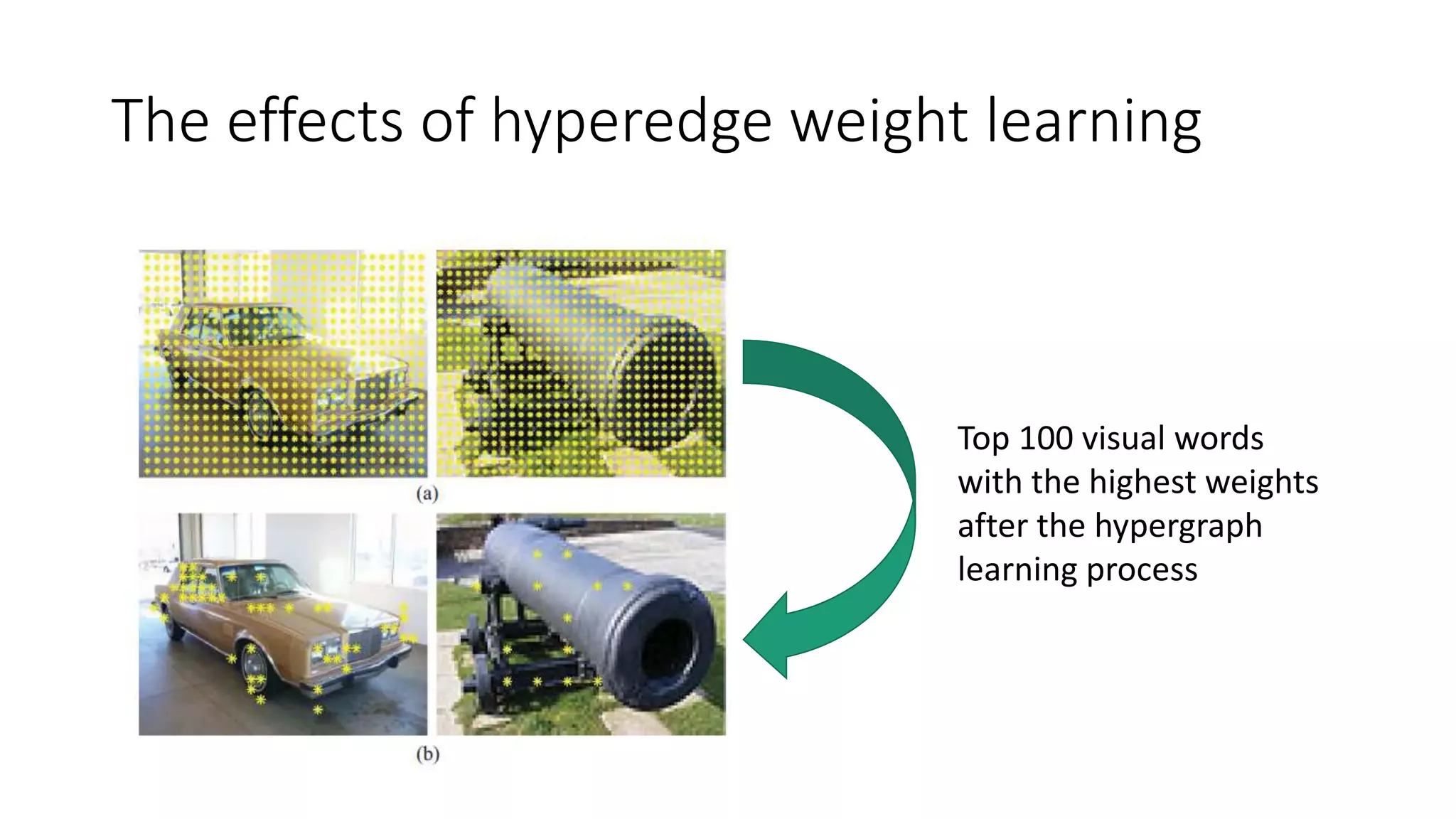

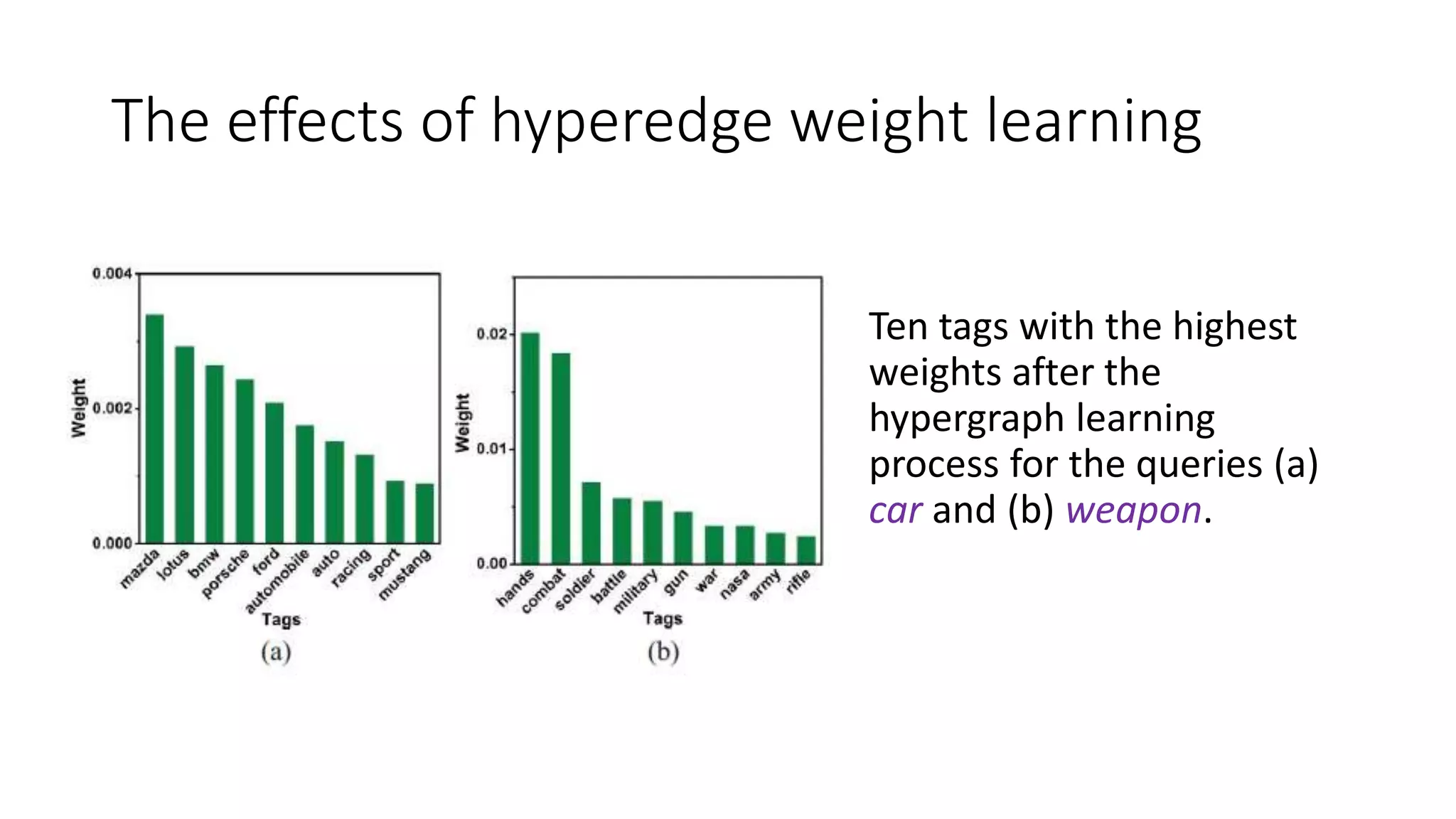

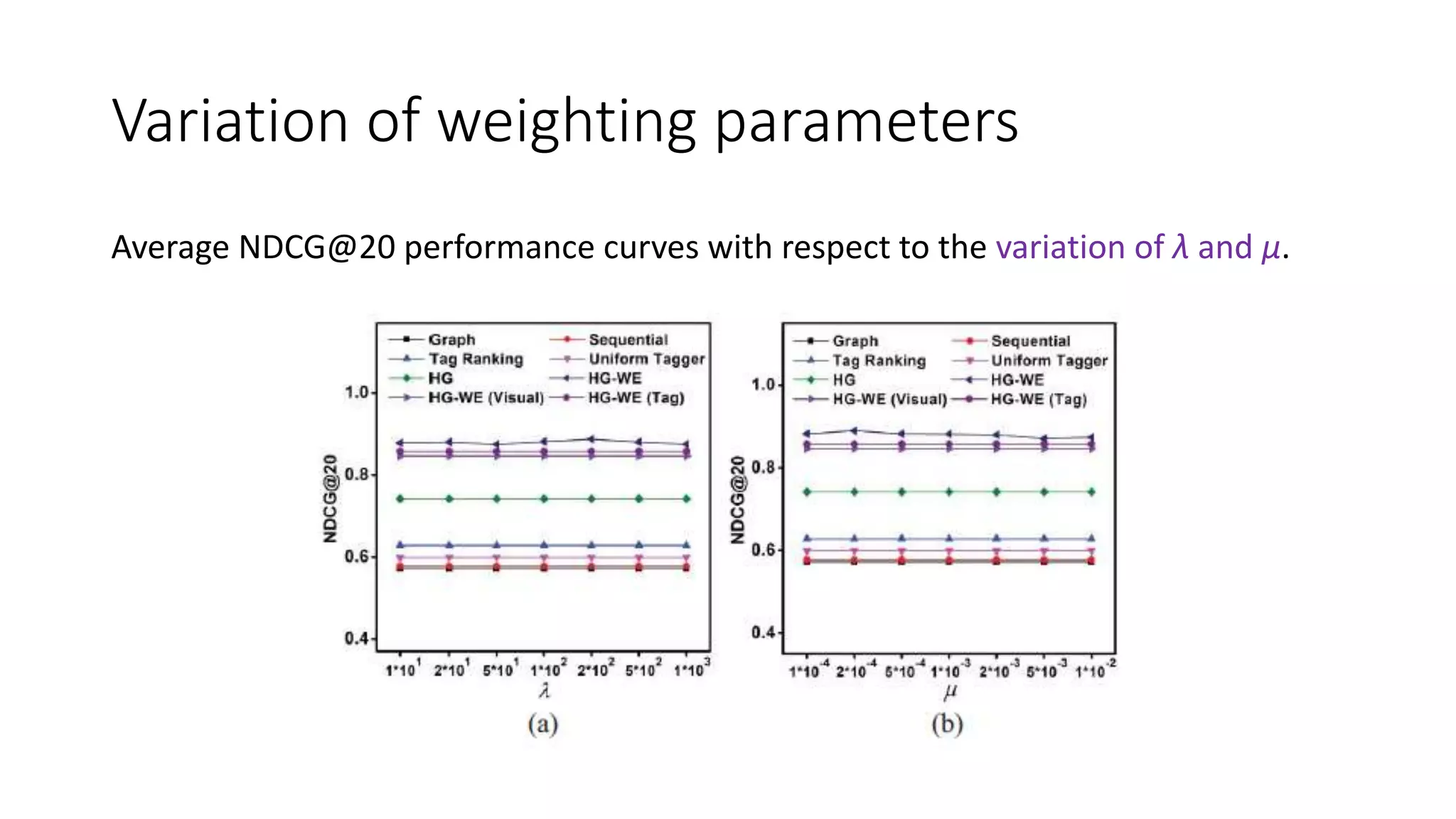

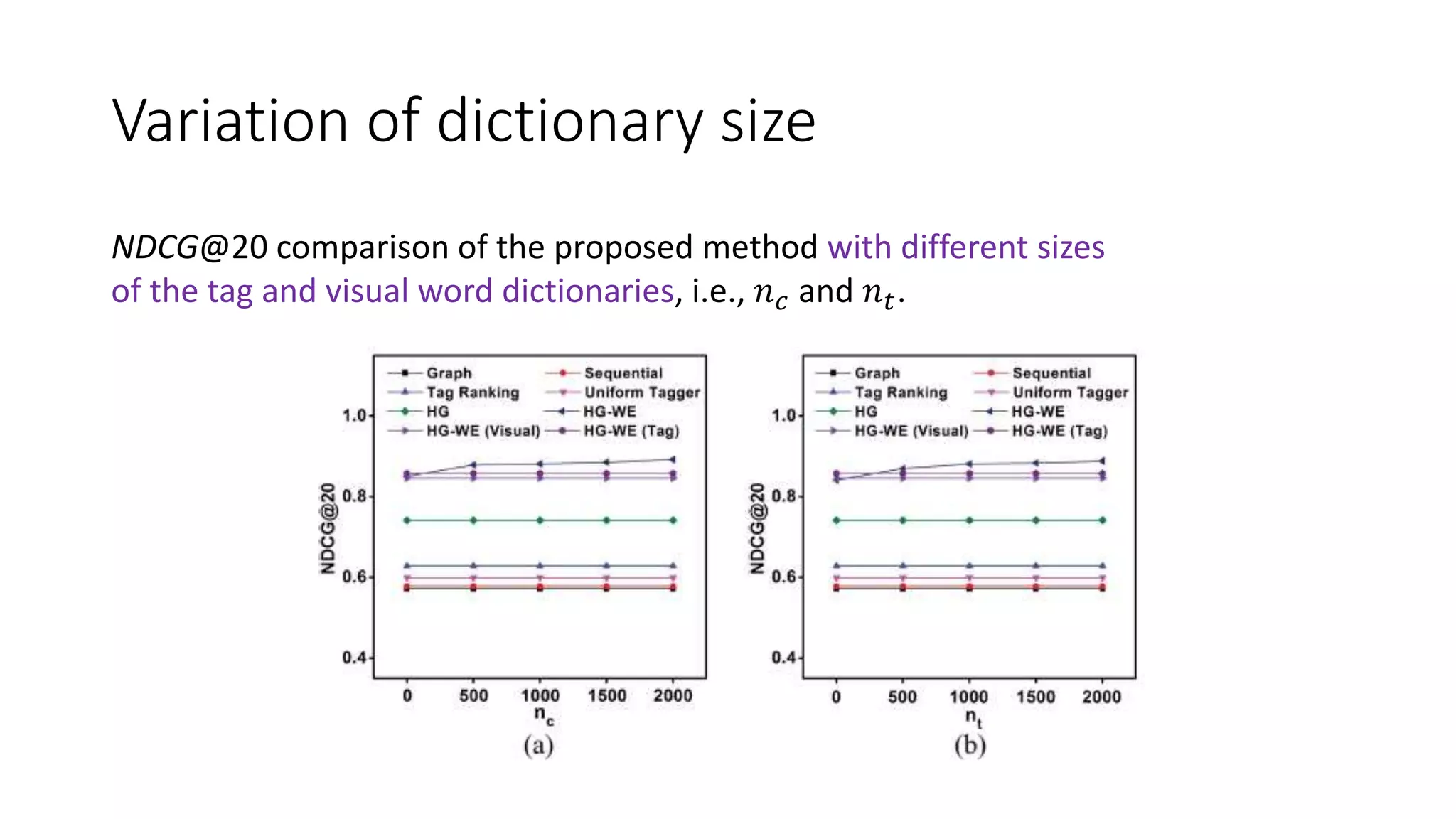

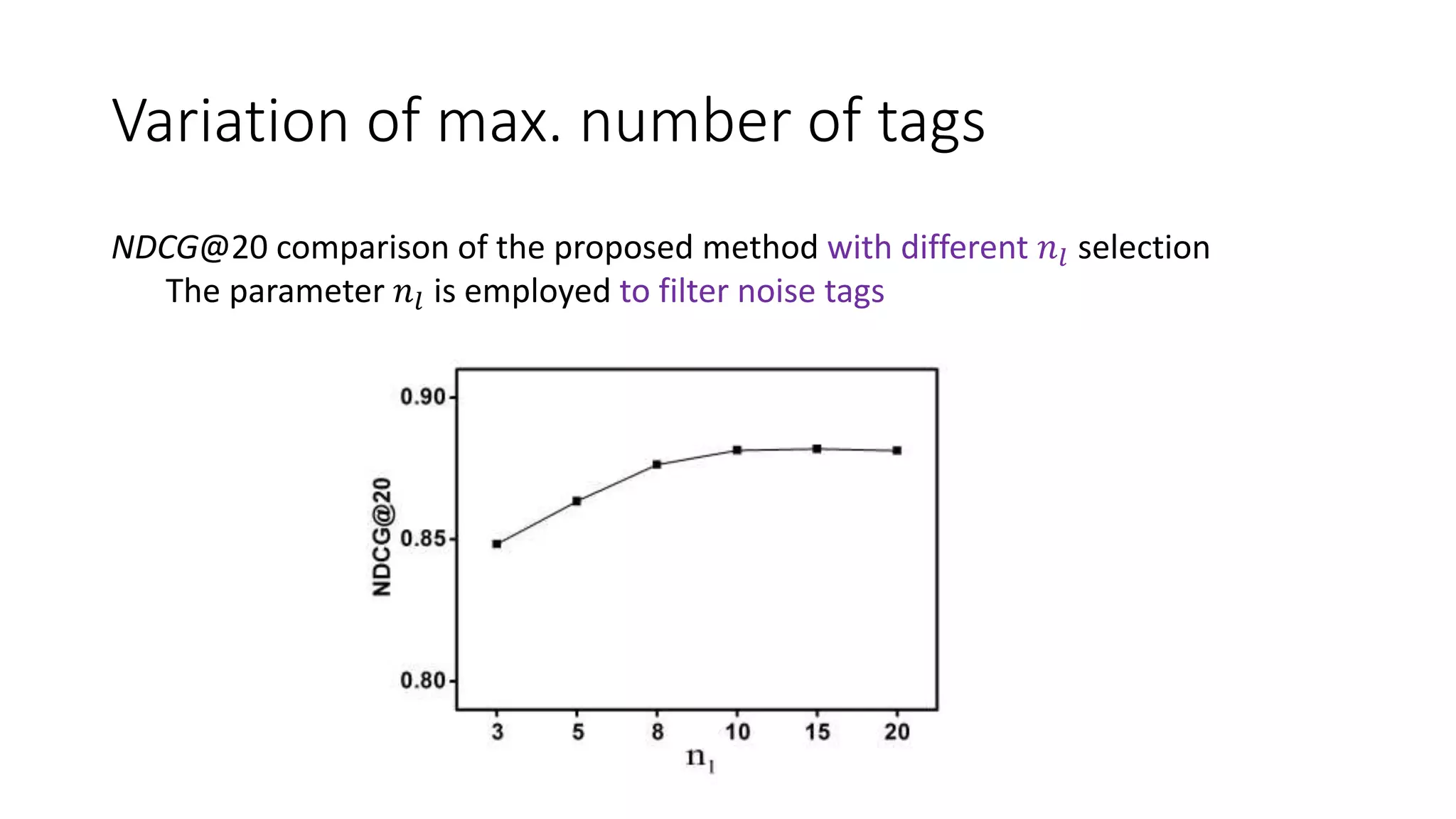

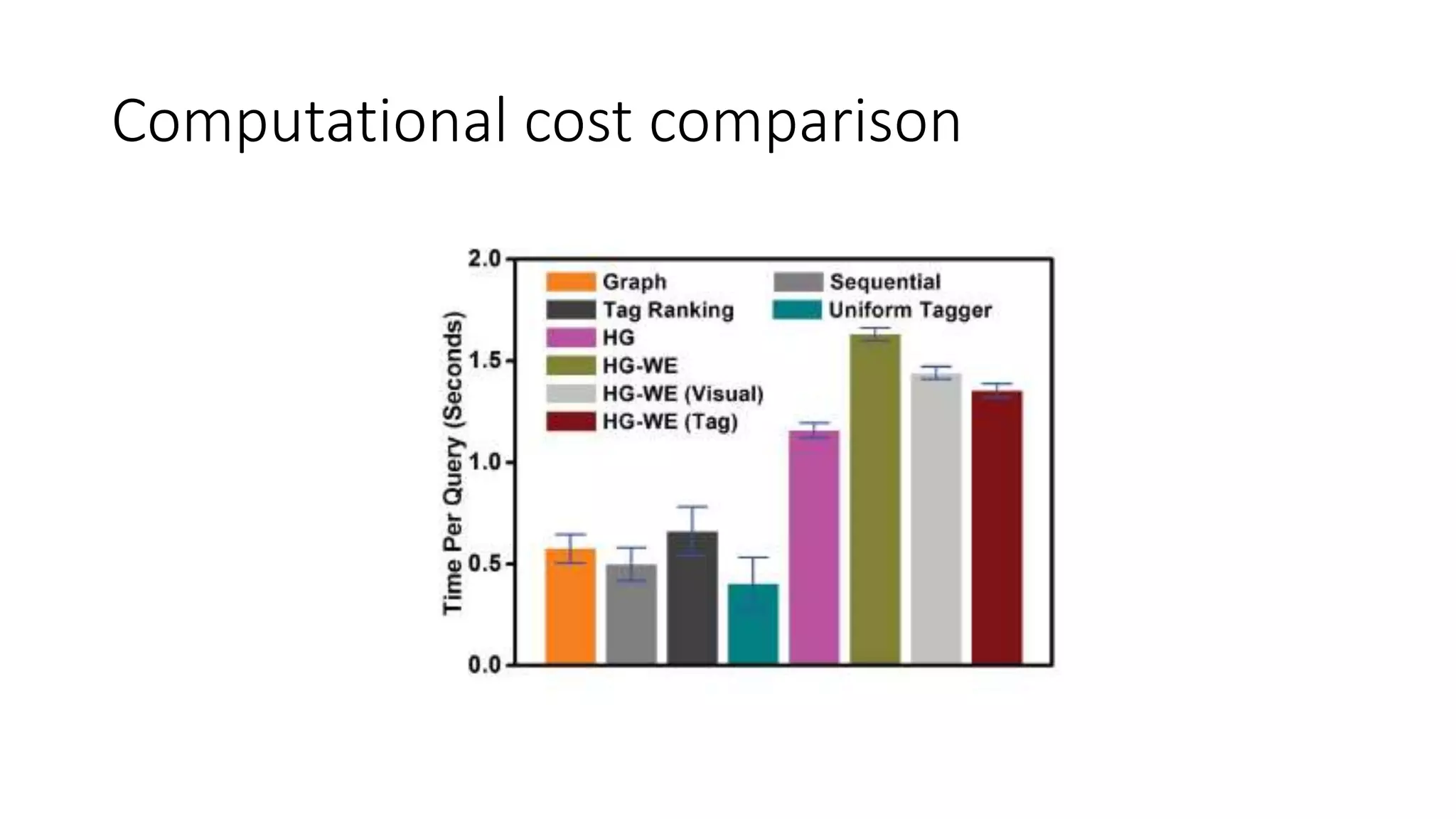

The document presents a hypergraph-based approach to tag-based social image search that uses visual content and textual tags jointly, rather than treating them separately as conventional methods do. It covers how hypergraphs are constructed to represent social images, how relevance scores are learned through binary classification, and which optimization techniques are used to solve the resulting problem. Experimental results show that the proposed method ranks images by relevance to user queries more accurately than existing algorithms.
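
To make the idea of learning relevance scores on a hypergraph concrete, here is a minimal sketch in Python. It is not the document's exact formulation, which the summary above does not spell out. It assumes the standard normalized hypergraph Laplacian regularizer with a squared loss on binary labels, solved in closed form. The function name `hypergraph_relevance`, the toy incidence matrix, the uniform hyperedge weights, and the trade-off parameter `lam` are all illustrative assumptions.

```python
import numpy as np

def hypergraph_relevance(H, w, y, lam=1.0):
    """Relevance scores from a generic hypergraph-regularized least-squares fit.

    H   : (n_images, n_edges) 0/1 incidence matrix (assumed layout).
    w   : (n_edges,) positive hyperedge weights (assumed given, not learned here).
    y   : (n_images,) binary labels, 1 = assumed relevant, 0 = otherwise.
    lam : trade-off between smoothness on the hypergraph and fit to y.
    """
    H = np.asarray(H, dtype=float)
    w = np.asarray(w, dtype=float)
    y = np.asarray(y, dtype=float)

    dv = H @ w            # vertex degrees: total weight of edges containing each image
    de = H.sum(axis=0)    # edge degrees: number of images in each hyperedge
    dv_inv_sqrt = 1.0 / np.sqrt(dv)

    # Theta = Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2}
    theta = (dv_inv_sqrt[:, None] * H) @ np.diag(w / de) @ (H.T * dv_inv_sqrt[None, :])

    n = H.shape[0]
    laplacian = np.eye(n) - theta

    # Minimizing f^T L f + lam * ||f - y||^2 gives (L + lam * I) f = lam * y.
    return np.linalg.solve(laplacian + lam * np.eye(n), lam * y)

# Toy example: 5 images, 4 hyperedges (two tags, two visual words).
# Columns: tag "cat", tag "dog", visual word A, visual word B.
H = np.array([
    [1, 0, 1, 0],   # image 0
    [1, 0, 1, 0],   # image 1
    [1, 0, 0, 1],   # image 2
    [0, 1, 0, 1],   # image 3
    [0, 1, 0, 0],   # image 4
])
w = np.ones(4)                     # uniform hyperedge weights (assumption)
y = np.array([1, 0, 0, 0, 0])      # only image 0 is marked relevant

scores = hypergraph_relevance(H, w, y)
ranking = np.argsort(-scores)      # image indices, most relevant first
print(ranking, scores)
```

In this toy setup each hyperedge groups images that share a tag or a visual word, so an image that shares more hyperedges with the labeled image receives a higher score. A full system would also need to choose or learn the hyperedge weights, which this sketch leaves fixed.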