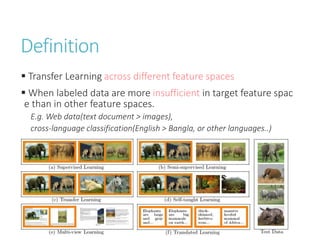

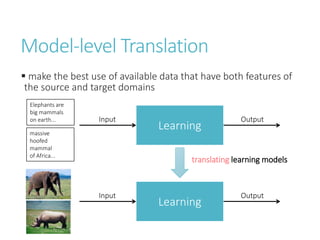

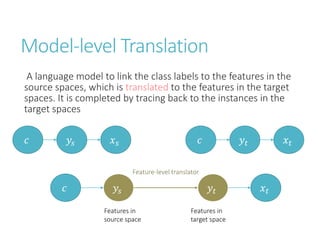

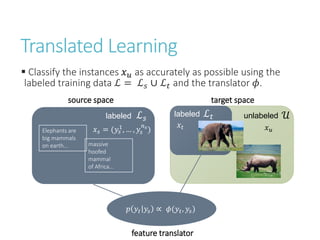

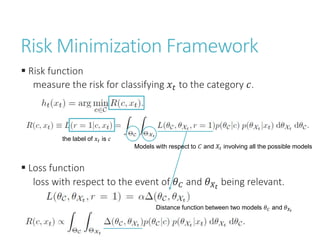

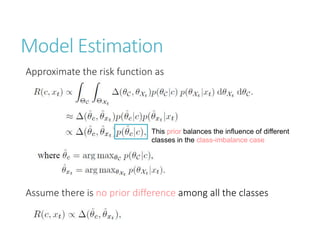

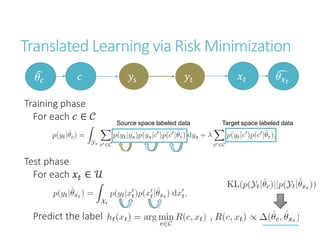

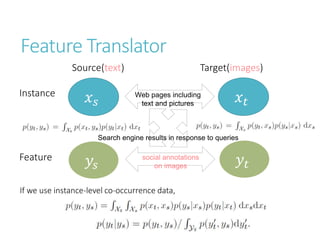

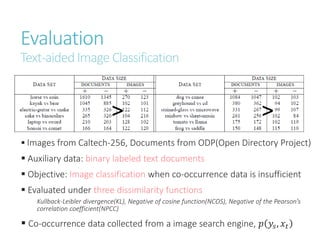

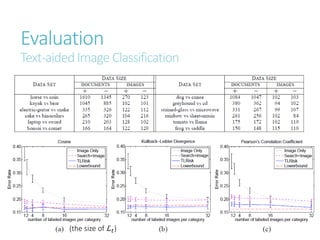

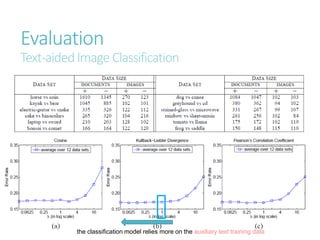

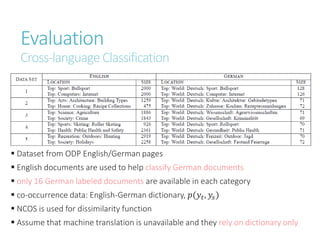

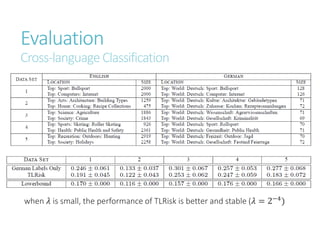

This document presents translated learning, an approach to transfer learning across different feature spaces. A feature translator links the labels available in a source feature space to a target feature space, so that only a small amount of labeled data is needed in the target space. The approach is formulated within a risk minimization framework, and the models are estimated with an approximation method. Its effectiveness is demonstrated on two applications, text-aided image classification and cross-language classification, where it performs better than classification without translated learning, especially when labeled data in the target space is limited.
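To make the role of the feature translator concrete, here is a minimal sketch rather than the method as presented in the slides. It rests on several assumptions not stated in the summary: features are discrete, the translator is a conditional table estimated from co-occurrence counts (for example, words seen alongside image patches), class models in the target space are obtained by chaining the source class model through the translator, and a target instance is scored with a naive-Bayes-style log-likelihood. All function names and the toy data are hypothetical.

```python
import math
from collections import defaultdict


def normalize(counts):
    """Turn non-negative counts into a probability distribution."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {key: value / total for key, value in counts.items()}


def estimate_translator(cooccurrence, smoothing=1e-3):
    """Estimate p(target_feature | source_feature) from co-occurring pairs.

    Assumption: `cooccurrence` is a list of (source_feature, target_feature)
    pairs, e.g. a word and an image patch observed together.
    """
    target_vocab = sorted({t for _, t in cooccurrence})
    counts = defaultdict(lambda: defaultdict(float))
    for s, t in cooccurrence:
        counts[s][t] += 1.0
    translator = {}
    for s, row in counts.items():
        # Additive smoothing so no target feature has zero probability.
        smoothed = {t: row.get(t, 0.0) + smoothing for t in target_vocab}
        translator[s] = normalize(smoothed)
    return translator


def translate_class_models(source_models, translator):
    """Chain p(y_s | c) through the translator to obtain p(y_t | c)."""
    target_models = {}
    for label, p_source in source_models.items():
        p_target = defaultdict(float)
        for s, p_s in p_source.items():
            for t, p_t_given_s in translator.get(s, {}).items():
                p_target[t] += p_s * p_t_given_s
        target_models[label] = normalize(p_target)
    return target_models


def classify(target_features, target_models):
    """Pick the class whose translated model best explains the instance."""
    def score(label):
        model = target_models[label]
        return sum(math.log(model.get(t, 1e-12)) for t in target_features)
    return max(target_models, key=score)


# Toy data (hypothetical): class models learned from labeled source text.
source_models = {
    "animal": {"fur": 0.8, "wheel": 0.2},
    "vehicle": {"fur": 0.1, "wheel": 0.9},
}
# Toy co-occurrence pairs linking words to visual features.
cooccurrence = (
    [("fur", "texture_patch")] * 3 + [("fur", "round_patch")]
    + [("wheel", "round_patch")] * 3 + [("wheel", "texture_patch")]
)

translator = estimate_translator(cooccurrence)
target_models = translate_class_models(source_models, translator)
prediction = classify(["texture_patch", "texture_patch", "round_patch"], target_models)
print(prediction)
```

In this sketch no labeled target instances are used at all; in the setting the summary describes, the small amount of labeled target data would additionally inform the estimates.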