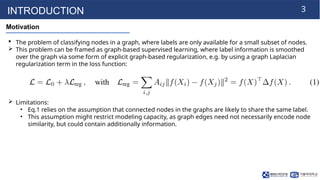

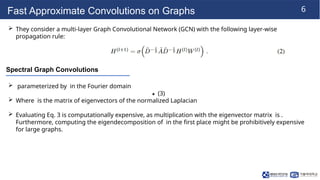

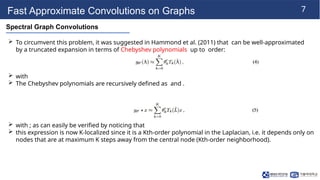

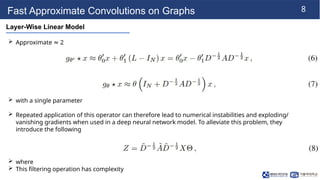

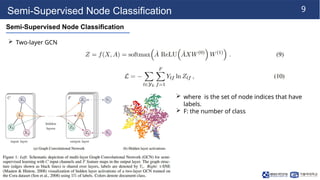

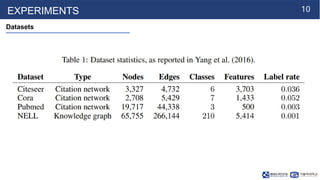

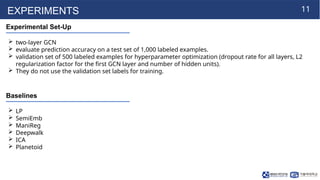

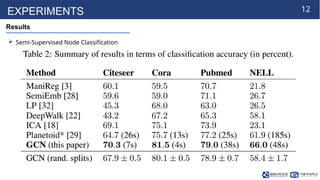

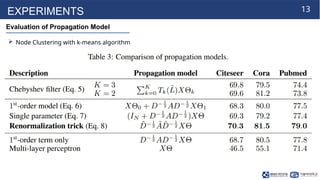

The document presents an approach to semi-supervised classification of nodes in graphs, based on a graph convolutional network (GCN) built around an efficient layer-wise propagation rule. It reports higher classification accuracy and better computational efficiency than the state-of-the-art techniques it is compared against. The experiments indicate that the model encodes both graph structure and node features in a form that is useful for classification.
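
The summary does not spell out the layer-wise propagation rule. As a rough illustration, the sketch below assumes the commonly used renormalized form $H^{(l+1)} = \sigma(\hat{A} H^{(l)} W^{(l)})$ with $\hat{A} = \tilde{D}^{-1/2}(A + I)\tilde{D}^{-1/2}$, where $\tilde{D}$ is the degree matrix of $A + I$. The function names (`normalize_adjacency`, `gcn_layer`), the dense list-of-lists representation, and the toy three-node graph are hypothetical choices made for this example and are not taken from the document.

```python
import math


def normalize_adjacency(adj):
    """Return D~^{-1/2} (A + I) D~^{-1/2} for a dense, non-negative adjacency matrix."""
    n = len(adj)
    # Add self-loops so each node also keeps its own features.
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    # Degrees of A + I; each is at least 1 because of the self-loop.
    deg = [sum(row) for row in a_tilde]
    inv_sqrt = [1.0 / math.sqrt(d) for d in deg]
    return [[inv_sqrt[i] * a_tilde[i][j] * inv_sqrt[j] for j in range(n)]
            for i in range(n)]


def matmul(a, b):
    """Plain dense matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def relu(m):
    """Element-wise ReLU."""
    return [[max(0.0, v) for v in row] for row in m]


def gcn_layer(a_hat, h, w, activation=relu):
    """One propagation step: activation(A_hat @ H @ W)."""
    return activation(matmul(matmul(a_hat, h), w))


# Toy example (assumed data): a path graph 0 - 1 - 2 with one-hot node features.
adj = [[0.0, 1.0, 0.0],
       [1.0, 0.0, 1.0],
       [0.0, 1.0, 0.0]]
features = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]
# Hypothetical 3x2 weight matrix; in practice these weights are learned.
weights = [[0.5, -0.2],
           [0.1, 0.3],
           [-0.4, 0.6]]

a_hat = normalize_adjacency(adj)
hidden = gcn_layer(a_hat, features, weights)  # shape: 3 nodes x 2 hidden units
```

Each layer mixes a node's features with those of its direct neighbours, so stacking two such layers lets information flow from nodes two hops away. A practical implementation would use sparse matrices so that the cost scales with the number of edges; the dense lists here only keep the sketch dependency-free.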