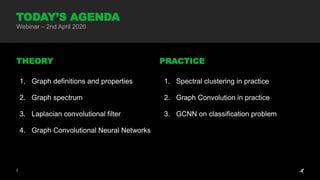

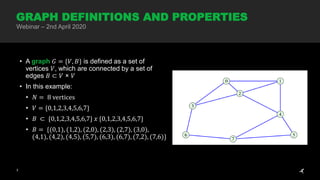

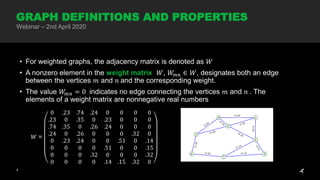

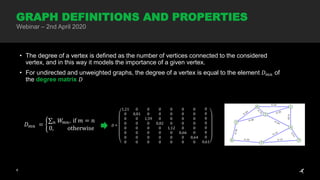

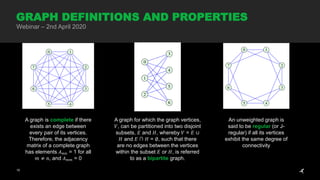

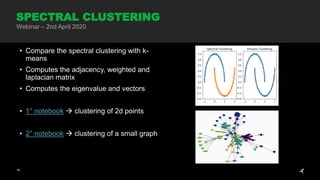

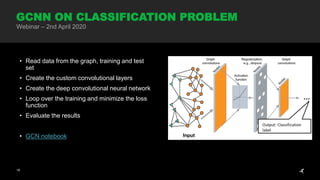

The document discusses graph neural networks, covering graph definitions and properties as well as applications such as spectral clustering and graph convolutional neural networks. It introduces key concepts such as adjacency matrices and graph Laplacians, and shows how they are used to build convolutional filters for classification tasks. The webinar, held on April 2nd, 2020, also includes practical demonstrations of clustering techniques and deep learning models for graph processing.
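
To make the adjacency matrix and graph Laplacian mentioned above concrete, here is a minimal pure-Python sketch. It is not taken from the webinar: the toy path graph, the function names (`adjacency_matrix`, `laplacian`, `propagate`), and the choice of the symmetric normalization with self-loops (one common form of graph convolution) are all assumptions made for illustration. The filter used in the webinar may differ.

```python
import math

def adjacency_matrix(n, edges):
    """Symmetric 0/1 adjacency matrix of an undirected graph on n nodes."""
    A = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        A[i][j] = 1.0
        A[j][i] = 1.0
    return A

def laplacian(A):
    """Unnormalized graph Laplacian L = D - A, where D is the diagonal degree matrix."""
    n = len(A)
    deg = [sum(row) for row in A]
    return [[(deg[i] if i == j else 0.0) - A[i][j] for j in range(n)]
            for i in range(n)]

def propagate(A, H):
    """One propagation step D~^(-1/2) (A + I) D~^(-1/2) H.

    Assumption: self-loops plus symmetric normalization; the learned weight
    matrix and the nonlinearity of a full layer are omitted.
    """
    n = len(A)
    # Add self-loops so each node keeps part of its own feature.
    A_hat = [[A[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    d = [sum(row) for row in A_hat]
    norm = [[A_hat[i][j] / math.sqrt(d[i] * d[j]) for j in range(n)]
            for i in range(n)]
    n_features = len(H[0])
    return [[sum(norm[i][k] * H[k][c] for k in range(n))
             for c in range(n_features)]
            for i in range(n)]

# Hypothetical toy graph: the path 0 - 1 - 2 - 3.
edges = [(0, 1), (1, 2), (2, 3)]
A = adjacency_matrix(4, edges)
L = laplacian(A)

# One scalar feature per node, nonzero only at node 0.
H = [[1.0], [0.0], [0.0], [0.0]]
H_next = propagate(A, H)
```

Every row of `L` sums to zero, which is the property spectral clustering relies on when it uses the Laplacian's eigenvectors. After one propagation step the feature at node 0 has spread only to its direct neighbor, node 1, so stacking such steps widens the neighborhood each node can see.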