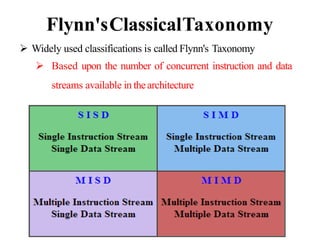

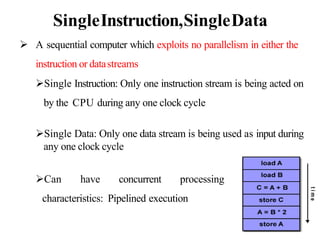

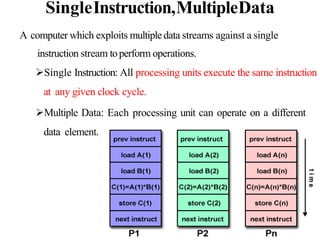

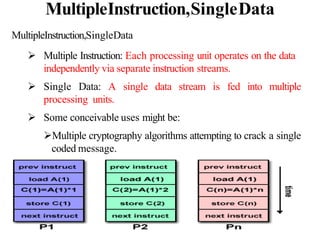

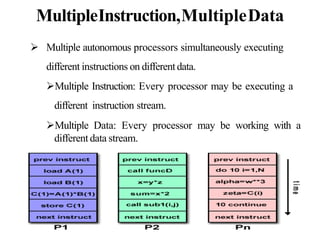

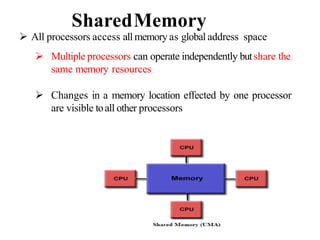

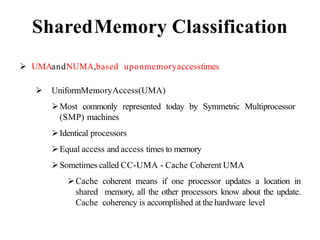

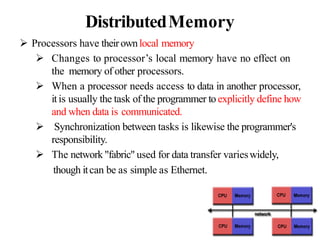

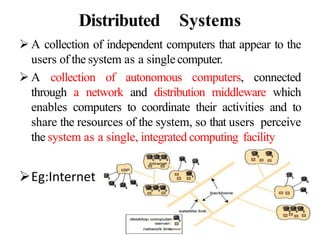

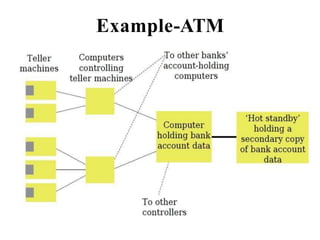

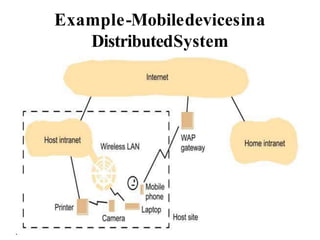

Parallel and distributed computing breaks a problem into discrete parts that can be solved simultaneously, with multiple processors working concurrently on different parts. Parallel architectures are classified by how instructions and data are distributed across processors. Shared memory systems give all processors access to a common memory space, while distributed memory systems assign private memory to each processor and require explicit data transfer between processors. Large-scale systems may combine the two approaches in hybrid designs. Distributed systems extend parallelism across a network and present users with a single, integrated view of geographically dispersed computers and resources. Key challenges for distributed systems include transparency, scalability, fault tolerance, and concurrency.
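
The contrast between shared memory and distributed memory can be illustrated with a small sketch. It is not taken from the slides: the function names (`split`, `shared_memory_sum`, `message_passing_sum`) are hypothetical, and Python threads stand in for processors purely to show the decomposition pattern. In CPython the global interpreter lock usually prevents threads from running CPU-bound work truly in parallel, so this models the structure of the two approaches rather than their performance.

```python
from concurrent.futures import ThreadPoolExecutor
import queue
import threading


def split(data, parts):
    """Break data into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def shared_memory_sum(data, workers=4):
    """Shared-memory style: every worker reads its index range from one common list."""
    n = len(data)
    bounds = [(i * n // workers, (i + 1) * n // workers) for i in range(workers)]

    def partial(bound):
        lo, hi = bound
        # Workers index into the same `data` object; nothing is copied or sent.
        return sum(data[i] for i in range(lo, hi))

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial, bounds))


def message_passing_sum(data, workers=4):
    """Distributed-memory style: each worker owns a private copy of its chunk
    and returns its partial result as an explicit message."""
    results = queue.Queue()  # stands in for the interconnect between nodes

    def worker(private_chunk):
        results.put(sum(private_chunk))  # explicit data transfer back to the caller

    threads = [
        threading.Thread(target=worker, args=(list(chunk),))  # private copy per worker
        for chunk in split(data, workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Collect exactly one message per worker and combine the partial results.
    return sum(results.get() for _ in threads)
```

Both functions return the same total, for example `shared_memory_sum(list(range(1, 101)))` and `message_passing_sum(list(range(1, 101)))` each give `5050`. The difference is where the data lives: the first reads a common list in place, while the second hands each worker its own copy and gathers results through a queue. A hybrid design would combine the two, sharing memory within a node and passing messages between nodes.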