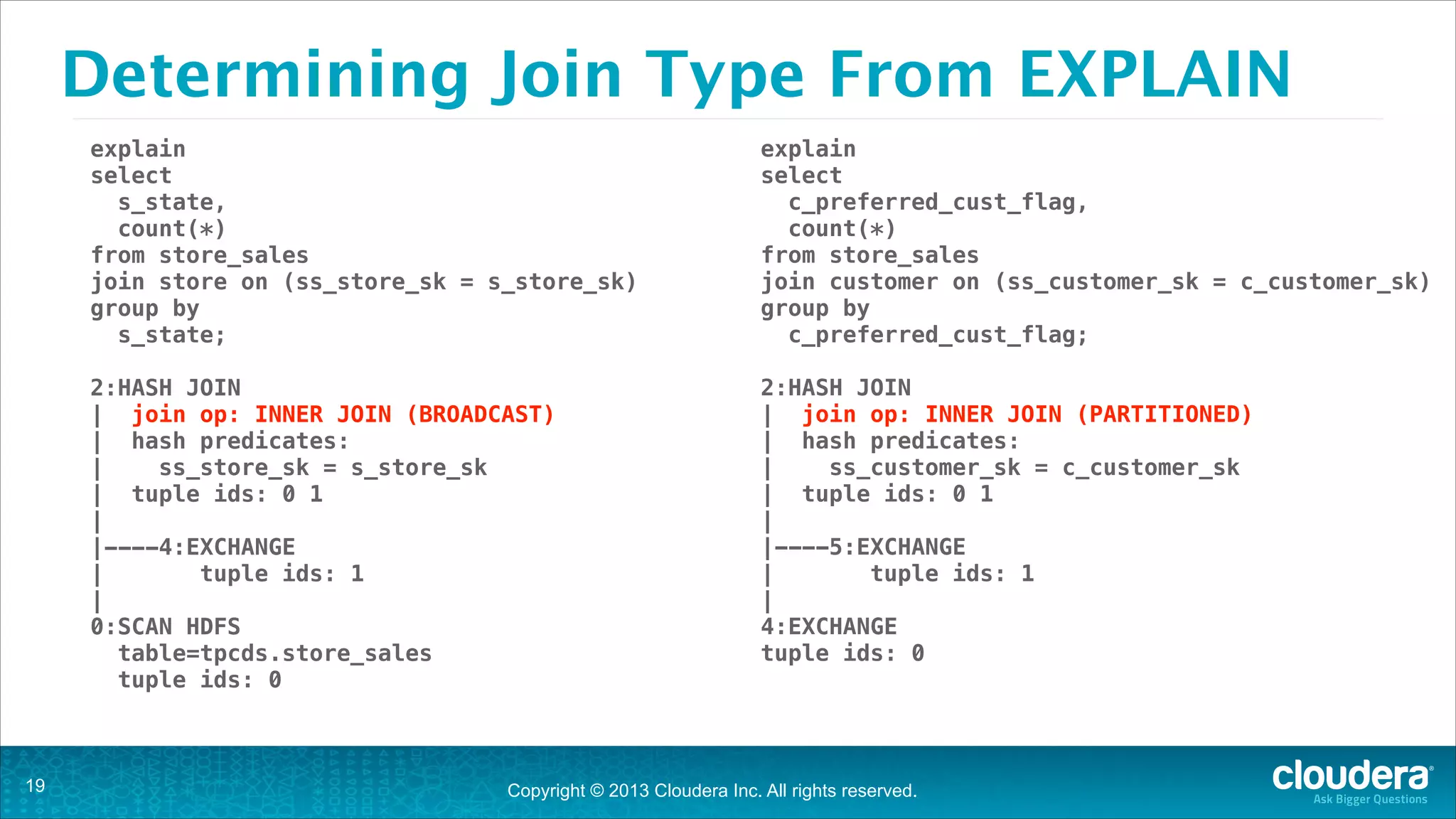

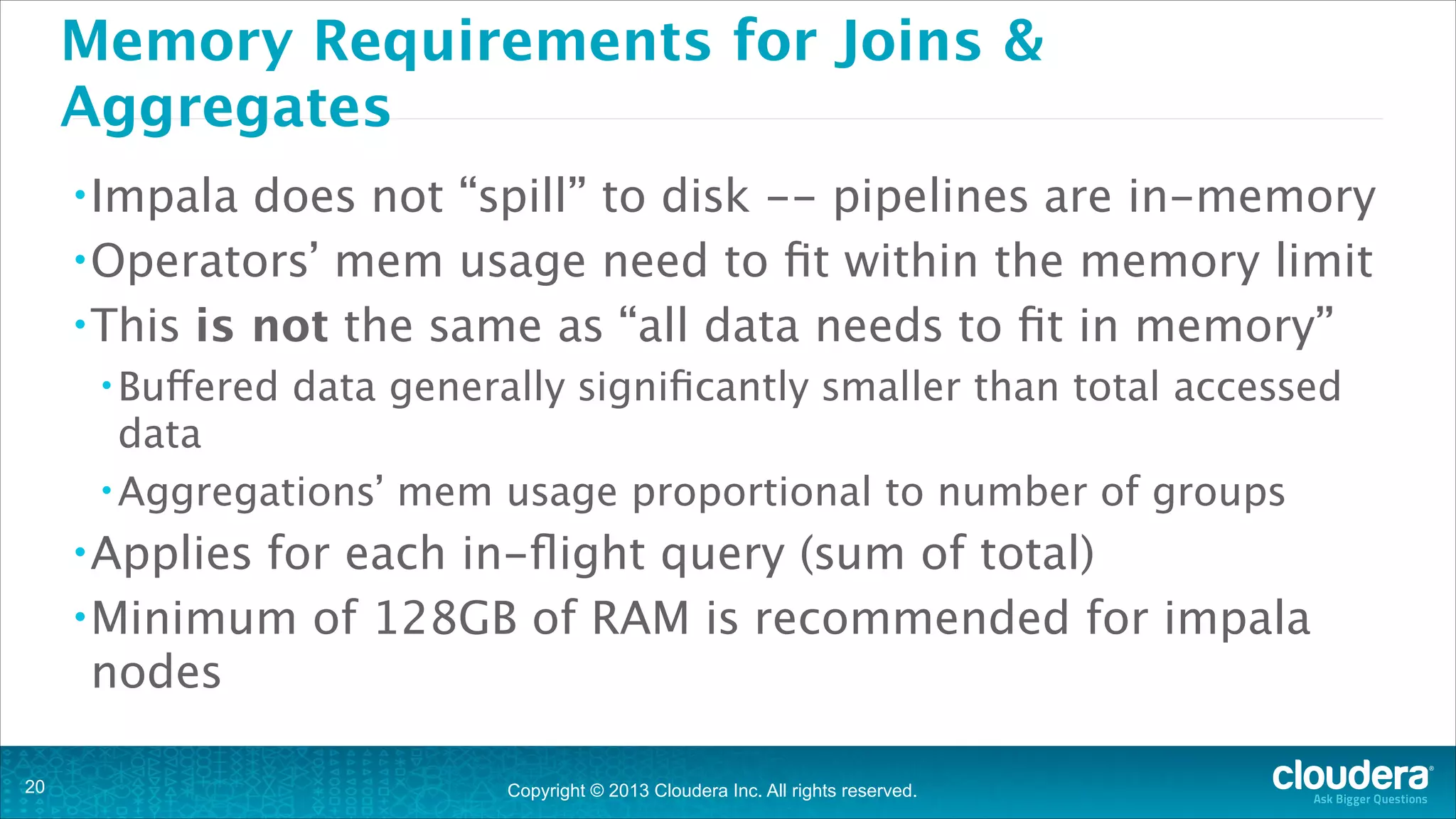

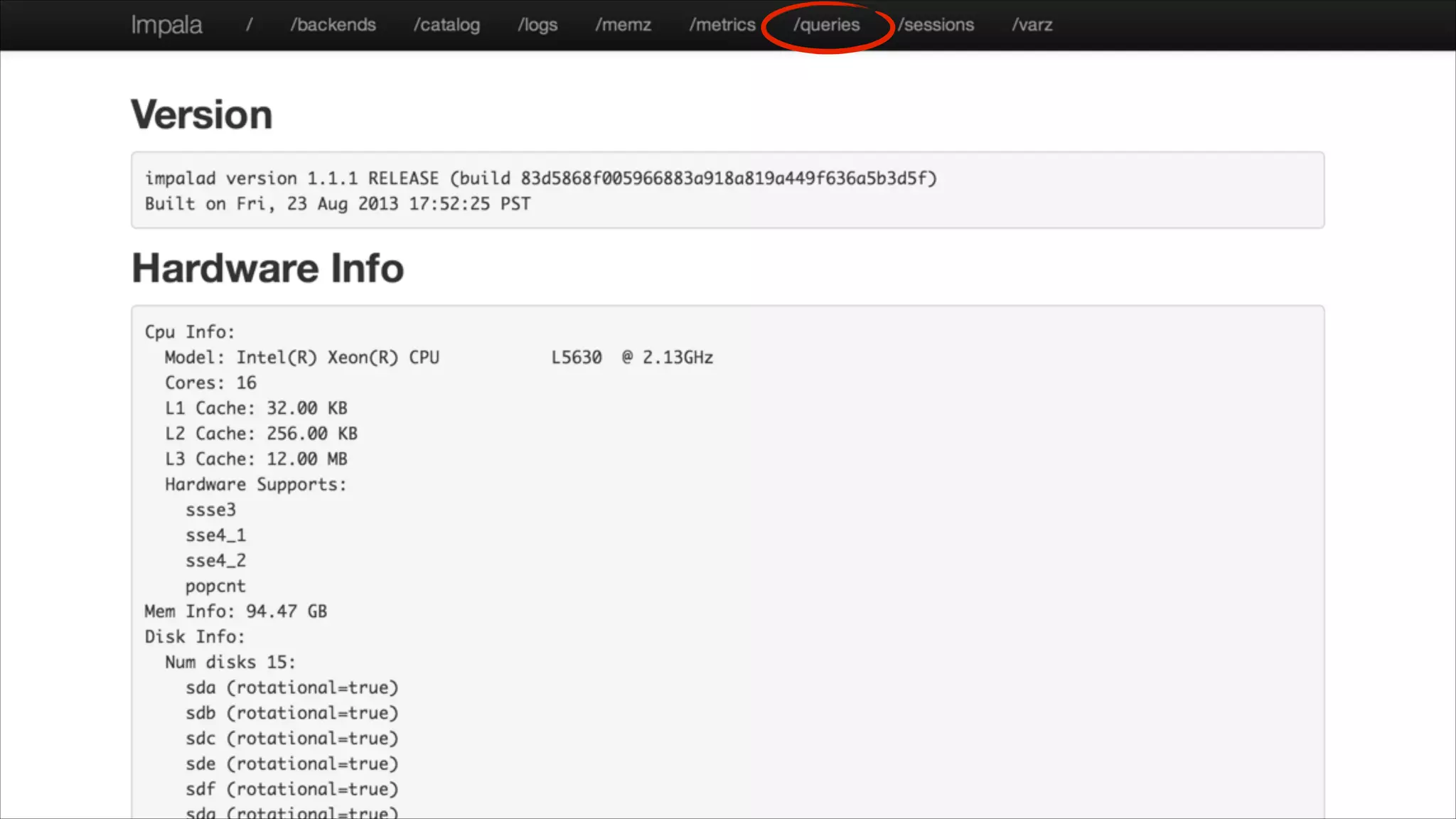

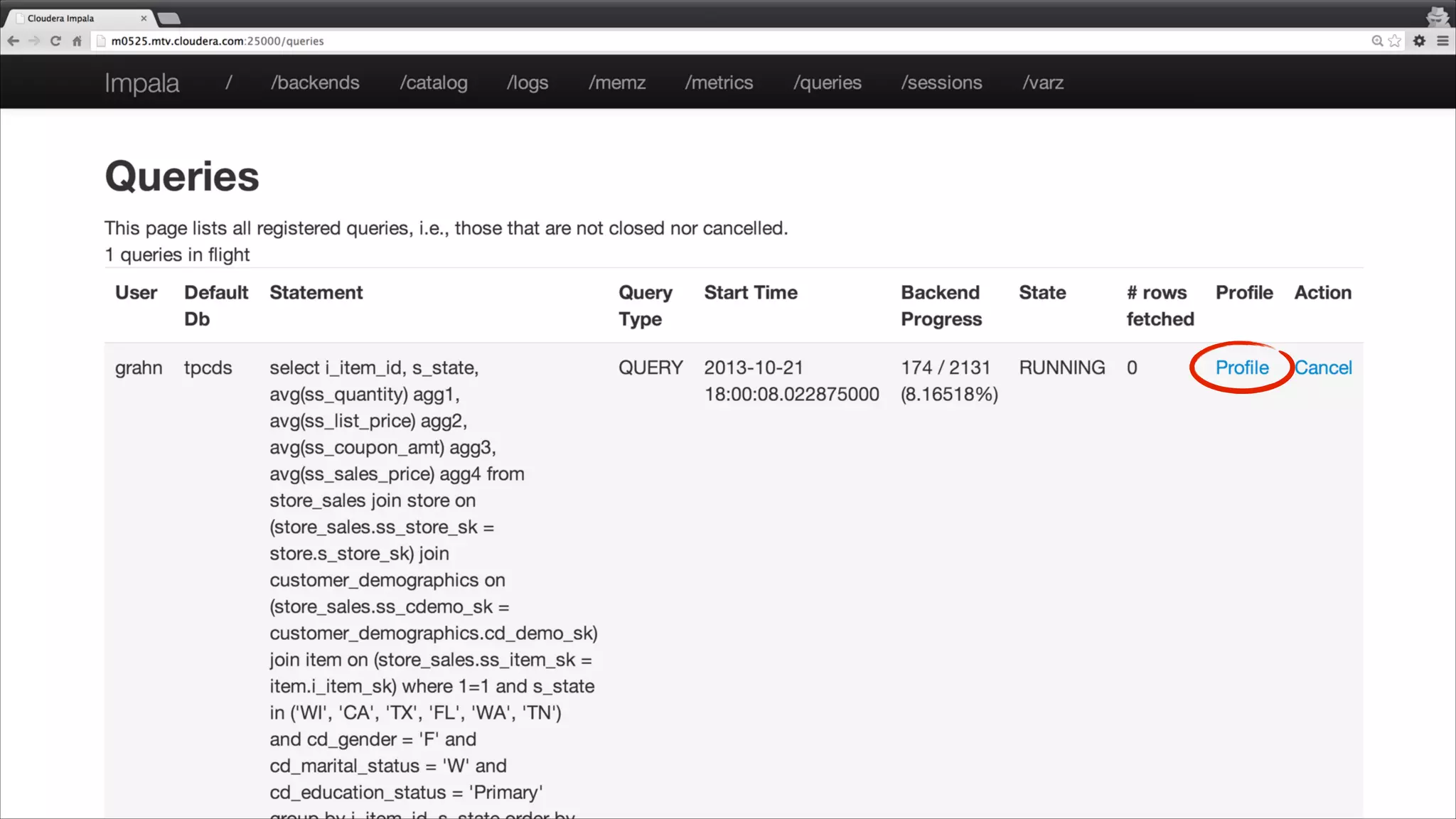

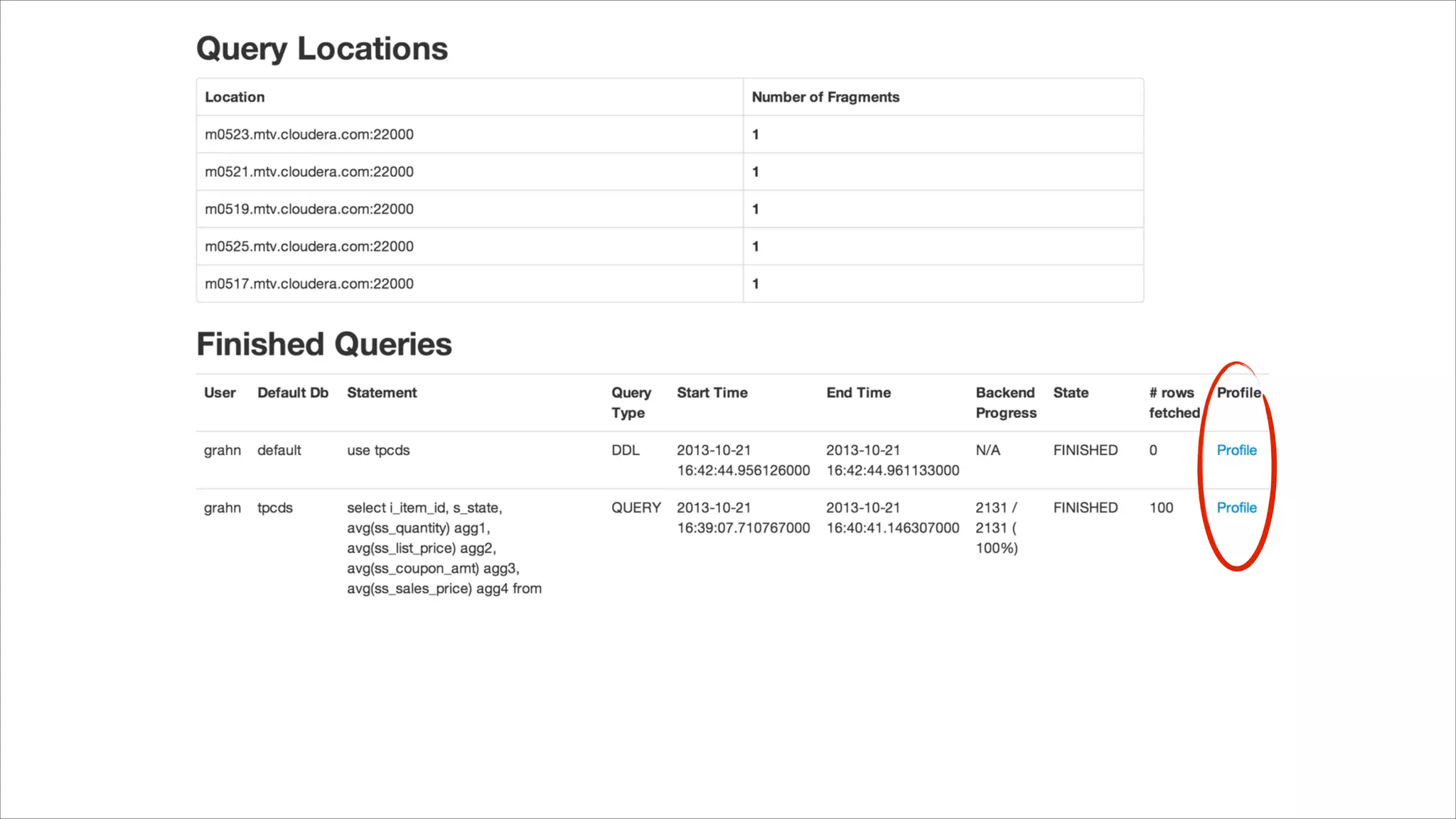

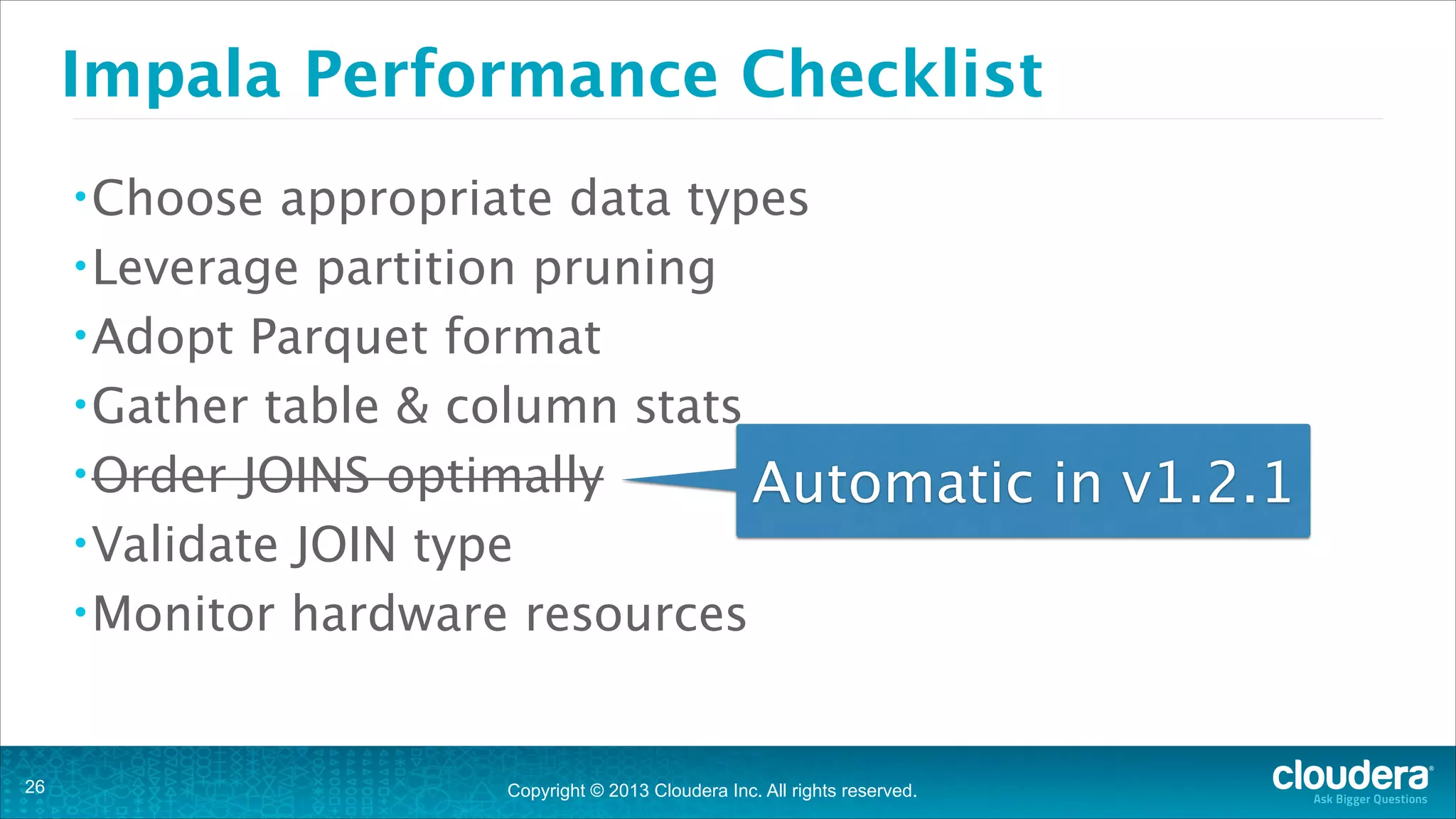

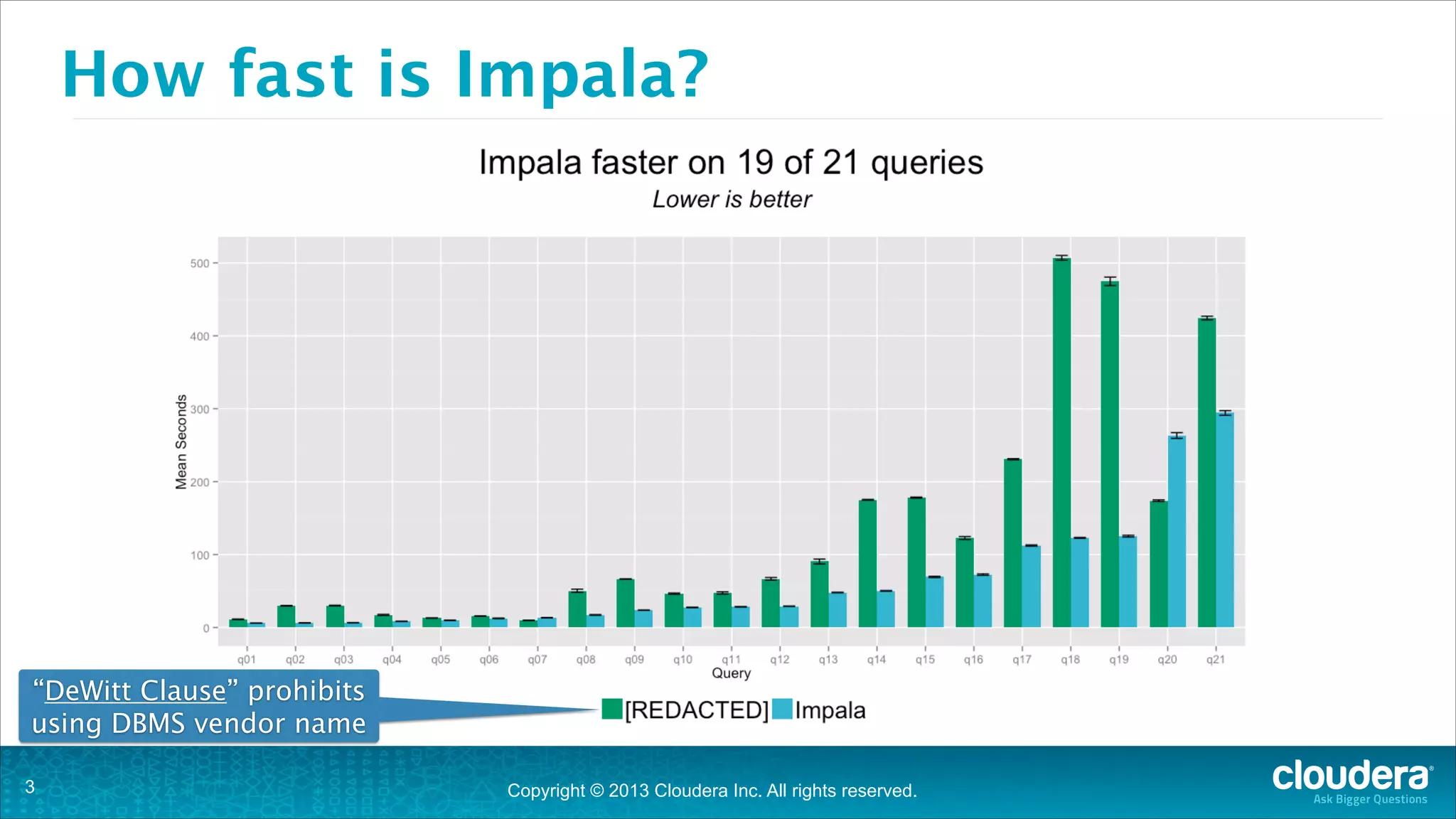

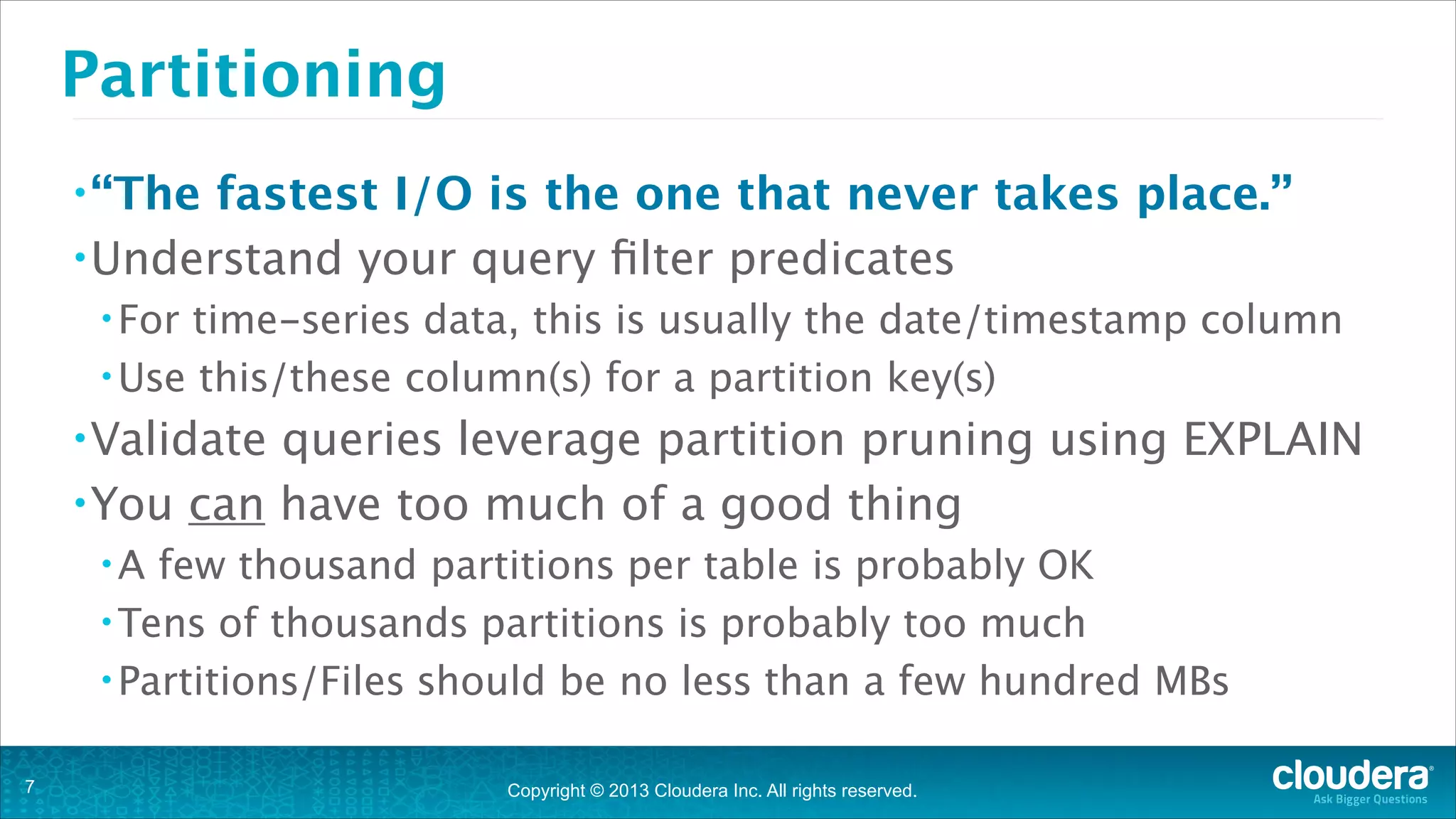

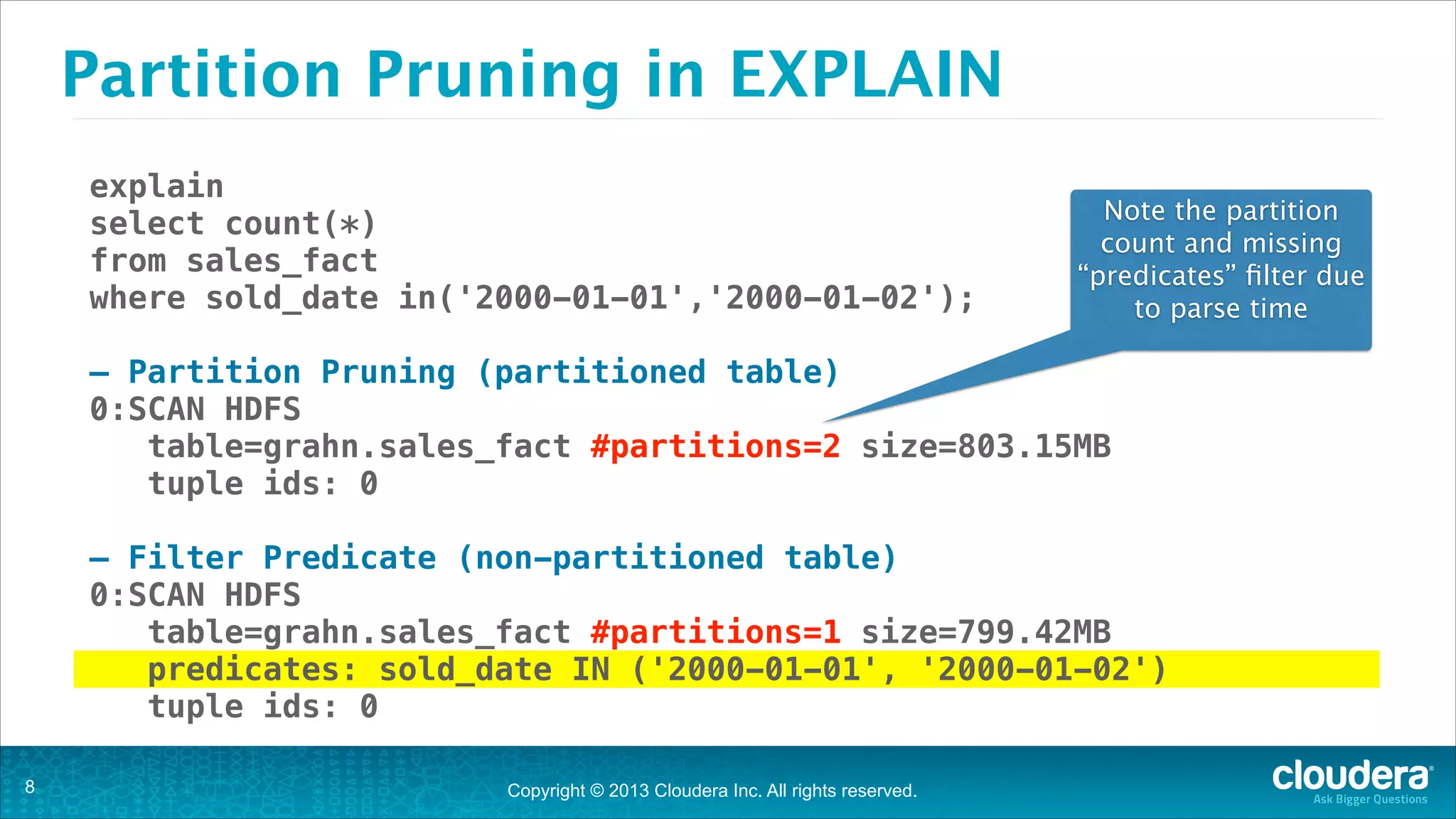

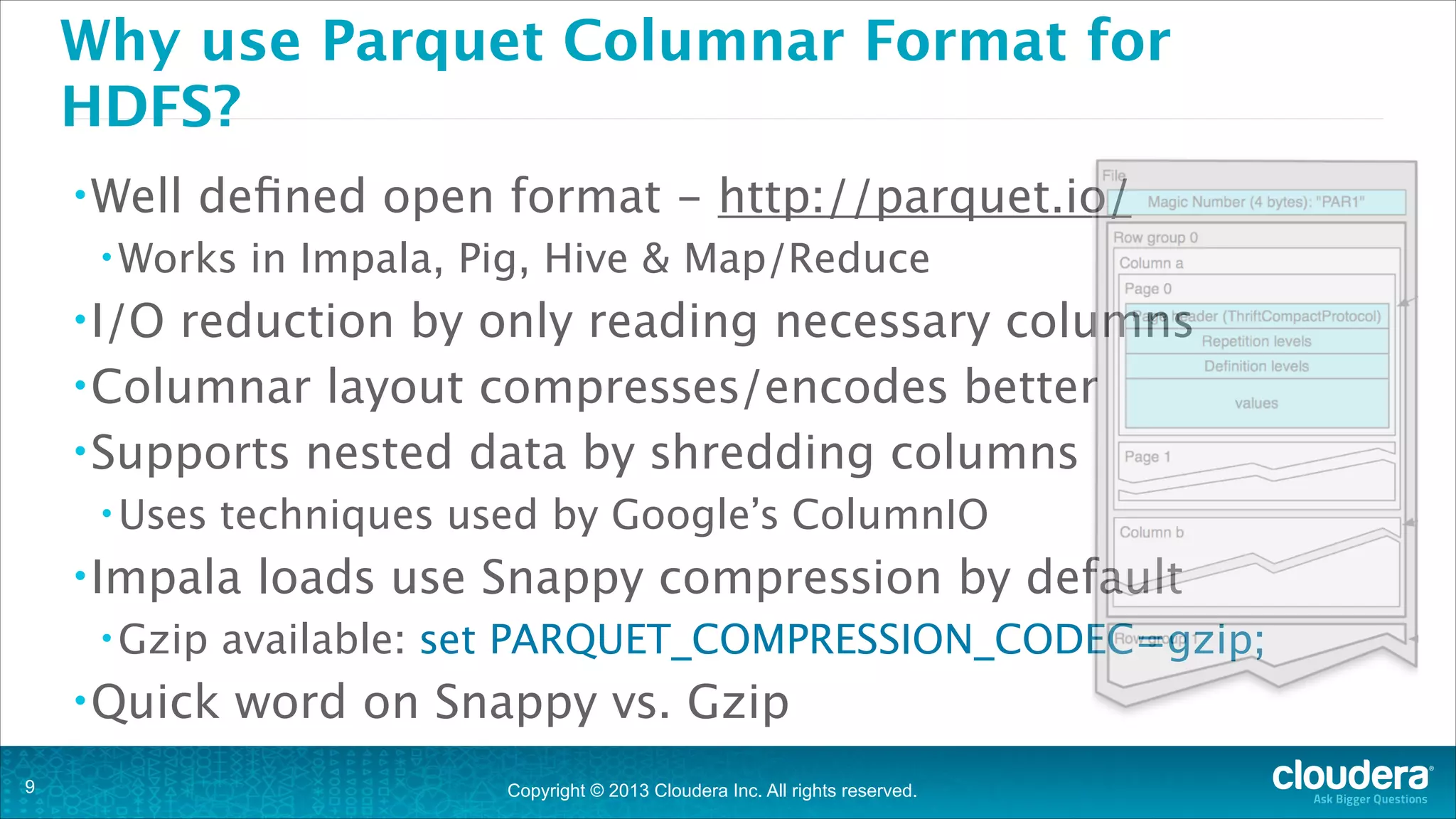

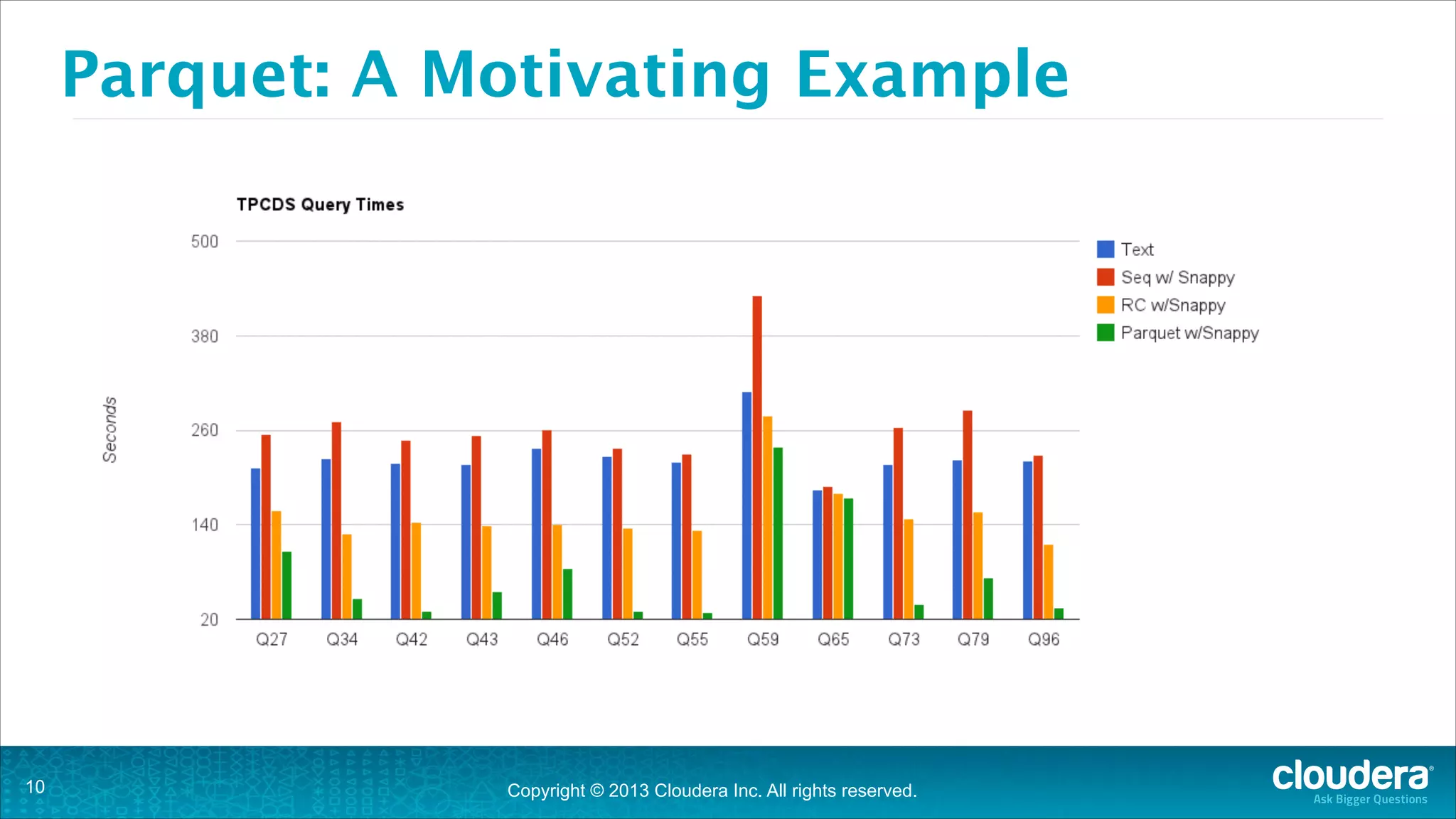

This document provides a summary of techniques for optimizing performance with Impala. It discusses how to choose optimal data types, leverage partitioning for pruning, use the efficient Parquet format, gather statistics, order joins, validate join types, and monitor resources. The document recommends adopting these techniques, analyzing explains plans, and using the Impala debug web pages to optimize performance. It also provides information on joining the Impala community to test Impala.

![How to use ANALYZE

• Table

Stats (from Hive shell)

• analyze

• Column

table T1 [partition(partition_key)] compute statistics;

Stats (from Hive shell)

• analyze

table T1 [partition(partition_key)]

compute statistics for columns c1,c2,…

• Impala

1.2.1 will have a built-in ANALYZE command

!

!17

Copyright © 2013 Cloudera Inc. All rights reserved.](https://image.slidesharecdn.com/hug-london-2013-131112015835-phpapp01/75/Cloudera-Impala-technical-deep-dive-17-2048.jpg)

![Hinting Joins

select ...

from large_fact

join [broadcast] small_dim

!

!

select ...

from large_fact

join [shuffle] large_dim

!

*square brackets required

!18

Copyright © 2013 Cloudera Inc. All rights reserved.](https://image.slidesharecdn.com/hug-london-2013-131112015835-phpapp01/75/Cloudera-Impala-technical-deep-dive-18-2048.jpg)