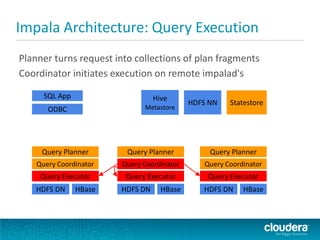

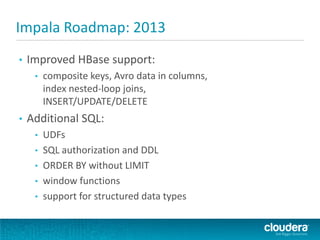

Cloudera Impala is a modern SQL engine designed for Apache Hadoop to support both analytical and transactional workloads efficiently. It features a high-performance architecture utilizing C++ and runtime code generation, operates as a distributed service across Hadoop nodes, and connects with Hive's metadata. The roadmap for Impala includes enhancements in data formats, query execution, and improved support for HBase, with an emphasis on optimizing performance for exploratory and production workloads.