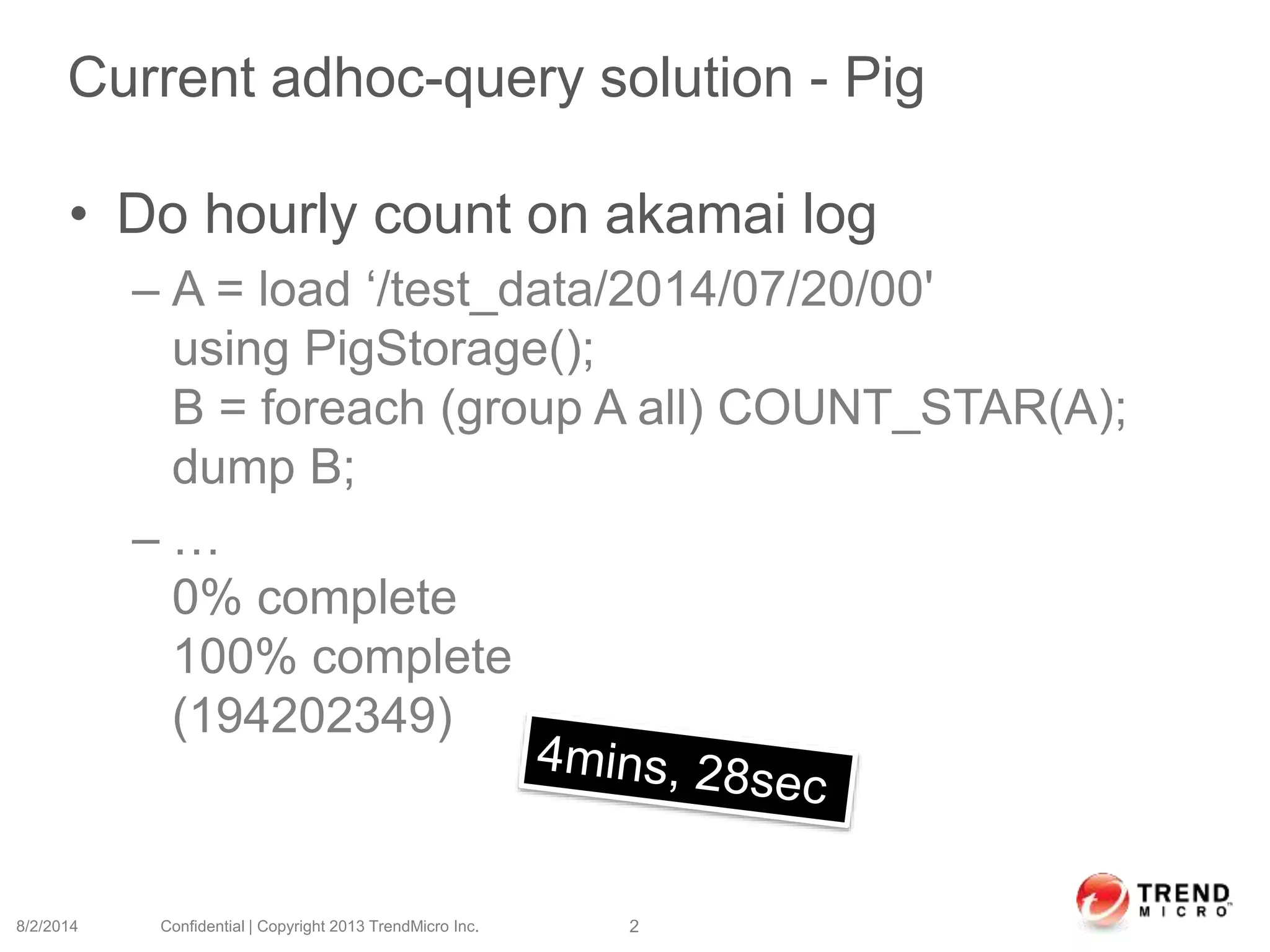

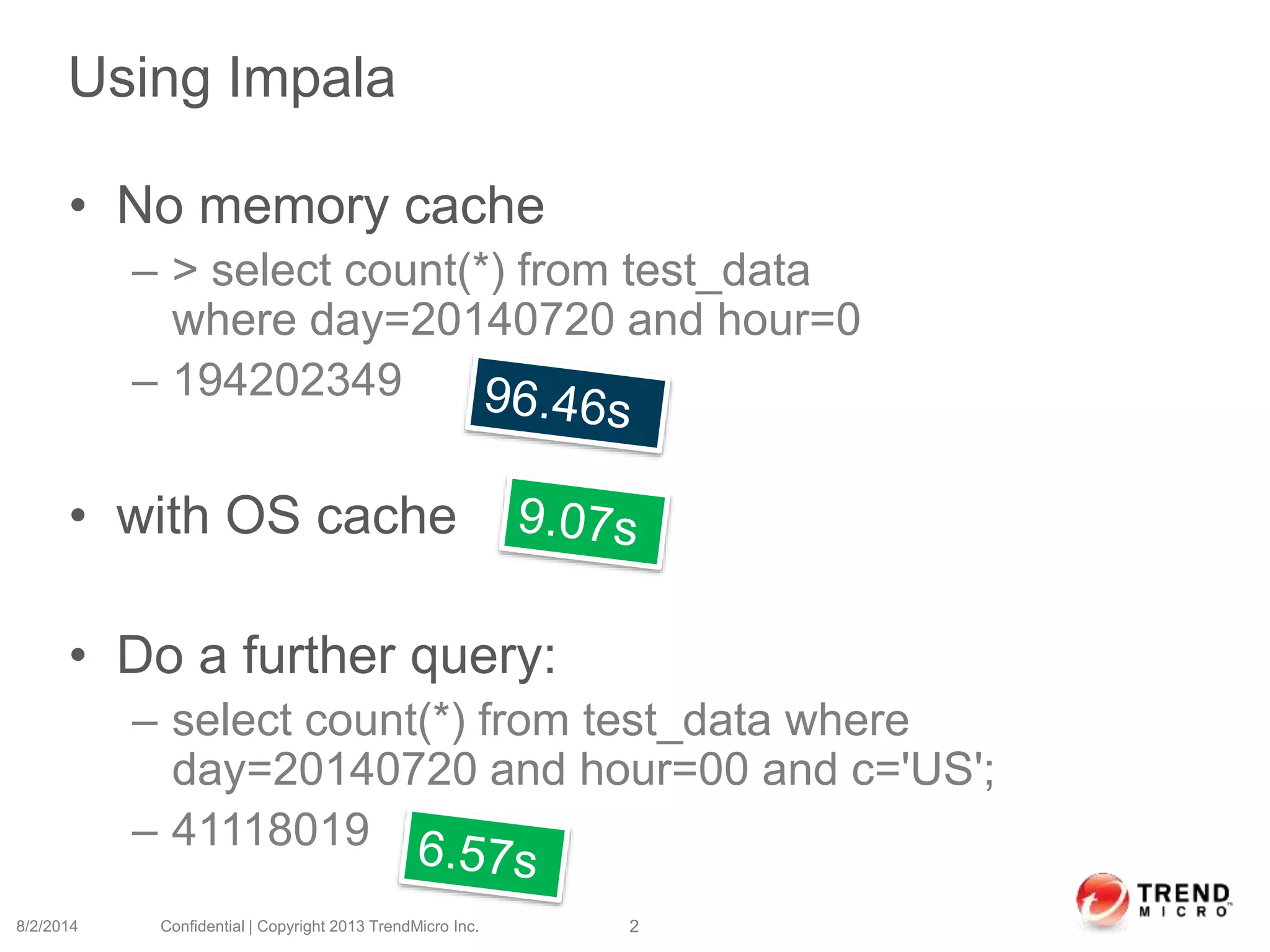

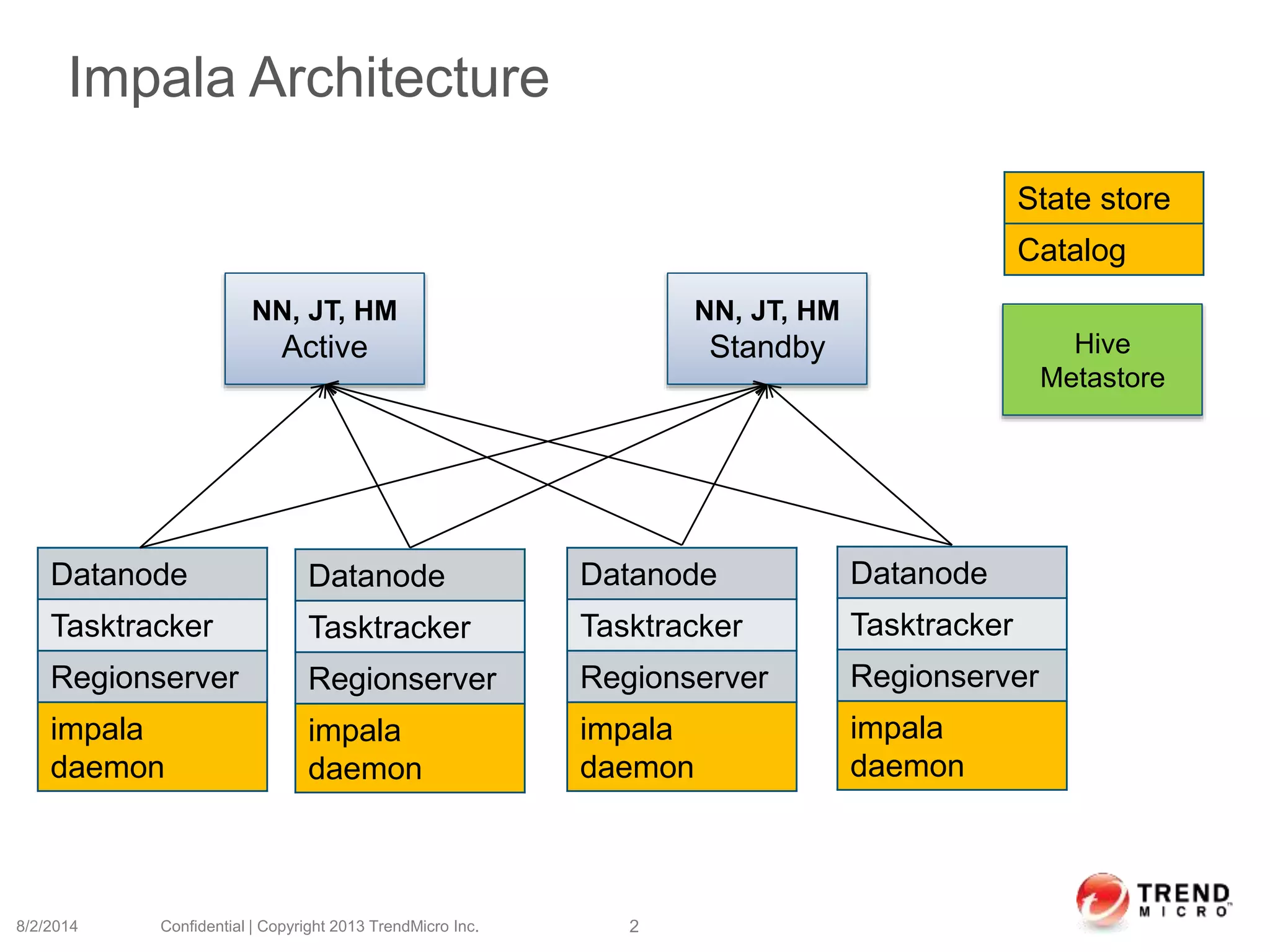

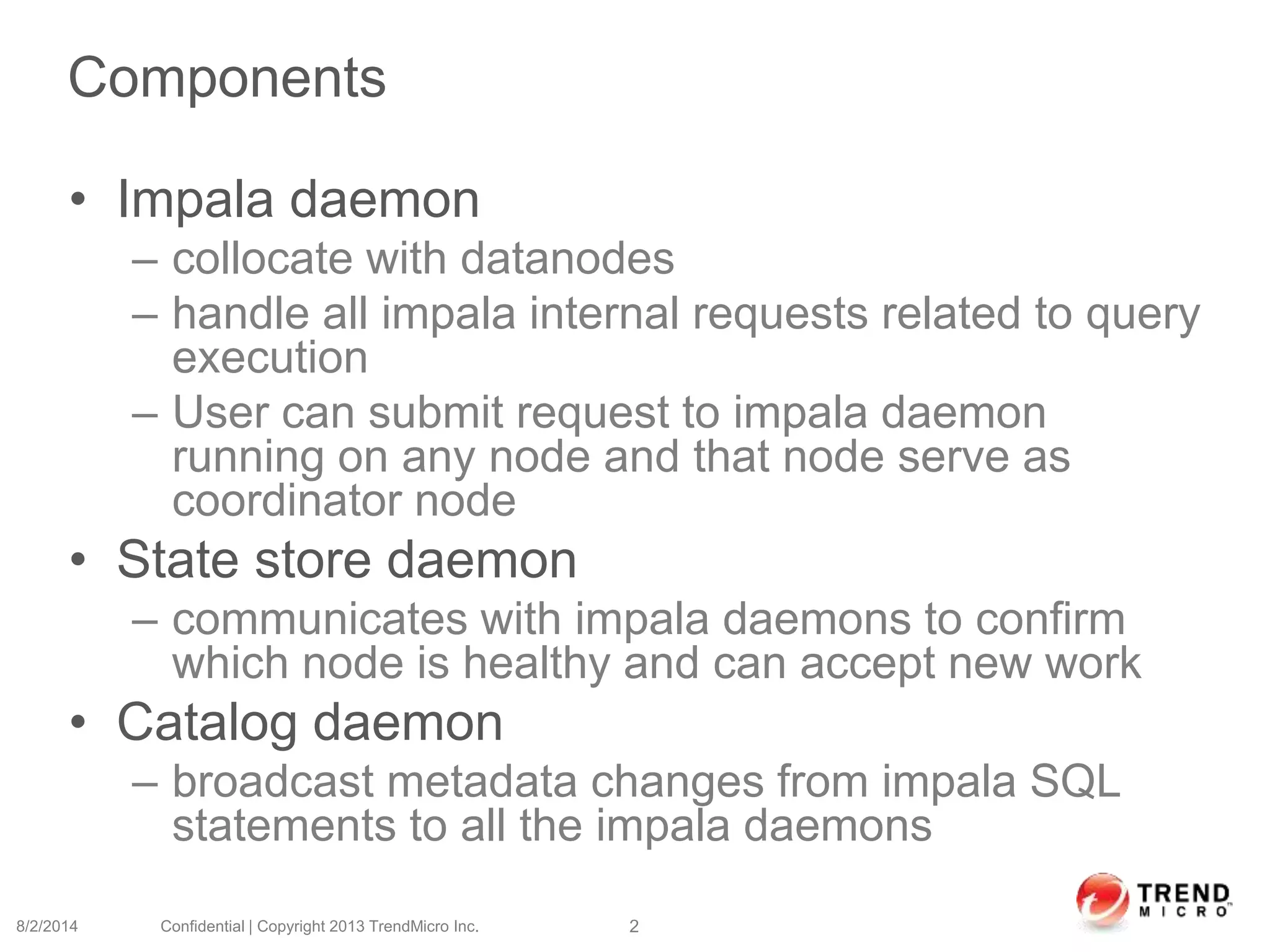

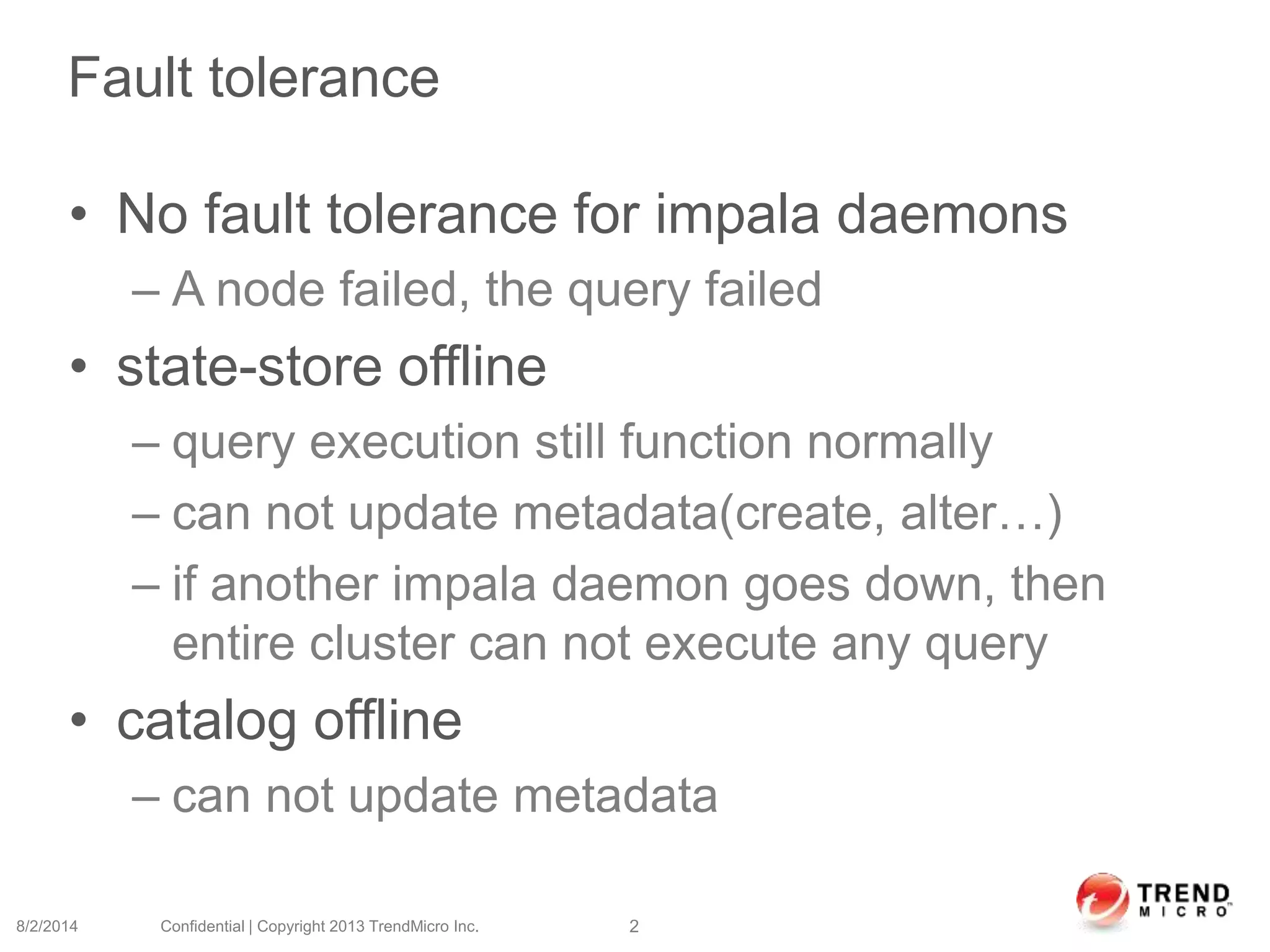

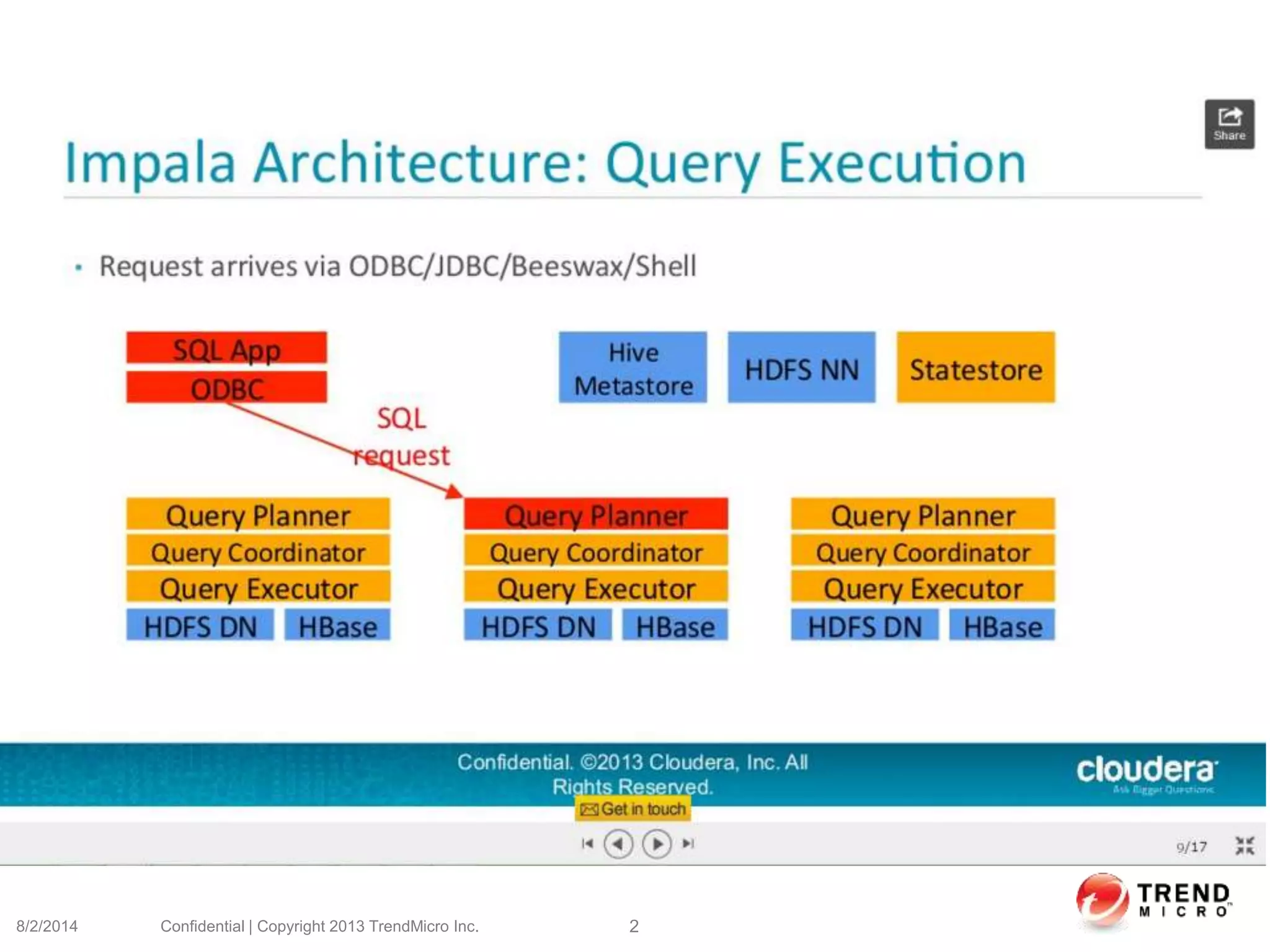

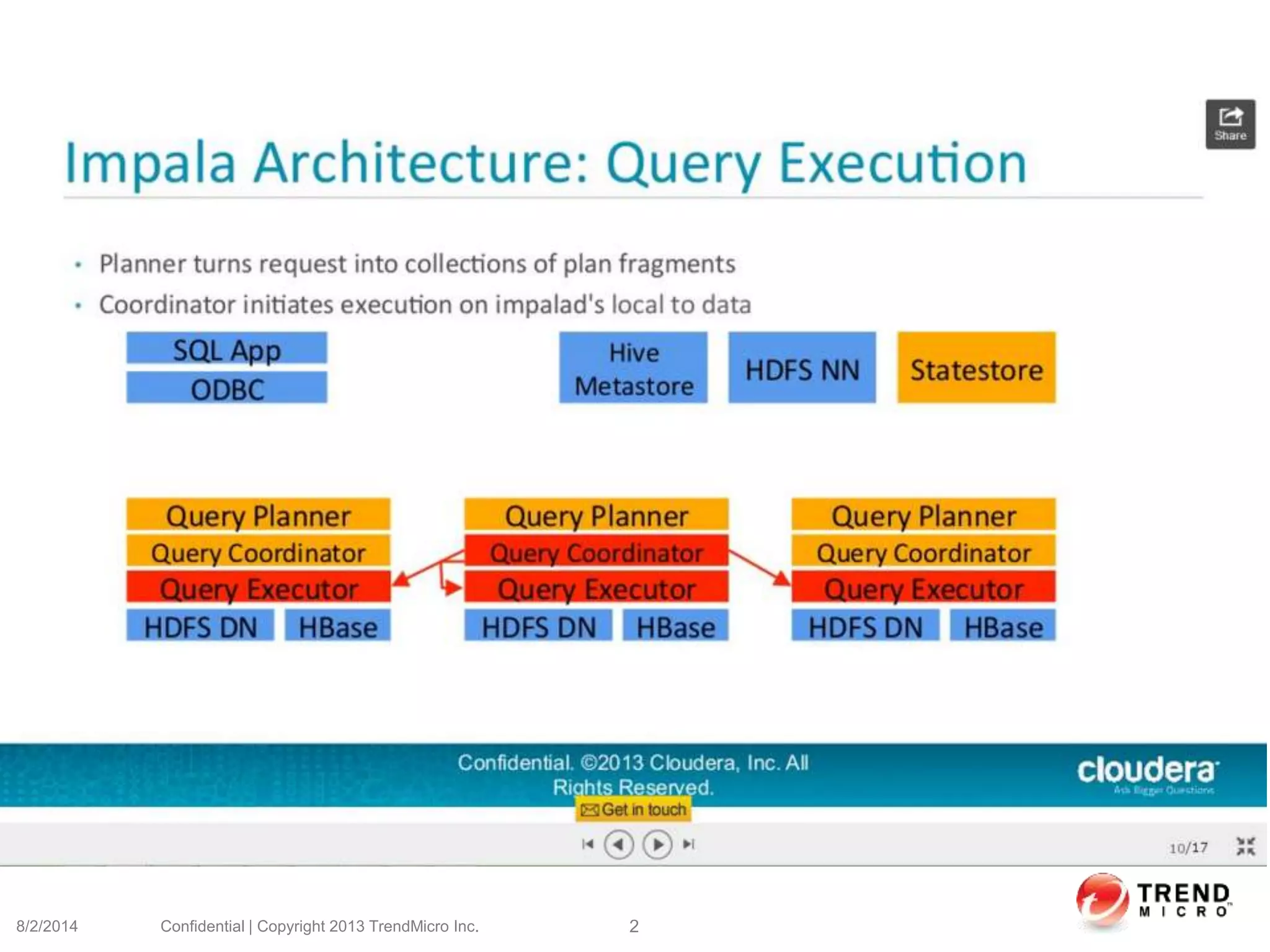

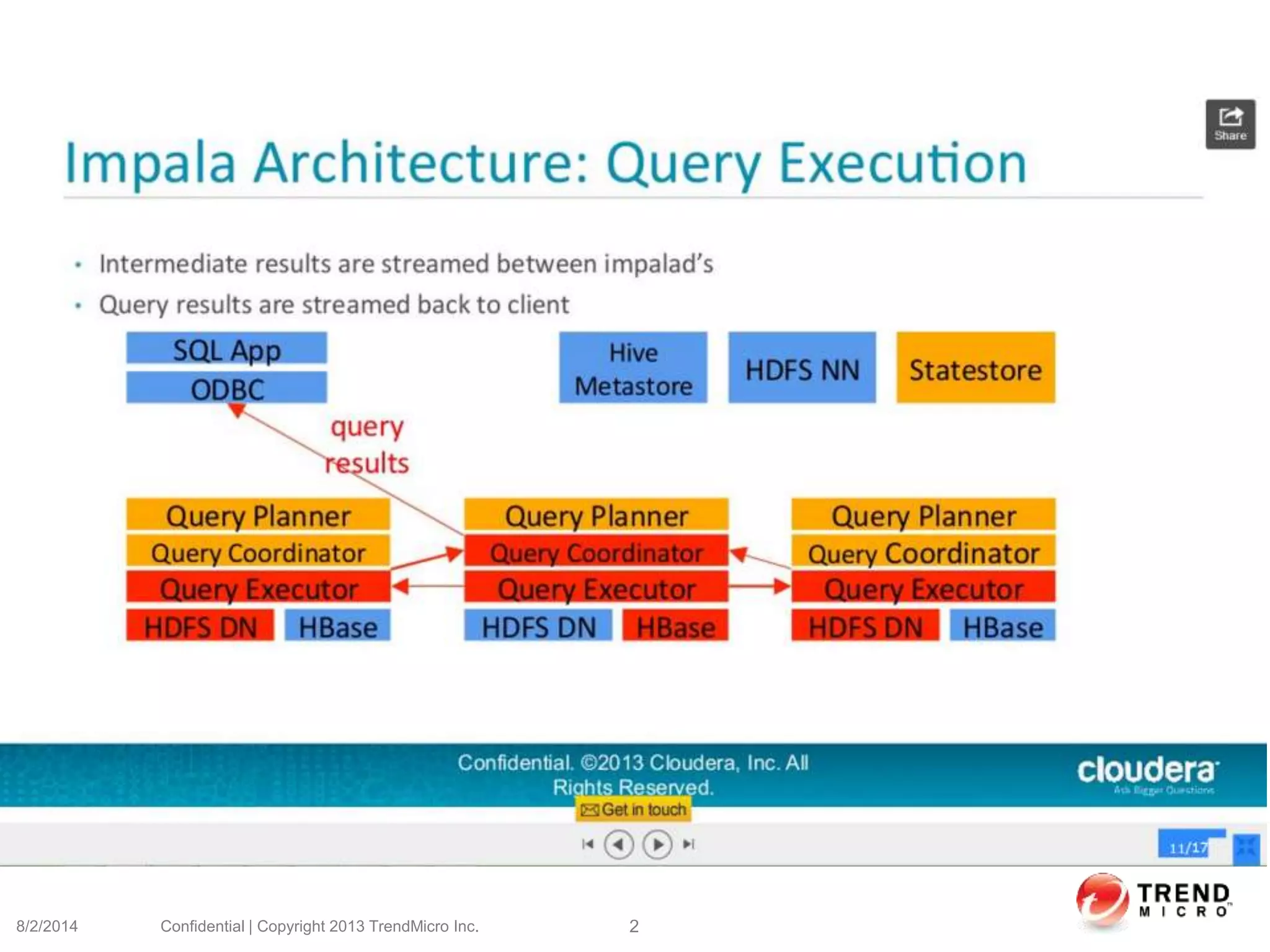

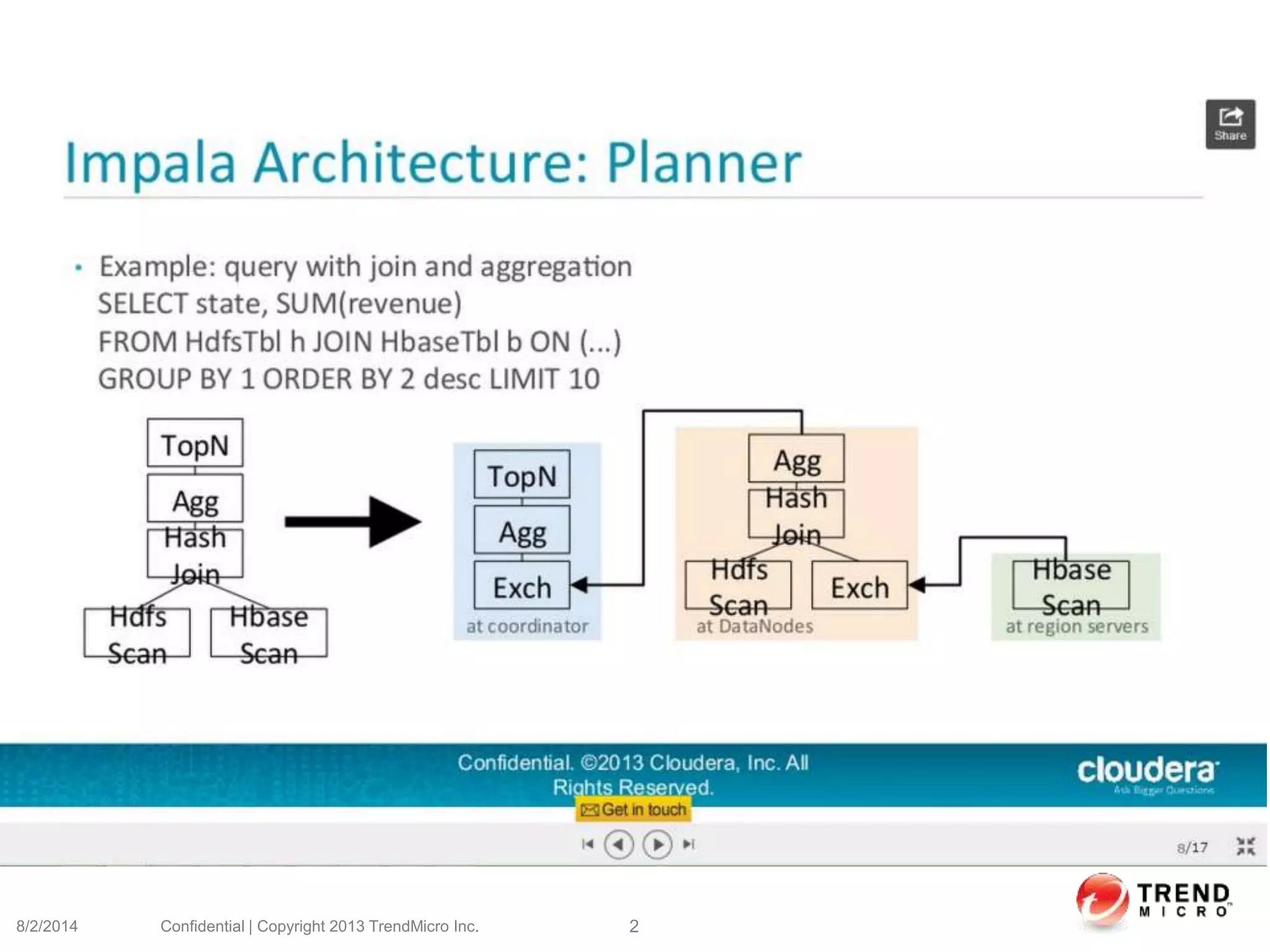

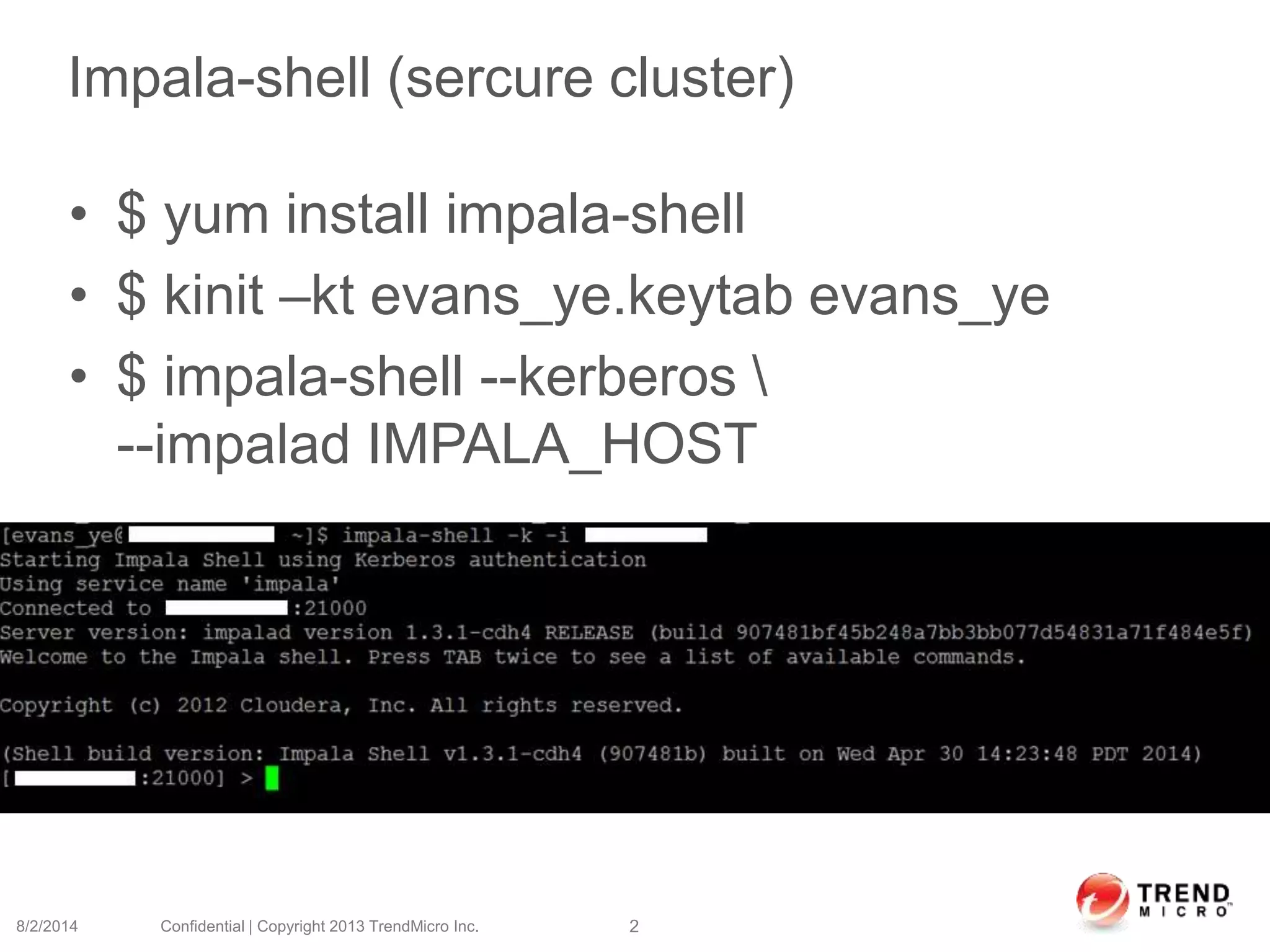

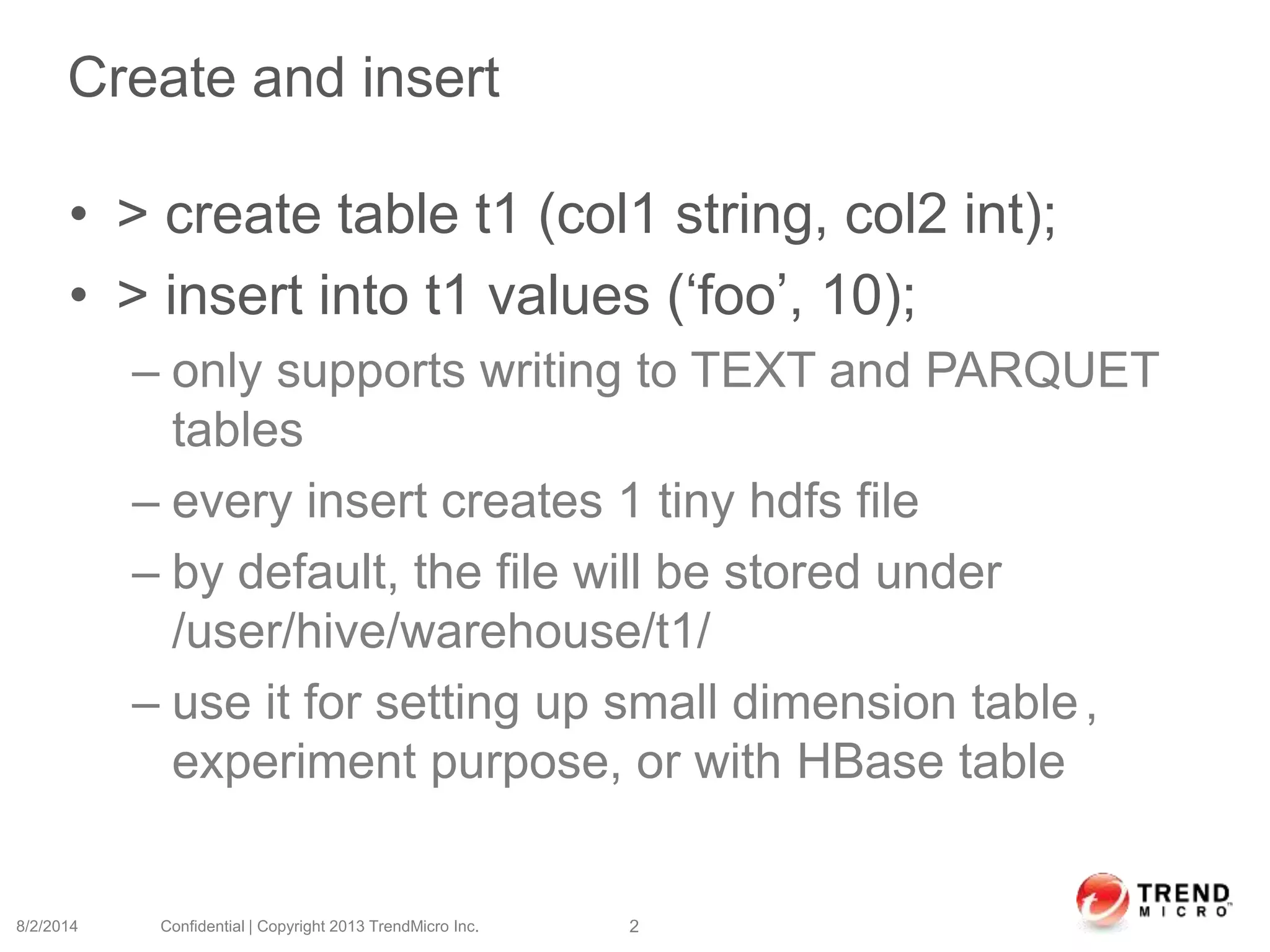

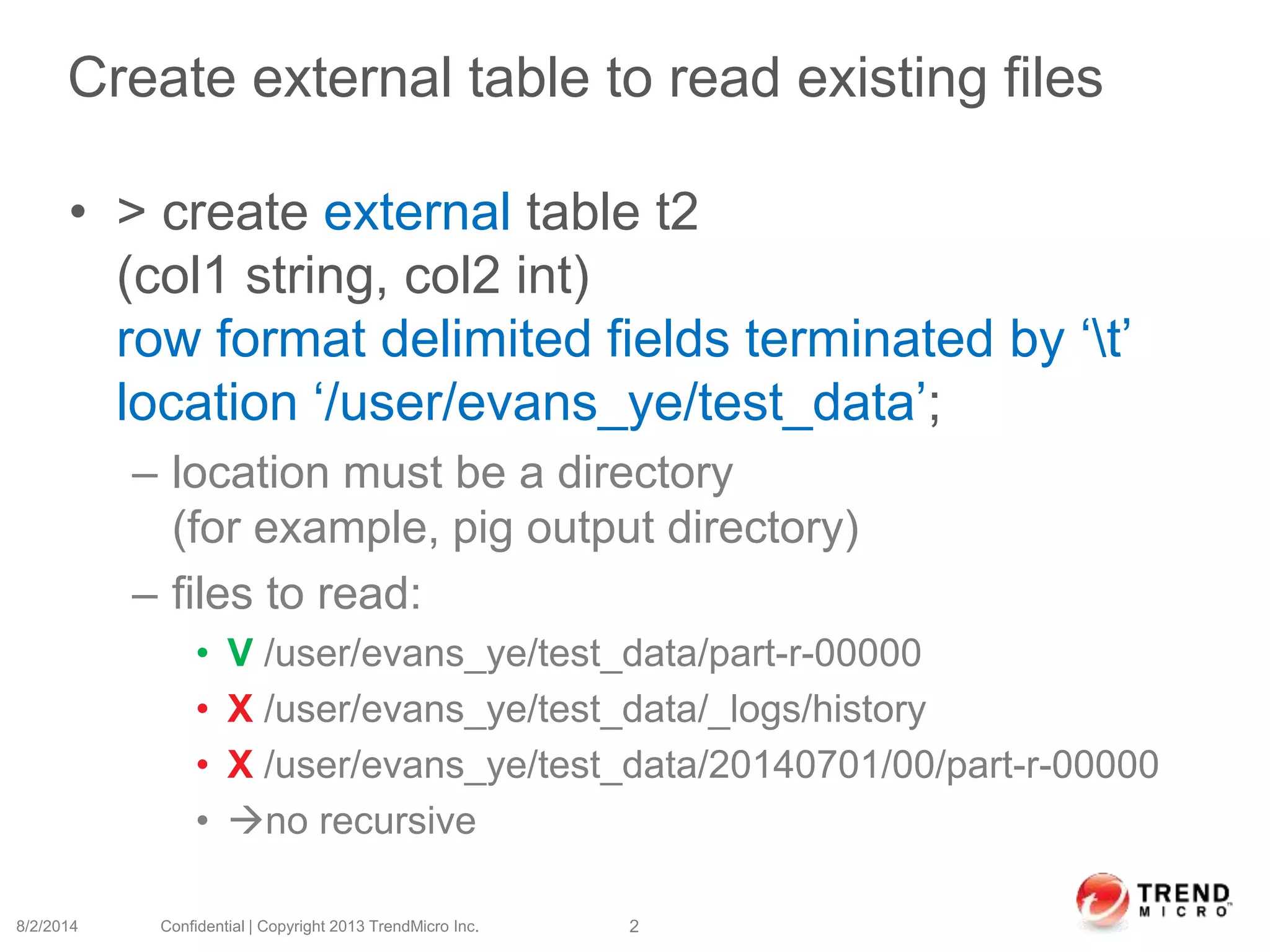

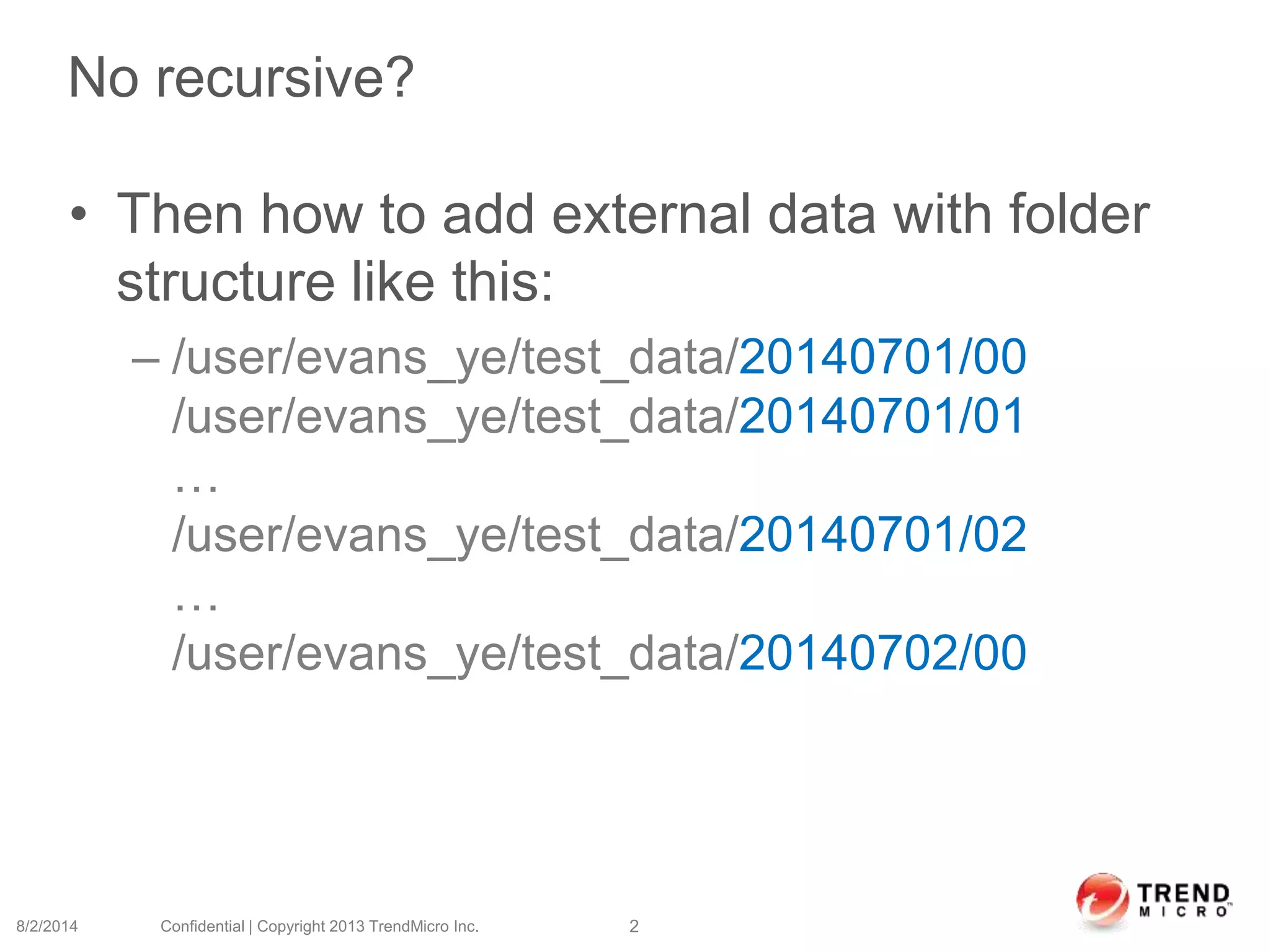

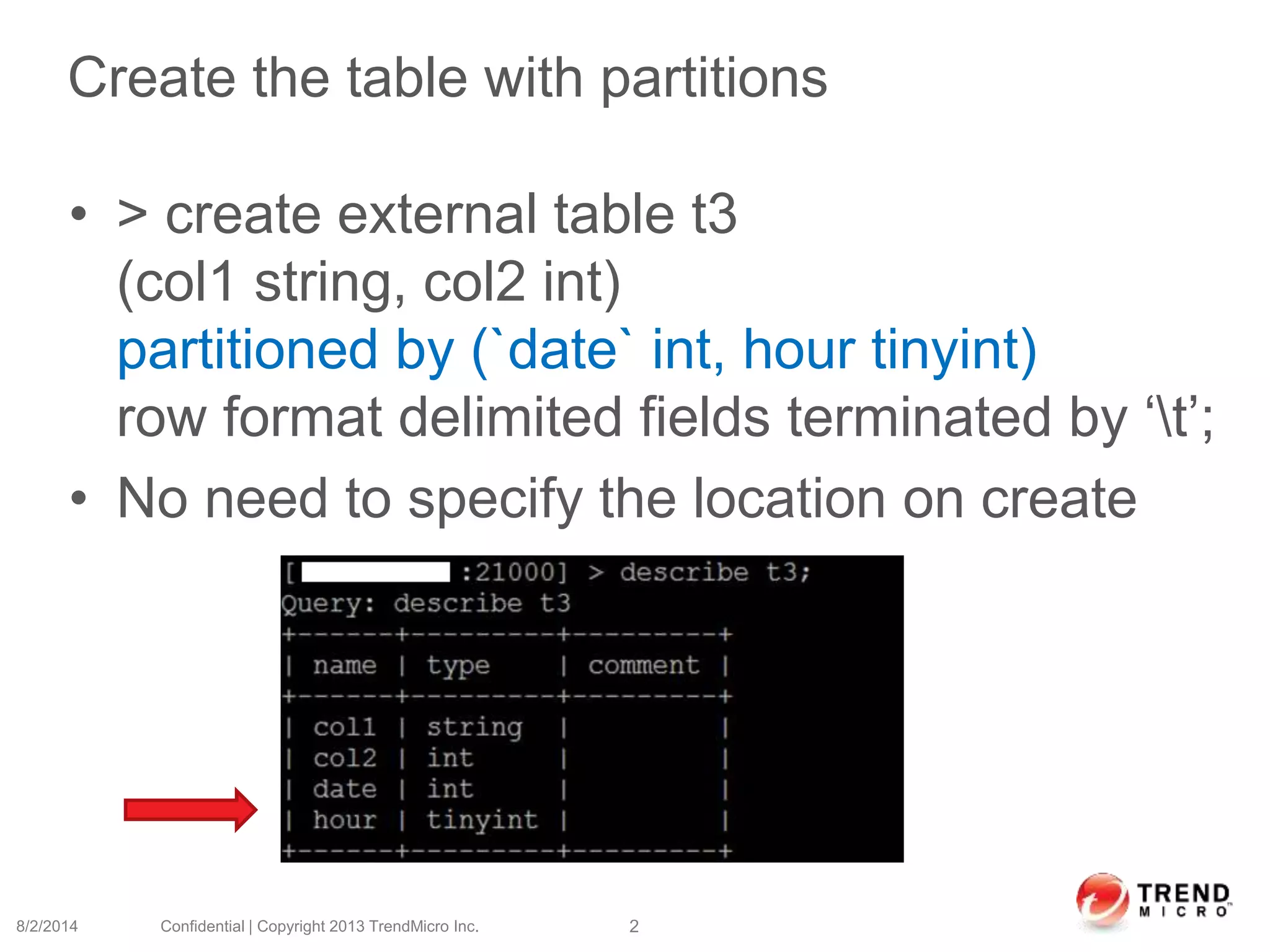

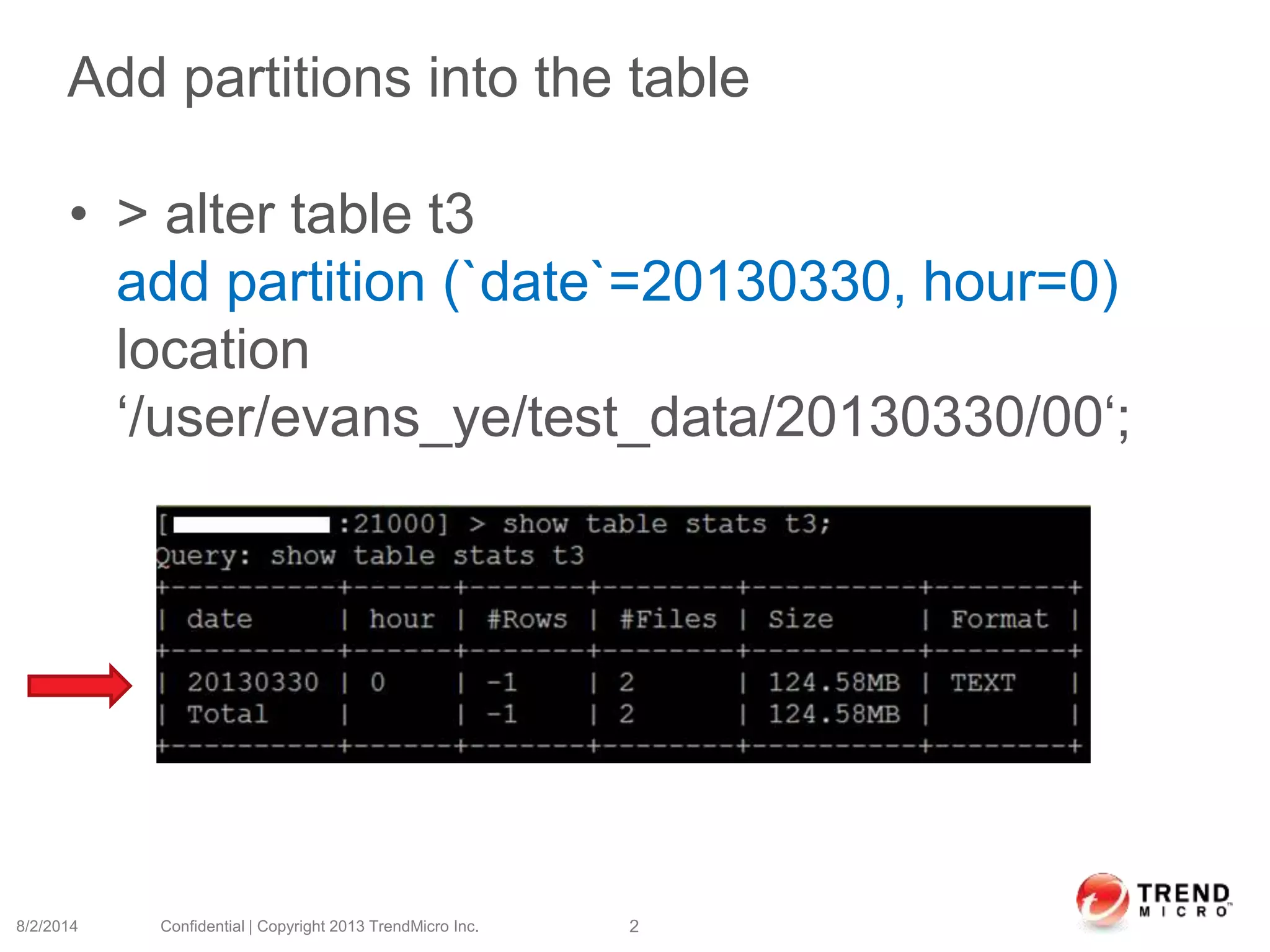

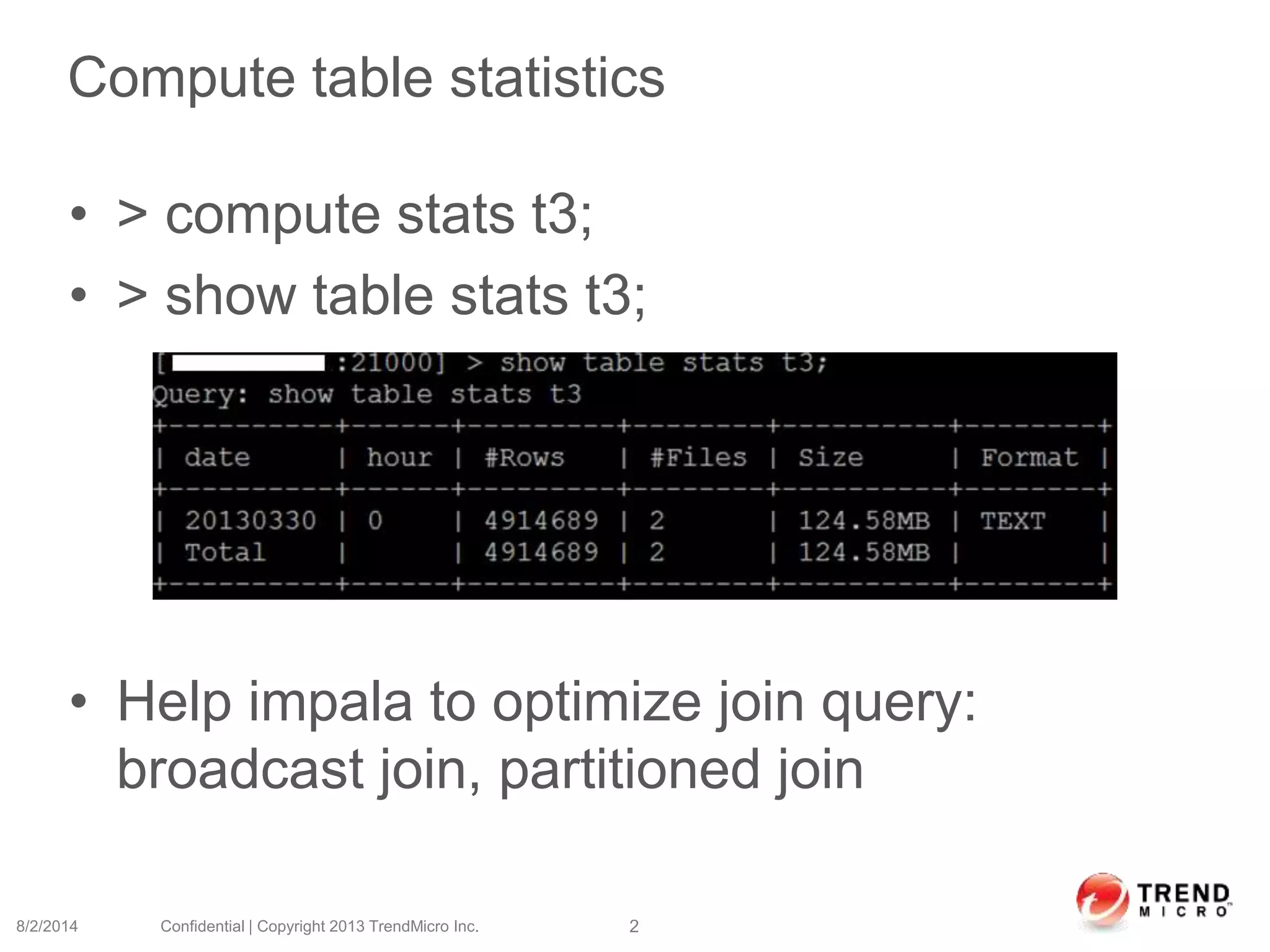

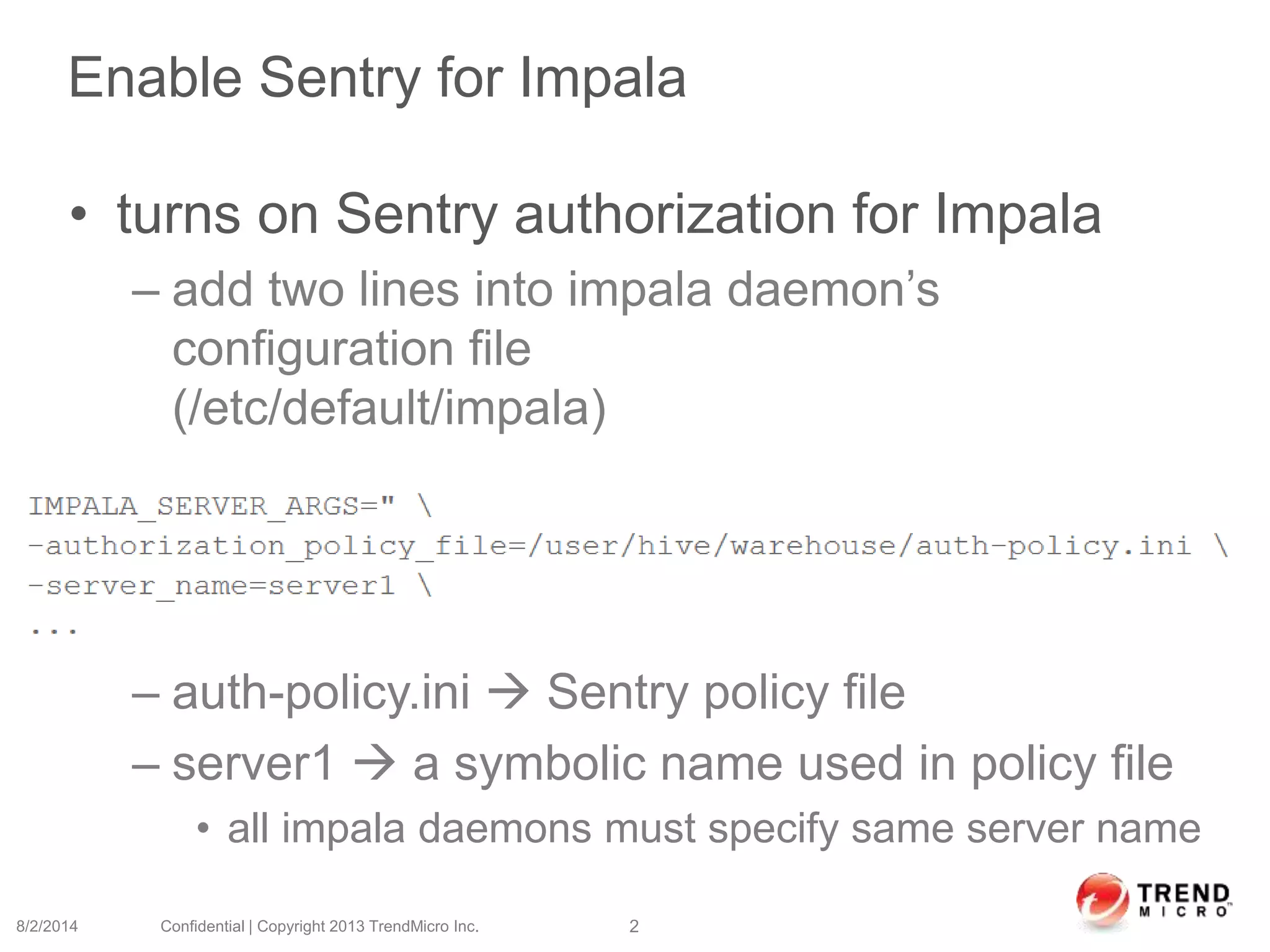

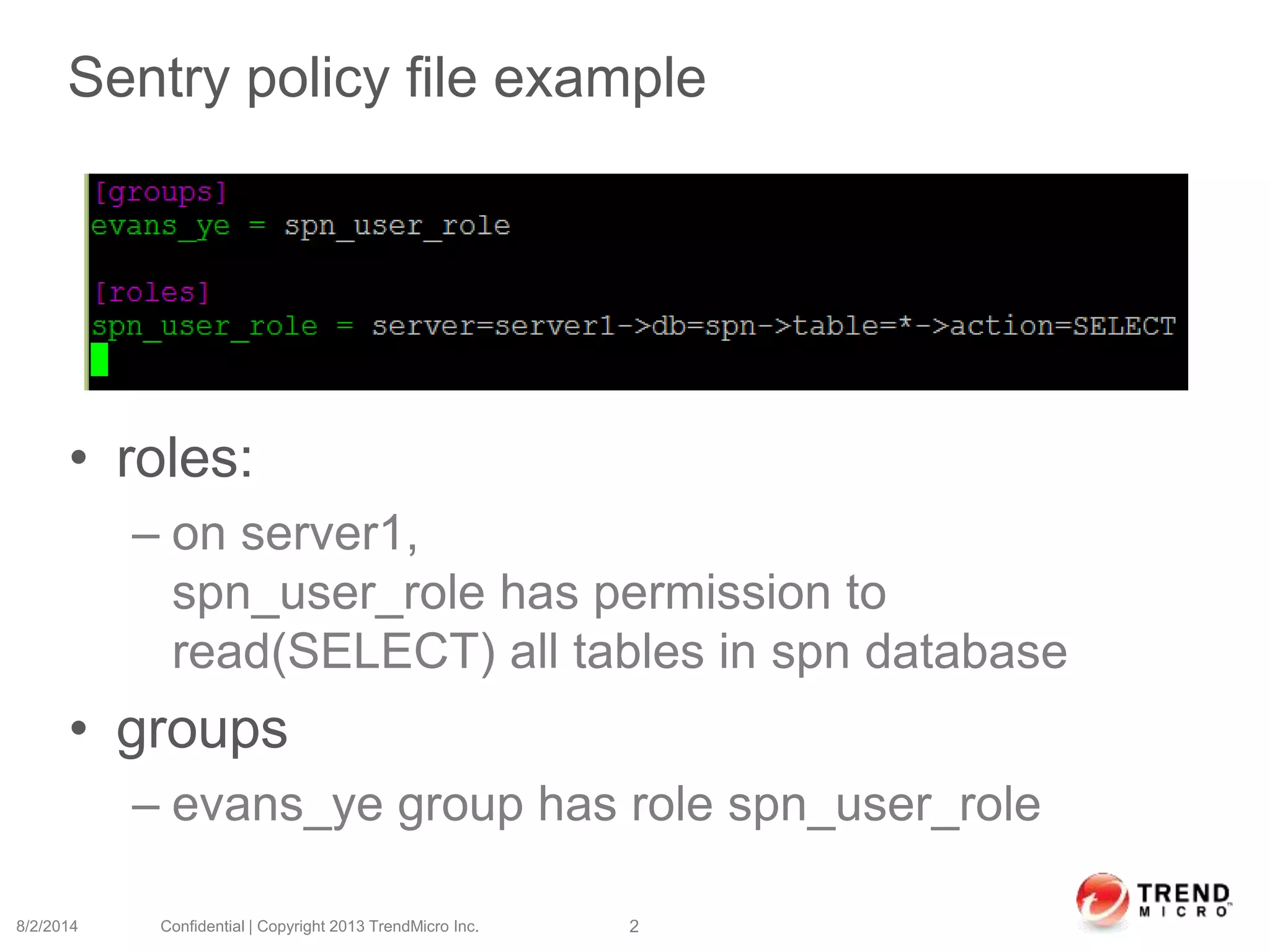

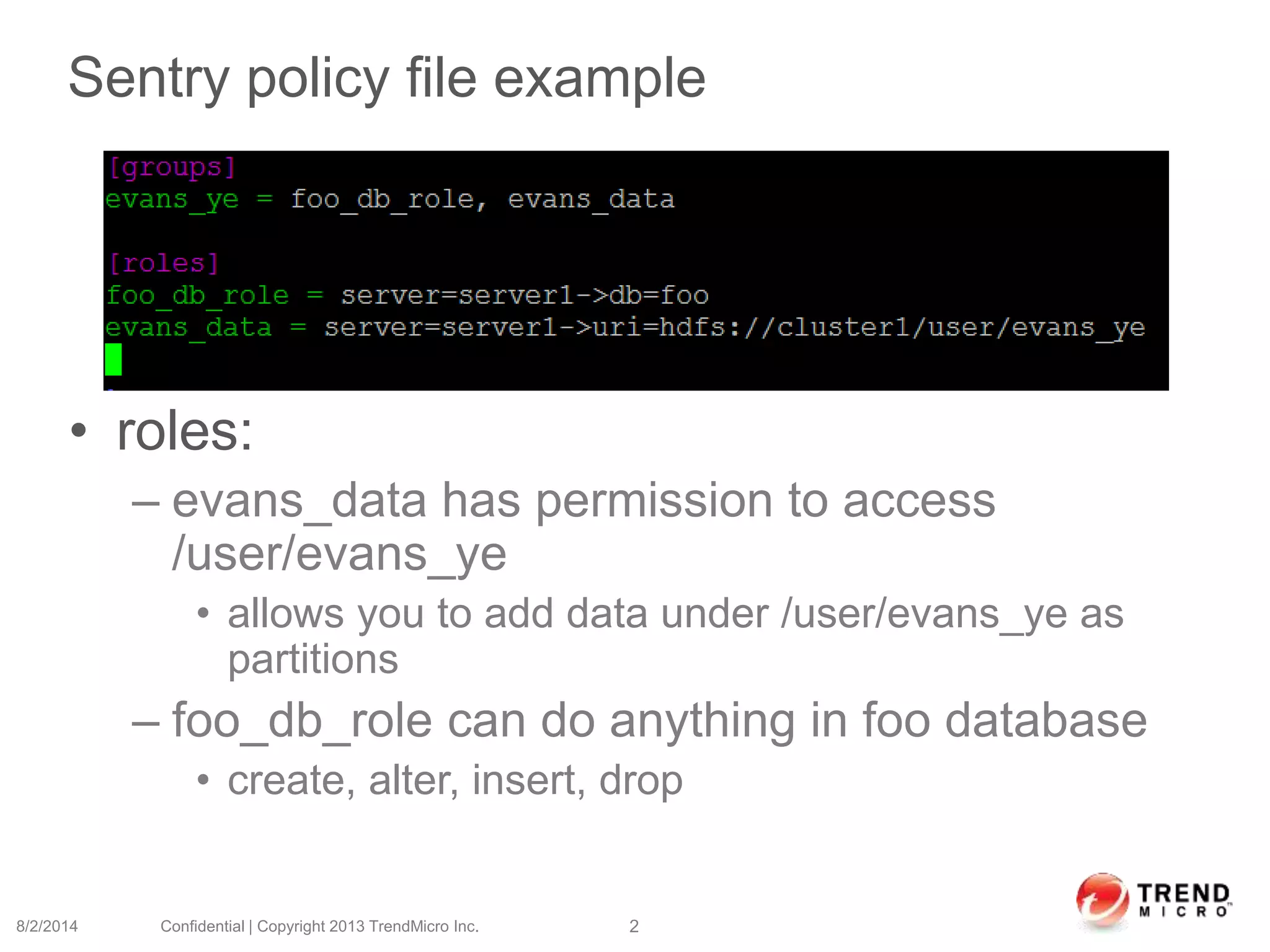

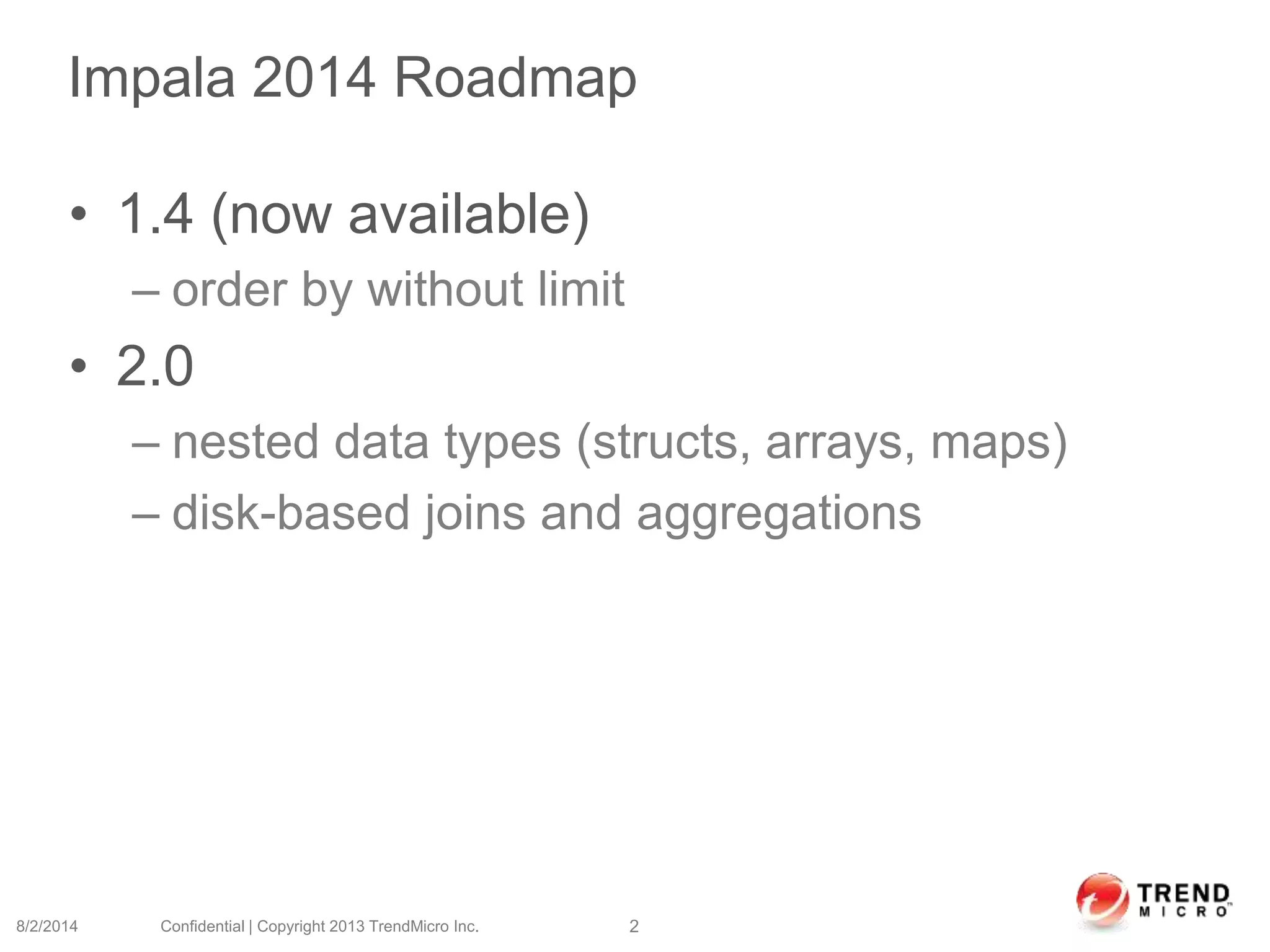

The document provides an overview of Impala, covering its architecture, the query execution process, a getting-started guide, the Parquet file format, and access control with Sentry. Impala is a SQL query engine for Apache Hadoop that runs low-latency, interactive queries directly on data stored in HDFS. It uses a stateless, shared-nothing architecture: the Impala daemon that receives a query acts as its coordinator and distributes execution across the nodes of the cluster.
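To make "distributed query coordination" on a shared-nothing cluster more concrete, here is a minimal, hypothetical Python model of a coordinator running a `GROUP BY` aggregation. It is not Impala's implementation. It illustrates two-phase aggregation, a pattern commonly used by shared-nothing MPP engines. Each worker aggregates only the rows stored on its own node, and the coordinator then merges the small partial results. The function names (`worker_partial_agg`, `coordinator_merge`, `run_query`) and the sample data are assumptions made for illustration, and threads stand in for separate daemons.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Row = Tuple[str, int]                 # (group key, value)
Partial = Dict[str, Tuple[int, int]]  # key -> (count, sum)


def worker_partial_agg(local_rows: List[Row]) -> Partial:
    """Phase 1 (hypothetical worker): aggregate only the rows on this node."""
    partial: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for key, value in local_rows:
        partial[key][0] += 1       # running COUNT(*)
        partial[key][1] += value   # running SUM(value)
    return {k: (c, s) for k, (c, s) in partial.items()}


def coordinator_merge(partials: List[Partial]) -> Dict[str, Tuple[int, int]]:
    """Phase 2 (hypothetical coordinator): merge every worker's partial result."""
    merged: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for partial in partials:
        for key, (count, total) in partial.items():
            merged[key][0] += count
            merged[key][1] += total
    # Sort by key so the final result is deterministic.
    return {k: (c, s) for k, (c, s) in sorted(merged.items())}


def run_query(partitions: List[List[Row]]) -> Dict[str, Tuple[int, int]]:
    """Fan the work out to one simulated worker per partition, then merge."""
    with ThreadPoolExecutor(max_workers=max(1, len(partitions))) as pool:
        partials = list(pool.map(worker_partial_agg, partitions))
    return coordinator_merge(partials)


if __name__ == "__main__":
    # Three hypothetical nodes, each holding its own slice of a table.
    partitions = [
        [("us", 10), ("eu", 5)],
        [("us", 7)],
        [("eu", 3), ("apac", 4)],
    ]
    for region, (count, total) in run_query(partitions).items():
        print(region, count, total)
```

Running the script prints `apac 1 4`, `eu 2 8`, and `us 2 17`, one per line. Because no node reads another node's data and only the compact per-key partials reach the coordinator, the amount of data moved stays small even when the underlying table is large.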