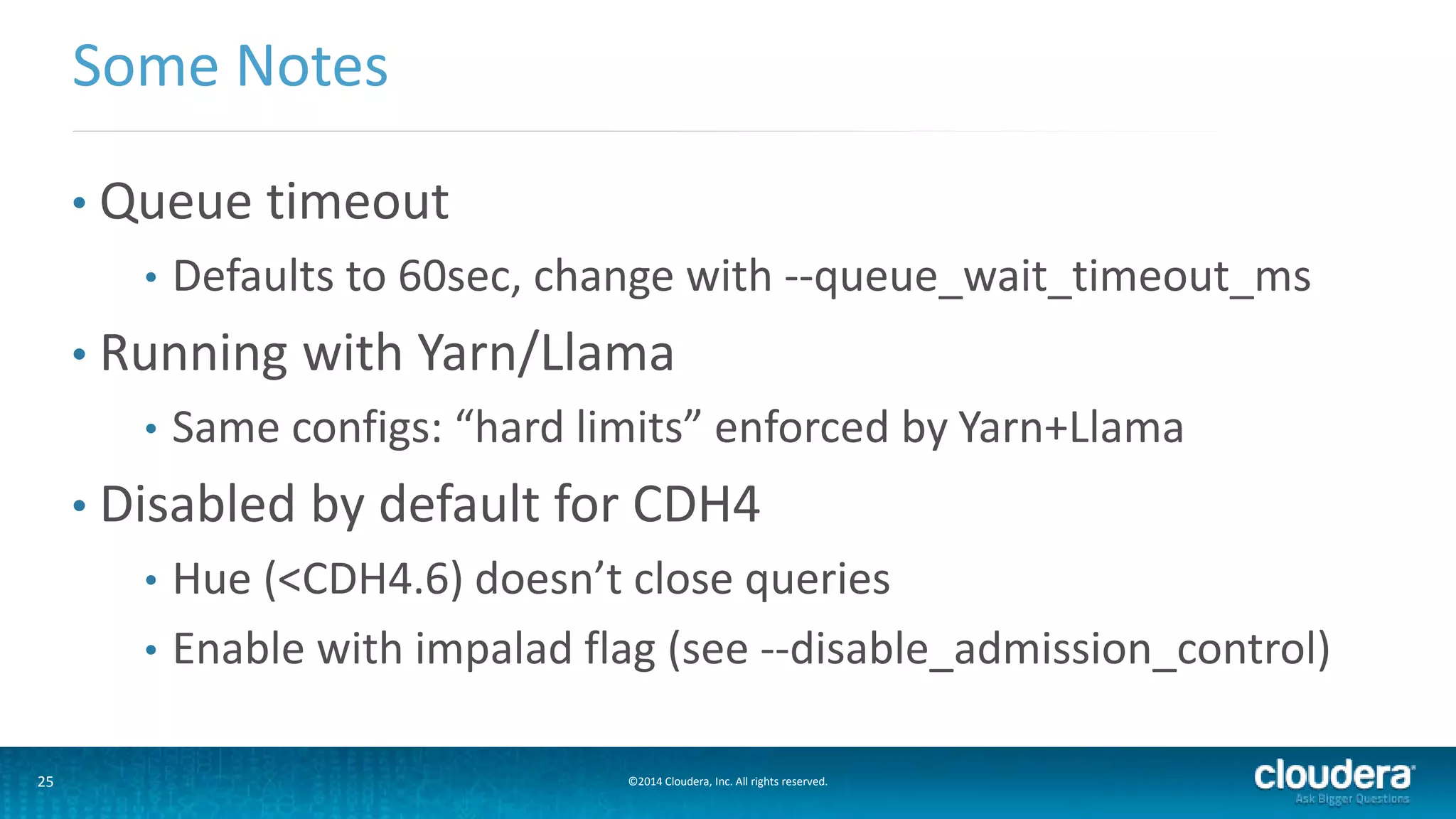

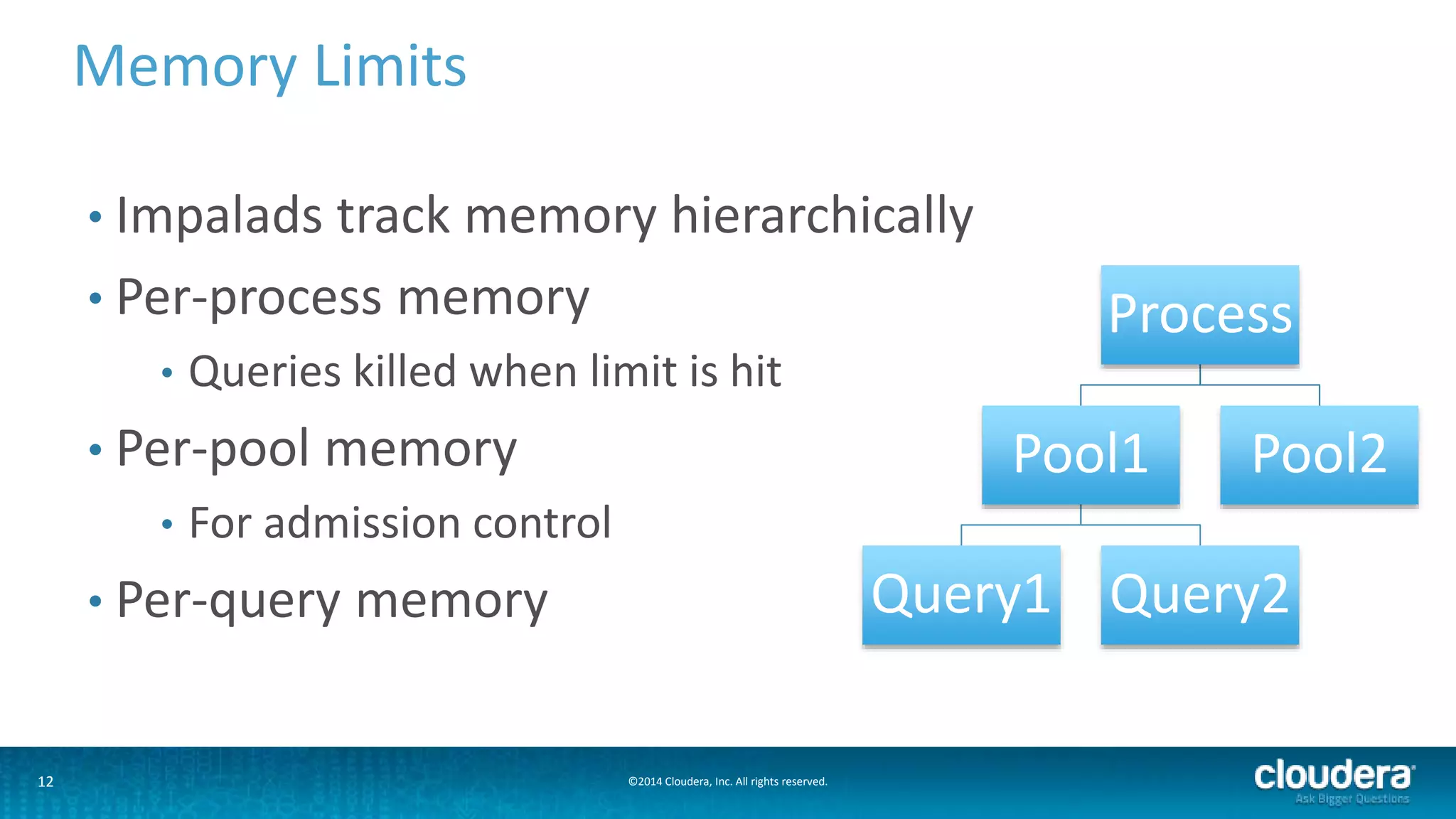

This document discusses admission control in Impala, which prevents resource oversubscription caused by too many concurrent queries. When too many queries run at once, every query takes longer. Impala's solution is to throttle incoming requests, queue requests when the workload increases, and execute queued requests as resources become available. Admission control is implemented in a decentralized manner: it does not require YARN/Llama, and each impalad daemon handles throttling and queuing locally.
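The throttle, queue, and dequeue behavior described above can be illustrated with a minimal sketch. This is a hypothetical single-process model, not Impala's implementation: the `Pool` class, its method names, and the returned status strings are invented for illustration, and the sketch ignores memory limits, queue timeouts, and the sharing of state between impalad daemons.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Pool:
    """Hypothetical model of one admission-control pool (illustration only)."""
    max_requests: int  # maximum number of concurrently running queries
    max_queued: int    # maximum number of queries allowed to wait
    running: set = field(default_factory=set)
    queue: deque = field(default_factory=deque)

    def submit(self, query_id: str) -> str:
        # Admit immediately only if there is a free slot and nobody is waiting.
        if len(self.running) < self.max_requests and not self.queue:
            self.running.add(query_id)
            return "ADMITTED"
        # Otherwise queue the request, as long as the queue has room.
        if len(self.queue) < self.max_queued:
            self.queue.append(query_id)
            return "QUEUED"
        # Both limits are exhausted: reject the request.
        return "REJECTED"

    def finish(self, query_id: str) -> list:
        # Release the slot and admit queued queries in FIFO order.
        self.running.discard(query_id)
        admitted = []
        while self.queue and len(self.running) < self.max_requests:
            next_id = self.queue.popleft()
            self.running.add(next_id)
            admitted.append(next_id)
        return admitted


pool = Pool(max_requests=2, max_queued=1)
results = [pool.submit(q) for q in ["q1", "q2", "q3", "q4"]]
print(results)            # ['ADMITTED', 'ADMITTED', 'QUEUED', 'REJECTED']
print(pool.finish("q1"))  # ['q3']
```

With two slots and a queue of one, the third query waits and the fourth is rejected; finishing `q1` frees a slot and the queued `q3` starts running.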

![22 ©2014 Cloudera, Inc. All rights reserved.

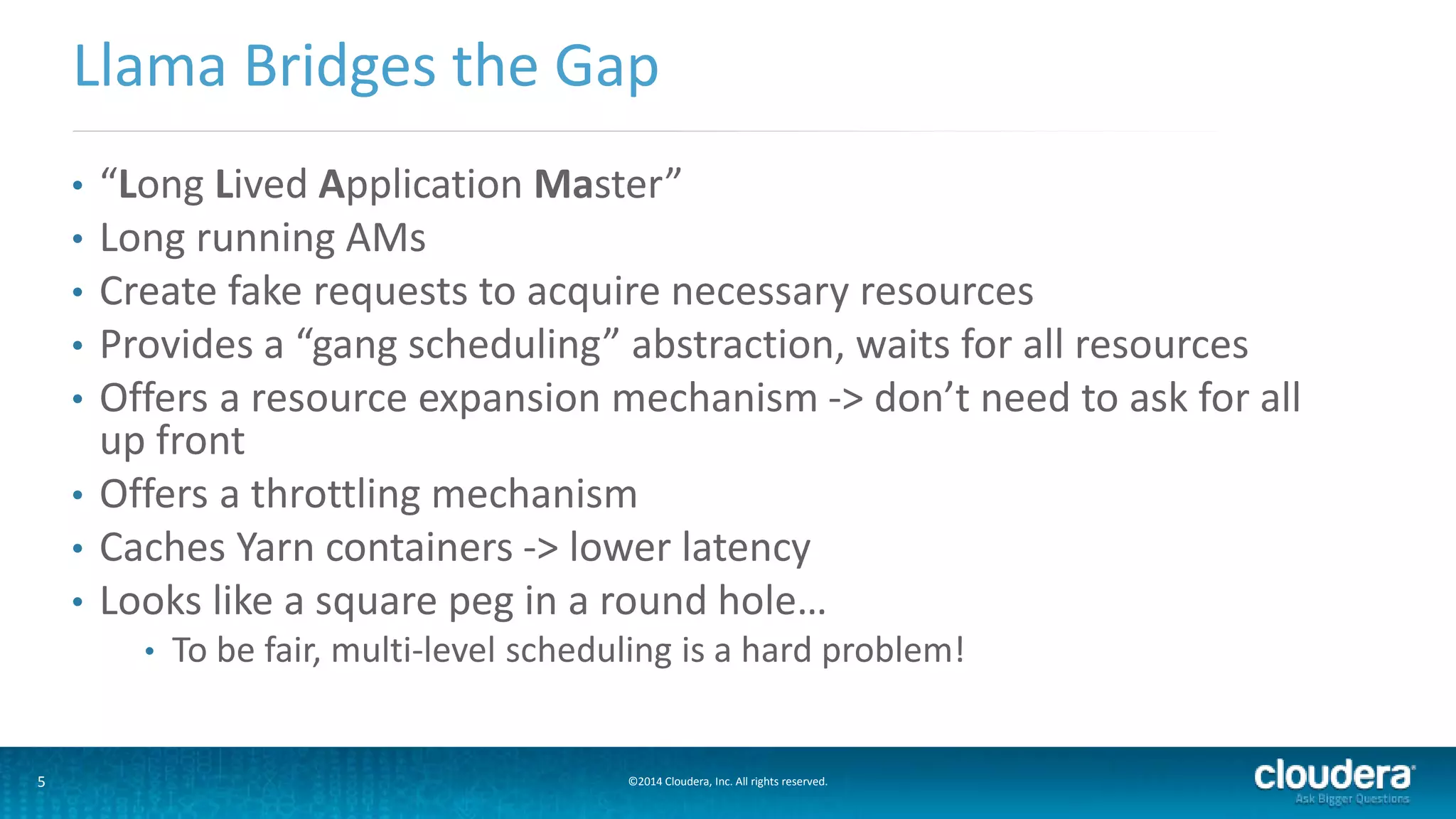

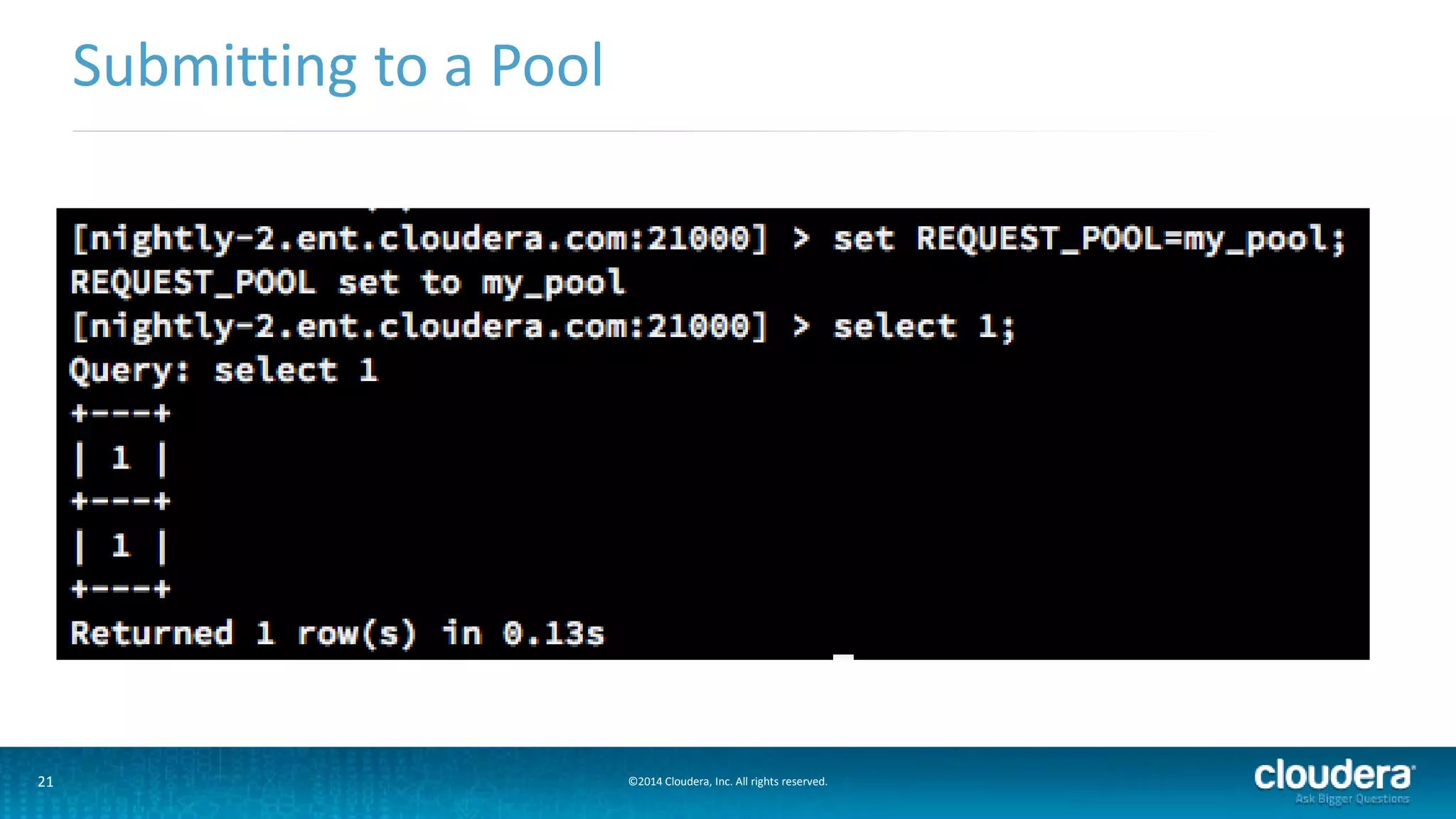

• Rejections and timeouts return error messages

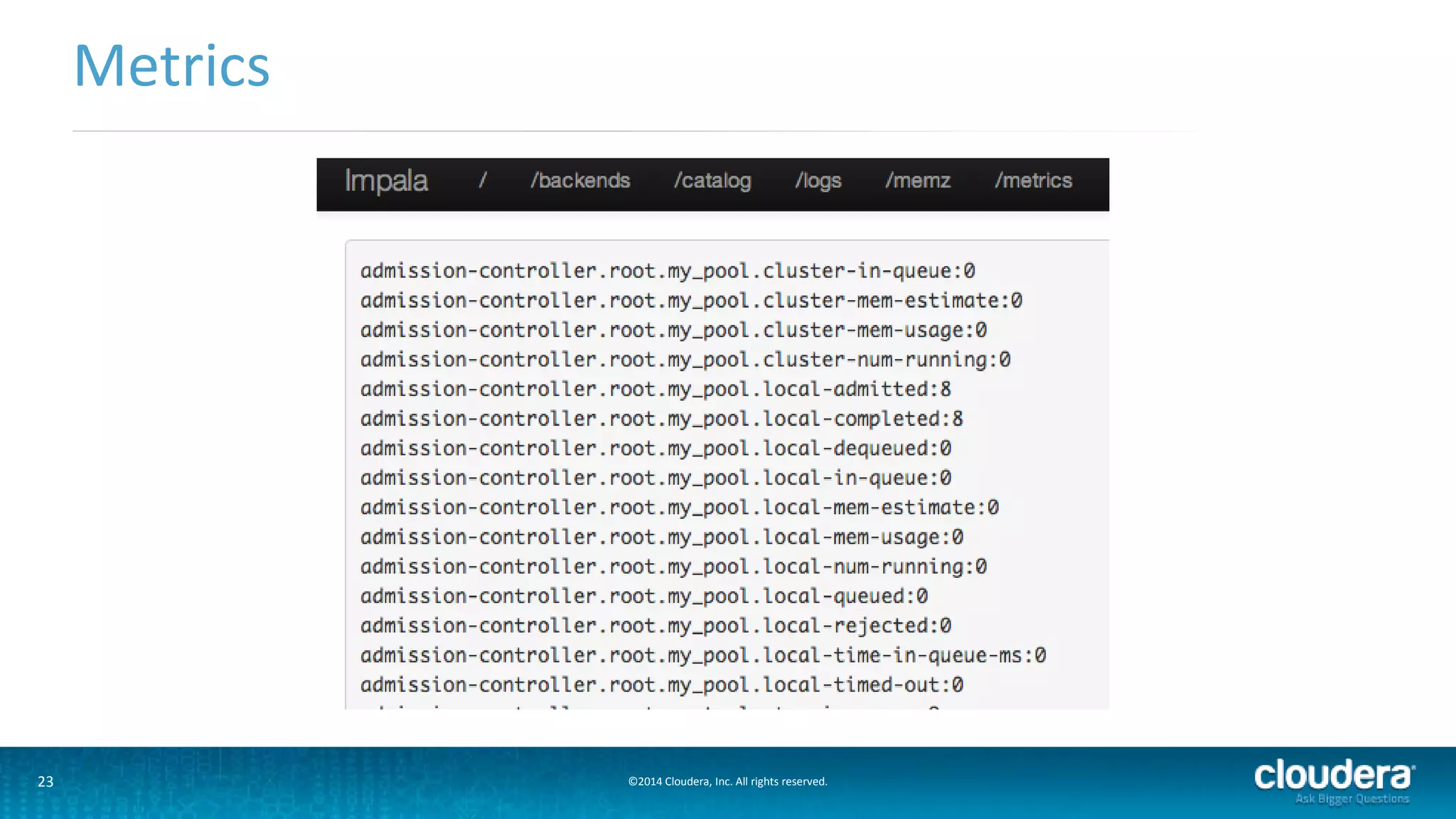

• Metrics

• Exposed in impalad web UI: /metrics

• Will be available in CM5.1

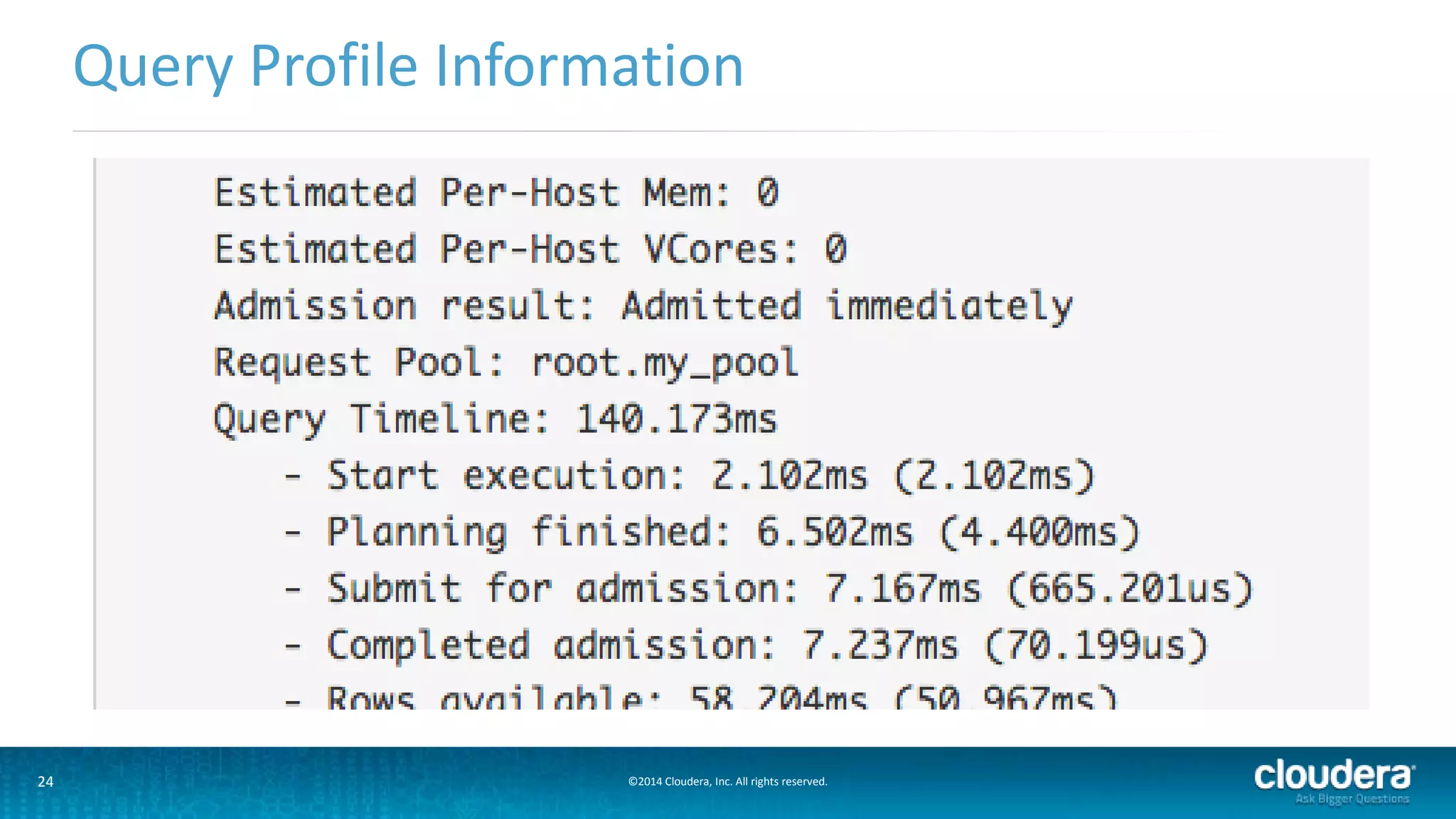

• Query profile has admission result

• Impalad logs have lots of useful information

“Debugging” Admission Control Decisions

admission-controller.cc:259] Schedule for id=c541aae43af74ed1:afdec812127f8097 in pool_name=root.test/admin

PoolConfig(max_requests=20 max_queued=50 mem_limit=-1.00 B) query cluster_mem_estimate=42.00 MB

admission-controller.cc:265] Stats: pool=root.test/admin Total(num_running=20, num_queued=7, mem_usage=239.07

MB, mem_estimate=800.00 MB) Local(num_running=20, num_queued=7, mem_usage=239.07 MB,

mem_estimate=800.00 MB)

admission-controller.cc:303] Queuing, query id=c541aae43af74ed1:afdec812127f8097](https://image.slidesharecdn.com/admissioncontrol-140521125909-phpapp02/75/Admission-Control-in-Impala-22-2048.jpg)
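To show how the log excerpt above explains the queuing decision, here is a small sketch that extracts the pool limits and current counts from those three lines and compares them. The regular expressions match only the format seen in this excerpt, and the decision rule (queue when `num_running` has reached `max_requests` and the queue still has room) is an inference from the logged values, not a statement of Impala's actual code. It also assumes that `mem_limit=-1.00 B` means no memory limit is set for the pool.

```python
import re

# The three log lines from the slide, rejoined into single lines.
LOG = """\
admission-controller.cc:259] Schedule for id=c541aae43af74ed1:afdec812127f8097 in pool_name=root.test/admin PoolConfig(max_requests=20 max_queued=50 mem_limit=-1.00 B) query cluster_mem_estimate=42.00 MB
admission-controller.cc:265] Stats: pool=root.test/admin Total(num_running=20, num_queued=7, mem_usage=239.07 MB, mem_estimate=800.00 MB) Local(num_running=20, num_queued=7, mem_usage=239.07 MB, mem_estimate=800.00 MB)
admission-controller.cc:303] Queuing, query id=c541aae43af74ed1:afdec812127f8097
"""

# Pool limits from the PoolConfig(...) part of the first line.
config = {
    key: int(value)
    for key, value in re.findall(r"(max_requests|max_queued)=(\d+)", LOG)
}

# Cluster-wide counts from the Total(...) part of the second line.
total = re.search(r"Total\(num_running=(\d+), num_queued=(\d+)", LOG)
num_running, num_queued = (int(g) for g in total.groups())

# Assumed decision rule (inferred from the log, memory limits ignored).
if num_running < config["max_requests"]:
    decision = "admit"
elif num_queued < config["max_queued"]:
    decision = "queue"
else:
    decision = "reject"

# Check the inferred decision against what the third line actually logged.
logged_queuing = "] Queuing, query id=" in LOG

print(config, num_running, num_queued, decision, logged_queuing)
```

Here the pool is already running 20 queries against `max_requests=20`, while only 7 of the 50 queue slots are taken, which is consistent with the `Queuing` message on the last line.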