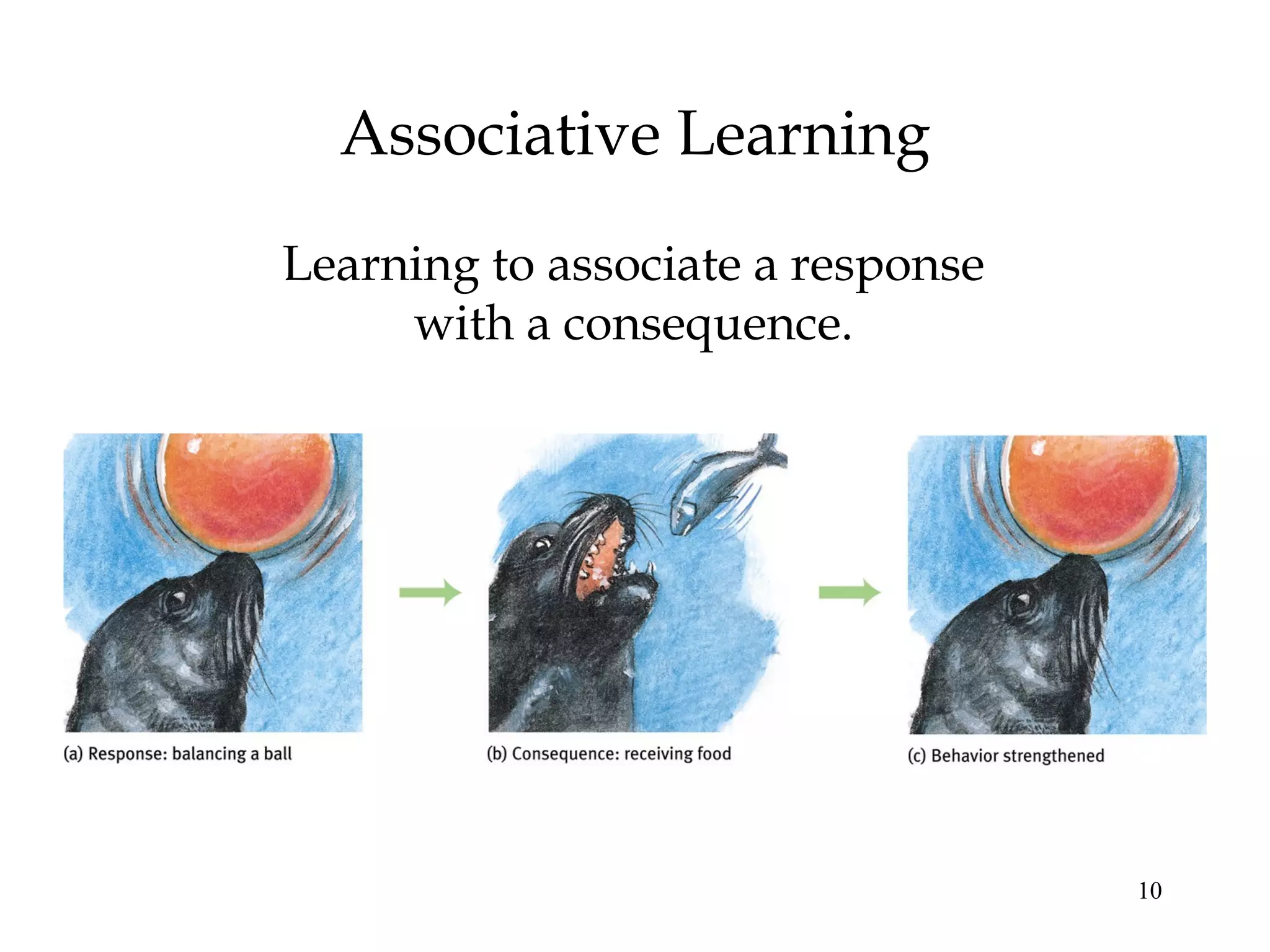

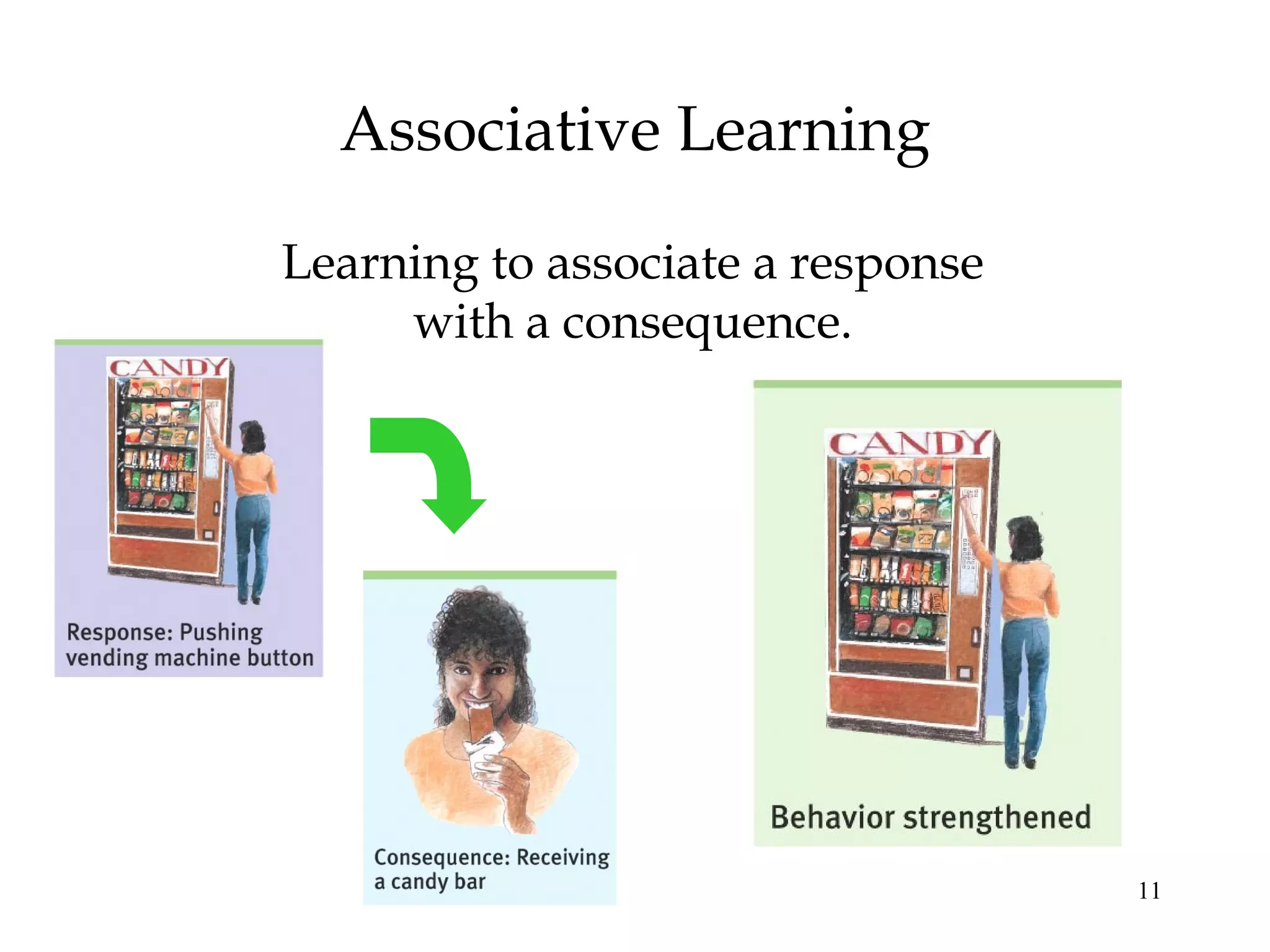

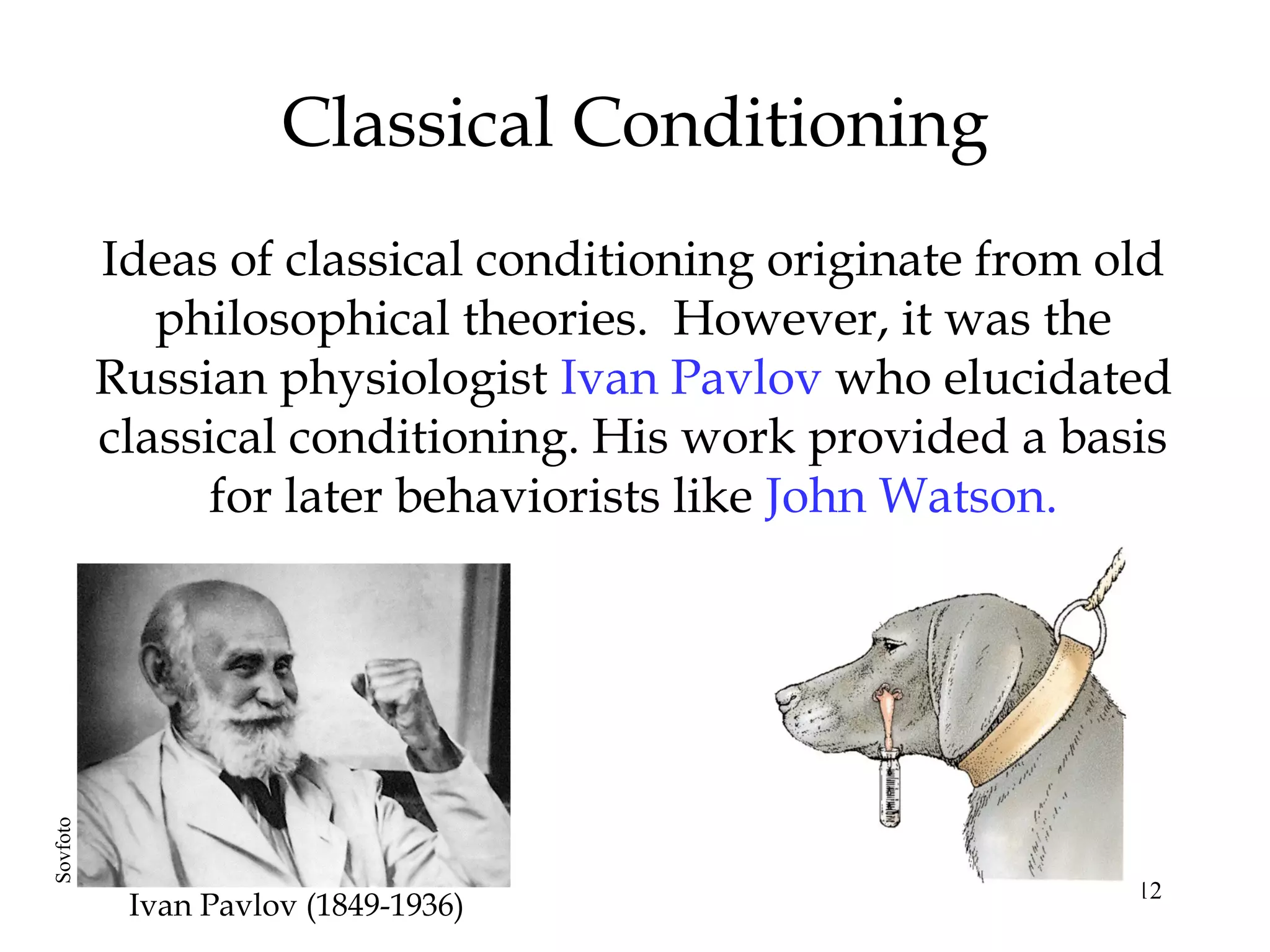

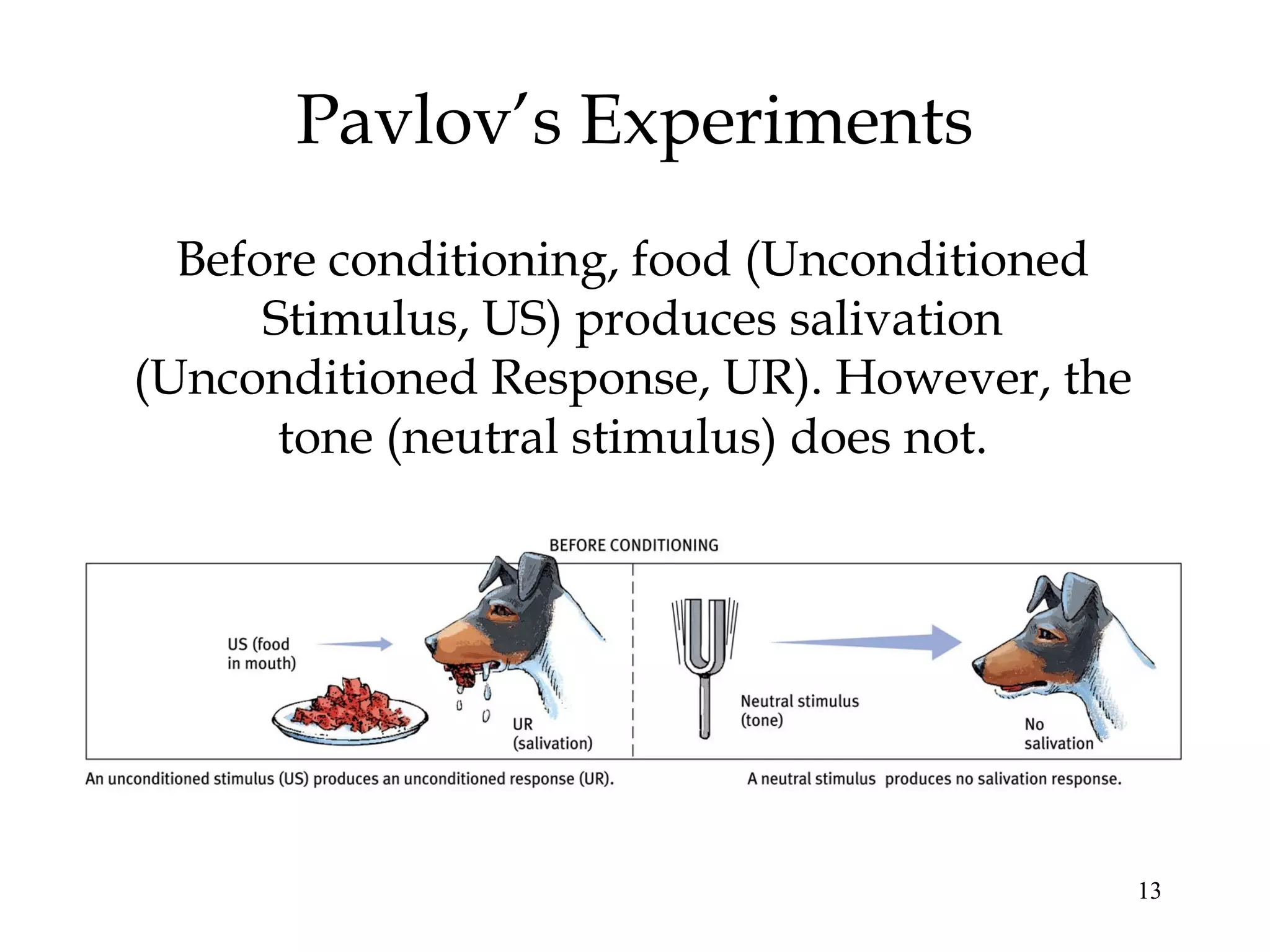

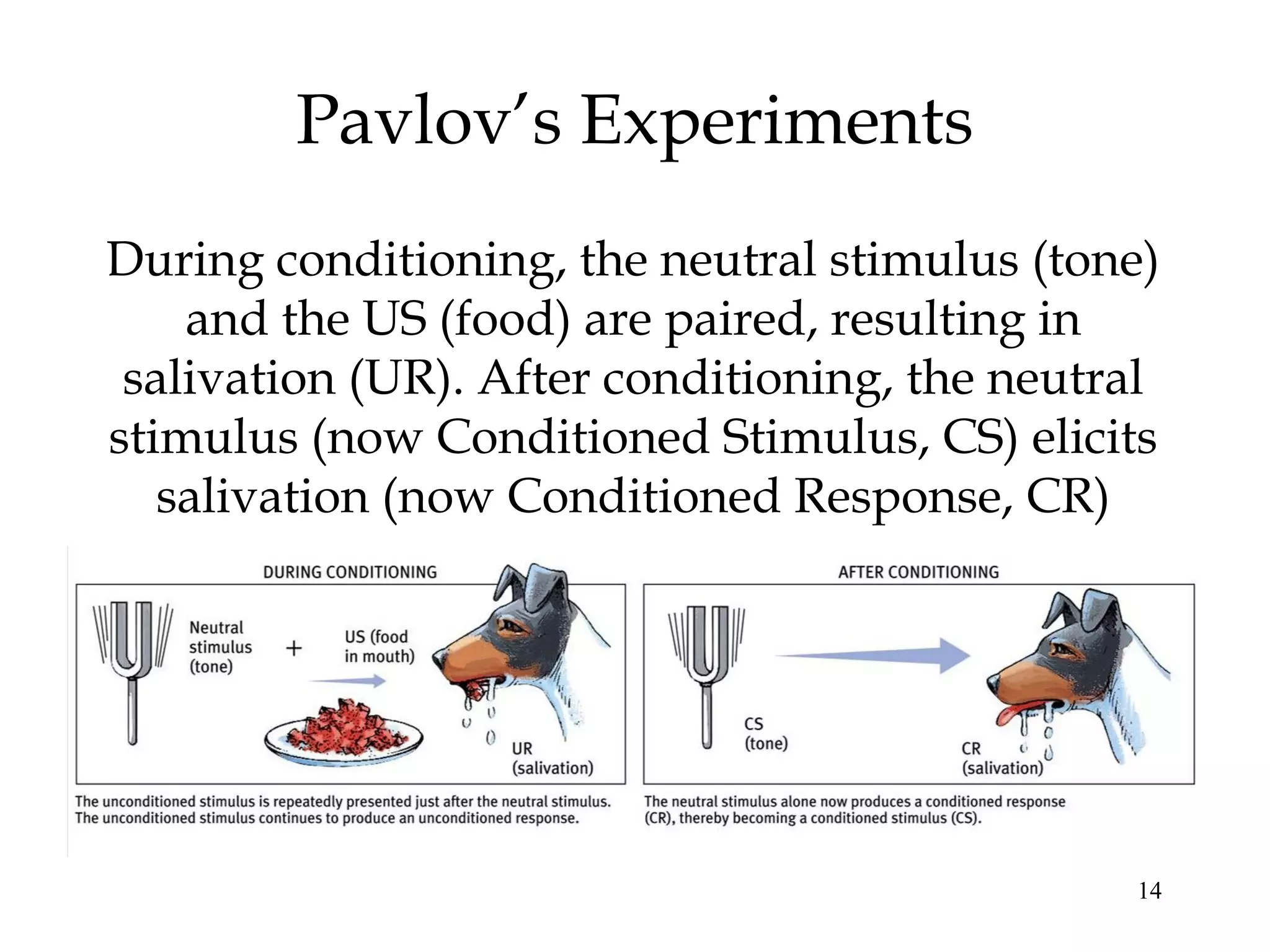

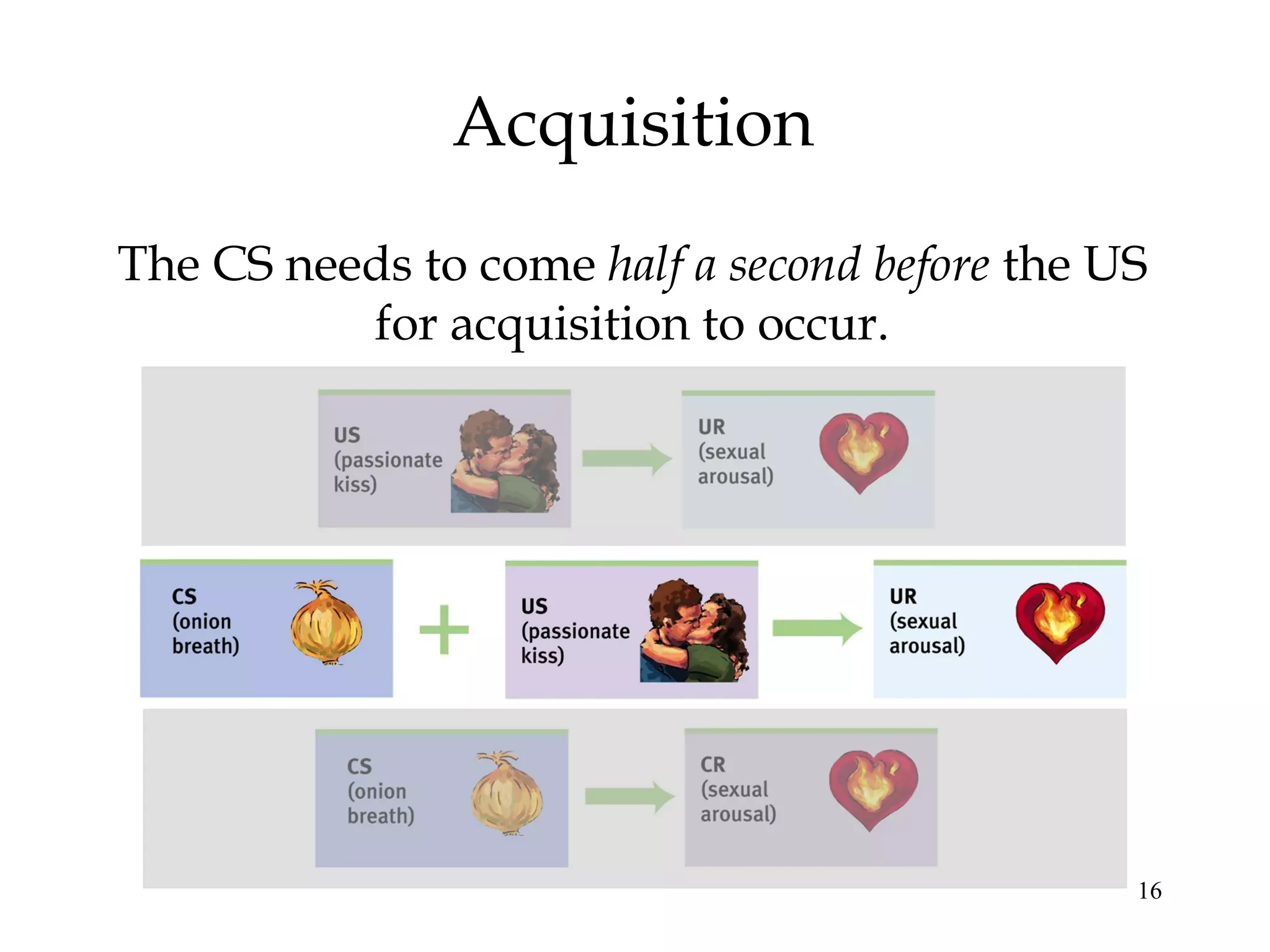

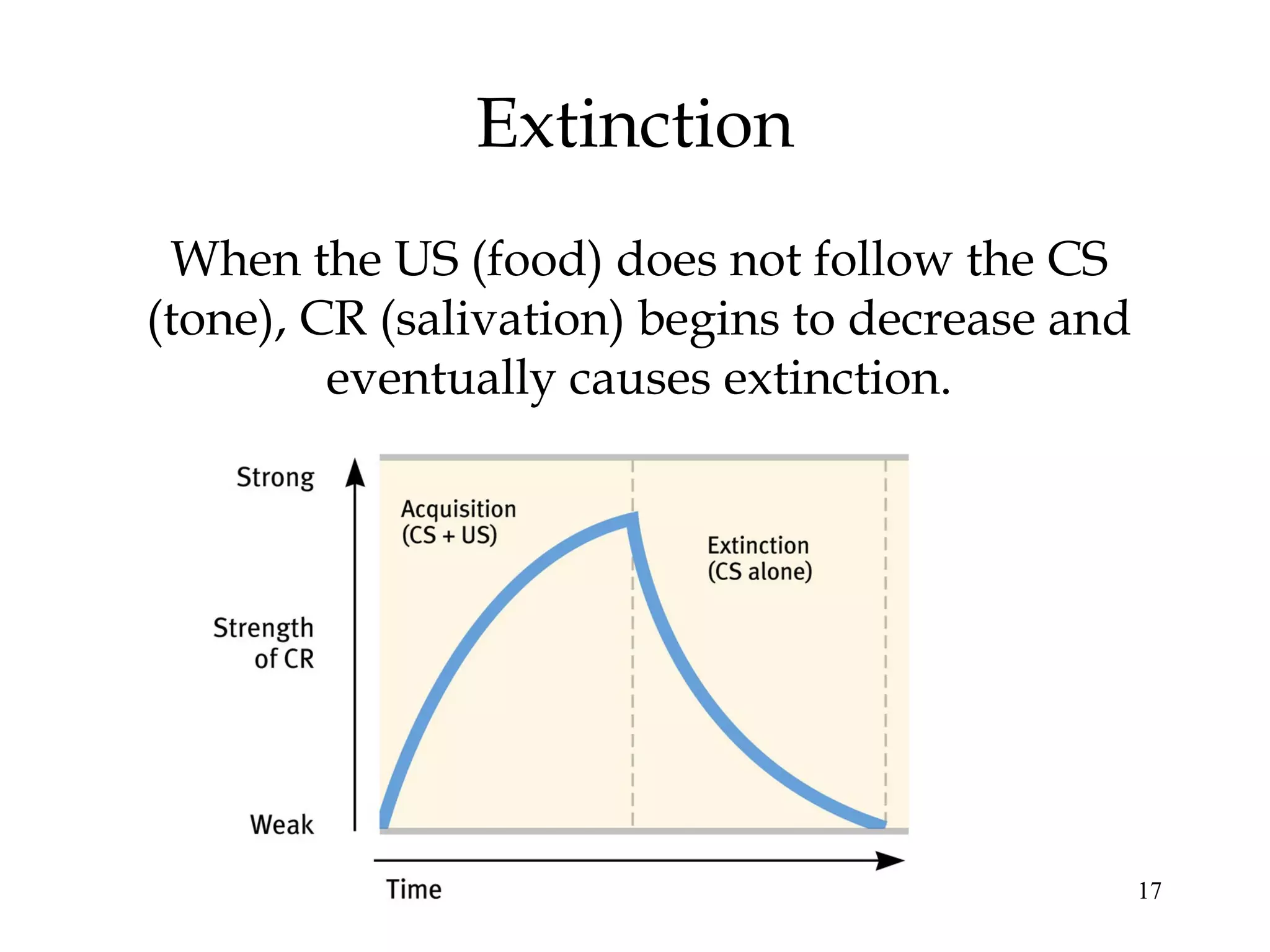

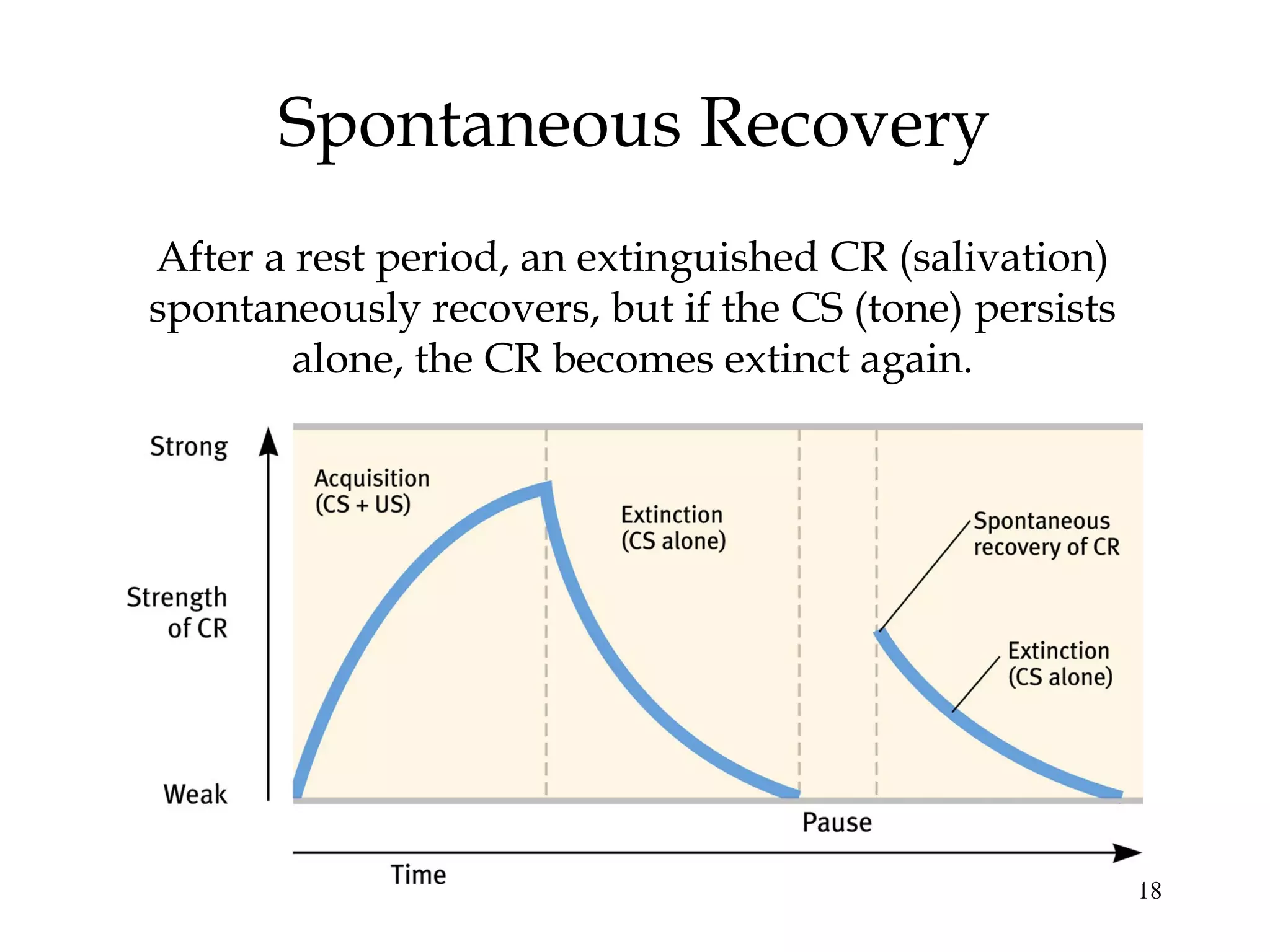

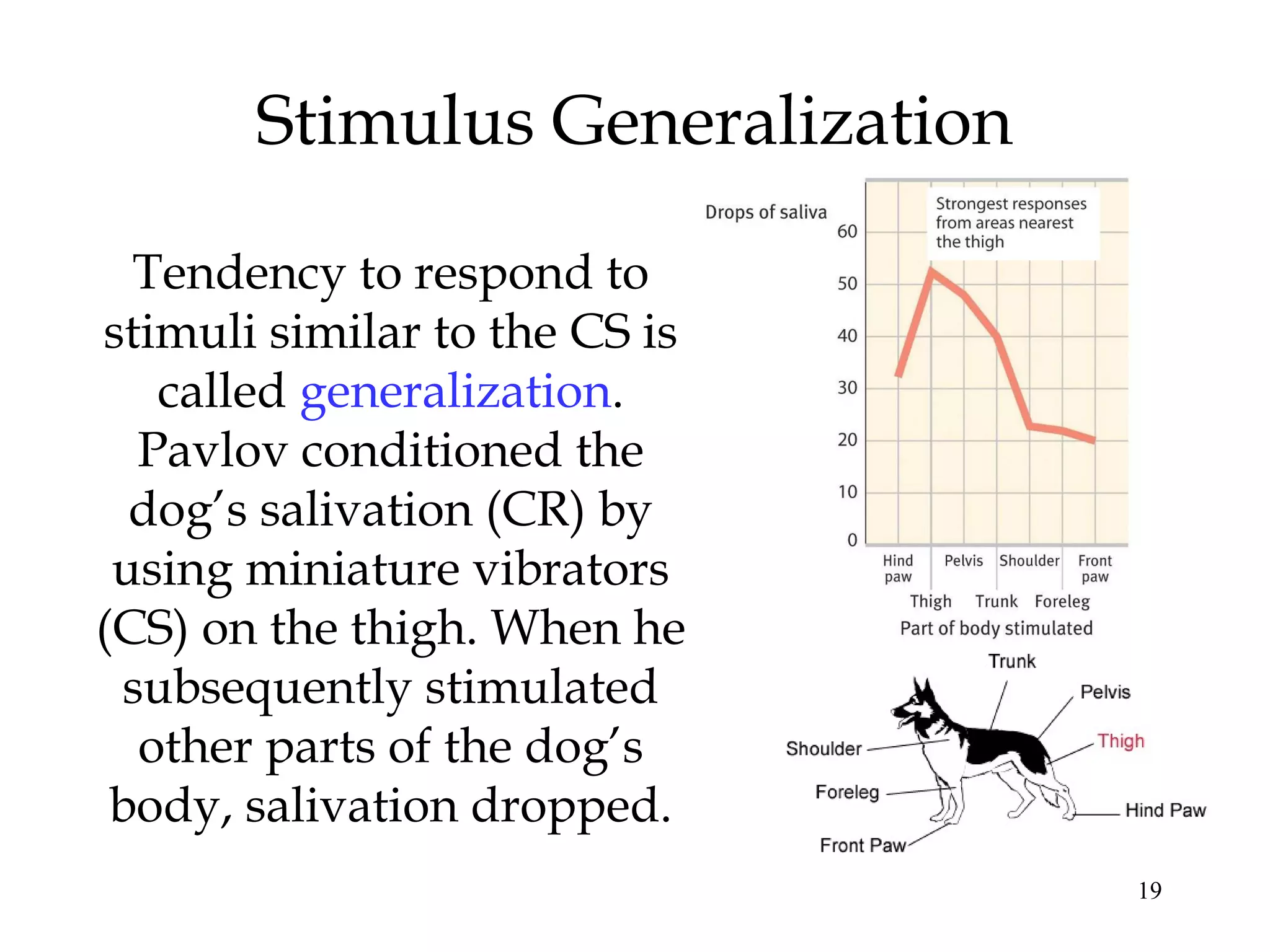

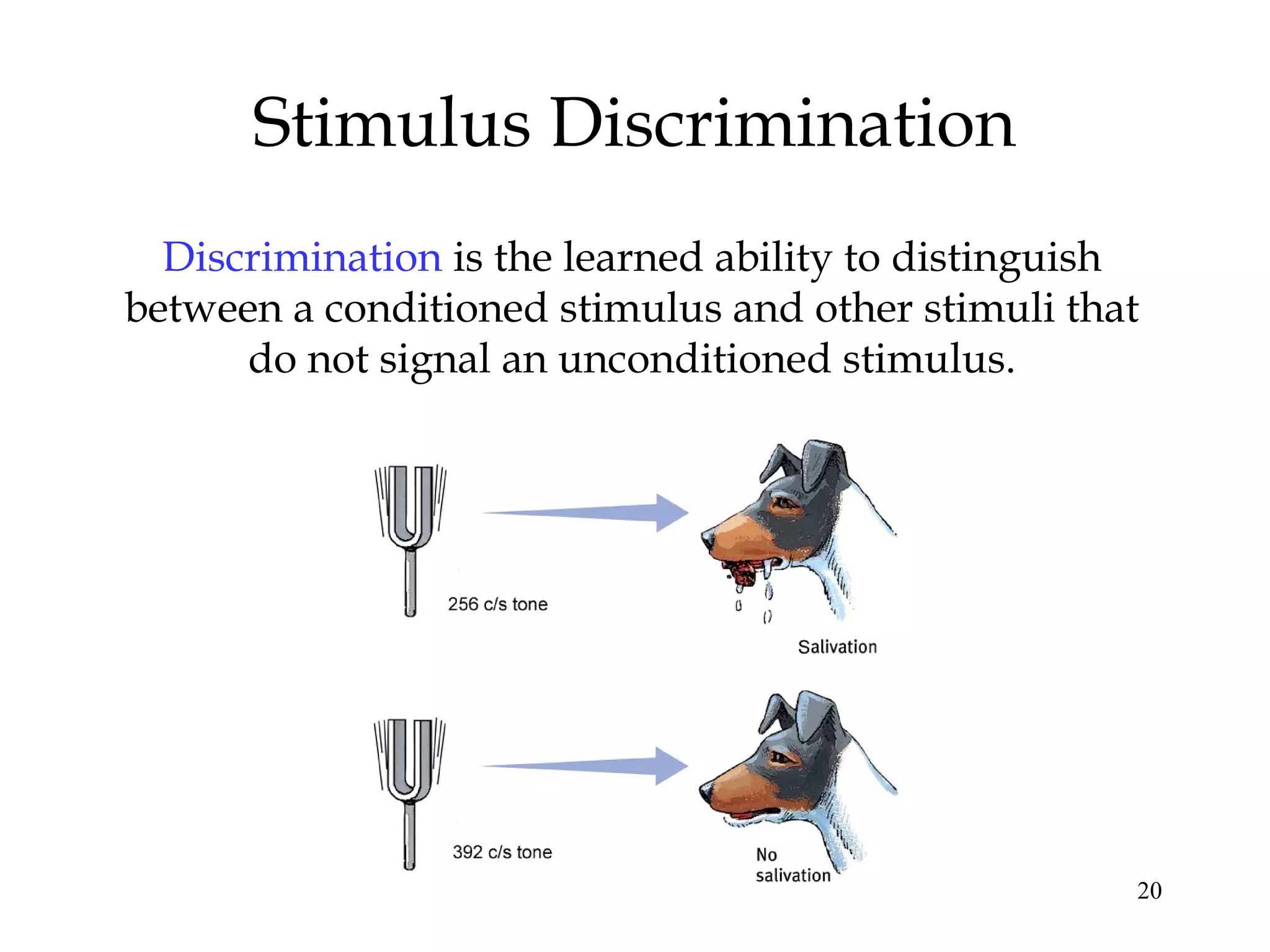

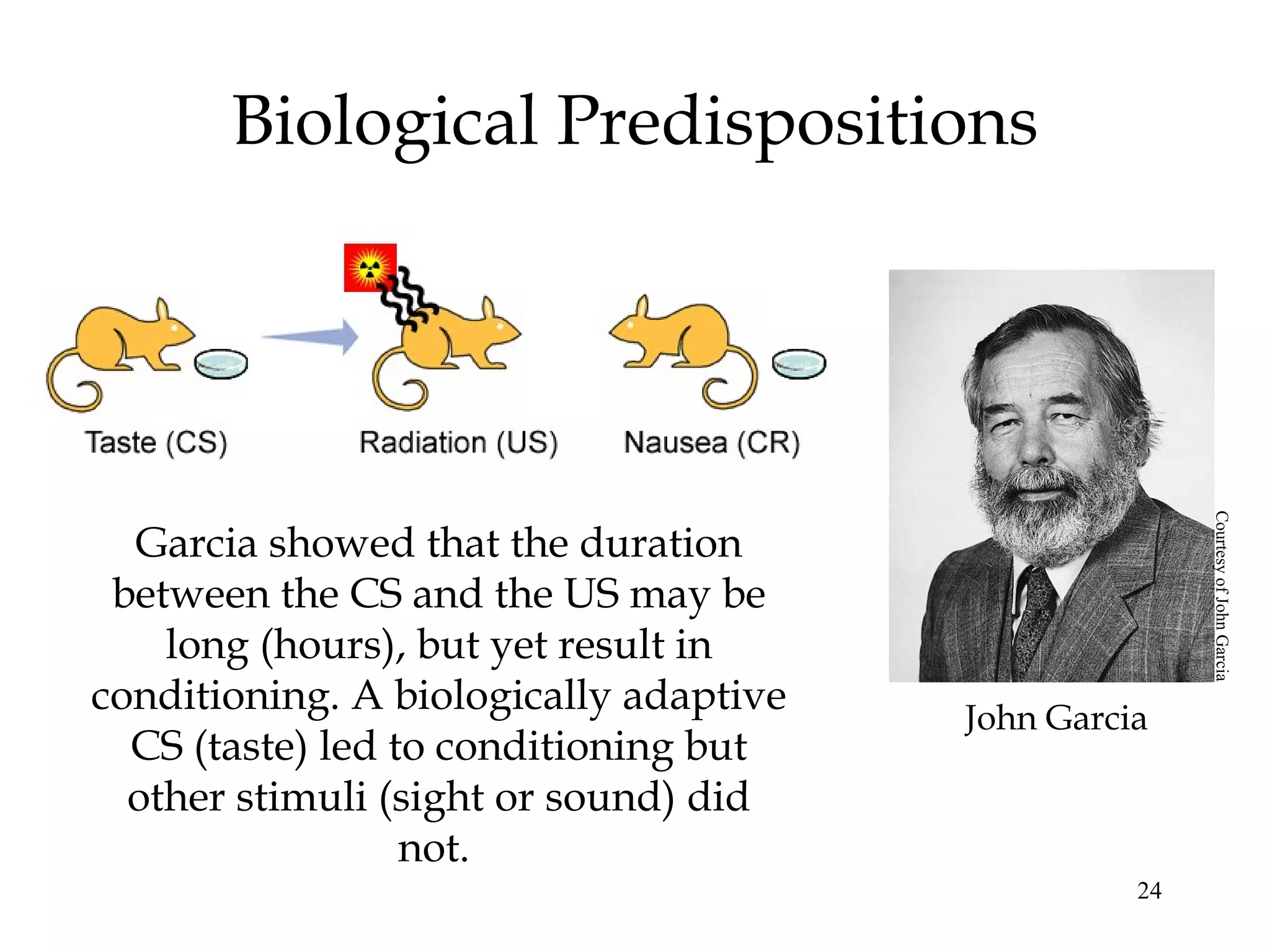

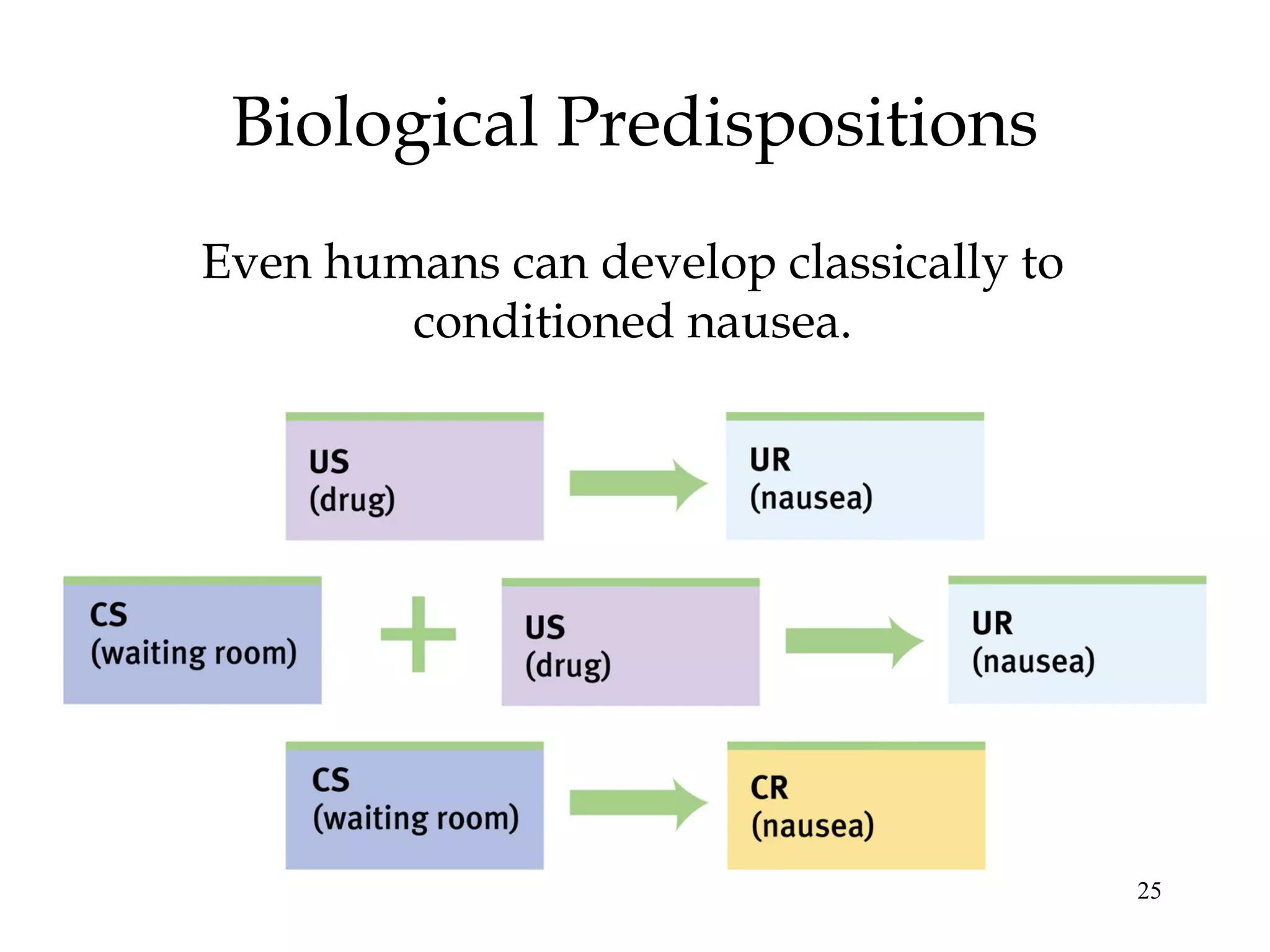

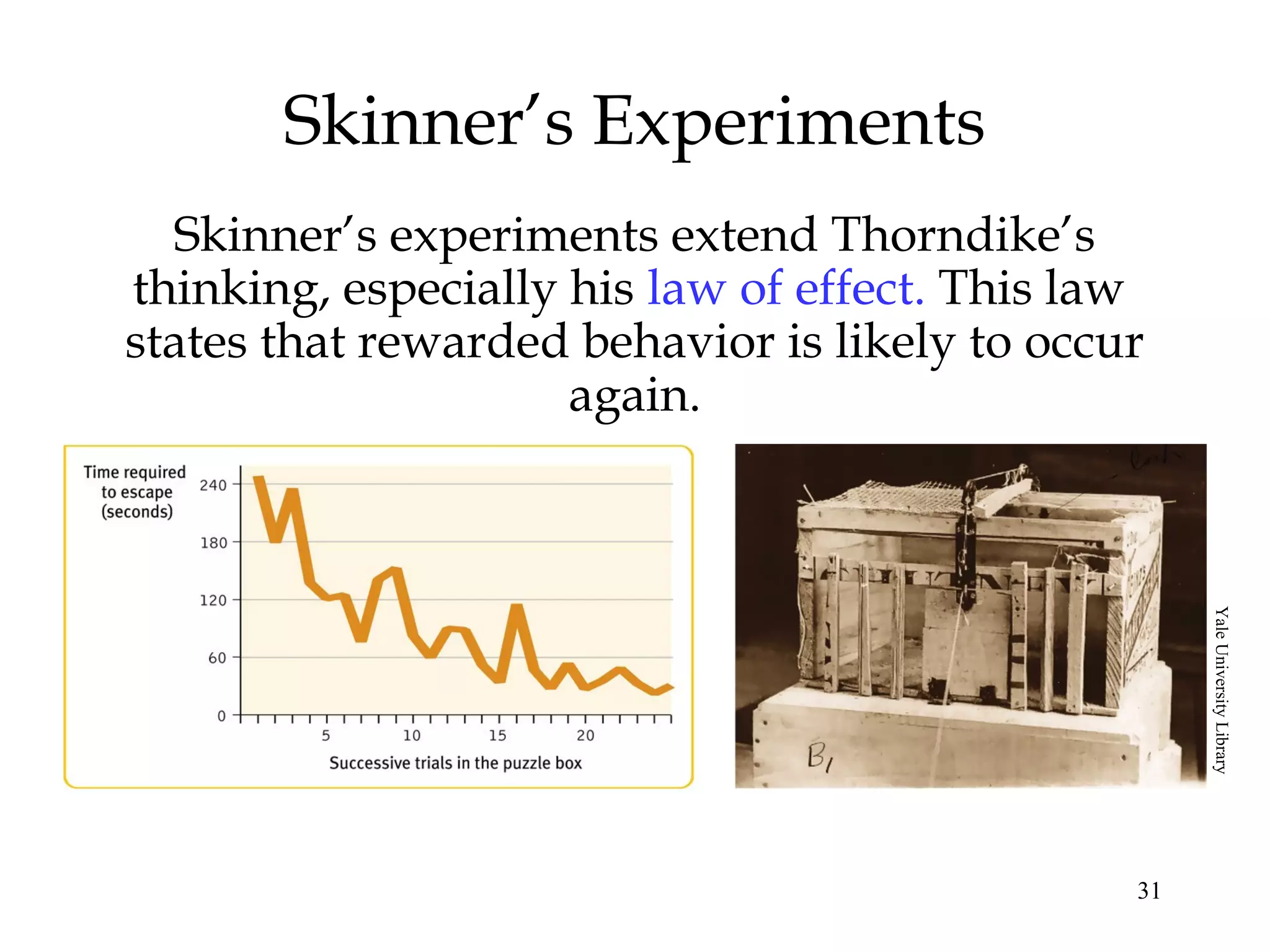

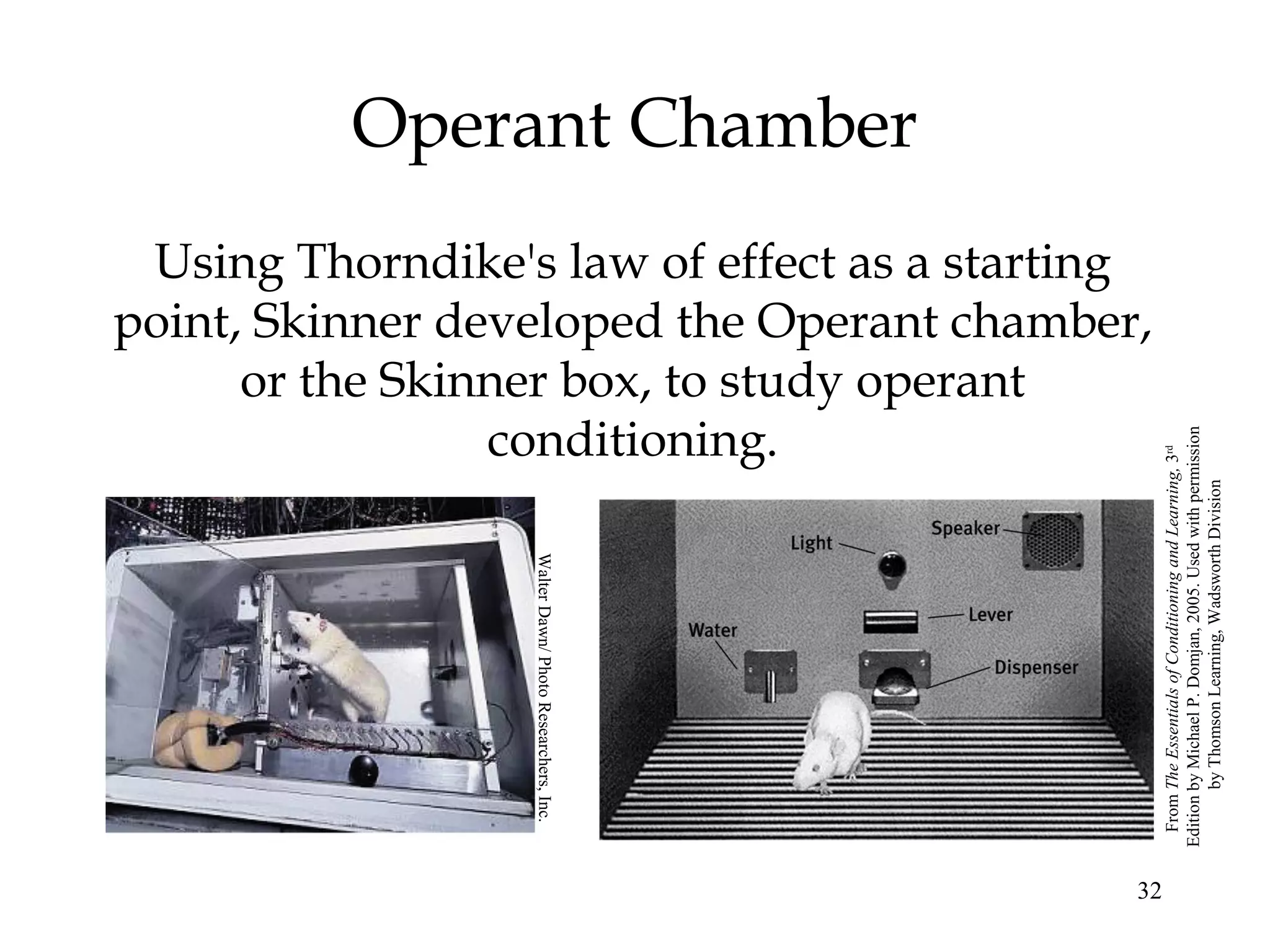

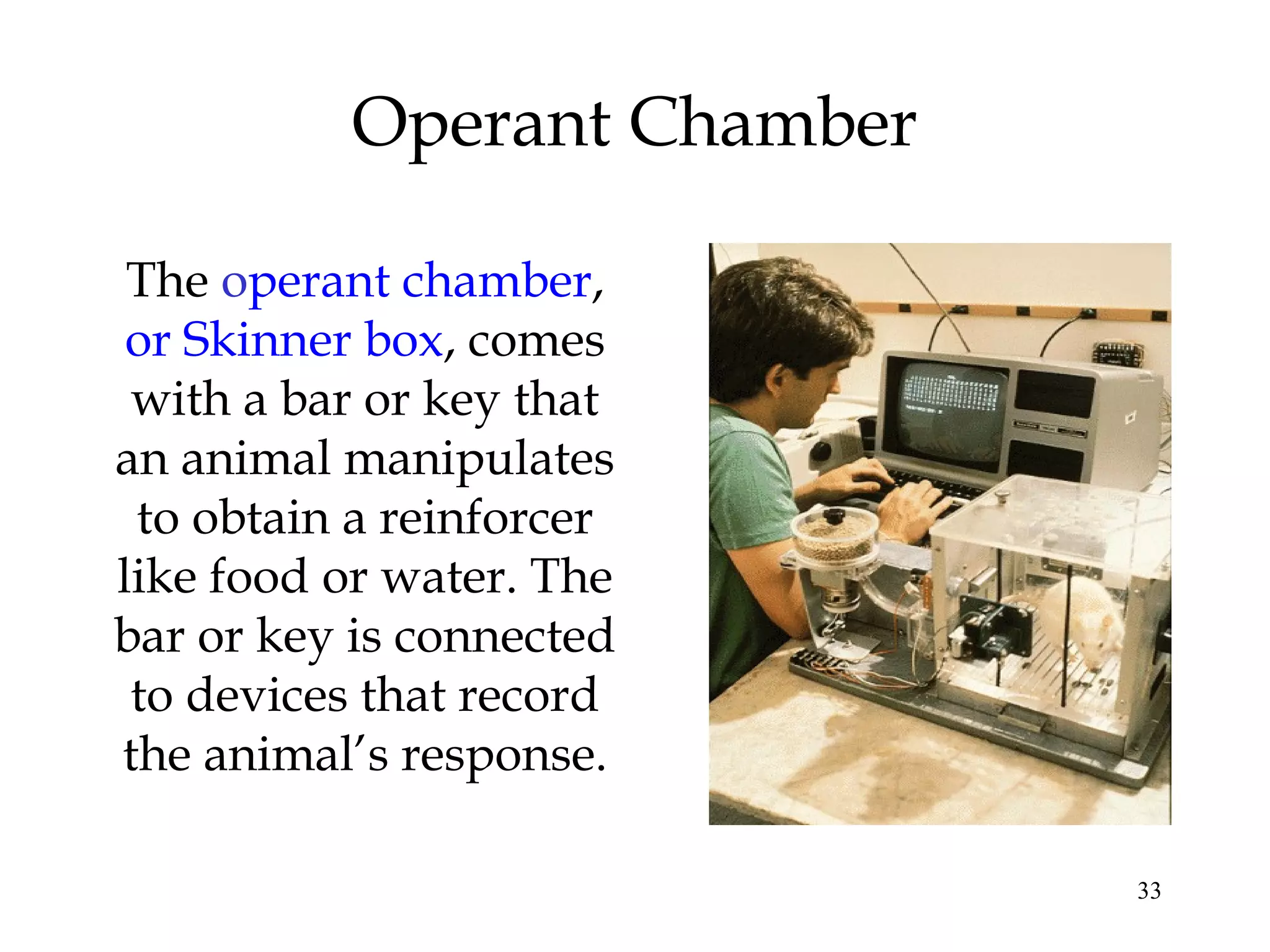

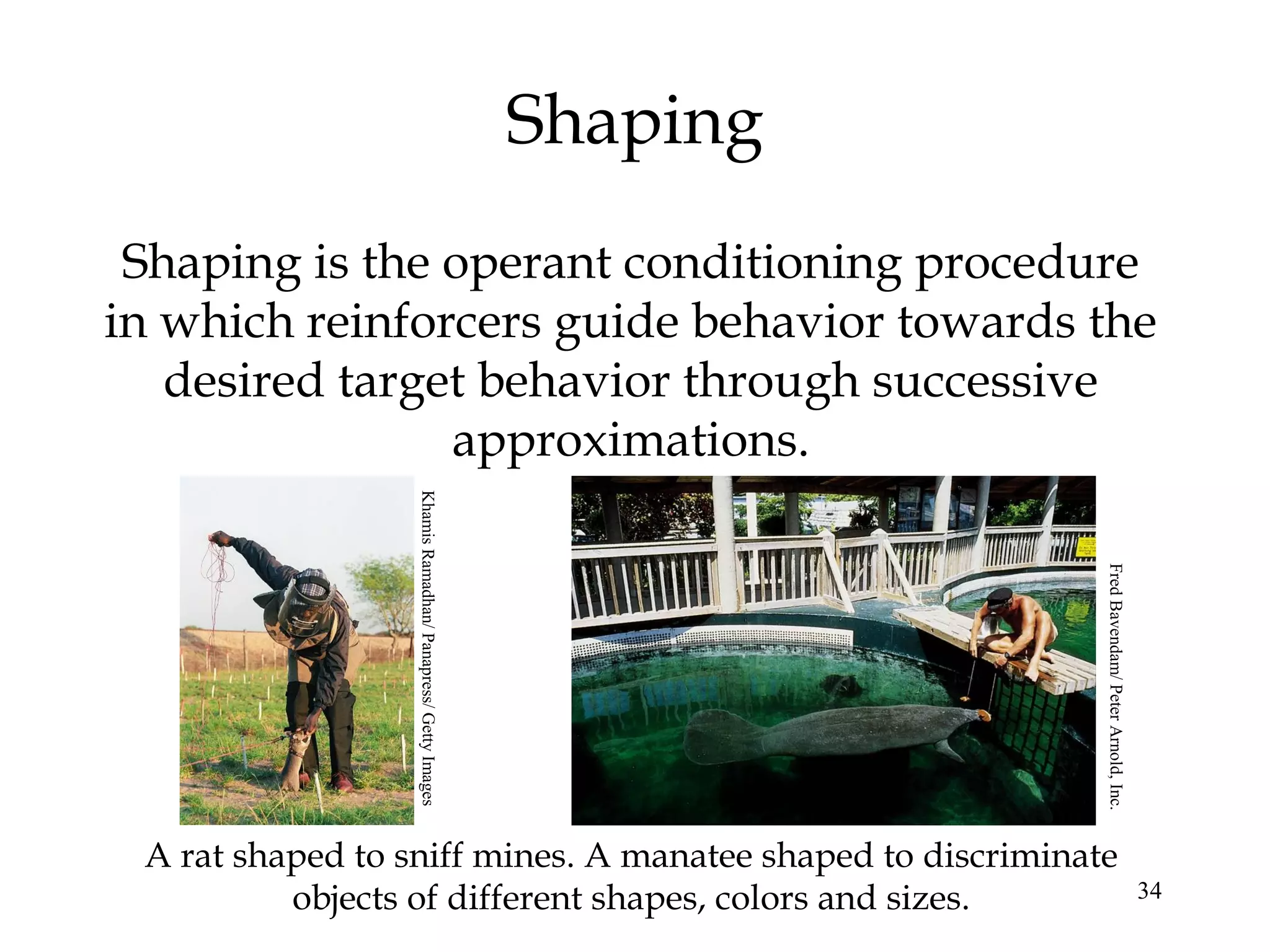

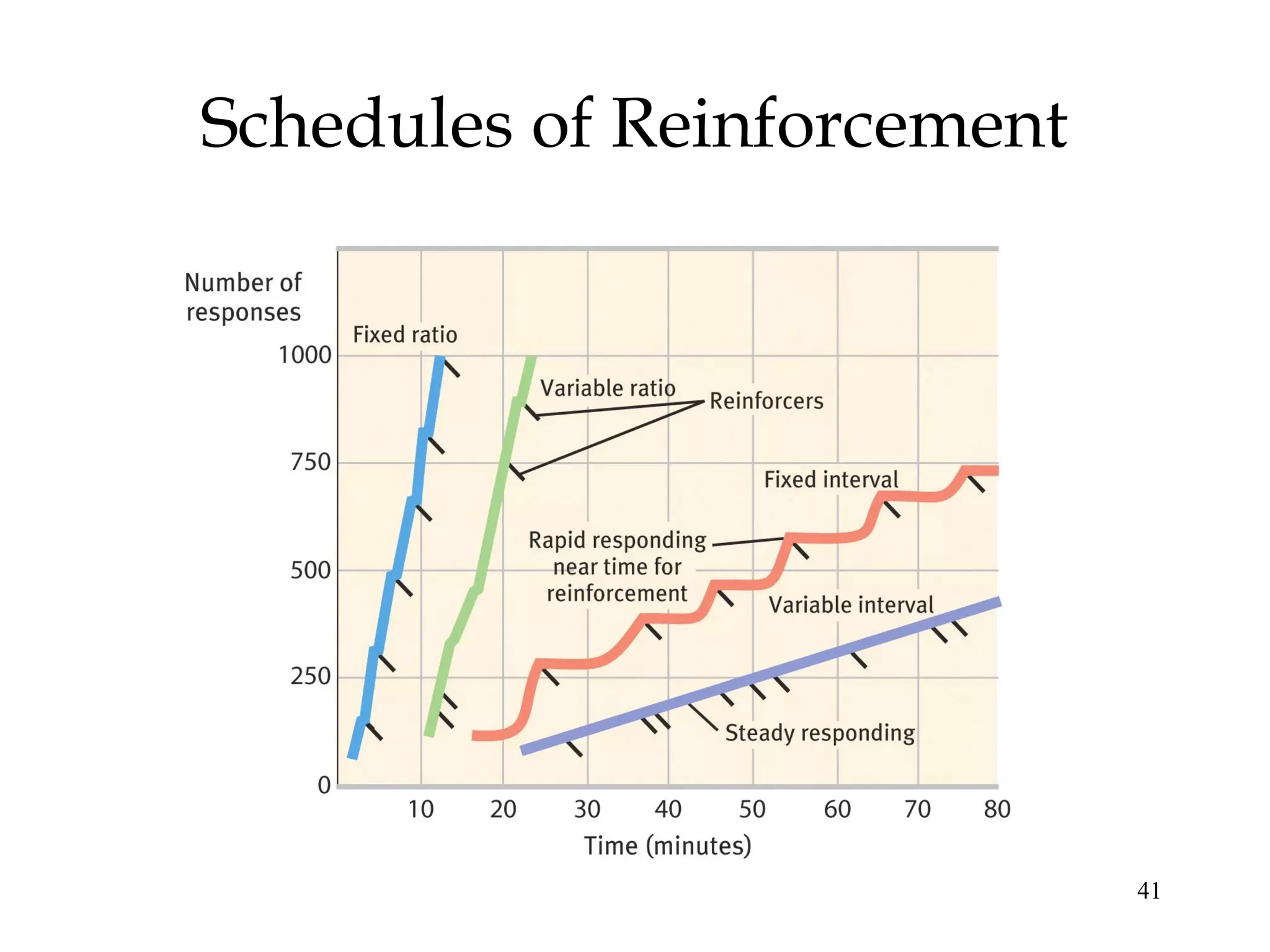

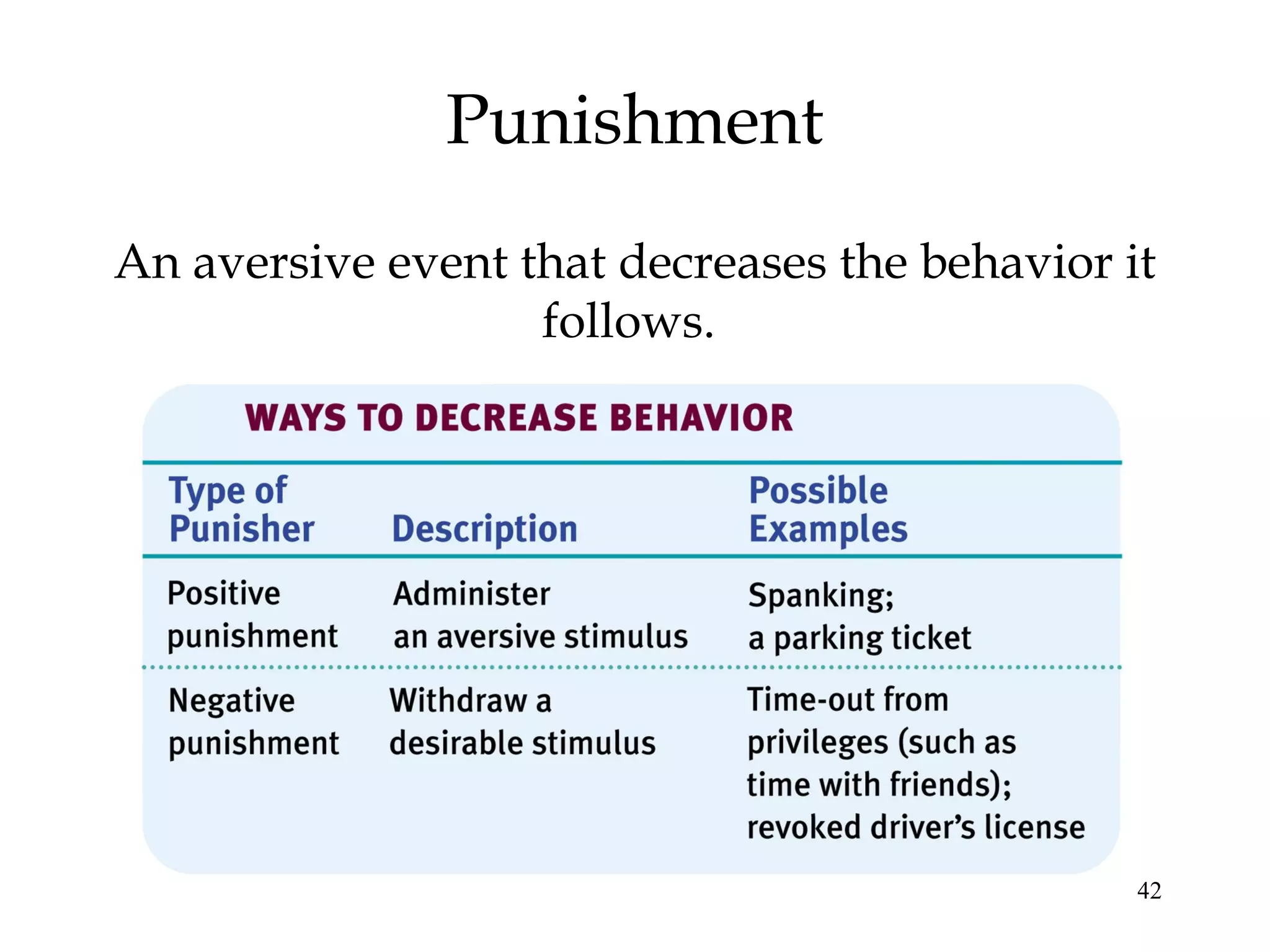

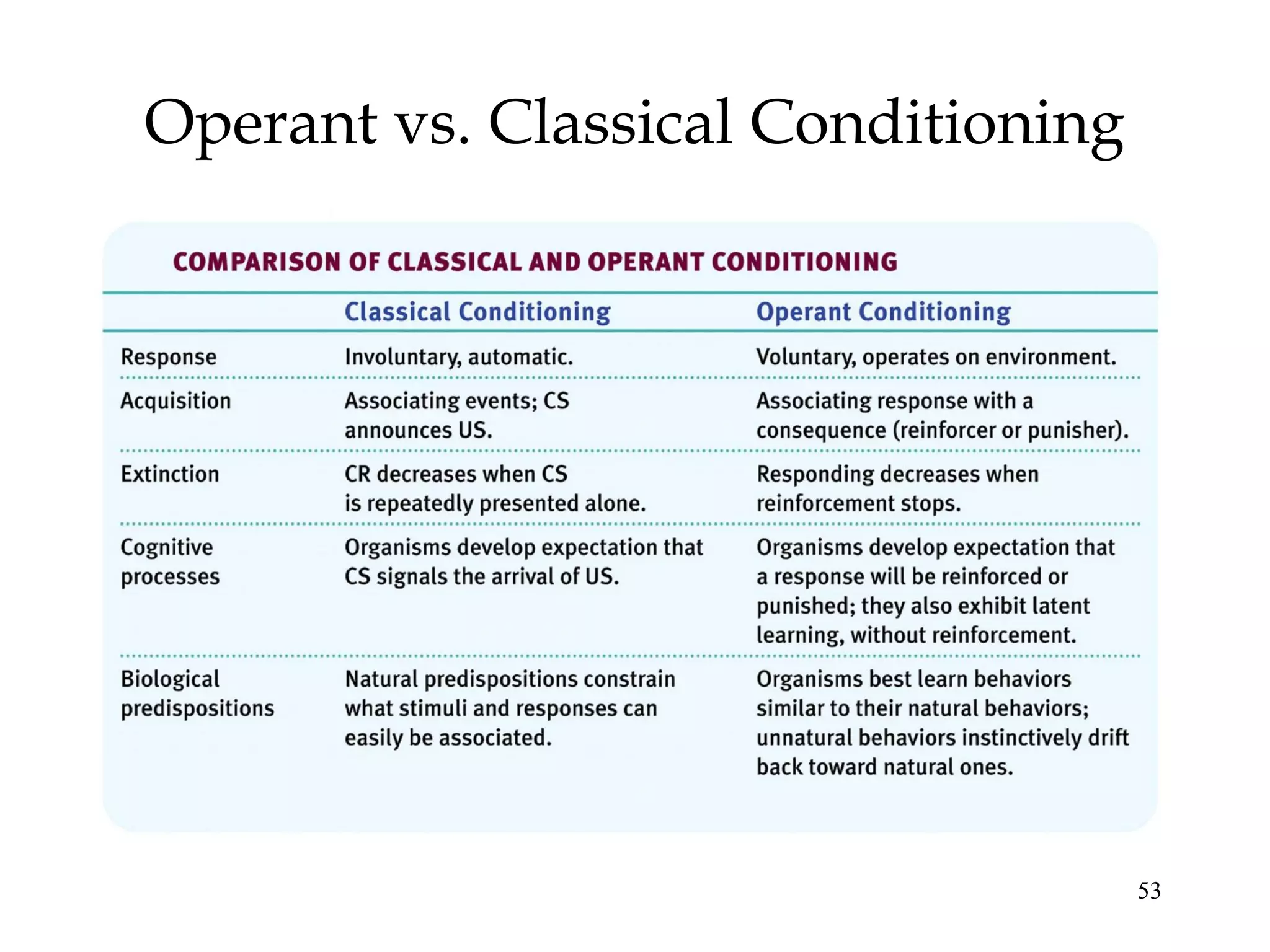

1) The document discusses classical and operant conditioning, summarizing key experiments and findings. It describes Ivan Pavlov's classical conditioning experiments with dogs and salivation.
2) It also summarizes B.F. Skinner's operant conditioning experiments using operant chambers and reinforcement schedules to shape animal behaviors.
3) The document notes extensions of classical and operant conditioning theories to incorporate cognitive processes and biological constraints on learning.