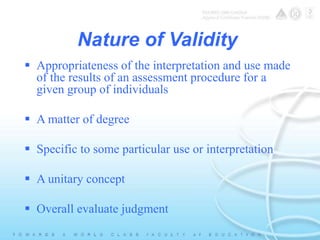

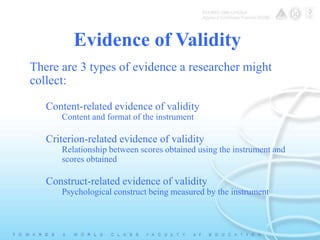

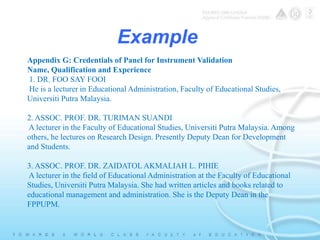

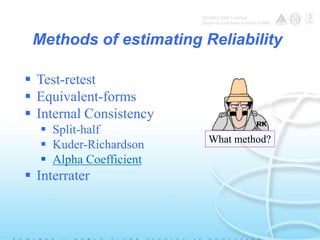

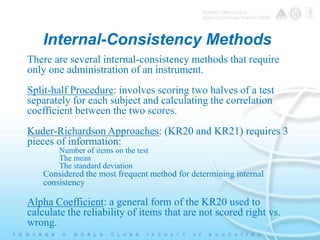

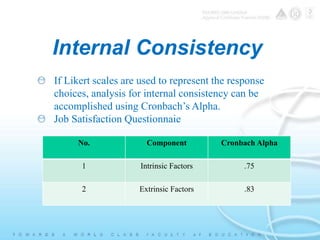

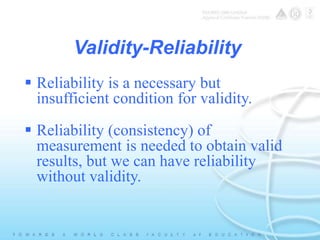

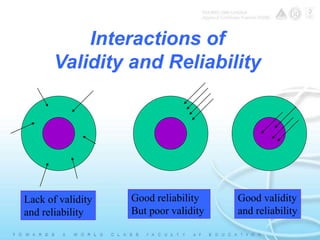

Validity refers to the appropriateness and usefulness of the interpretations drawn from assessment results, while reliability refers to the consistency of the measurements themselves. Evidence for validity comes in several types, including content, criterion-related, and construct validity. Reliability can be estimated with methods such as test-retest, equivalent forms, and internal consistency. Ensuring that assessments are both valid and reliable is important for making fair and meaningful evaluations of students.
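
As a rough illustration of how two of these reliability estimates are commonly computed, the sketch below uses the Pearson correlation between two administrations of the same test as a test-retest estimate, and Cronbach's alpha as one common internal-consistency estimate. The summary above does not name specific statistics, so both choices are assumptions, and the function names and the score data are hypothetical, made up only for the example.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(rows):
    """Cronbach's alpha for rows of item scores (one row per student).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])  # number of items on the test
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical data: the same five students tested twice (test-retest).
    first_sitting = [72, 85, 90, 64, 78]
    second_sitting = [70, 88, 91, 60, 80]
    print("test-retest r:", round(pearson_r(first_sitting, second_sitting), 3))

    # Hypothetical data: five students, four items each scored 0 to 5.
    item_scores = [
        [4, 5, 4, 5],
        [2, 3, 2, 2],
        [5, 5, 4, 4],
        [1, 2, 1, 2],
        [3, 3, 4, 3],
    ]
    print("Cronbach's alpha:", round(cronbach_alpha(item_scores), 3))
```

Values closer to 1 indicate more consistent measurement. An equivalent-forms estimate could reuse `pearson_r` by passing scores from two parallel forms of the test instead of two sittings of the same form.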