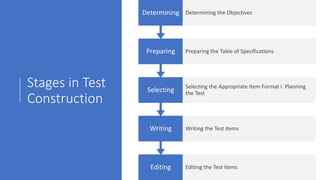

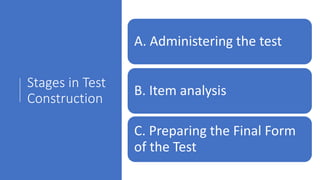

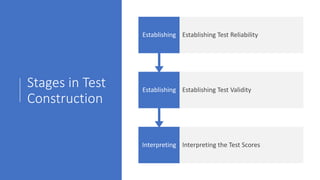

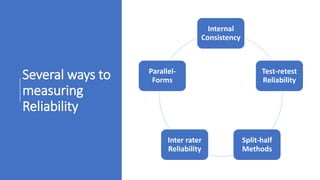

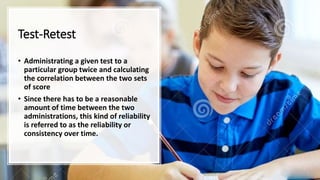

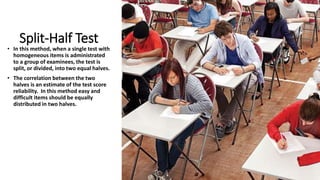

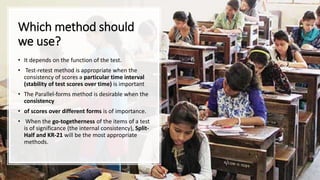

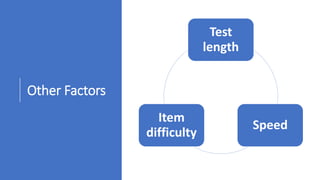

This document discusses the characteristics of a good test. It defines a test as an instrument used to observe and describe student characteristics, either numerically or through classification. A good test should demonstrate validity, reliability, practicality, administrability, comprehensiveness, objectivity, simplicity, and scorability. Validity means that a test measures what it is intended to measure; its types include content, criterion-related, construct, and face validity. Reliability means that a test produces consistent results, and it can be estimated with methods such as test-retest, parallel forms, and split-half. Other characteristics include being practical to administer and score, comprehensive in its coverage of the subject matter, and objective in its scoring.
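
To make the reliability methods named above more concrete, here is a minimal Python sketch of how test-retest and split-half estimates are commonly computed. Several things are assumptions that the document does not state: the function names are hypothetical, the test is split into odd-numbered and even-numbered items, the Pearson correlation is the consistency measure, and the Spearman-Brown formula steps the half-test correlation up to full length. The scores in the usage note are made up for illustration.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / (var_x * var_y) ** 0.5


def retest_reliability(first_scores, second_scores):
    """Test-retest: correlate the same students' totals from two sittings."""
    return pearson_r(first_scores, second_scores)


def split_half_reliability(item_scores):
    """Split-half estimate from a students-by-items score matrix.

    Assumption: an odd/even item split, followed by the Spearman-Brown
    step-up 2r / (1 + r) to estimate full-length reliability.
    """
    odd_half = [sum(row[0::2]) for row in item_scores]   # items 1, 3, 5, ...
    even_half = [sum(row[1::2]) for row in item_scores]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_half, even_half)
    return 2 * r_half / (1 + r_half)
```

As a usage example, `split_half_reliability([[1, 1, 1, 1], [1, 0, 1, 0], [0, 0, 0, 0]])` scores three hypothetical students on four right/wrong items. Parallel-forms reliability can be estimated the same way as test-retest, by passing the totals from the two forms to `pearson_r`.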