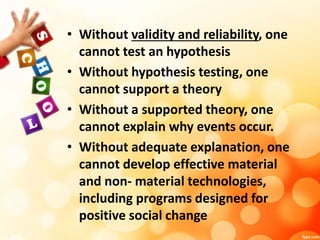

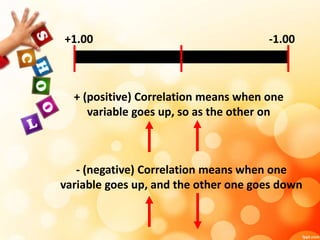

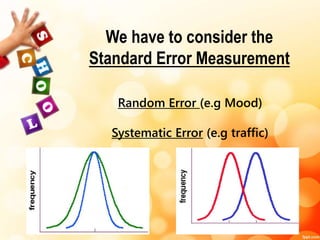

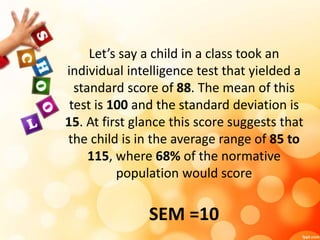

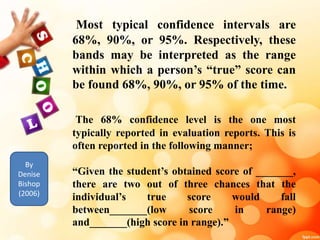

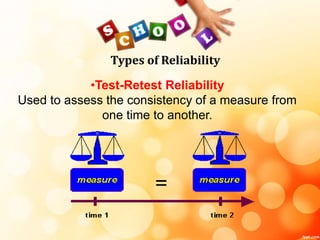

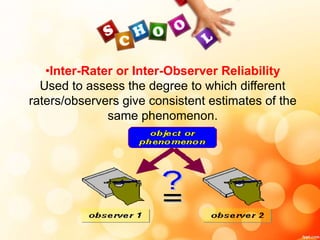

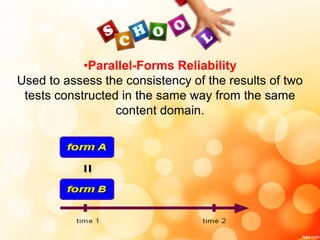

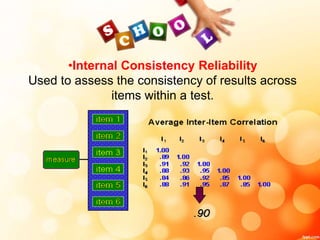

This document discusses why reliability and validity matter in testing. Reliability is the consistency of a test's results; the types covered are test-retest, inter-rater, parallel-forms, and internal consistency reliability. Validity is the degree to which a test measures what it is intended to measure; the types covered are content, construct, criterion-related (concurrent and predictive), face, convergent, treatment, and social validity. The document also explains the standard error of measurement, which estimates how repeated measurements of the same person tend to be distributed around that person's true score.
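As a rough illustration of two of these ideas, the sketch below estimates test-retest reliability as the Pearson correlation between two administrations of the same test, and computes the standard error of measurement with the standard classical test theory formula, SEM = SD × √(1 − reliability). The function names and the numbers in the usage note are hypothetical and are not taken from the document.

```python
import math


def pearson_r(first, second):
    """Pearson correlation between two equally long lists of scores.

    Used here as a simple test-retest reliability estimate: the same
    people take the same test twice, and the two score lists are correlated.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two score lists of equal length (>= 2)")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / math.sqrt(var_a * var_b)


def standard_error_of_measurement(score_sd, reliability):
    """SEM = SD * sqrt(1 - reliability), with reliability in [0, 1]."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return score_sd * math.sqrt(1.0 - reliability)


def score_band(observed, sem, z=1.96):
    """Approximate band around an observed score (z=1.96 for about 95%)."""
    return observed - z * sem, observed + z * sem
```

For example, with a hypothetical score standard deviation of 15 and a reliability of 0.91, `standard_error_of_measurement(15, 0.91)` is approximately 4.5, so an observed score of 100 would carry a band of roughly 100 ± 8.8 at the 95% level. The higher the reliability, the smaller the SEM and the tighter the band around the observed score.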