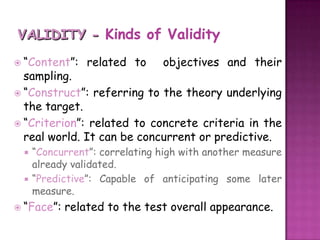

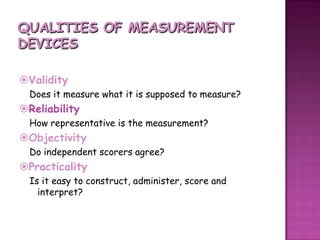

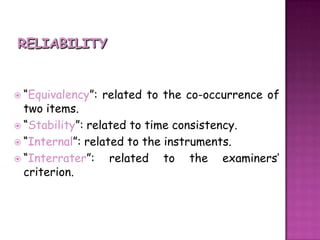

This document discusses key concepts of validity and reliability in measurement devices. It defines validity as the extent to which a device measures what it is intended to measure, and reliability as the consistency of its measurements. It outlines several types of validity: content, construct, criterion (concurrent and predictive), and face validity. It also discusses reliability in terms of equivalency, stability, internal consistency, and interrater reliability. Validity and reliability are closely related, but a test can be reliable without being valid. Finally, the document notes sources of measurement error and the backwash effect of test design on teaching.
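The reliability types above are usually quantified with standard coefficients. The source does not name these statistics; the choices here are assumptions:

- Pearson correlation between two administrations for stability (test-retest), or between two forms for equivalency.
- Cronbach's alpha for internal consistency.
- Cohen's kappa for agreement between two raters.

The sketch below implements these three coefficients on hypothetical data. The demo shows a scale with a constant bias: it gives a near-perfect test-retest correlation (reliable) while every reading is about 2 kg off (not valid).

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation; used here as a test-retest (stability) or
    parallel-forms (equivalency) reliability coefficient."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha for internal consistency.

    items: k lists (one per test item), each holding the scores of the
    same n respondents. alpha = k/(k-1) * (1 - sum(item variances) / variance(totals)).
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance.
    Assumes the raters do not both use a single category (expected agreement < 1)."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    categories = set(rater_a) | set(rater_b)
    expected = sum((rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories)
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical data: a scale that always reads about 2 kg too heavy.
    true_kg = [50, 62, 71, 80, 95]
    day1 = [w + 2.0 for w in true_kg]
    day2 = [w + 2.0 + e for w, e in zip(true_kg, [0.1, -0.2, 0.0, 0.1, -0.1])]
    print("test-retest r:", round(pearson_r(day1, day2), 3))  # close to 1: reliable
    print("mean bias (kg):", round(mean(day1) - mean(true_kg), 2))  # about 2: not valid
```

A high reliability coefficient says nothing about bias. Checking validity requires comparing readings against the intended quantity, as the `mean bias` line does.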