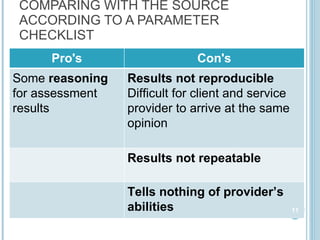

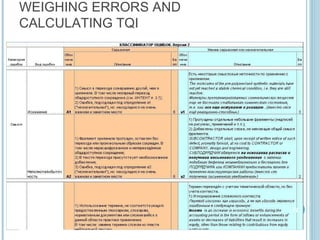

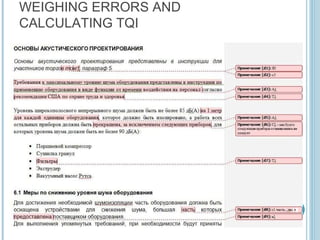

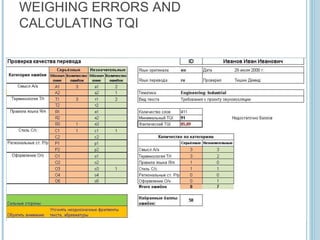

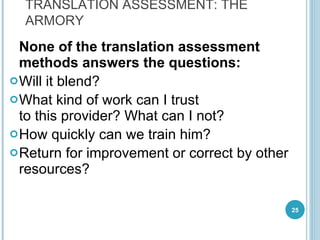

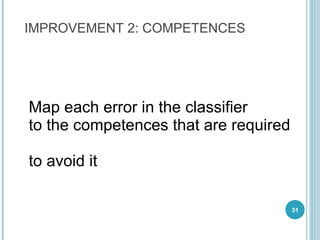

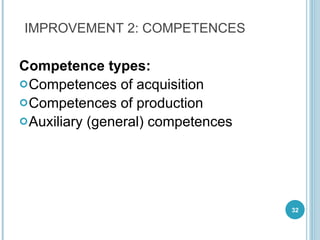

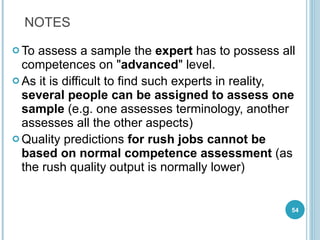

The document proposes improving translation quality assessment by redefining it in terms of competencies and suitability. It suggests assessing a translation along two error dimensions: factual errors and connotative errors. Each error would be mapped to the competency it reveals as lacking and to the workflow role responsible for it. The assessment would then show which competencies a provider has demonstrated and which require further training. It would also measure what share of the errors can be corrected easily, which helps decide whether to return the translation to the provider for revision or to bring in other resources. The model aims to give clear, objective quality feedback that helps clients and providers communicate effectively and optimize the translation process.
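The assessment flow described above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not the author's method. The `TranslationError` record, its field names, the competency and role labels, and the `return_threshold` of 0.8 are all hypothetical. The sketch counts errors per dimension and per workflow role, treats any competency with at least one error as needing training, and compares the share of easily correctable errors with the threshold to suggest a next step.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class TranslationError:
    # Hypothetical record for one error found during assessment.
    dimension: str            # "factual" or "connotative"
    competency: str           # competency the error points to (illustrative labels)
    workflow_role: str        # workflow role responsible for the error
    easily_correctable: bool  # can the provider fix it with little effort?


def assess(errors, required_competencies, return_threshold=0.8):
    """Summarize errors by dimension, role and competency, and suggest a next step.

    return_threshold is a hypothetical cut-off, not taken from the source.
    """
    by_dimension = Counter(e.dimension for e in errors)
    by_role = Counter(e.workflow_role for e in errors)
    # Competencies with at least one error are flagged for training;
    # required competencies with no errors count as demonstrated.
    needs_training = {e.competency for e in errors}
    demonstrated = set(required_competencies) - needs_training

    if not errors:
        easy_share, action = 1.0, "no revision needed"
    else:
        # Share of errors the provider could fix easily (True counts as 1).
        easy_share = sum(e.easily_correctable for e in errors) / len(errors)
        if easy_share >= return_threshold:
            action = "return to provider for revision"
        else:
            action = "use other resources"

    return {
        "by_dimension": dict(by_dimension),
        "by_role": dict(by_role),
        "needs_training": sorted(needs_training),
        "demonstrated": sorted(demonstrated),
        "easy_share": easy_share,
        "action": action,
    }


# Example run with made-up errors.
errors = [
    TranslationError("factual", "terminology", "translator", True),
    TranslationError("connotative", "target-language style", "editor", True),
    TranslationError("factual", "subject knowledge", "translator", False),
]
report = assess(
    errors,
    {"terminology", "target-language style", "subject knowledge", "grammar"},
)
print(report["action"], round(report["easy_share"], 2))  # use other resources 0.67
```

In this made-up example only two of three errors are easy to fix, which falls below the assumed threshold, so the sketch suggests other resources rather than returning the translation. In practice the threshold and the competency list would come from the client's and provider's own agreement.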
