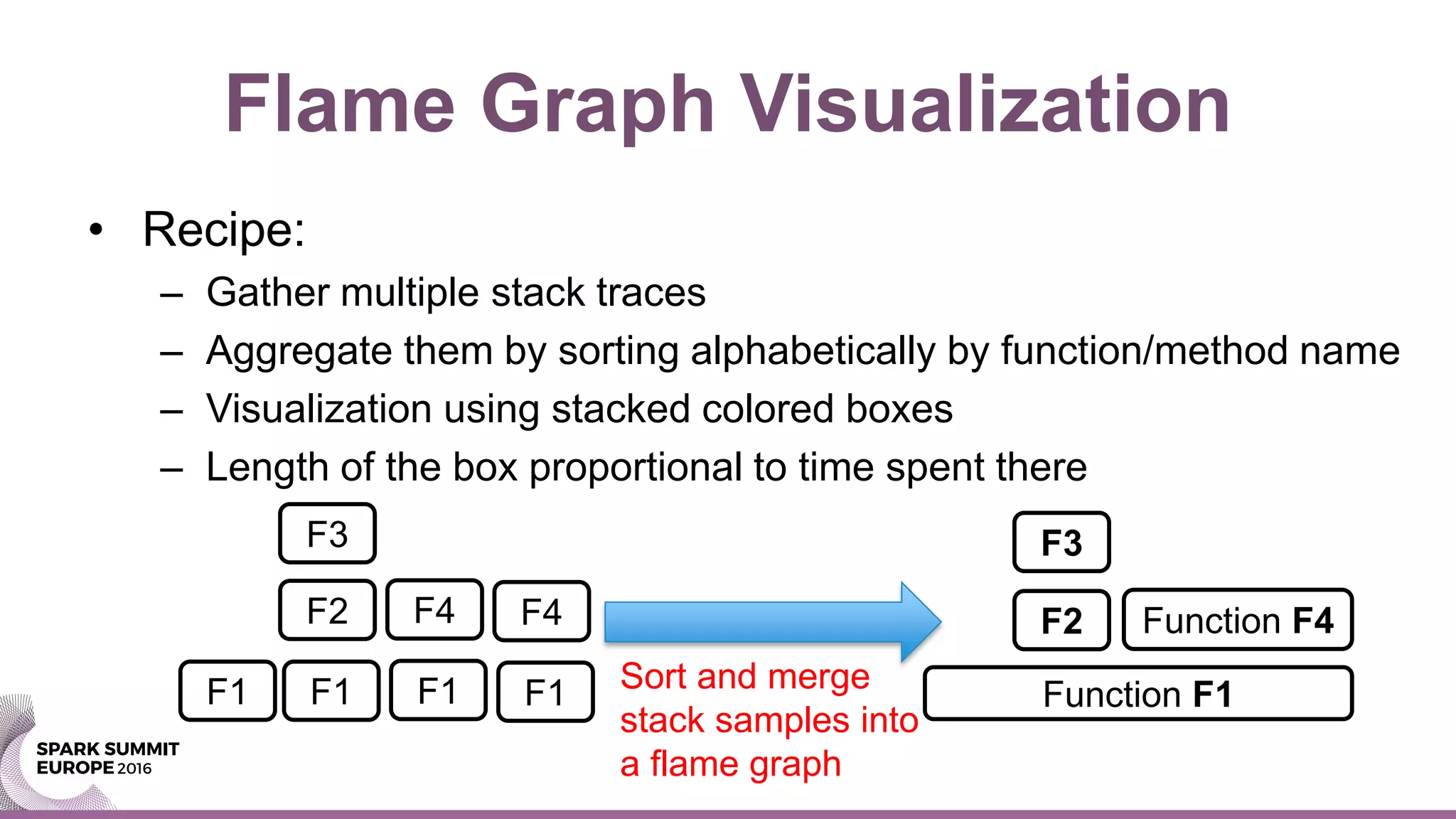

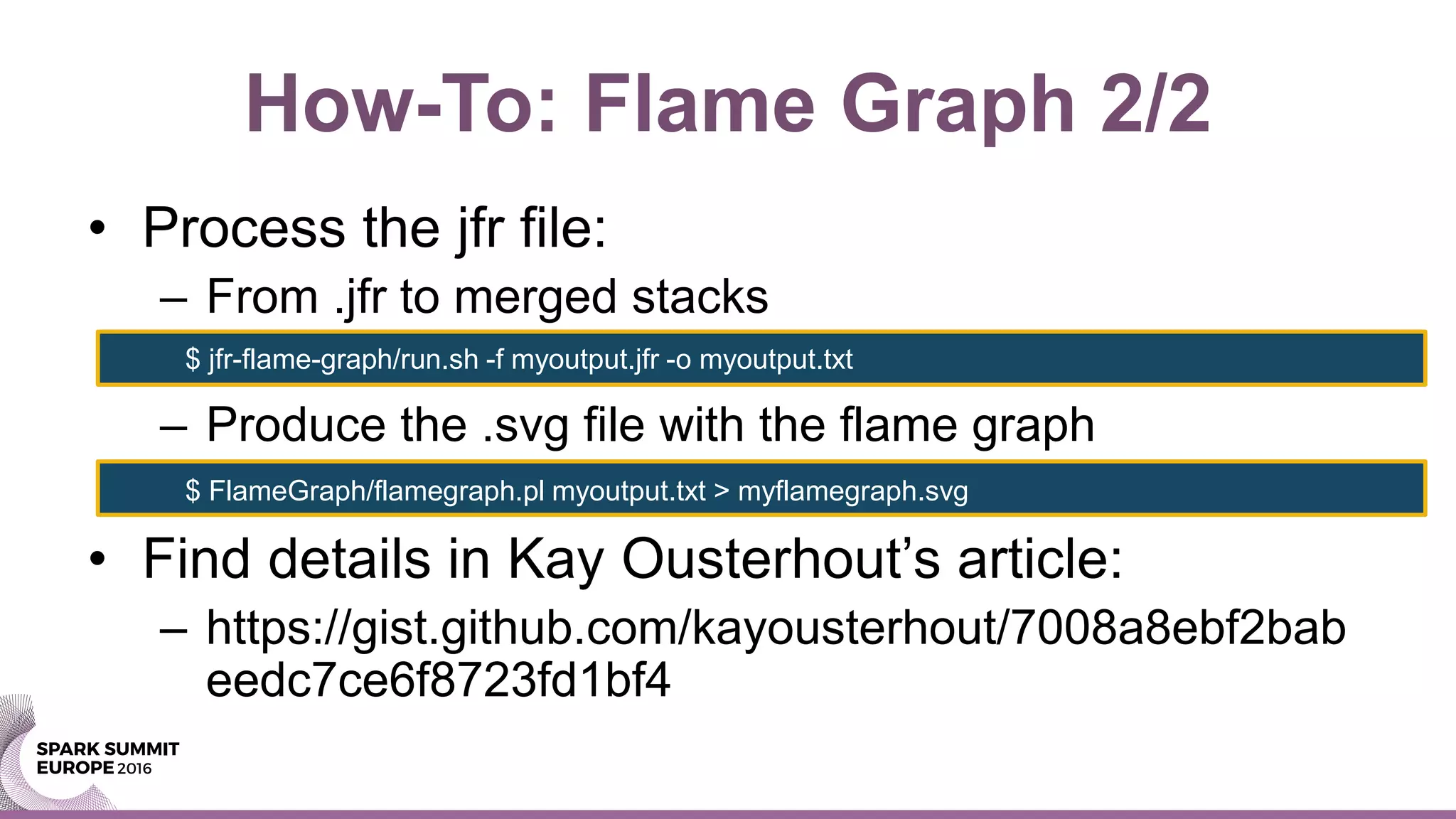

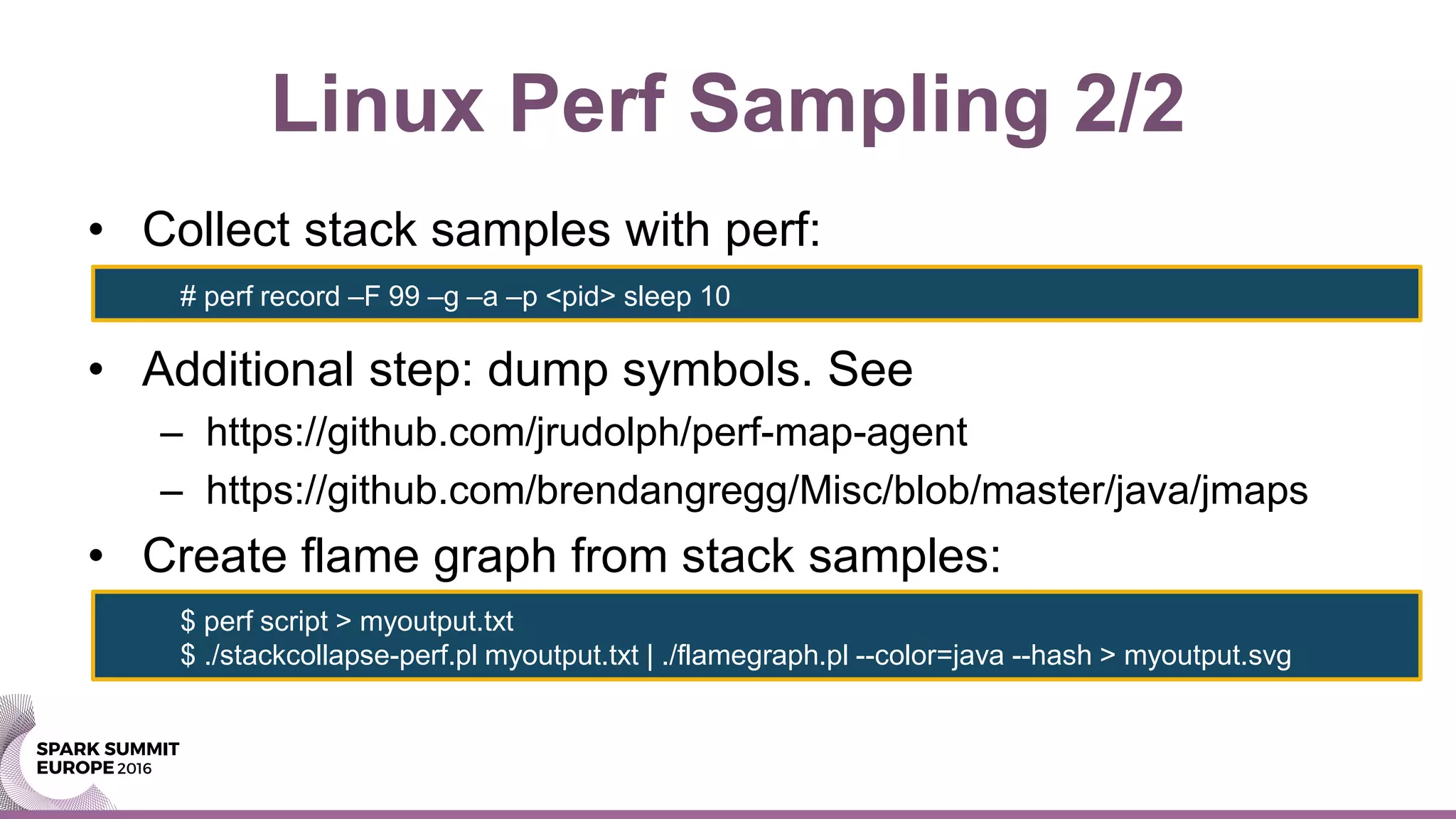

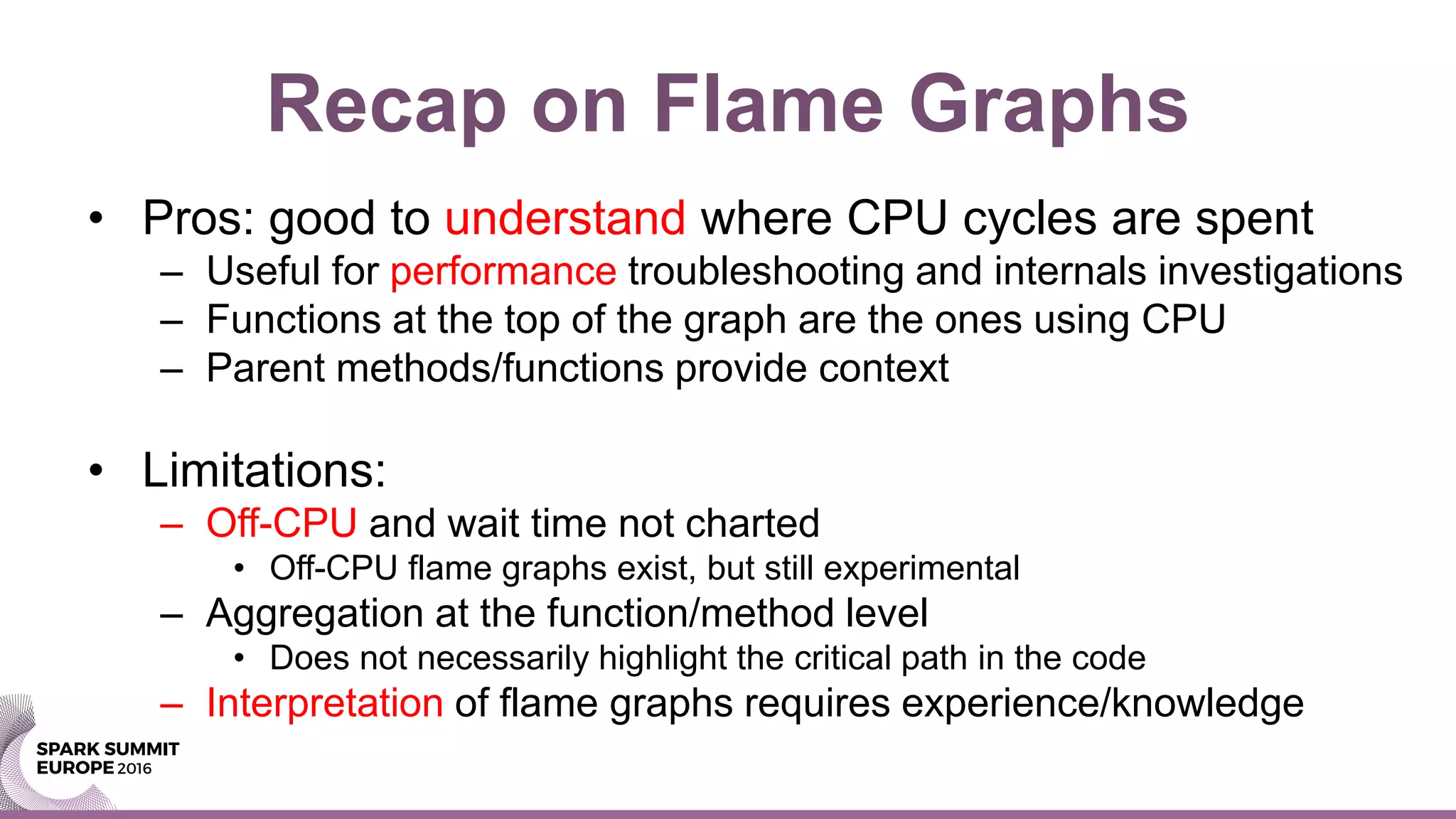

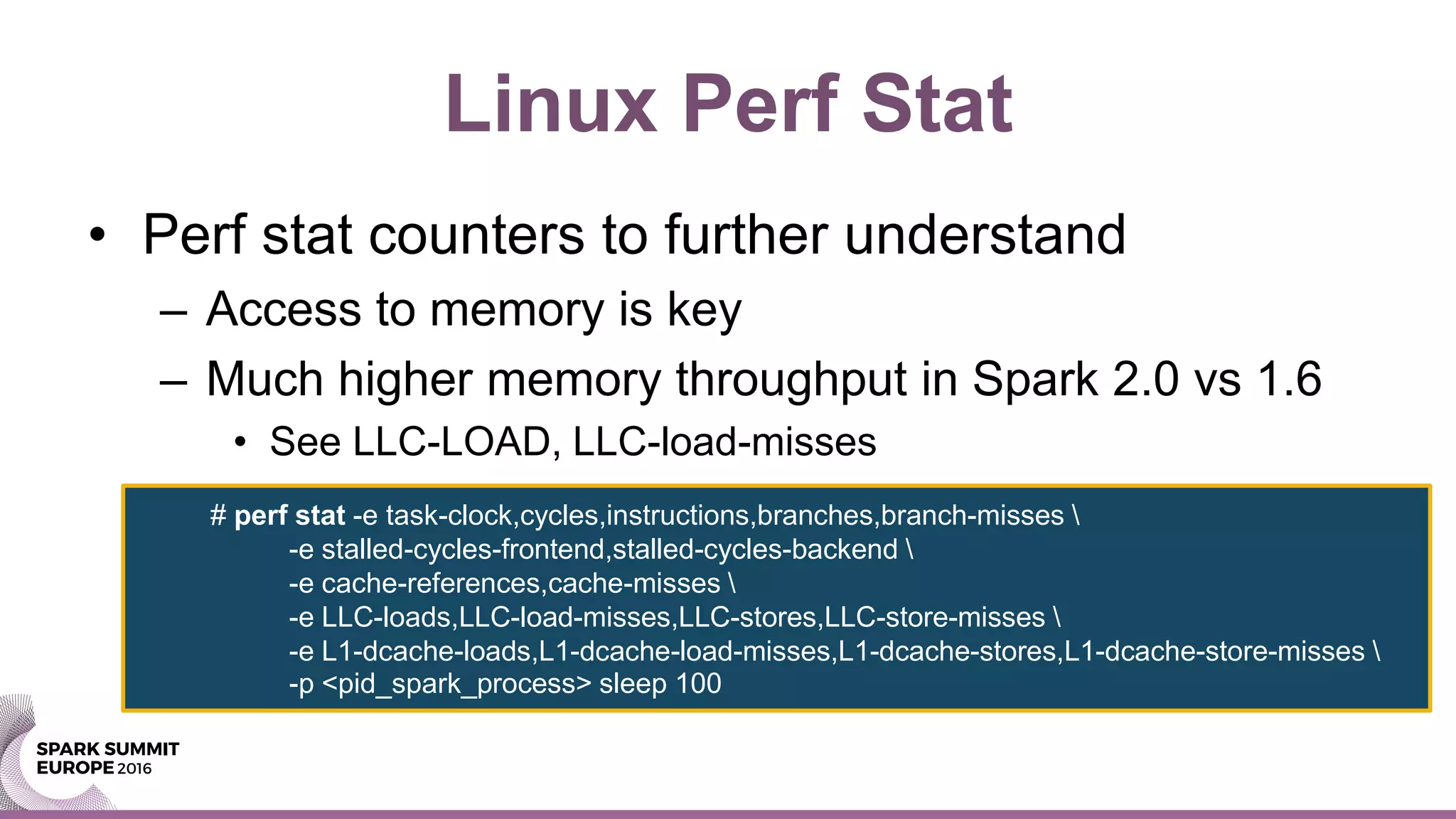

Luca Canali presented on using flame graphs to investigate the performance improvement of Spark 2.0 over Spark 1.6 for a CPU-intensive workload. Flame graphs of the two executions showed Spark 2.0 spending less time in core Spark functions and more time in functions produced by whole-stage code generation, which points to the new code generation path as the source of the improvement. Additional tools such as Linux perf confirmed that Spark 2.0 made better use of the CPU and of memory throughput. The presentation demonstrated how flame graphs and other profiling tools can help pinpoint performance bottlenecks and show the impact of changes such as Spark 2.0's code generation optimizations.
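The comparison described above can be illustrated with a minimal Python sketch. It is not taken from the presentation: it only shows the general idea behind a flame graph, which is to collapse sampled call stacks into "folded" lines (frames joined by `;` plus a sample count) and then see where the samples concentrate. The frame names, the sample data, and the helper names `fold_stacks` and `share_of_samples` are all hypothetical and chosen for illustration only.

```python
from collections import Counter


def fold_stacks(samples):
    """Collapse stack samples into folded counts.

    Each sample is a list of frame names ordered from root to leaf.
    The result maps "root;...;leaf" to the number of times it was seen.
    """
    folded = Counter()
    for frames in samples:
        folded[";".join(frames)] += 1
    return folded


def share_of_samples(folded, marker):
    """Return the fraction of samples with a frame whose name contains marker."""
    total = sum(folded.values())
    if total == 0:
        return 0.0
    hits = sum(
        count
        for stack, count in folded.items()
        if any(marker in frame for frame in stack.split(";"))
    )
    return hits / total


# Hypothetical samples standing in for a profile of the older engine.
samples_old = [
    ["Executor.run", "Task.run", "RowIterator.next", "Expression.eval"],
    ["Executor.run", "Task.run", "RowIterator.next", "Expression.eval"],
    ["Executor.run", "Task.run", "RowIterator.next", "Row.copy"],
    ["Executor.run", "Task.run", "Shuffle.write"],
]

# Hypothetical samples standing in for a profile with generated code.
samples_new = [
    ["Executor.run", "Task.run", "GeneratedIterator.processNext"],
    ["Executor.run", "Task.run", "GeneratedIterator.processNext"],
    ["Executor.run", "Task.run", "GeneratedIterator.processNext"],
    ["Executor.run", "Task.run", "Shuffle.write"],
]

if __name__ == "__main__":
    for label, samples in (("old", samples_old), ("new", samples_new)):
        folded = fold_stacks(samples)
        share = share_of_samples(folded, "GeneratedIterator")
        print(f"{label}: {share:.0%} of samples in generated code")
        # Print the folded lines, one "stack count" pair per line.
        for stack, count in sorted(folded.items()):
            print(f"  {stack} {count}")
```

With real profiles the samples would come from a profiler rather than a hard-coded list, and the folded lines would be rendered as a graph instead of printed, but the same kind of shift in where samples land is what the two flame graphs make visible.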