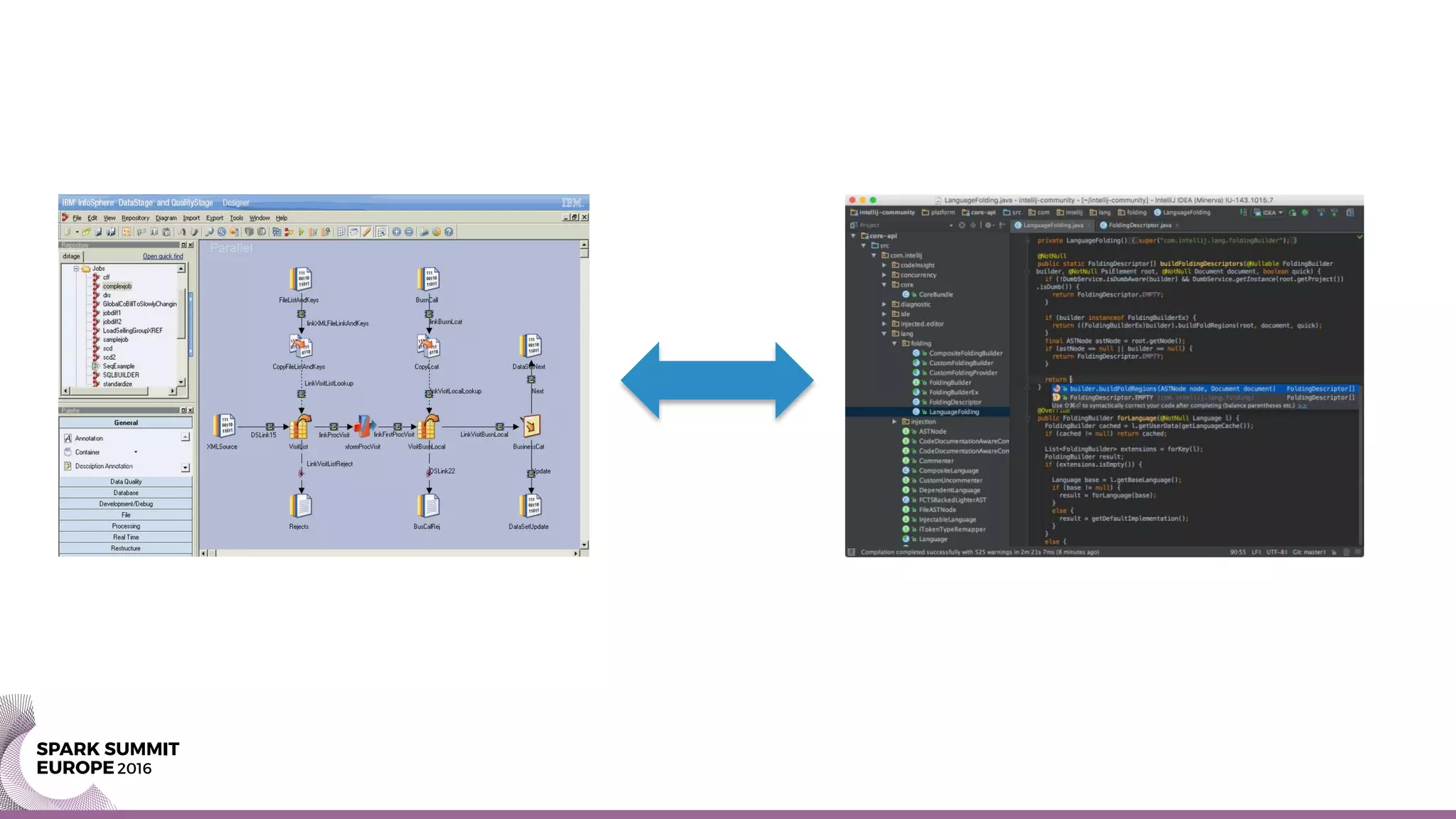

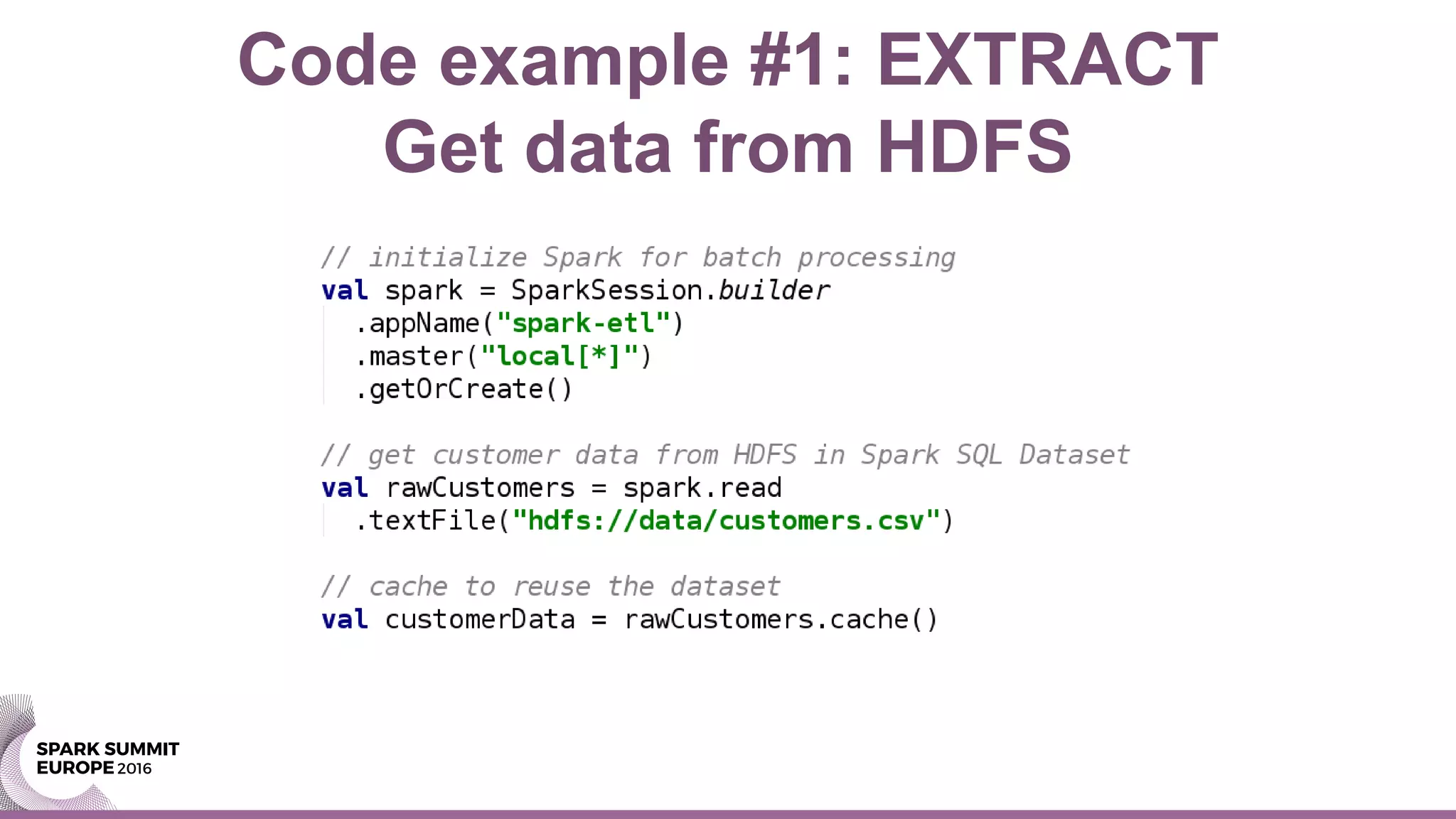

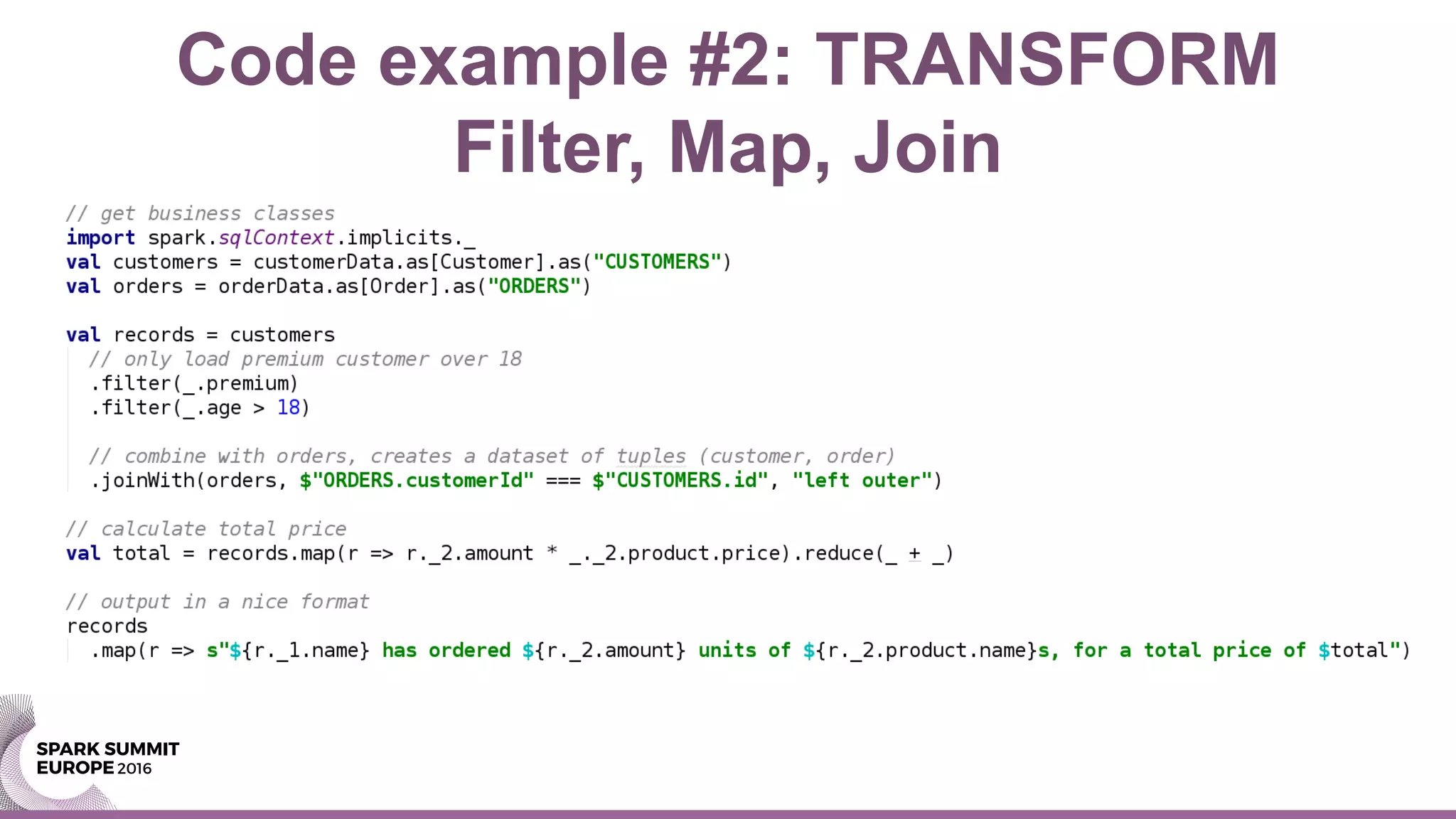

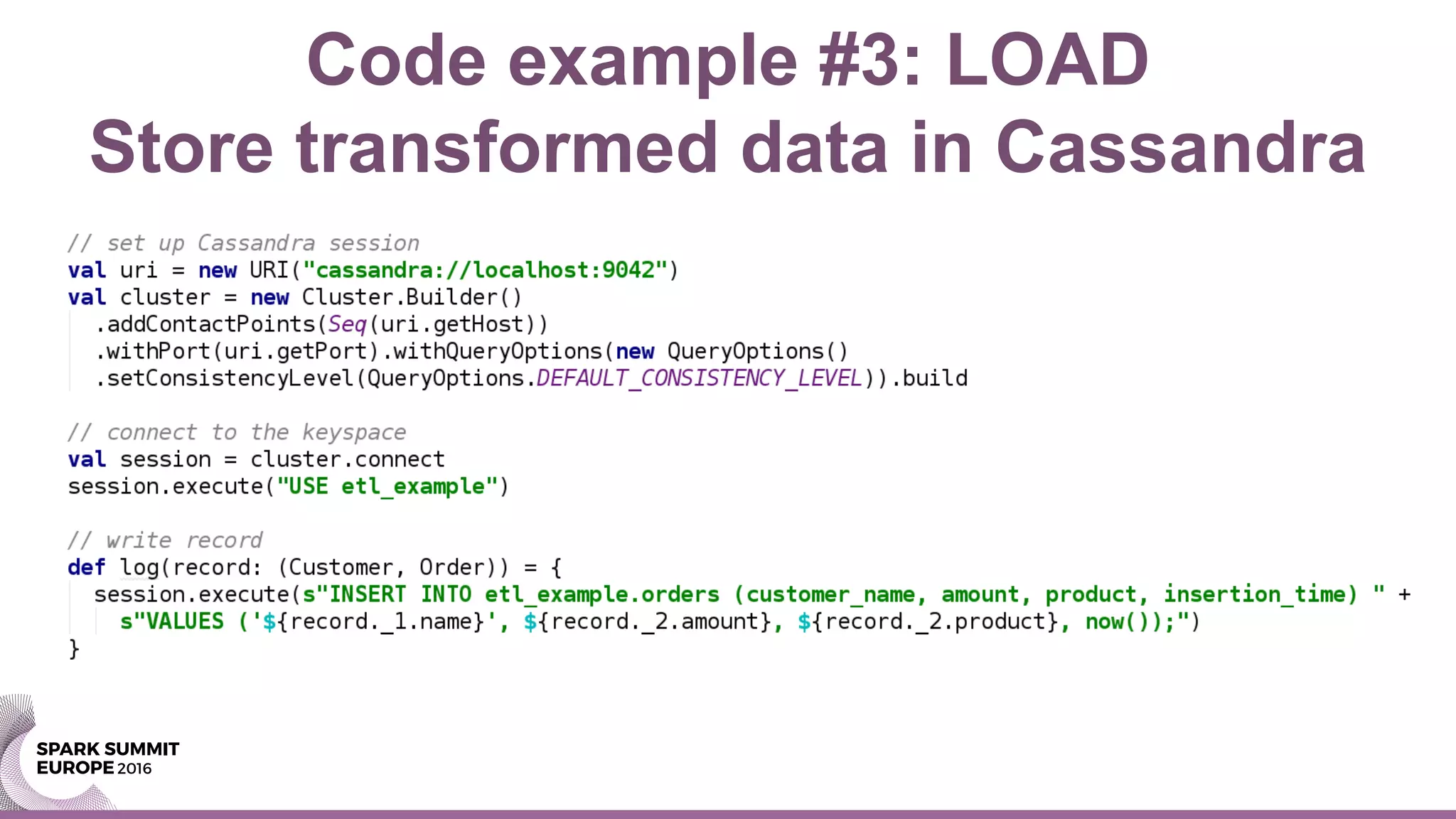

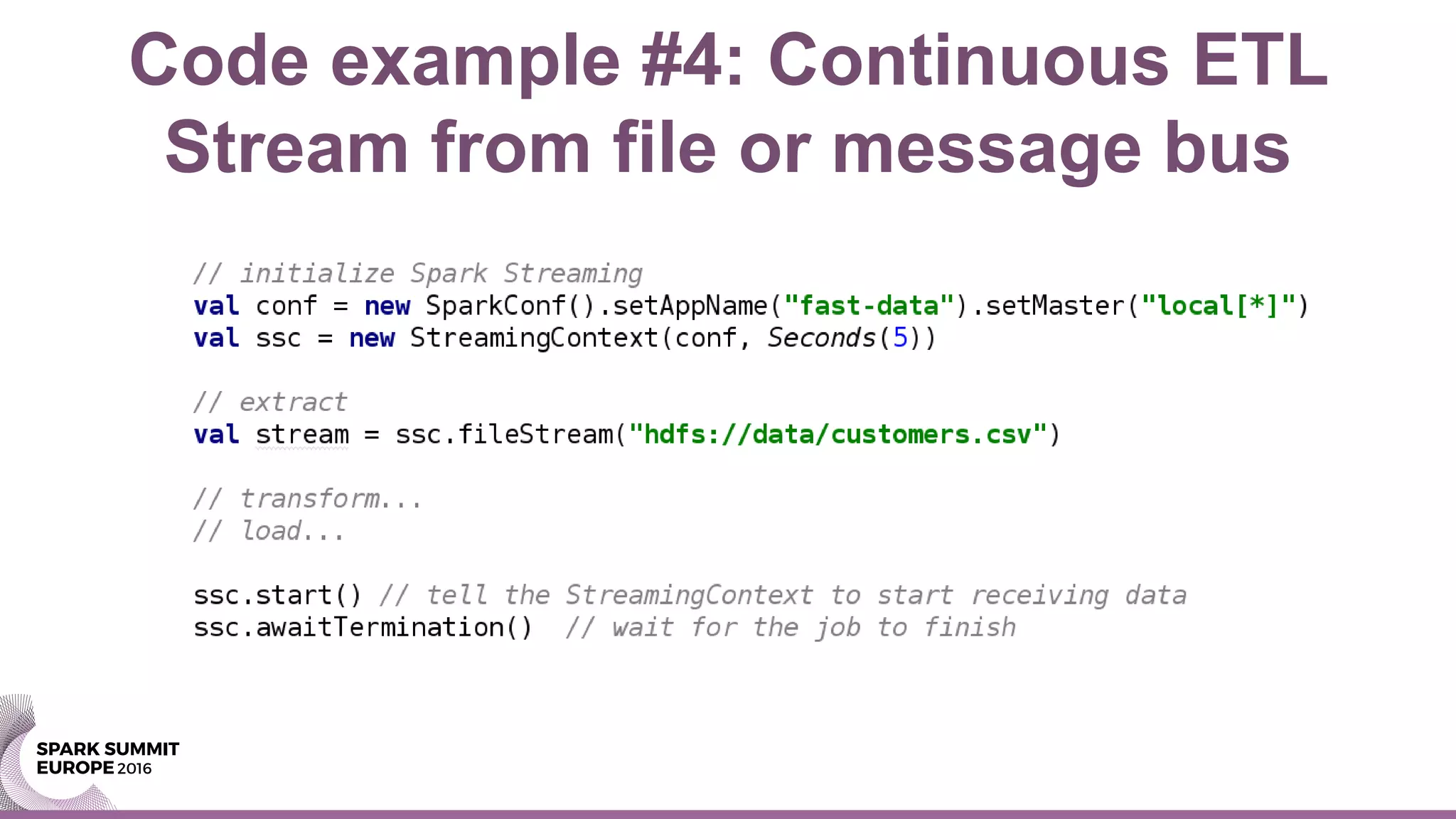

Replace traditional ETL processes with Spark. Spark processes data in parallel and can run on the Hadoop clusters where the data is typically already stored. It handles batch and streaming data through the same programming model, so processing can run continuously rather than waiting for each phase to finish. A single Spark program can extract, transform, and load data and also run machine learning tasks for data enrichment and prediction, which gives more flexibility than traditional ETL tools.