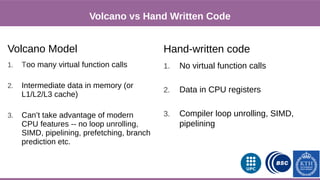

This document provides an overview of techniques to boost Spark performance, including:

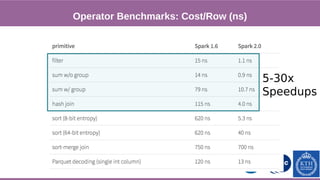

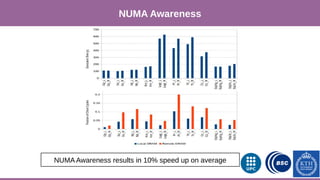

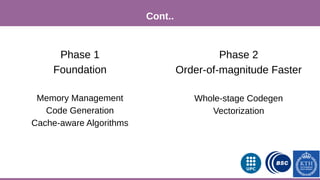

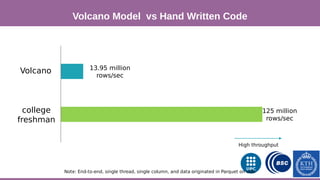

1) Phase 1 (Spark 1.4 to 1.6) focused on memory management, code generation, and cache-aware algorithms, which provided 5-30x speedups.
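A well-known cache-aware technique from this phase is sorting a compact array of (key prefix, record pointer) pairs instead of the records themselves. Most comparisons are then settled by the prefix stored inline, and the pointer is only followed when two prefixes tie. The minimal Python sketch below mirrors that logic. Python cannot show the CPU-cache effect itself. The 8-byte prefix length and the helper names `key_prefix` and `prefix_sort` are illustrative assumptions, not Spark's API.

```python
from functools import cmp_to_key

# Assumption: an 8-byte prefix, roughly one machine word stored inline.
PREFIX_LEN = 8


def key_prefix(key: bytes) -> bytes:
    """Truncate and zero-pad a key so prefix order never contradicts full-key order."""
    return key[:PREFIX_LEN].ljust(PREFIX_LEN, b"\x00")


def prefix_sort(records):
    """Sort (key, value) records via a compact array of (prefix, pointer) entries.

    Returns the sorted records and how many comparisons had to follow a pointer.
    """
    # Compact array: each prefix sits next to an index ("pointer") into `records`.
    entries = [(key_prefix(key), i) for i, (key, _) in enumerate(records)]
    full_compares = 0

    def compare(a, b):
        nonlocal full_compares
        if a[0] != b[0]:
            # Fast path: decided by data already in the entry array, no pointer chase.
            return -1 if a[0] < b[0] else 1
        # Slow path: prefixes tie, so follow the pointers and compare full keys.
        full_compares += 1
        key_a, key_b = records[a[1]][0], records[b[1]][0]
        return (key_a > key_b) - (key_a < key_b)

    entries.sort(key=cmp_to_key(compare))
    return [records[i] for _, i in entries], full_compares


records = [(b"executor-2", 2), (b"driver", 0), (b"executor-1", 1), (b"cache", 3)]
ordered, slow = prefix_sort(records)
print([key for key, _ in ordered])  # [b'cache', b'driver', b'executor-1', b'executor-2']
print(slow)  # comparisons that needed the full key
```

Only the two `executor-*` keys share the 8-byte prefix `executor`, so only comparisons between them fall through to the slow path.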

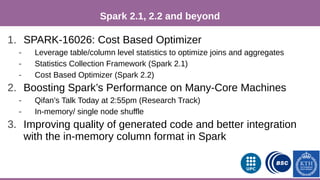

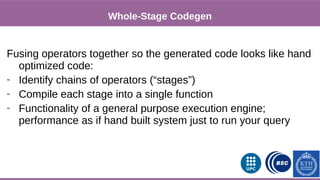

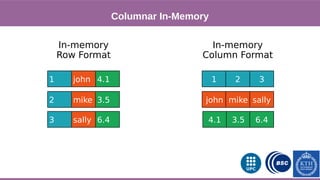

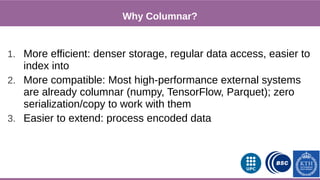

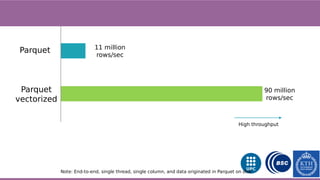

2) Phase 2 (Spark 2.0+) focused on whole-stage code generation and columnar in-memory support; whole-stage code generation and the vectorized Parquet reader are both enabled by default in Spark 2.0+.
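Whole-stage code generation collapses a chain of operators (scan, filter, project, aggregate) into one generated function with a single loop. This avoids passing each row through a separate iterator per operator. Spark generates and compiles Java source for this. The Python sketch below only imitates the idea: it contrasts an iterator-per-operator pipeline with a fused loop generated from a string template and compiled at runtime. The query (`sum(x * 2) WHERE x > 10`) and the function names are hypothetical.

```python
def volcano_sum(rows):
    """Interpreted pipeline: Scan -> Filter -> Project -> Sum as separate iterators."""
    scan = iter(rows)
    filtered = (x for x in scan if x > 10)   # Filter operator
    projected = (x * 2 for x in filtered)    # Project operator
    return sum(projected)                    # Aggregate operator


def generate_fused(predicate: str, projection: str):
    """Generate and compile one loop that fuses filter, project and sum.

    Only pass trusted expression strings: they are compiled and executed as code.
    """
    # All operators become straight-line code inside a single loop.
    source = (
        "def fused(rows):\n"
        "    total = 0\n"
        "    for x in rows:\n"
        f"        if {predicate}:\n"
        f"            total += {projection}\n"
        "    return total\n"
    )
    namespace = {}
    exec(compile(source, "<generated>", "exec"), namespace)
    return namespace["fused"], source


fused, source = generate_fused("x > 10", "x * 2")
rows = list(range(20))
print(source)
print(fused(rows), volcano_sum(rows))  # 270 270
```

Both plans return the same result. The fused version does its work in one loop, which is the property whole-stage codegen exploits, at the cost of a generation and compilation step.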

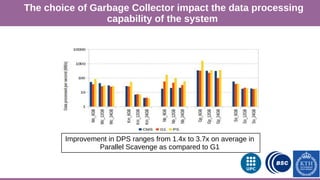

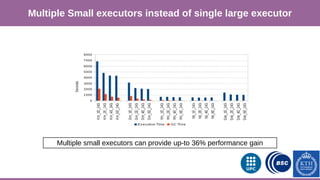

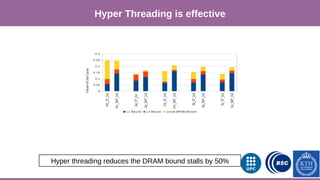

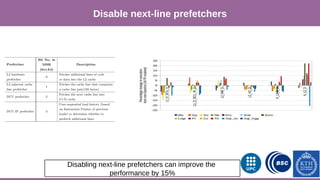

3) Additional techniques discussed include choosing an optimal garbage collector, using multiple small executors, exploiting data locality, disabling hardware prefetchers, and keeping hyper-threading on.
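The "multiple small executors" advice comes down to arithmetic on a node's cores and memory. The sketch below splits one node into equally sized executors and maps the result onto the standard `spark.executor.cores`, `spark.executor.memory` and `spark.executor.extraJavaOptions` settings. The node size (16 cores, 64 GB), the reserved 1 core and 1 GB for the OS, and the G1 collector flag are illustrative assumptions; the deck does not prescribe specific values. The helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ExecutorLayout:
    executors_per_node: int
    cores_per_executor: int
    memory_gb_per_executor: int

    def to_spark_conf(self, gc_flag: str = "-XX:+UseG1GC") -> dict:
        """Map the layout onto Spark executor settings (the GC flag is only an example)."""
        return {
            "spark.executor.cores": str(self.cores_per_executor),
            "spark.executor.memory": f"{self.memory_gb_per_executor}g",
            "spark.executor.extraJavaOptions": gc_flag,
        }


def split_node(node_cores: int, node_memory_gb: int, cores_per_executor: int,
               reserved_cores: int = 1, reserved_memory_gb: int = 1) -> ExecutorLayout:
    """Split one worker node into equally sized executors.

    Assumption: one core and 1 GB stay free for the OS and daemons; memory
    overhead outside the JVM heap is ignored for simplicity.
    """
    usable_cores = node_cores - reserved_cores
    usable_memory_gb = node_memory_gb - reserved_memory_gb
    executors = usable_cores // cores_per_executor
    if executors < 1:
        raise ValueError("cores_per_executor exceeds the usable cores on the node")
    return ExecutorLayout(executors, cores_per_executor, usable_memory_gb // executors)


one_big = split_node(16, 64, cores_per_executor=15)
several_small = split_node(16, 64, cores_per_executor=5)
print(one_big)        # ExecutorLayout(executors_per_node=1, cores_per_executor=15, memory_gb_per_executor=63)
print(several_small)  # ExecutorLayout(executors_per_node=3, cores_per_executor=5, memory_gb_per_executor=21)
print(several_small.to_spark_conf())
```

Smaller executors mean smaller heaps per JVM, which generally shortens garbage-collection pauses. That is why executor sizing and collector choice are usually tuned together.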

![Phase 1 (Spark 1.4 to 1.6): memory management, code generation, cache-aware algorithms. Phase 2 (Spark 2.0+): whole-stage code generation, columnar in-memory support. Both whole-stage codegen [SPARK-12795] and the vectorized Parquet reader [SPARK-12992] are enabled by default in Spark 2.0+.](https://image.slidesharecdn.com/boostingsparkperformance-170223131918/85/Boosting-spark-performance-An-Overview-of-Techniques-23-320.jpg)
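The two Phase 2 features correspond to the Spark SQL settings below. Both already default to `true` in Spark 2.0+, so setting them explicitly mainly helps when benchmarking, for example switching one to `false` to measure its effect. This is a minimal `spark-defaults.conf` sketch; the same keys can also be passed with `--conf` on `spark-submit`.

```properties
# Whole-stage code generation (SPARK-12795); defaults to true in Spark 2.0+
spark.sql.codegen.wholeStage               true
# Vectorized Parquet reader (SPARK-12992); defaults to true in Spark 2.0+
spark.sql.parquet.enableVectorizedReader   true
```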