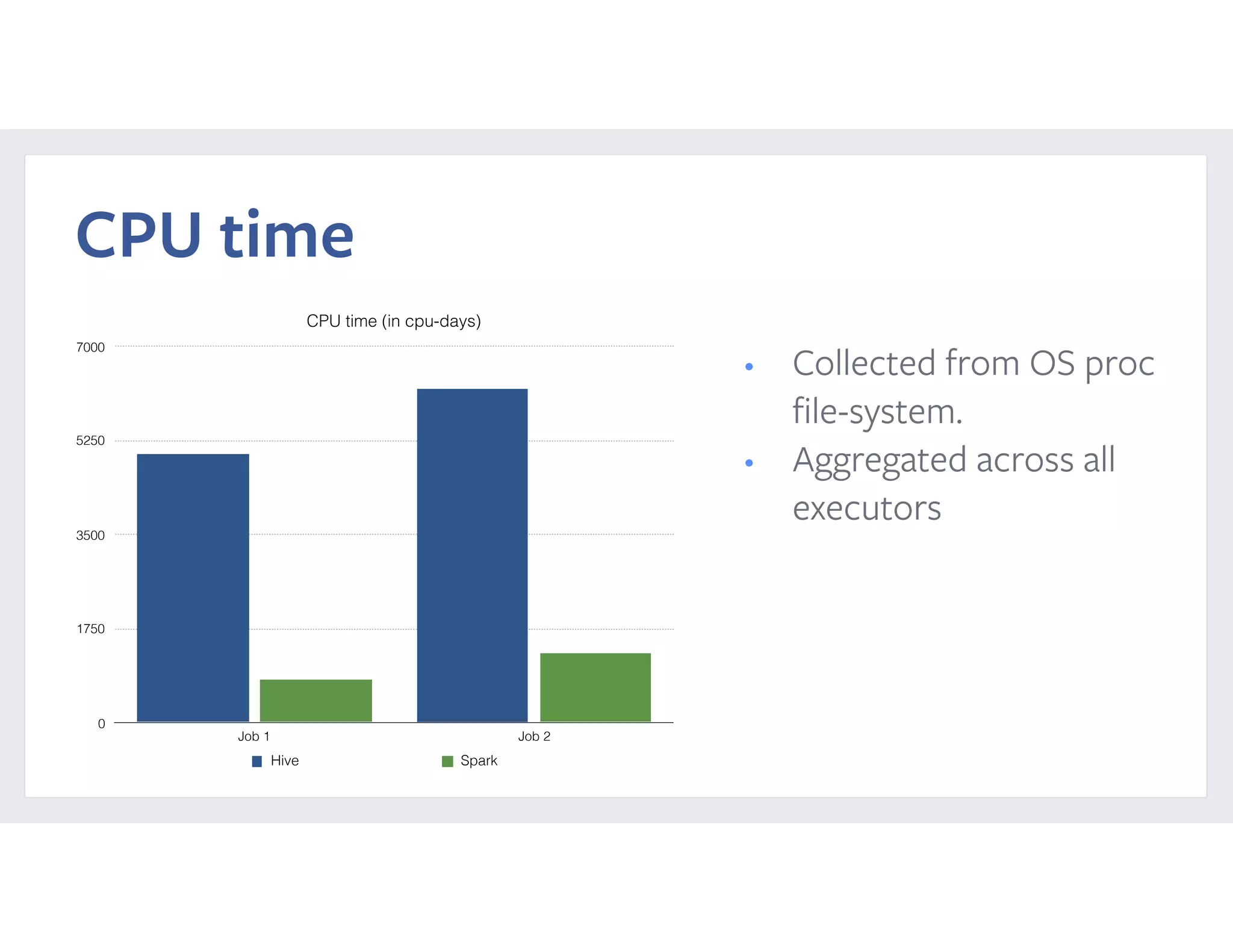

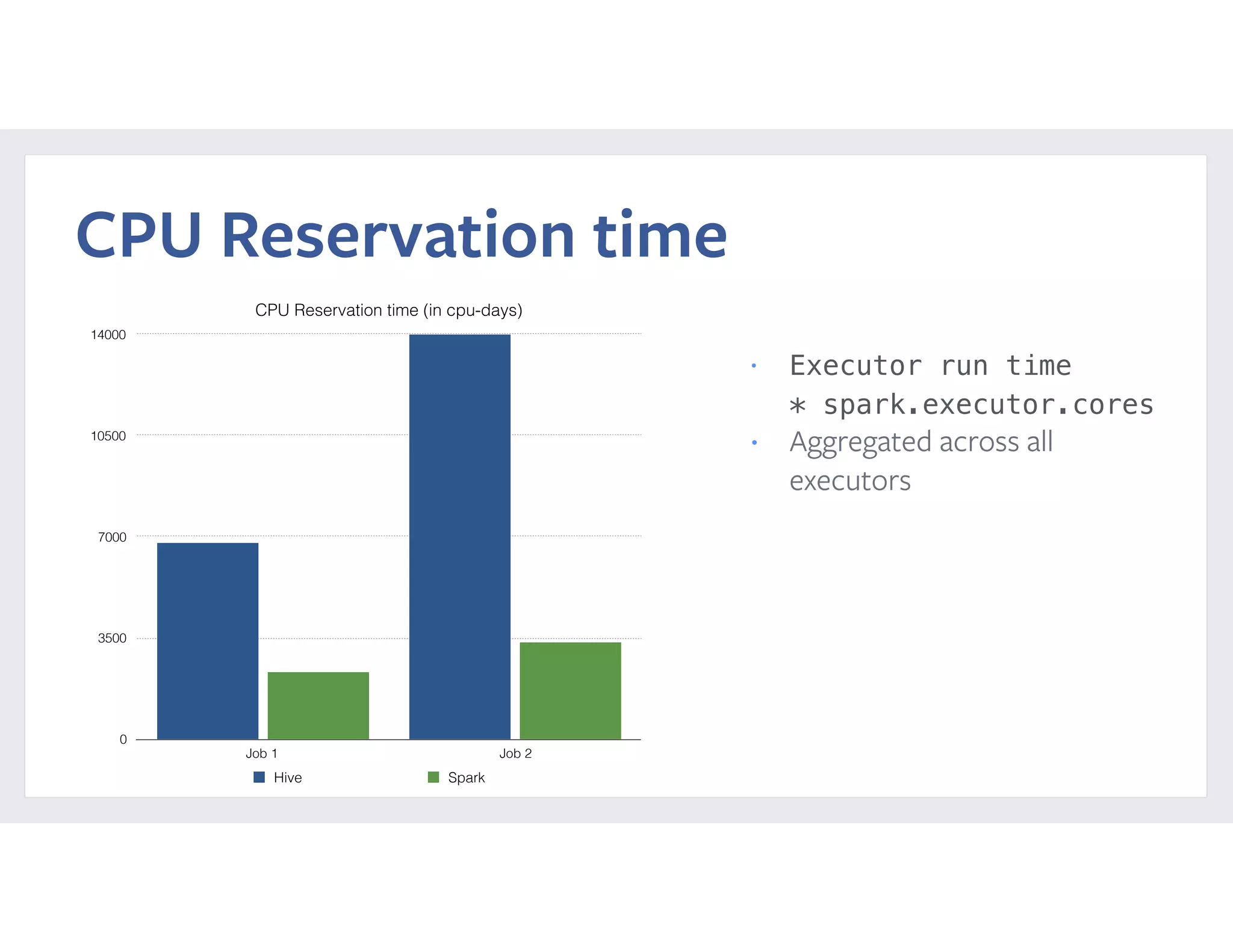

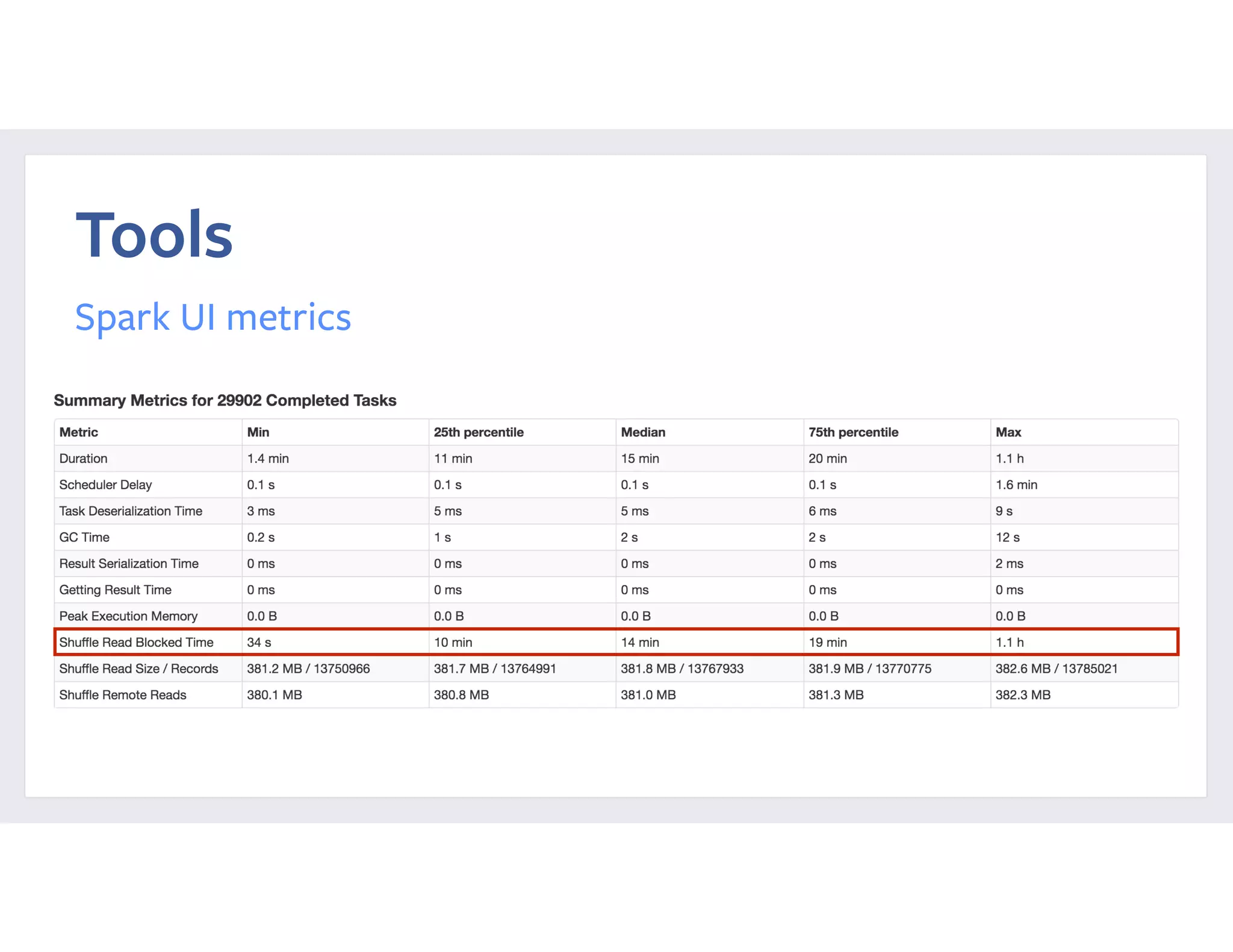

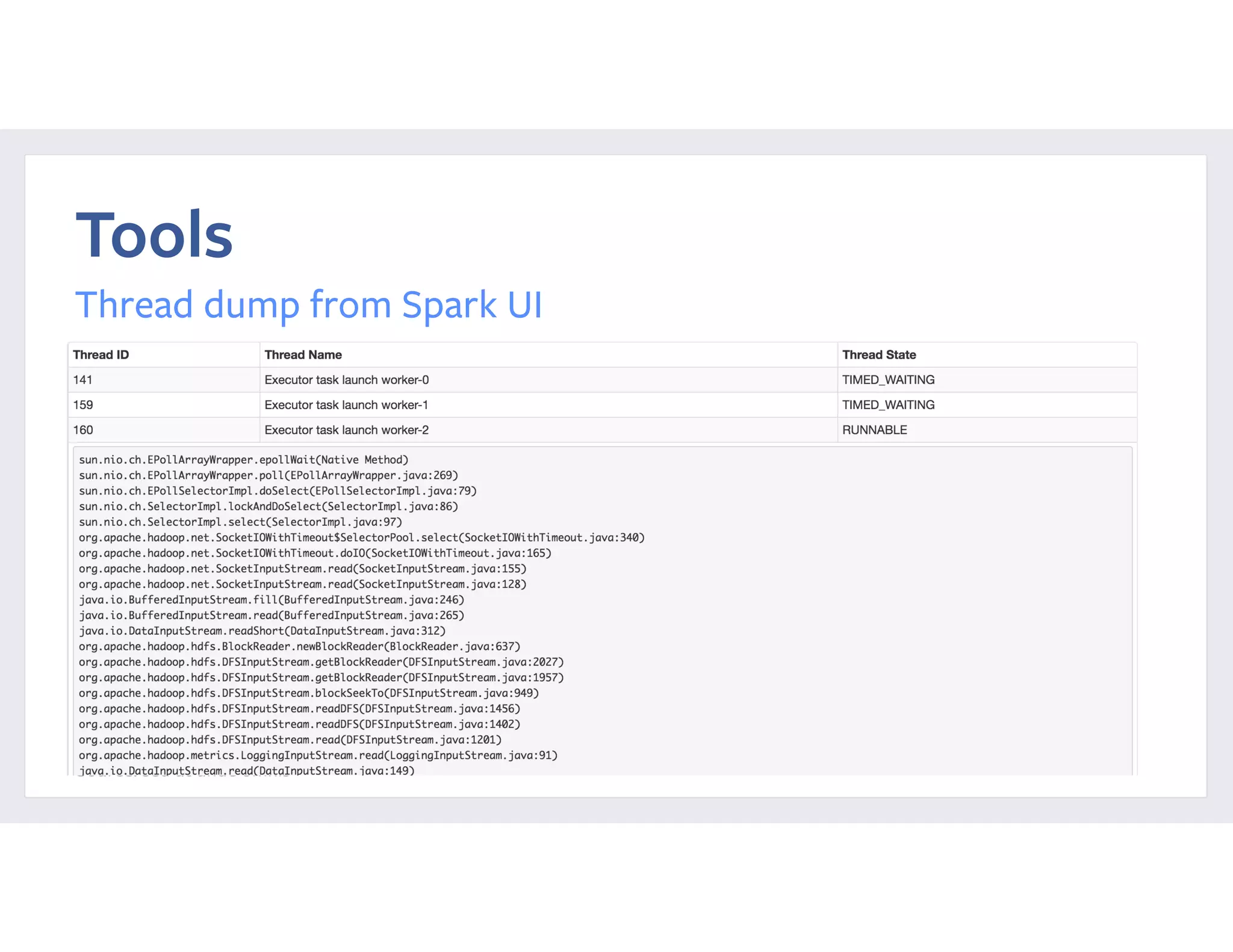

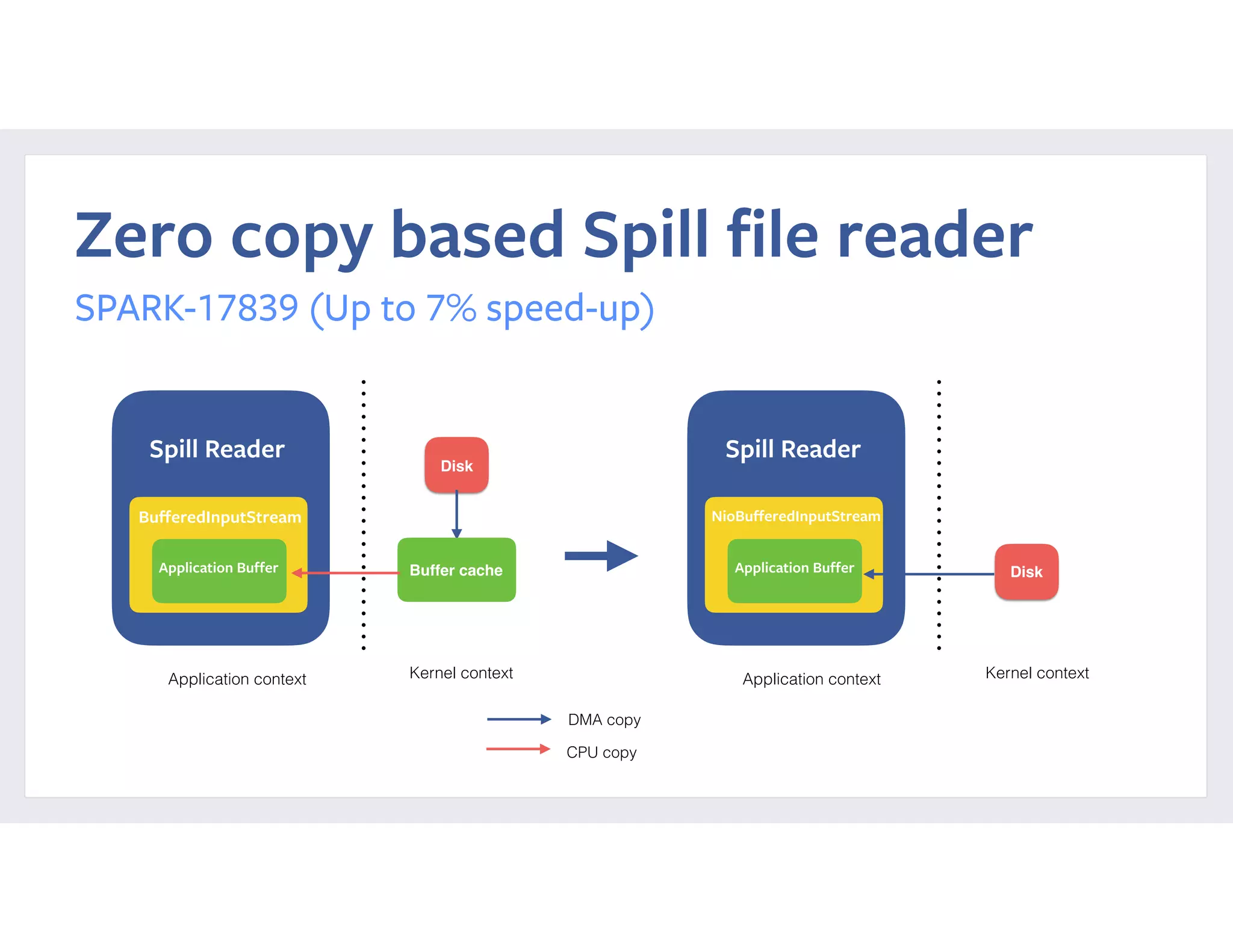

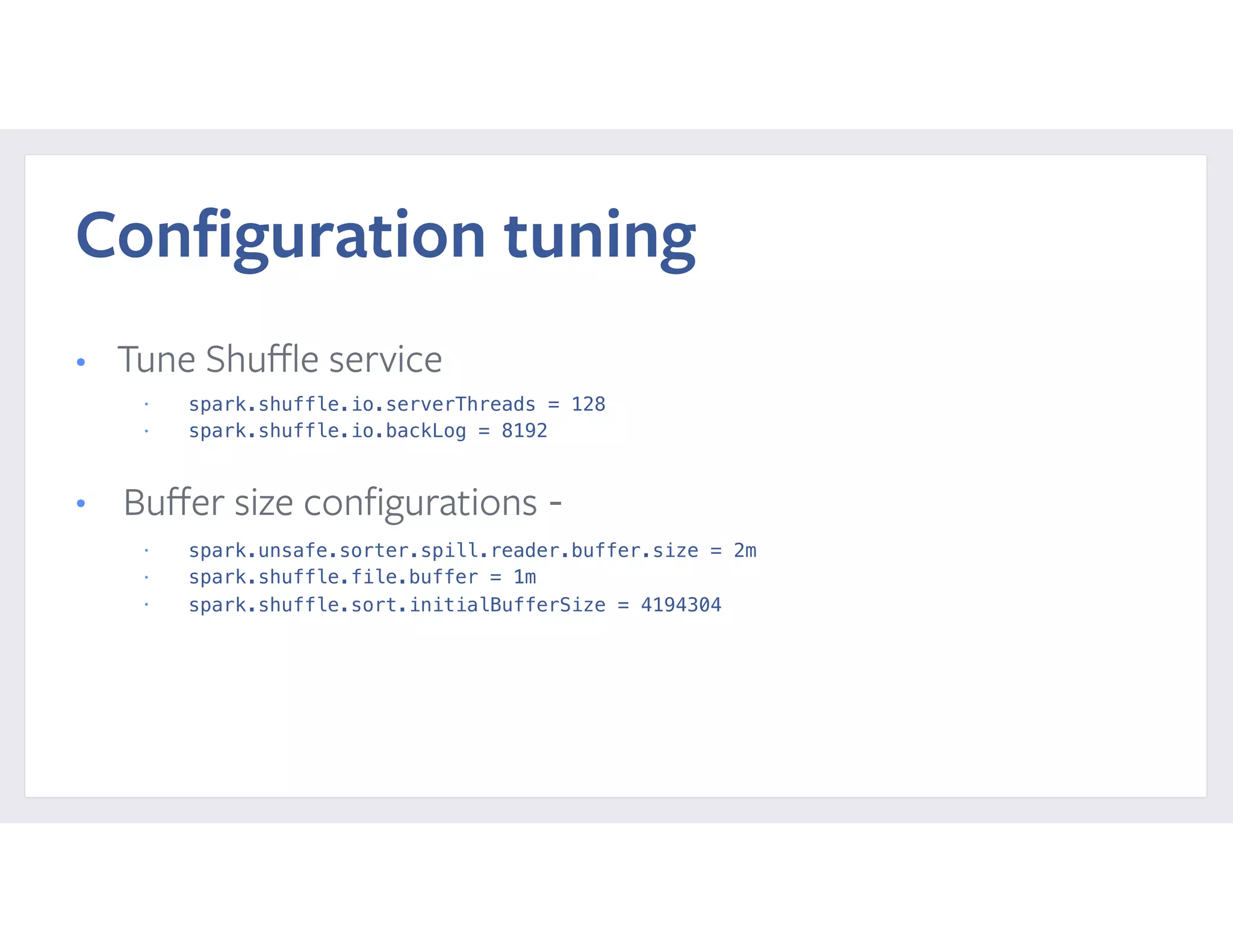

This document summarizes a case study of running Apache Spark at scale at Facebook to process more than 60 TB of data. The previous implementation used Hive and was split into many small jobs, which made it slow and hard to manage. The Spark implementation that replaced it processes the entire dataset in a single job with two stages and shuffles more than 90 TB of intermediate data. The case study compares the two implementations and reports significant reductions in CPU time, latency, and resource usage. It also details the reliability and performance improvements made to Spark itself, such as fixing memory leaks, enabling seamless cluster restarts, and reducing shuffle write latency, and it offers configuration tuning tips for optimizing memory usage and shuffle processing.