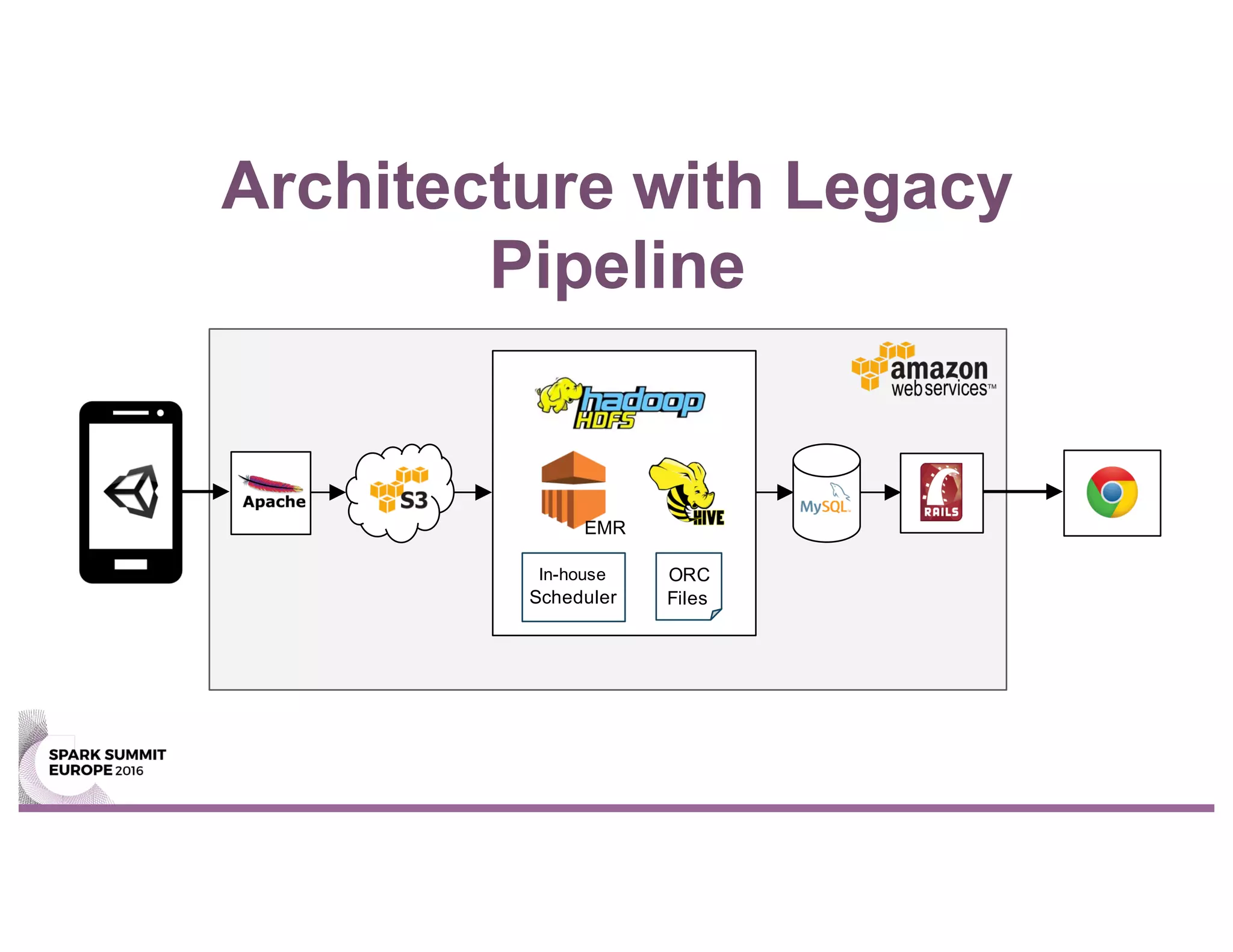

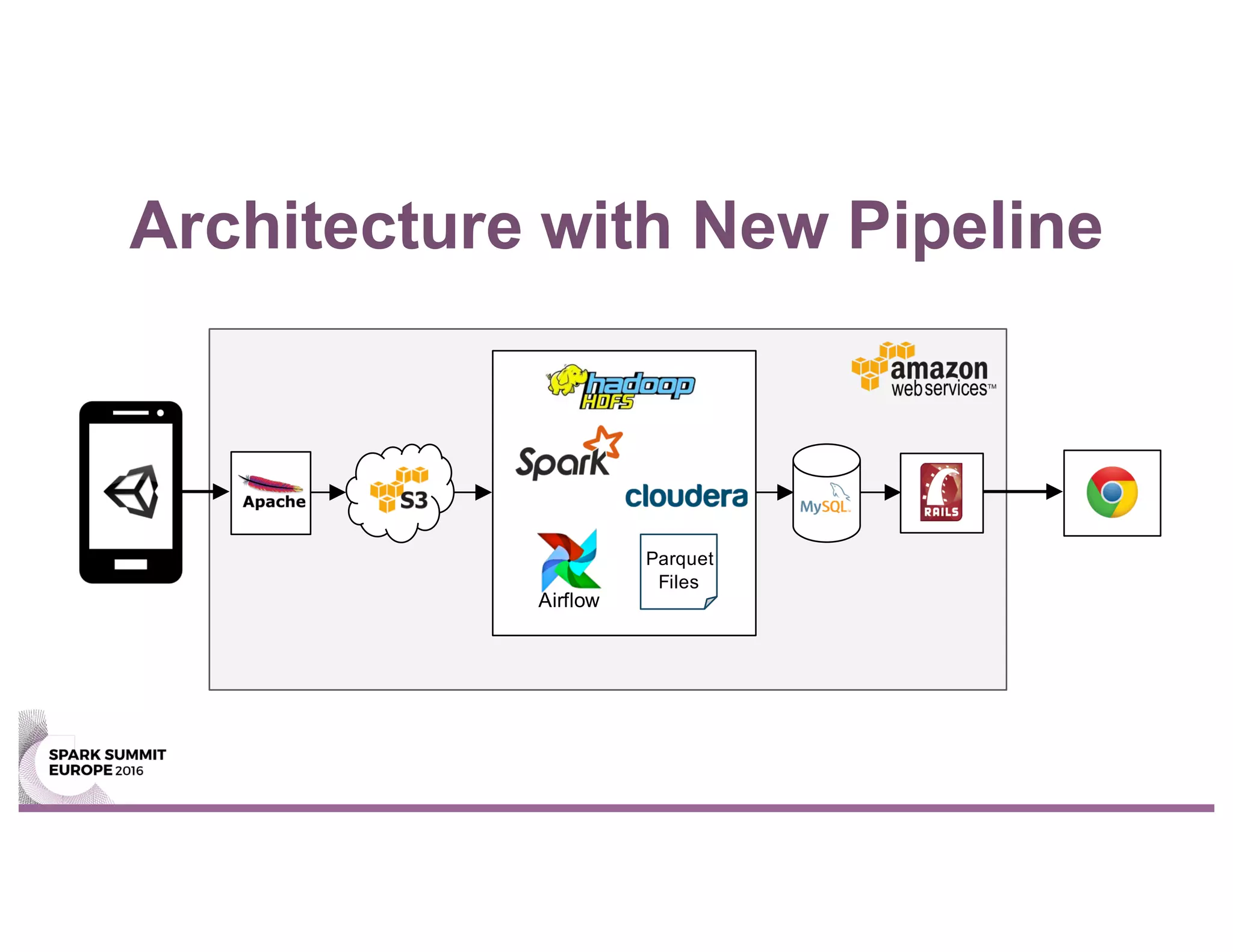

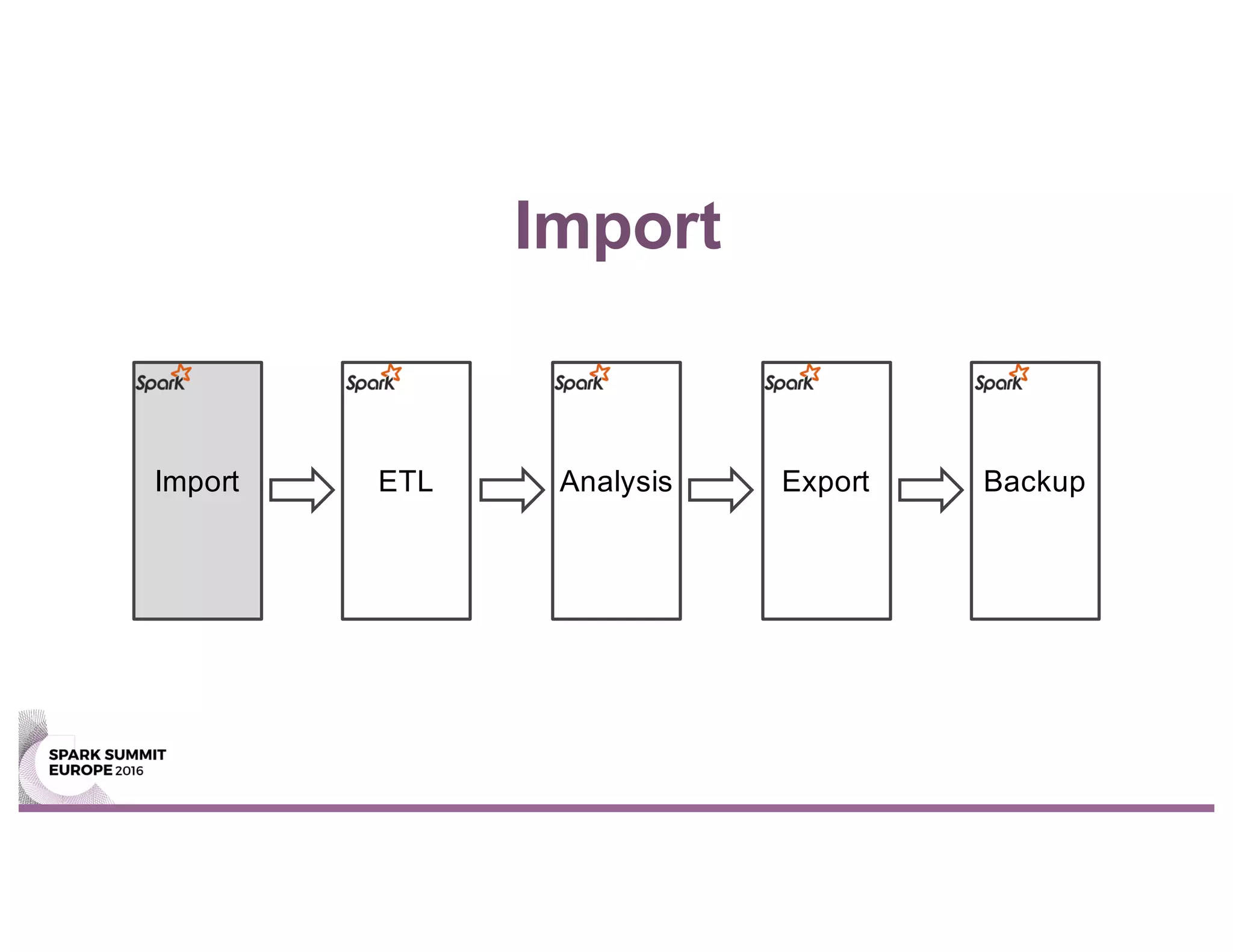

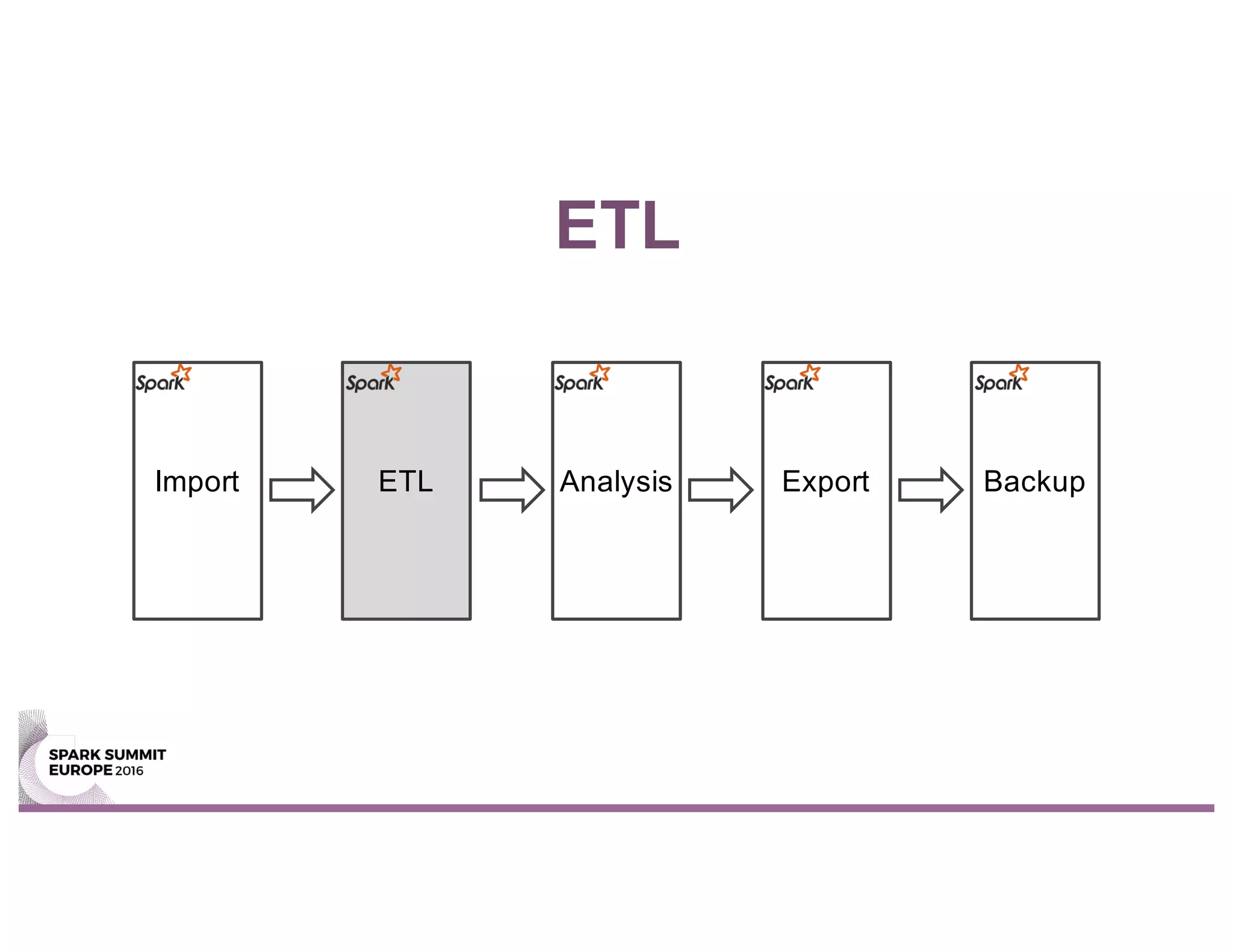

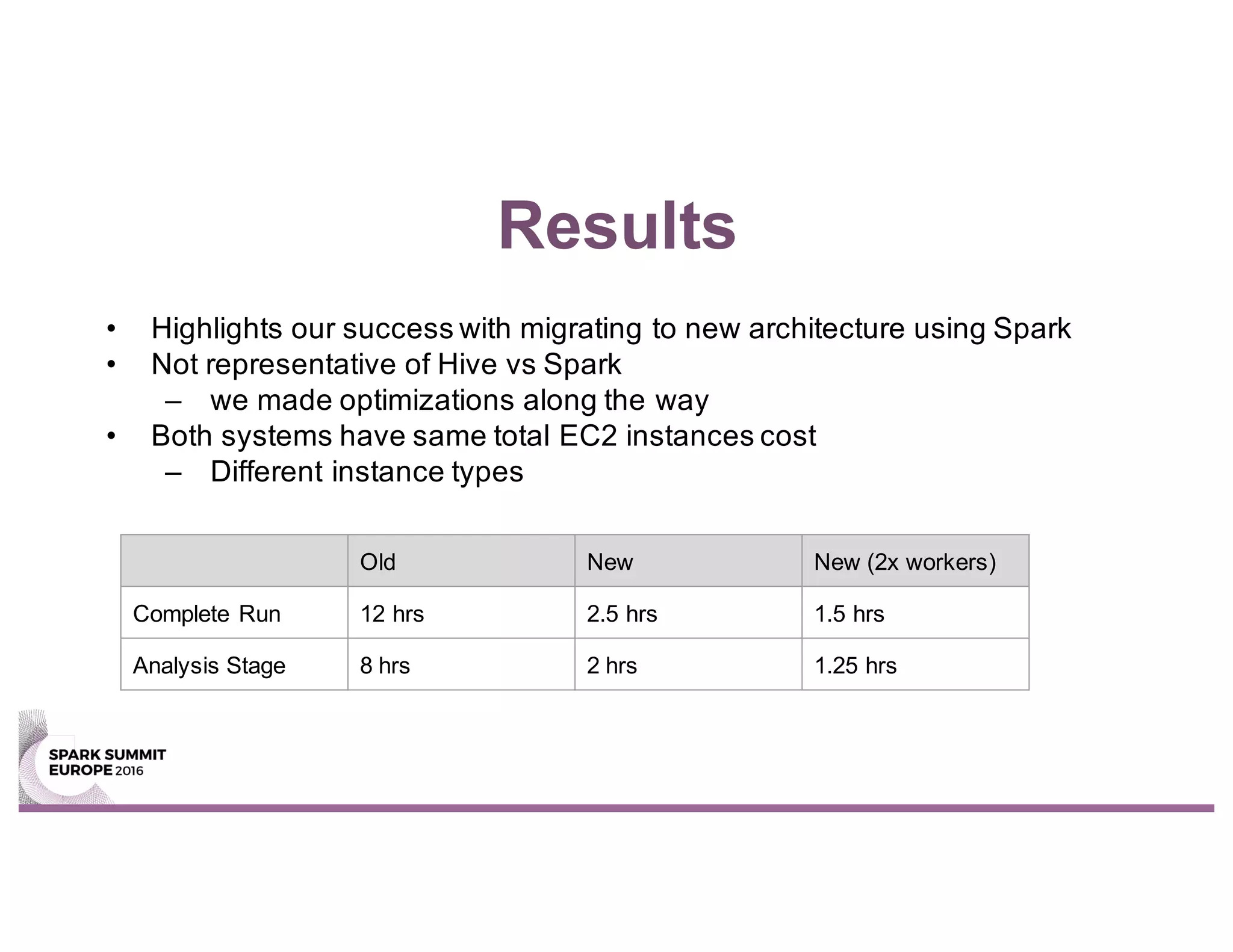

This document summarizes Unity Technologies' migration of its data pipeline from a legacy Hive-based system to Spark. Key points:

- They moved to Spark for its scalability, performance, and ability to run both batch and streaming workloads on a single stack.

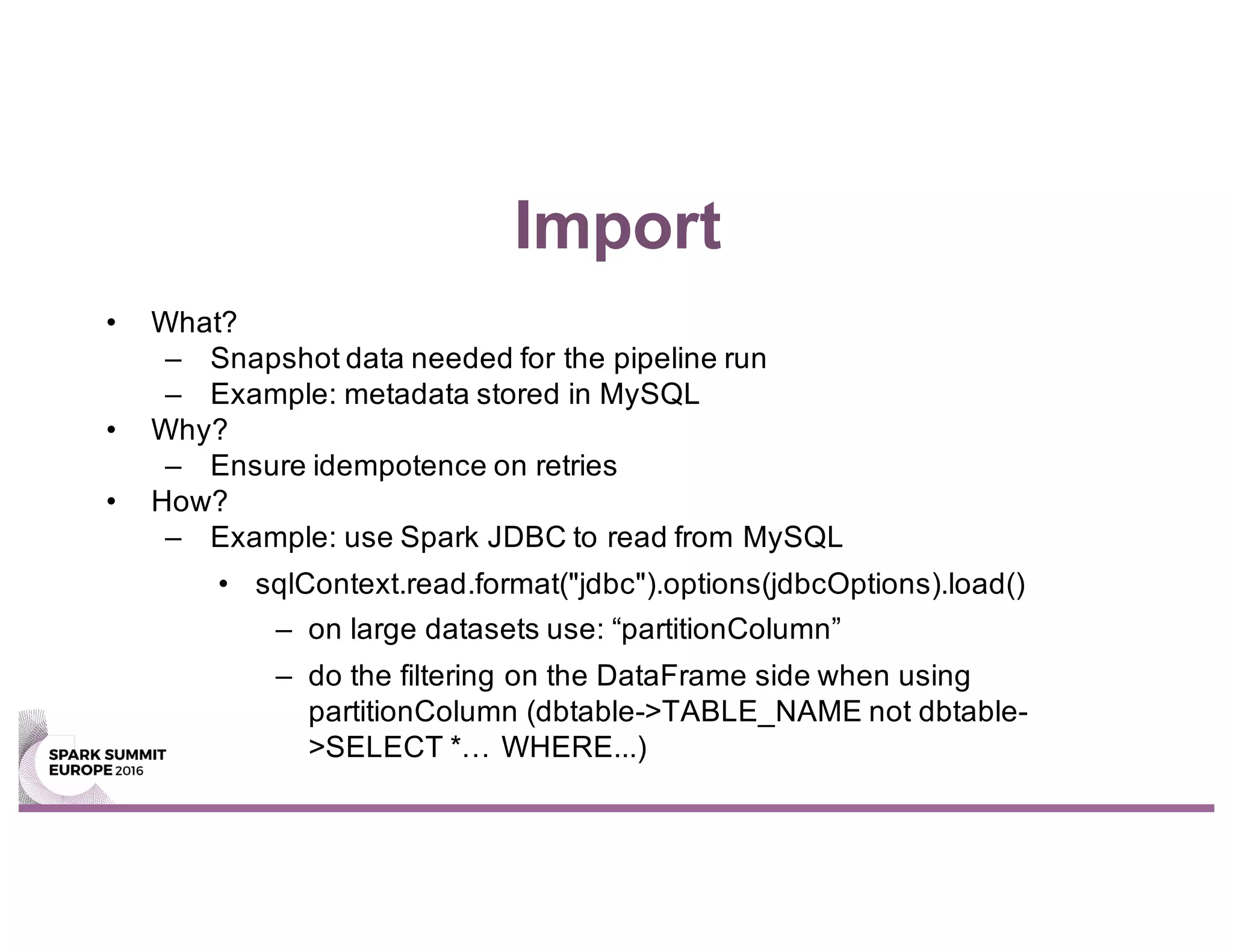

- The new Spark-based pipeline uses Airflow for workflow management and writes processed data as Parquet files to S3 for backup.

- Taking a test-driven development approach with unit and integration tests helped ensure a smooth migration. Staging the pipeline in an environment similar to production also helped address issues early.
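
The test-driven approach mentioned above can be illustrated with a minimal sketch. It assumes the transformation logic is factored into plain functions that can be tested without a cluster; the function `count_events_per_user` and the record fields `user_id` and `type` are hypothetical and do not come from the original pipeline.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def count_events_per_user(events: Iterable[dict]) -> Dict[str, int]:
    """Hypothetical transformation: count events per user.

    Records without a user_id are dropped, standing in for a cleaning step.
    """
    counts: Dict[str, int] = defaultdict(int)
    for event in events:
        user_id = event.get("user_id")
        if user_id:  # skip malformed records
            counts[user_id] += 1
    return dict(counts)


def test_count_events_per_user() -> None:
    # Small hand-written input covering the normal and malformed cases.
    events: List[dict] = [
        {"user_id": "a", "type": "start"},
        {"user_id": "a", "type": "stop"},
        {"user_id": "b", "type": "start"},
        {"type": "start"},  # malformed: no user_id
    ]
    assert count_events_per_user(events) == {"a": 2, "b": 1}


def test_empty_input() -> None:
    assert count_events_per_user([]) == {}
```

A test runner such as pytest would collect the `test_` functions automatically. Keeping the logic independent of the execution engine is one possible design choice: the same expectations can then be checked against both the old and the new implementation during a migration.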

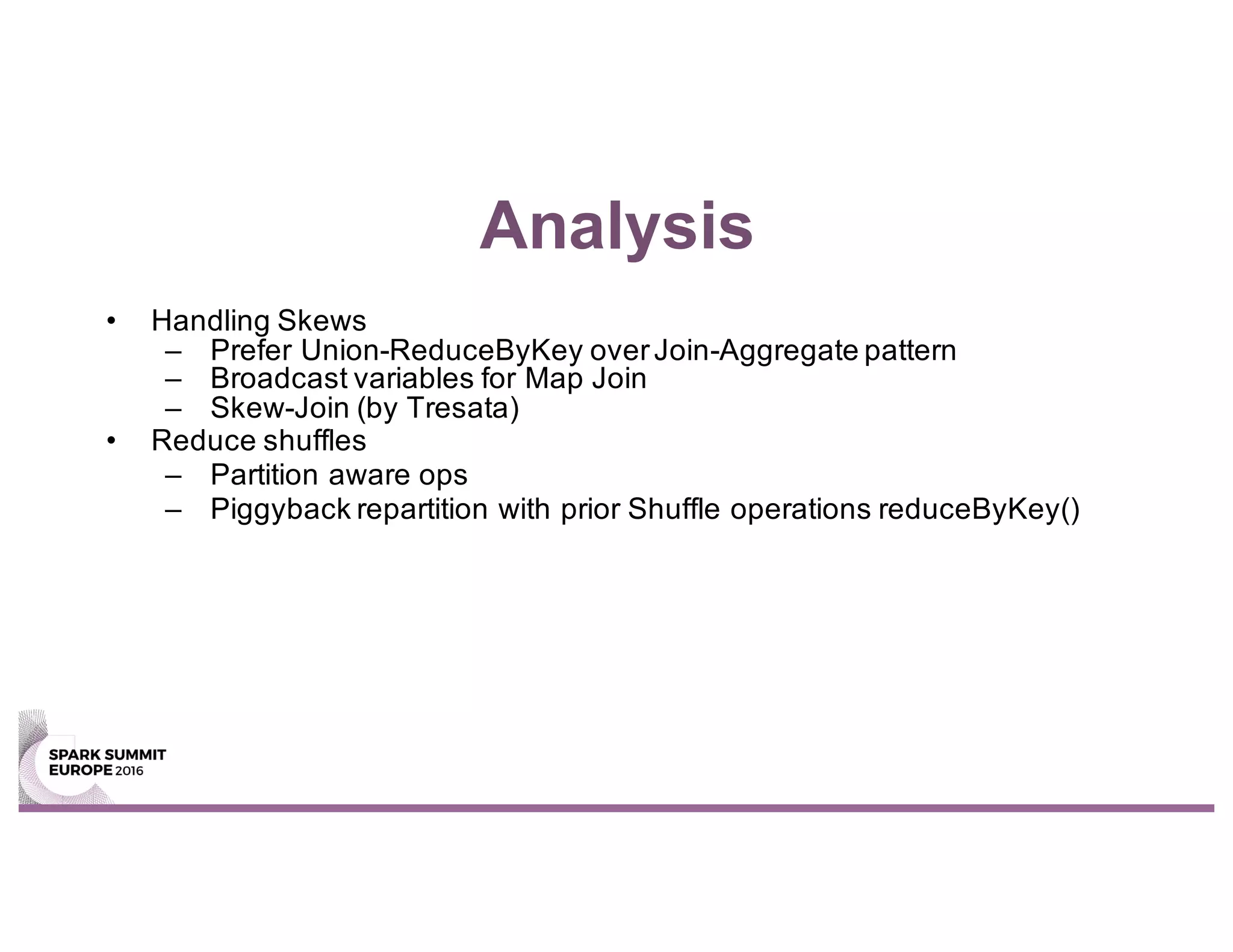

- The new Spark pipeline completed analysis stages up to 2x faster than the previous Hive-based system and