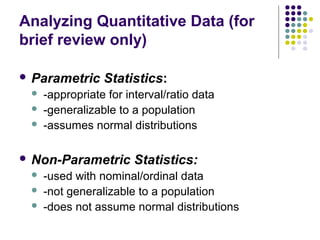

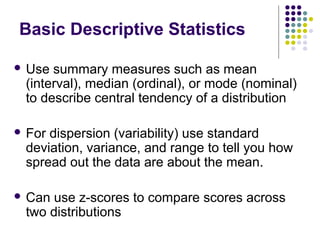

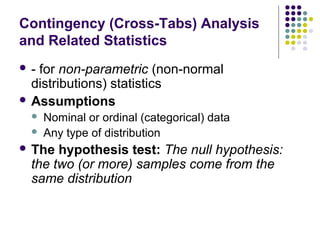

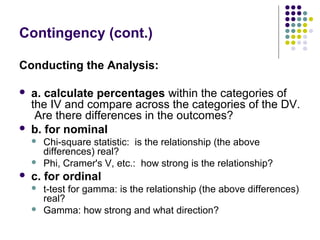

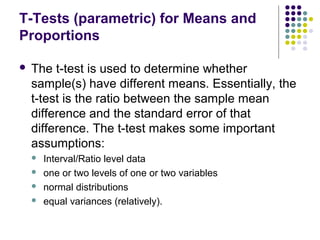

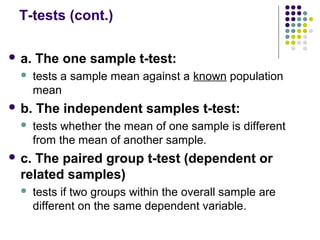

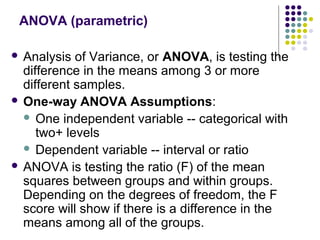

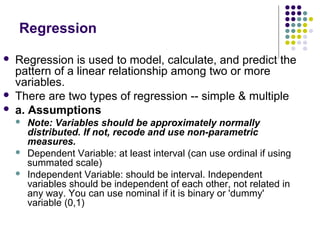

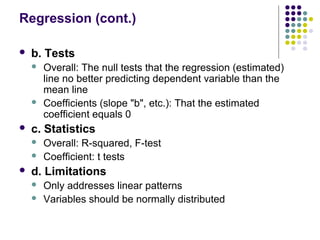

This document provides an overview of various quantitative data analysis techniques including parametric and non-parametric statistics, descriptive statistics, contingency analysis, t-tests, ANOVA, correlation, and regression. It discusses assumptions and processes for each technique and how to interpret results. Computer software like SPSS and SAS can be used to analyze large, complex datasets.