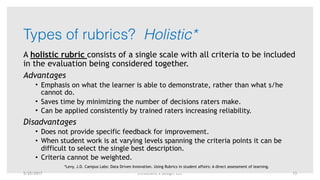

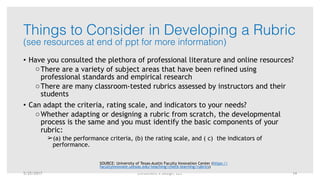

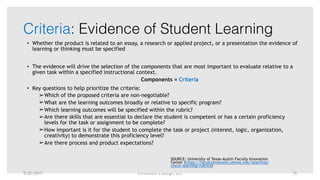

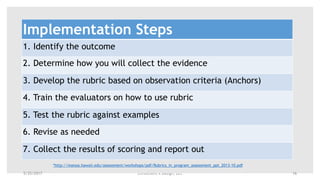

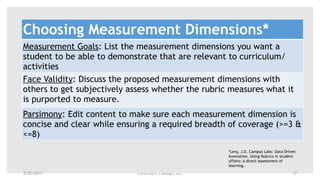

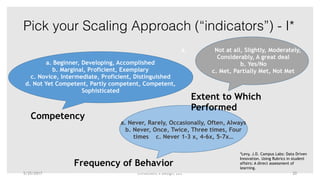

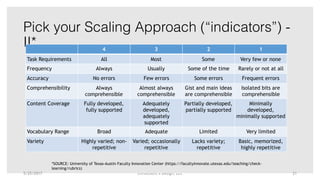

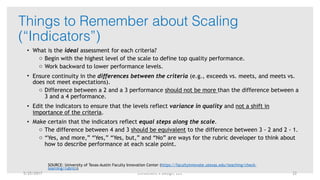

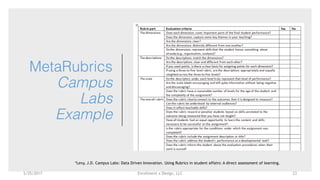

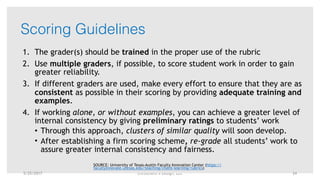

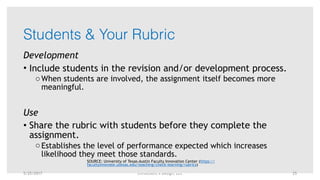

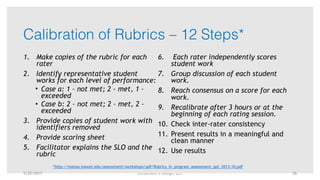

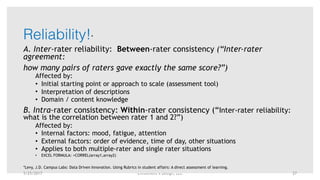

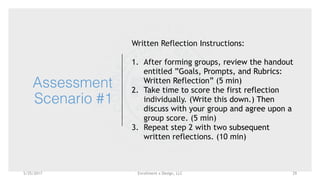

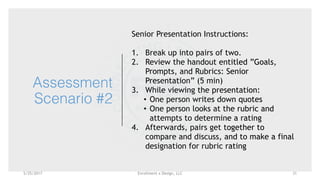

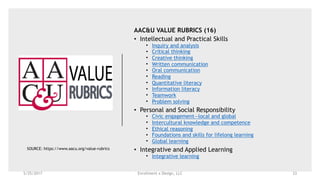

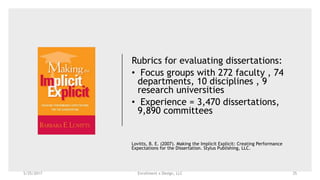

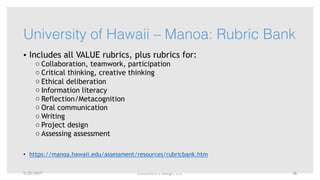

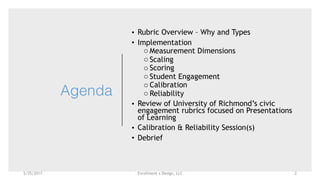

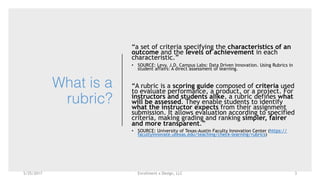

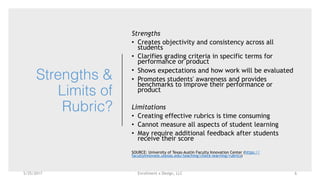

This presentation introduces rubrics for assessment. It defines rubrics and distinguishes the two main types, analytic and holistic. It then covers best practices for developing a rubric: choosing criteria, writing descriptors for each performance level, and implementing the rubric. Examples of both analytic and holistic rubrics are included. The goal is to introduce rubrics and give practical guidance on creating and using them for assessment.

![Holistic Example 2: Critical Thinking](https://image.slidesharecdn.com/interraterreliabilitymadeeasy-170605202139/85/Interrater-Reliability-Made-Easy-12-320.jpg)

Holistic Example 2: Critical Thinking (Enrollment x Design, LLC, 5/25/2017). Source: http://teaching.temple.edu/sites/tlc/files/resource/pdf/Holistic%20Critical%20Thinking%20Scoring%20Rubric.v2%20[Accessible].pdf