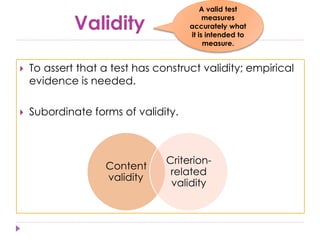

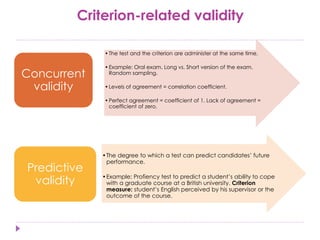

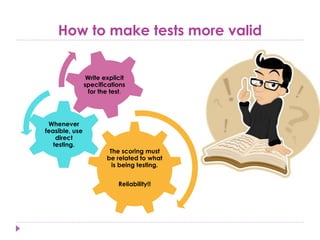

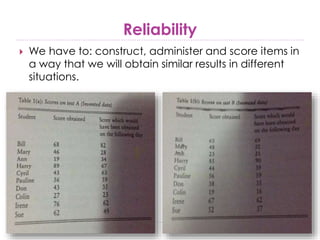

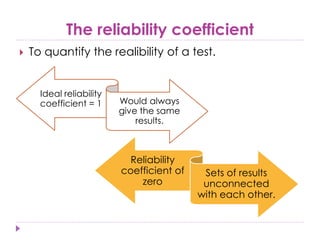

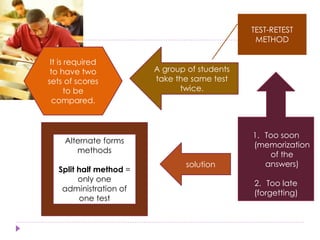

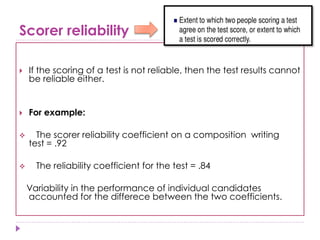

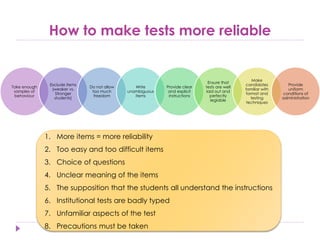

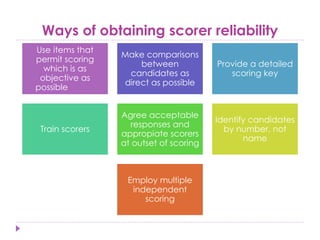

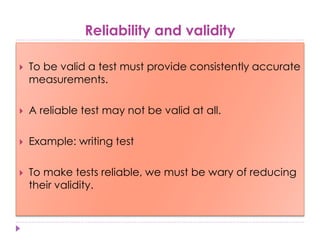

Validity is the extent to which a test measures what it is intended to measure. Content validity is the degree to which a test adequately samples the relevant skills or content. Criterion-related validity is established by comparing test scores with an external criterion, such as later course performance or scores on an established measure.

Reliability is the consistency of a test's results. Several factors support reliability:

- using multiple test items,
- giving clear instructions,
- administering the test under uniform conditions, and
- ensuring scorer reliability through objective scoring and scorer training.

Reliability does not guarantee validity. A test may produce consistent scores yet still fail to measure the target construct. Both validity and reliability are therefore essential to effective test design and score interpretation.