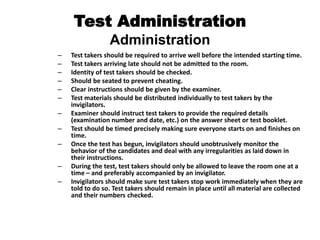

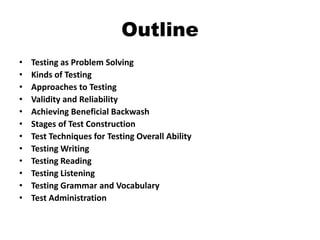

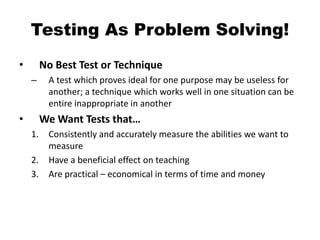

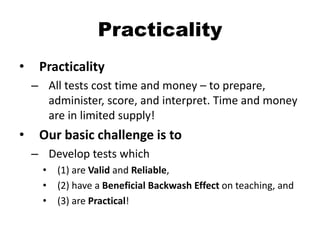

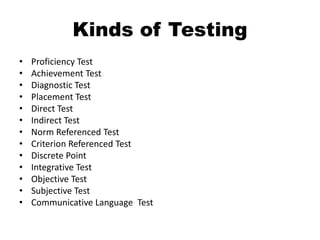

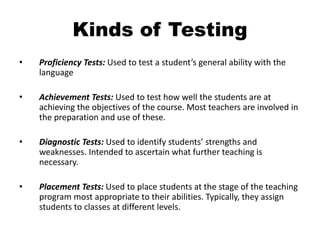

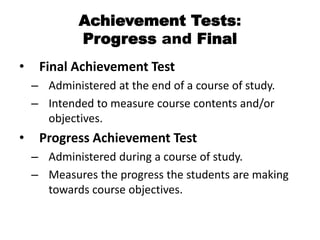

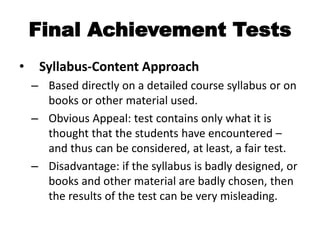

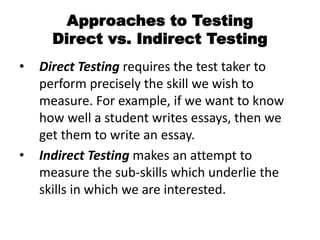

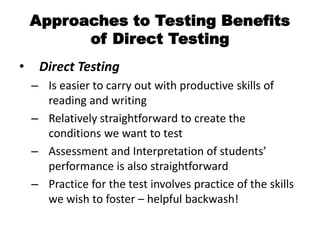

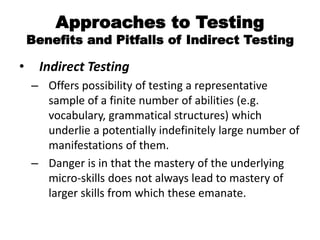

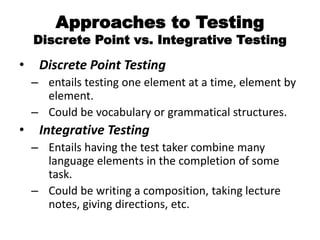

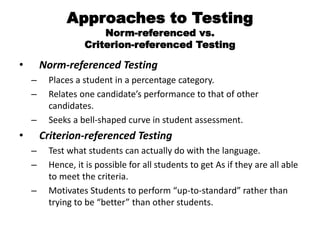

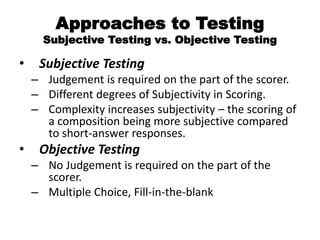

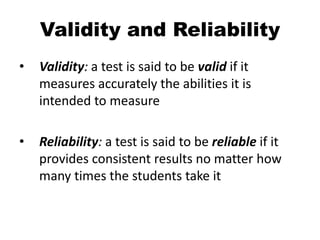

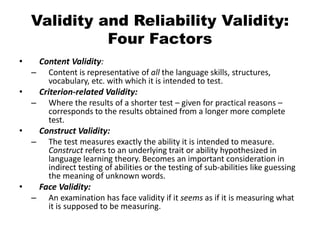

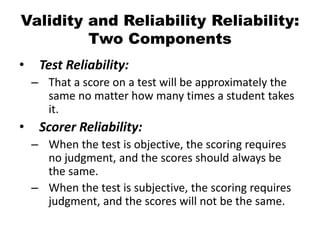

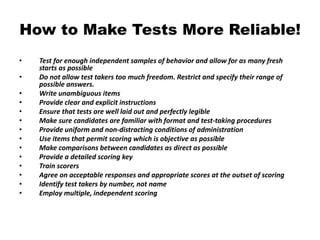

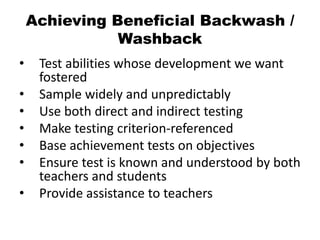

This document outlines core topics in language testing: types of tests, approaches to testing, validity and reliability, and achieving beneficial backwash. It covers proficiency, achievement, and diagnostic tests, and contrasts direct with indirect testing, norm-referenced with criterion-referenced testing, and objective with subjective testing. A test is valid if it accurately measures the abilities it is intended to measure, and reliable if it gives consistent results. Achieving beneficial backwash means testing the abilities you want to foster and ensuring that students and teachers understand the test.

![Stages of Test Construction: Statement of the Problem](https://image.slidesharecdn.com/task2-180926122802/85/Types-of-Tests-33-320.jpg)

- Statement of the Problem
  - Be clear about what one wants to know and why!
    - What kind of test is most appropriate?
    - What is the precise purpose?
    - What abilities are to be tested?
    - How detailed must the results be?
    - How accurate must the results be?
    - How important is backwash?
    - What are the constraints (unavailability of expertise, facilities, time [for construction, administration, and scoring])?

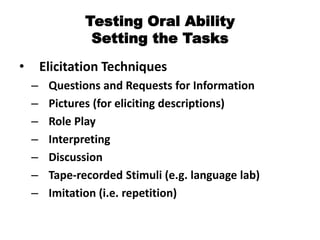

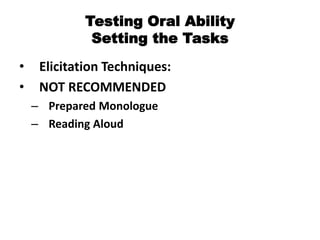

![Testing Oral Ability: Setting the Tasks](https://image.slidesharecdn.com/task2-180926122802/85/Types-of-Tests-61-320.jpg)

- Specify Appropriate Tasks
  - Content
    - Operations (Expressing, Narrating, Eliciting, etc.)
    - Types of Text (Dialogue, Multi-participant Interactions [face-to-face and also telephone])
    - Addressees
    - Topics
  - Format
    - Interview
    - Interaction with Peers
    - Response to tape-recordings

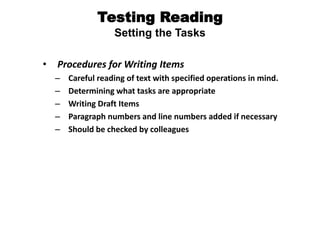

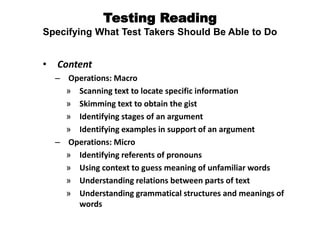

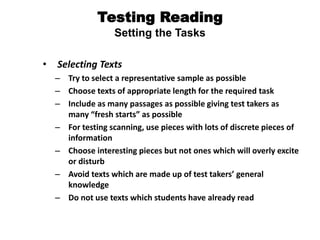

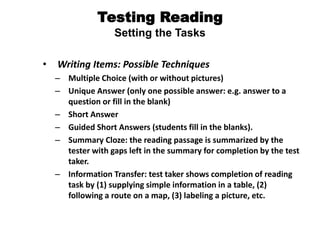

![Testing Reading: Setting the Tasks](https://image.slidesharecdn.com/task2-180926122802/85/Types-of-Tests-70-320.jpg)

- Writing Items: Possible Techniques
  - Identifying Order of Events, Topics, or Arguments
  - Identifying Referents (e.g. “What does the word ‘it’ [line 25] refer to?” _____________)
  - Guessing the meaning of unfamiliar words from context