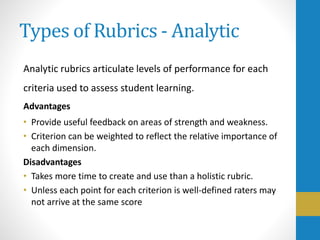

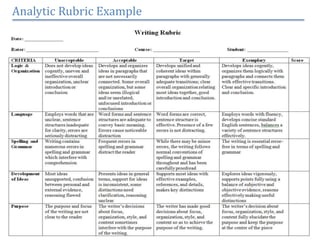

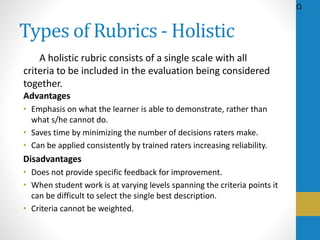

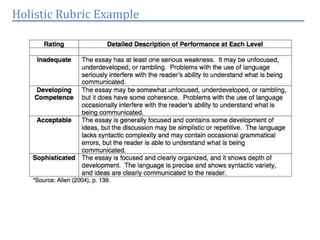

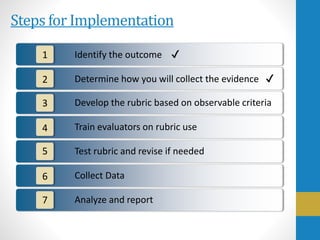

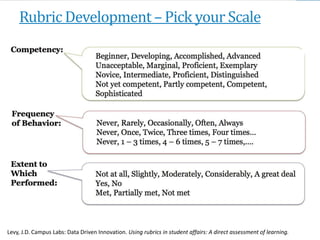

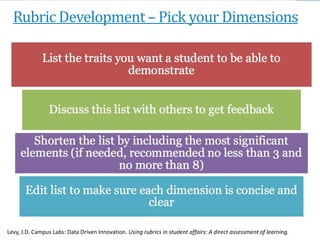

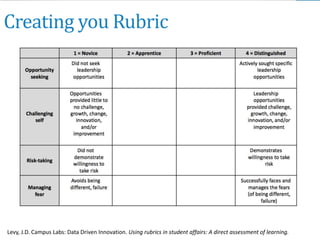

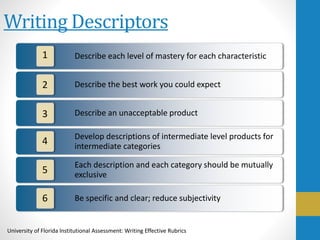

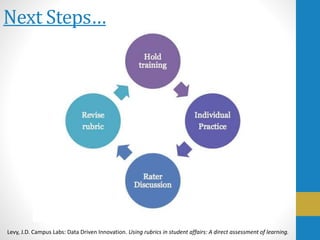

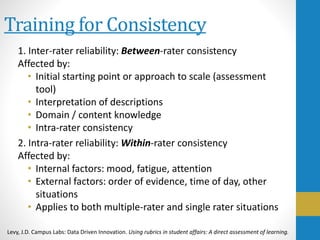

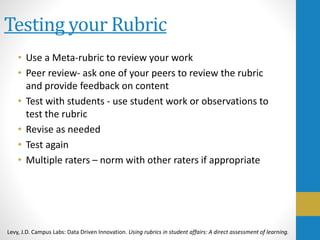

This document covers what rubrics are, why they are used, the main types, and the steps for developing and implementing them. A rubric is a set of criteria that specifies the characteristics of an outcome and the levels of achievement for it. Rubrics make evaluation more consistent, yield rich assessment data, and allow learning to be measured directly. There are two main types: analytic rubrics, which evaluate each criterion separately, and holistic rubrics, which assign a single overall score. Developing an effective rubric involves identifying learning outcomes, determining assessment methods, choosing dimensions and performance levels, writing clear descriptors, testing the rubric, and training raters.
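The difference between the two rubric types can be illustrated with a small Python sketch. Everything named here is hypothetical and chosen only for illustration: the four level labels, the criterion names ("Thesis", "Evidence", "Organization"), and the `AnalyticRubric` and `holistic_score` helpers do not come from the source. It shows one way the idea might be modeled, not a prescribed scoring method.

```python
from dataclasses import dataclass

# Hypothetical performance levels; the source does not name specific levels.
LEVELS = {1: "Beginning", 2: "Developing", 3: "Proficient", 4: "Exemplary"}


def check_level(level: int) -> int:
    """Return the level unchanged if it is one of the defined levels."""
    if level not in LEVELS:
        raise ValueError(f"Unknown performance level: {level}")
    return level


@dataclass
class AnalyticRubric:
    """Analytic rubric: every criterion (dimension) receives its own rating."""
    criteria: list[str]

    def score(self, ratings: dict[str, int]) -> dict[str, int]:
        # Require a rating for each criterion so no dimension is skipped.
        missing = [c for c in self.criteria if c not in ratings]
        if missing:
            raise ValueError(f"Missing ratings for: {missing}")
        return {c: check_level(ratings[c]) for c in self.criteria}


def holistic_score(level: int) -> int:
    """Holistic rubric: one overall judgment of the whole piece of work."""
    return check_level(level)


# Illustrative criteria for a written assignment (assumed, not from the source).
rubric = AnalyticRubric(criteria=["Thesis", "Evidence", "Organization"])
analytic = rubric.score({"Thesis": 3, "Evidence": 2, "Organization": 4})
holistic = holistic_score(3)
```

The analytic result keeps one rating per criterion, which is what makes per-dimension assessment data available, while the holistic result is a single level for the work as a whole.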