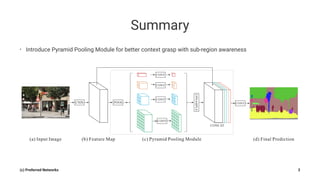

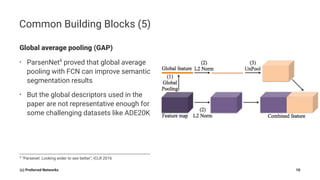

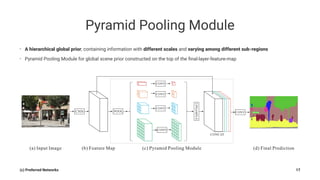

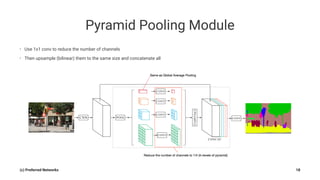

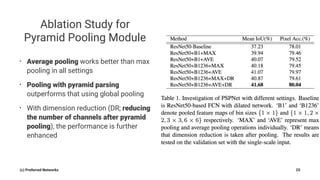

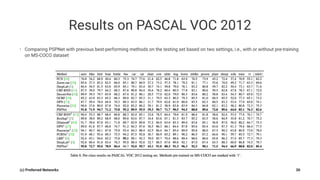

The Pyramid Scene Parsing Network (PSPNet) introduces the Pyramid Pooling Module to improve semantic segmentation. The module captures context across sub-regions and scales by average-pooling the final convolutional feature map at several pyramid levels. Experiments on the ADE20K and PASCAL VOC datasets show that the Pyramid Pooling Module improves mean Intersection-over-Union (mIoU) by over 4% compared to global average pooling, achieving state-of-the-art performance.
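
The pooling step described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: it handles a single-channel feature map stored as a list of rows, uses nearest-neighbor upsampling for simplicity, and omits any learned layers. The function names and the default bin sizes `(1, 2, 3, 6)` are assumptions chosen for illustration.

```python
from math import ceil, floor


def adaptive_avg_pool(fmap, bins):
    """Average-pool a 2D feature map (list of rows) into a bins x bins grid."""
    h, w = len(fmap), len(fmap[0])
    pooled = []
    for i in range(bins):
        # Row range covered by the i-th bin.
        r0, r1 = floor(i * h / bins), ceil((i + 1) * h / bins)
        row = []
        for j in range(bins):
            # Column range covered by the j-th bin.
            c0, c1 = floor(j * w / bins), ceil((j + 1) * w / bins)
            cells = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def upsample_nearest(grid, h, w):
    """Resize a square grid back to h x w by nearest-neighbor lookup."""
    bins = len(grid)
    return [[grid[r * bins // h][c * bins // w] for c in range(w)]
            for r in range(h)]


def pyramid_pool(fmap, levels=(1, 2, 3, 6)):
    """Return the input map plus one context map per pyramid level.

    The bin sizes in `levels` are an assumed default for this sketch.
    """
    h, w = len(fmap), len(fmap[0])
    channels = [fmap]
    for bins in levels:
        pooled = adaptive_avg_pool(fmap, bins)
        channels.append(upsample_nearest(pooled, h, w))
    return channels


# Toy 6x6 feature map with values 0..35.
feature_map = [[r * 6 + c for c in range(6)] for r in range(6)]
stacked = pyramid_pool(feature_map)
```

Here `stacked[1]` holds the global average at every position (the single-bin level), while the finer levels keep progressively more local context. In a real network these maps would be concatenated with the original features along the channel axis before the final prediction layer.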